yolov8 C++目标检测推理代码上加上关键点输出

发布时间:2023年12月28日

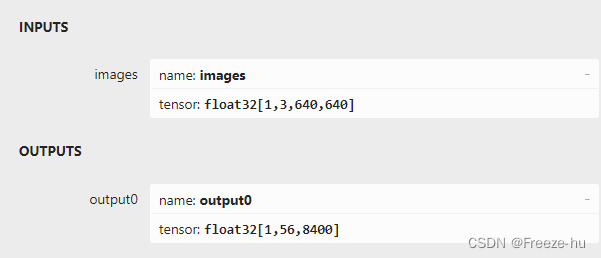

1.在yolov8 的目标检测代码上加上关键点解析输出,下载yolov8n-pose.pt转换为onnx格式的 yolov8n-pose.onnx ,输出 1,56,8400

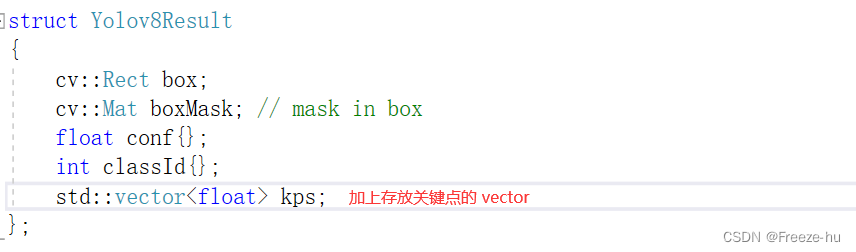

2.在输出结果结构体中加上关键点的集合 kps

3.修改classNums的值获取

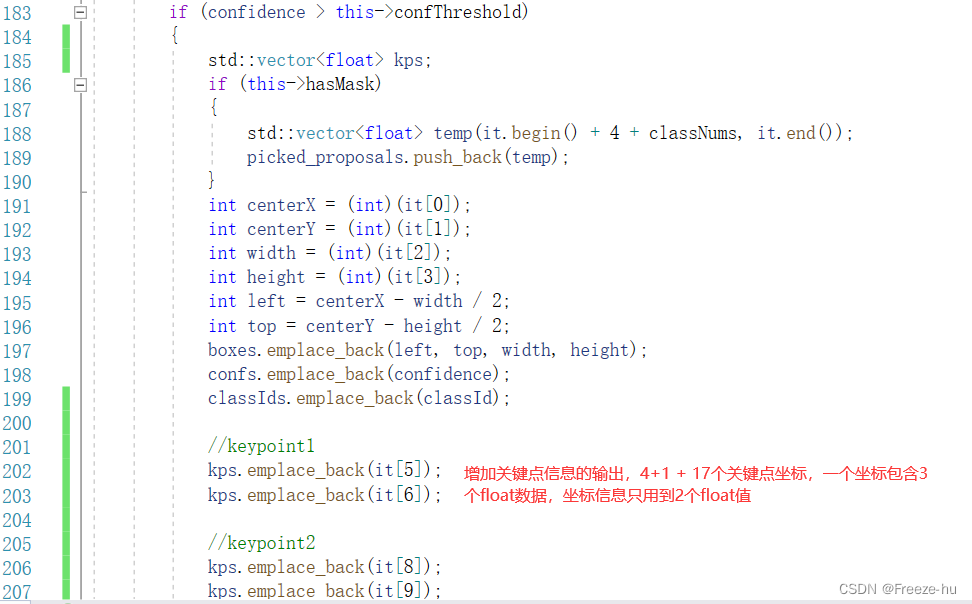

4.在推理结果解析的时候,增加关键点信息的输出,这里只取出17个关键点的中的前5个关键点

5.在640*640推理的后的坐标信息转换为原图坐标信息

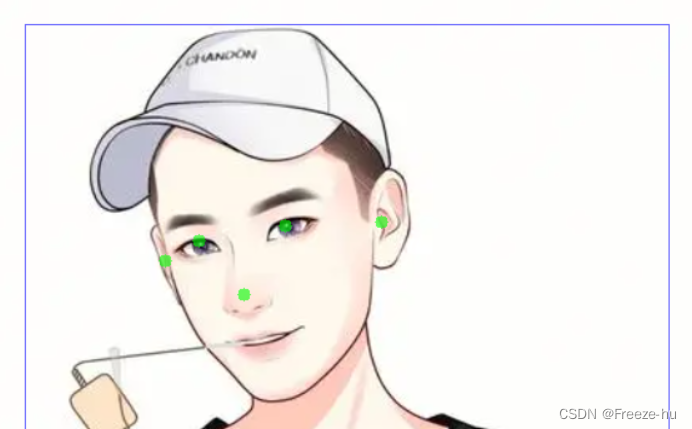

6.在原图中标出最前面的5个关键点

7.运行的效果如下

8.main.cpp

#include <regex>

#include <iostream>

#include <opencv2/opencv.hpp>

#include <filesystem>

#include <ctime>

#include "cmdline.h"

#include "utils.h"

#include "yolov8Predictor.h"

int main(int argc, char *argv[])

{

float confThreshold = 0.1f;

float iouThreshold = 0.2f;

float maskThreshold = 0.2f;

/*

bool isGPU = cmd.exist("gpu");

const std::string classNamesPath = cmd.get<std::string>("class_names");

const std::vector<std::string> classNames = utils::loadNames(classNamesPath);

const std::string imagePath = cmd.get<std::string>("image_path");

const std::string savePath = cmd.get<std::string>("out_path");

const std::string suffixName = cmd.get<std::string>("suffix_name");

const std::string modelPath = cmd.get<std::string>("model_path");

*/

bool isGPU = false;

std::string classNamesPath;

std::vector<std::string>classNames;

std::string imagePath;

std::string savePath;

std::string suffixName;

std::string modelPath;

/*

if (classNames.empty())

{

std::cerr << "Error: Empty class names file." << std::endl;

return -1;

}

if (!std::filesystem::exists(modelPath))

{

std::cerr << "Error: There is no model." << std::endl;

return -1;

}

if (!std::filesystem::is_directory(imagePath))

{

std::cerr << "Error: There is no model." << std::endl;

return -1;

}

if (!std::filesystem::is_directory(savePath))

{

std::filesystem::create_directory(savePath);

}

*/

classNames.push_back("r");

modelPath = "./yolov8n-pose.onnx";

YOLOPredictor predictor{nullptr};

try

{

predictor = YOLOPredictor(modelPath, isGPU,

confThreshold,

iouThreshold,

maskThreshold);

std::cout << "Model was initialized." << std::endl;

}

catch (const std::exception &e)

{

std::cerr << e.what() << std::endl;

return -1;

}

assert(classNames.size() == predictor.classNums);

std::regex pattern(".+\\.(jpg|jpeg|png|gif)$");

std::cout << "Start predicting..." << std::endl;

cv::VideoCapture capture;

capture.open(1);

if (!capture.isOpened()) {

printf("could not read this video file...\n");

return -1;

}

cv::Size S = cv::Size((int)capture.get(cv::CAP_PROP_FRAME_WIDTH),

(int)capture.get(cv::CAP_PROP_FRAME_HEIGHT));

capture.set(cv::CAP_PROP_FRAME_WIDTH, 1280);

capture.set(cv::CAP_PROP_FRAME_HEIGHT, 1024);

cv::Mat frame;

while (capture.read(frame)) {

clock_t startTime, endTime;

startTime = clock();

frame = cv::imread("./person.png");

std::vector<Yolov8Result> result = predictor.predict(frame);

utils::visualizeDetection(frame, result, classNames);

endTime = clock();

std::cout << "The total run time is: " << (double)(endTime - startTime) / CLOCKS_PER_SEC << "seconds" << std::endl;

cv::imshow("Inference", frame);

char c = cv::waitKey(1);

if (c == 27) {

break;

}

}

return 0;

}

9.utils.cpp

#include "utils.h"

size_t utils::vectorProduct(const std::vector<int64_t> &vector)

{

if (vector.empty())

return 0;

size_t product = 1;

for (const auto &element : vector)

product *= element;

return product;

}

std::wstring utils::charToWstring(const char *str)

{

typedef std::codecvt_utf8<wchar_t> convert_type;

std::wstring_convert<convert_type, wchar_t> converter;

return converter.from_bytes(str);

}

std::vector<std::string> utils::loadNames(const std::string &path)

{

// load class names

std::vector<std::string> classNames;

std::ifstream infile(path);

if (infile.good())

{

std::string line;

while (getline(infile, line))

{

if (line.back() == '\r')

line.pop_back();

classNames.emplace_back(line);

}

infile.close();

}

else

{

std::cerr << "ERROR: Failed to access class name path: " << path << std::endl;

}

// set color

srand(time(0));

for (int i = 0; i < 2 * classNames.size(); i++)

{

int b = rand() % 256;

int g = rand() % 256;

int r = rand() % 256;

colors.push_back(cv::Scalar(b, g, r));

}

return classNames;

}

void utils::visualizeDetection(cv::Mat &im, std::vector<Yolov8Result> &results,

const std::vector<std::string> &classNames)

{

cv::Mat image = im.clone();

for (const Yolov8Result &result : results)

{

int x = result.box.x;

int y = result.box.y;

int x2 = x + result.box.width;

int y2 = y + result.box.height;

int conf = (int)std::round(result.conf * 100);

int classId = result.classId;

std::string label = classNames[classId] + " 0." + std::to_string(conf);

int baseline = 0;

cv::Size size = cv::getTextSize(label, cv::FONT_ITALIC, 0.4, 1, &baseline);

//image(result.box).setTo(colors[classId + classNames.size()], result.boxMask);

//cv::rectangle(image, result.box, colors[classId], 2);

cv::rectangle(image,

cv::Point(x, y), cv::Point(x2 , y2),

cv::Scalar(255, 0, 0), 1);

cv::circle(image, { (int)std::round(result.kps[0]), (int)std::round(result.kps[1]) }, 5, cv::Scalar(0, 255, 0), -1);

cv::circle(image, { (int)std::round(result.kps[2]), (int)std::round(result.kps[3]) }, 5, cv::Scalar(0, 255, 0), -1);

cv::circle(image, { (int)std::round(result.kps[4]), (int)std::round(result.kps[5]) }, 5, cv::Scalar(0, 255, 0), -1);

cv::circle(image, { (int)std::round(result.kps[6]), (int)std::round(result.kps[7]) }, 5, cv::Scalar(0, 255, 0), -1);

cv::circle(image, { (int)std::round(result.kps[8]), (int)std::round(result.kps[9]) }, 5, cv::Scalar(0, 255, 0), -1);

//cv::putText(image, label,

// cv::Point(x, y - 3 + 12), cv::FONT_ITALIC,

// 0.4, cv::Scalar(0, 0, 0), 1);

}

cv::addWeighted(im, 0.4, image, 0.6, 0, im);

}

void utils::letterbox(const cv::Mat &image, cv::Mat &outImage,

const cv::Size &newShape = cv::Size(640, 640),

const cv::Scalar &color = cv::Scalar(114, 114, 114),

bool auto_ = true,

bool scaleFill = false,

bool scaleUp = true,

int stride = 32)

{

cv::Size shape = image.size();

float r = std::min((float)newShape.height / (float)shape.height,

(float)newShape.width / (float)shape.width);

if (!scaleUp)

r = std::min(r, 1.0f);

float ratio[2]{r, r};

int newUnpad[2]{(int)std::round((float)shape.width * r),

(int)std::round((float)shape.height * r)};

auto dw = (float)(newShape.width - newUnpad[0]);

auto dh = (float)(newShape.height - newUnpad[1]);

if (auto_)

{

dw = (float)((int)dw % stride);

dh = (float)((int)dh % stride);

}

else if (scaleFill)

{

dw = 0.0f;

dh = 0.0f;

newUnpad[0] = newShape.width;

newUnpad[1] = newShape.height;

ratio[0] = (float)newShape.width / (float)shape.width;

ratio[1] = (float)newShape.height / (float)shape.height;

}

dw /= 2.0f;

dh /= 2.0f;

if (shape.width != newUnpad[0] && shape.height != newUnpad[1])

{

cv::resize(image, outImage, cv::Size(newUnpad[0], newUnpad[1]));

}

int top = int(std::round(dh - 0.1f));

int bottom = int(std::round(dh + 0.1f));

int left = int(std::round(dw - 0.1f));

int right = int(std::round(dw + 0.1f));

cv::copyMakeBorder(outImage, outImage, top, bottom, left, right, cv::BORDER_CONSTANT, color);

}

void utils::scaleCoords(cv::Rect &coords,

cv::Mat &mask,

const float maskThreshold,

const cv::Size &imageShape,

const cv::Size &imageOriginalShape,

std::vector<float>& kps)

{

float gain = std::min((float)imageShape.height / (float)imageOriginalShape.height,

(float)imageShape.width / (float)imageOriginalShape.width);

int pad[2] = {(int)(((float)imageShape.width - (float)imageOriginalShape.width * gain) / 2.0f),

(int)(((float)imageShape.height - (float)imageOriginalShape.height * gain) / 2.0f)};

coords.x = (int)std::round(((float)(coords.x - pad[0]) / gain));

coords.x = std::max(0, coords.x);

coords.y = (int)std::round(((float)(coords.y - pad[1]) / gain));

coords.y = std::max(0, coords.y);

//keypoint1

kps[0] = (int)std::round(((float)(kps[0] - pad[0]) / gain));

kps[0] = std::max(0,(int)kps[0]);

kps[1] = (int)std::round(((float)(kps[1] - pad[1]) / gain));

kps[1] = std::max(0, (int)kps[1]);

//keypoint2

kps[2] = (int)std::round(((float)(kps[2] - pad[0]) / gain));

kps[2] = std::max(0, (int)kps[2]);

kps[3] = (int)std::round(((float)(kps[3] - pad[1]) / gain));

kps[3] = std::max(0, (int)kps[3]);

//keyponit3

kps[4] = (int)std::round(((float)(kps[4] - pad[0]) / gain));

kps[4] = std::max(0, (int)kps[4]);

kps[5] = (int)std::round(((float)(kps[5] - pad[1]) / gain));

kps[5] = std::max(0, (int)kps[5]);

//keyponit4

kps[6] = (int)std::round(((float)(kps[6] - pad[0]) / gain));

kps[6] = std::max(0, (int)kps[6]);

kps[7] = (int)std::round(((float)(kps[7] - pad[1]) / gain));

kps[7] = std::max(0, (int)kps[7]);

//keyponit5

kps[8] = (int)std::round(((float)(kps[8] - pad[0]) / gain));

kps[8] = std::max(0, (int)kps[8]);

kps[9] = (int)std::round(((float)(kps[9] - pad[1]) / gain));

kps[9] = std::max(0, (int)kps[9]);

coords.width = (int)std::round(((float)coords.width / gain));

coords.width = std::min(coords.width, imageOriginalShape.width - coords.x);

coords.height = (int)std::round(((float)coords.height / gain));

coords.height = std::min(coords.height, imageOriginalShape.height - coords.y);

mask = mask(cv::Rect(pad[0], pad[1], imageShape.width - 2 * pad[0], imageShape.height - 2 * pad[1]));

//cv::resize(mask, mask, imageOriginalShape, cv::INTER_LINEAR);

//mask = mask(coords) > maskThreshold;

}

template <typename T>

T utils::clip(const T &n, const T &lower, const T &upper)

{

return std::max(lower, std::min(n, upper));

}

10.yolov8Predictor.cpp

#include "yolov8Predictor.h"

YOLOPredictor::YOLOPredictor(const std::string &modelPath,

const bool &isGPU,

float confThreshold,

float iouThreshold,

float maskThreshold)

{

this->confThreshold = confThreshold;

this->iouThreshold = iouThreshold;

this->maskThreshold = maskThreshold;

env = Ort::Env(OrtLoggingLevel::ORT_LOGGING_LEVEL_WARNING, "YOLOV8");

sessionOptions = Ort::SessionOptions();

std::vector<std::string> availableProviders = Ort::GetAvailableProviders();

auto cudaAvailable = std::find(availableProviders.begin(), availableProviders.end(), "CUDAExecutionProvider");

OrtCUDAProviderOptions cudaOption;

if (isGPU && (cudaAvailable == availableProviders.end()))

{

std::cout << "GPU is not supported by your ONNXRuntime build. Fallback to CPU." << std::endl;

std::cout << "Inference device: CPU" << std::endl;

}

else if (isGPU && (cudaAvailable != availableProviders.end()))

{

std::cout << "Inference device: GPU" << std::endl;

sessionOptions.AppendExecutionProvider_CUDA(cudaOption);

}

else

{

std::cout << "Inference device: CPU" << std::endl;

}

#ifdef _WIN32

std::wstring w_modelPath = utils::charToWstring(modelPath.c_str());

session = Ort::Session(env, w_modelPath.c_str(), sessionOptions);

#else

session = Ort::Session(env, modelPath.c_str(), sessionOptions);

#endif

const size_t num_input_nodes = session.GetInputCount(); //==1

const size_t num_output_nodes = session.GetOutputCount(); //==1,2

if (num_output_nodes > 1)

{

this->hasMask = true;

std::cout << "Instance Segmentation" << std::endl;

}

else

std::cout << "Object Detection" << std::endl;

Ort::AllocatorWithDefaultOptions allocator;

for (int i = 0; i < num_input_nodes; i++)

{

auto input_name = session.GetInputNameAllocated(i, allocator);

this->inputNames.push_back(input_name.get());

input_names_ptr.push_back(std::move(input_name));

Ort::TypeInfo inputTypeInfo = session.GetInputTypeInfo(i);

std::vector<int64_t> inputTensorShape = inputTypeInfo.GetTensorTypeAndShapeInfo().GetShape();

this->inputShapes.push_back(inputTensorShape);

this->isDynamicInputShape = false;

// checking if width and height are dynamic

if (inputTensorShape[2] == -1 && inputTensorShape[3] == -1)

{

std::cout << "Dynamic input shape" << std::endl;

this->isDynamicInputShape = true;

}

}

for (int i = 0; i < num_output_nodes; i++)

{

auto output_name = session.GetOutputNameAllocated(i, allocator);

this->outputNames.push_back(output_name.get());

output_names_ptr.push_back(std::move(output_name));

Ort::TypeInfo outputTypeInfo = session.GetOutputTypeInfo(i);

std::vector<int64_t> outputTensorShape = outputTypeInfo.GetTensorTypeAndShapeInfo().GetShape();

this->outputShapes.push_back(outputTensorShape);

if (i == 0)

{

if (!this->hasMask)

classNums = outputTensorShape[1] - 4 - 17*3;

else

classNums = outputTensorShape[1] - 4 - 32;

}

}

// for (const char *x : this->inputNames)

// {

// std::cout << x << std::endl;

// }

// for (const char *x : this->outputNames)

// {

// std::cout << x << std::endl;

// }

// std::cout << classNums << std::endl;

}

void YOLOPredictor::getBestClassInfo(std::vector<float>::iterator it,

float &bestConf,

int &bestClassId,

const int _classNums)

{

// first 4 element are box

bestClassId = 4;

bestConf = 0;

for (int i = 4; i < _classNums + 4; i++)

{

if (it[i] > bestConf)

{

bestConf = it[i];

bestClassId = i - 4;

}

}

}

cv::Mat YOLOPredictor::getMask(const cv::Mat &maskProposals,

const cv::Mat &maskProtos)

{

cv::Mat protos = maskProtos.reshape(0, {(int)this->outputShapes[1][1], (int)this->outputShapes[1][2] * (int)this->outputShapes[1][3]});

cv::Mat matmul_res = (maskProposals * protos).t();

cv::Mat masks = matmul_res.reshape(1, {(int)this->outputShapes[1][2], (int)this->outputShapes[1][3]});

cv::Mat dest;

// sigmoid

cv::exp(-masks, dest);

dest = 1.0 / (1.0 + dest);

cv::resize(dest, dest, cv::Size((int)this->inputShapes[0][2], (int)this->inputShapes[0][3]), cv::INTER_LINEAR);

return dest;

}

void YOLOPredictor::preprocessing(cv::Mat &image, float *&blob, std::vector<int64_t> &inputTensorShape)

{

cv::Mat resizedImage, floatImage;

cv::cvtColor(image, resizedImage, cv::COLOR_BGR2RGB);

utils::letterbox(resizedImage, resizedImage, cv::Size((int)this->inputShapes[0][2], (int)this->inputShapes[0][3]),

cv::Scalar(114, 114, 114), this->isDynamicInputShape,

false, true, 32);

inputTensorShape[2] = resizedImage.rows;

inputTensorShape[3] = resizedImage.cols;

resizedImage.convertTo(floatImage, CV_32FC3, 1 / 255.0);

blob = new float[floatImage.cols * floatImage.rows * floatImage.channels()];

cv::Size floatImageSize{floatImage.cols, floatImage.rows};

// hwc -> chw

std::vector<cv::Mat> chw(floatImage.channels());

for (int i = 0; i < floatImage.channels(); ++i)

{

chw[i] = cv::Mat(floatImageSize, CV_32FC1, blob + i * floatImageSize.width * floatImageSize.height);

}

cv::split(floatImage, chw);

}

std::vector<Yolov8Result> YOLOPredictor::postprocessing(const cv::Size &resizedImageShape,

const cv::Size &originalImageShape,

std::vector<Ort::Value> &outputTensors)

{

// for box

std::vector<cv::Rect> boxes;

std::vector<float> confs;

std::vector<int> classIds;

std::vector<std::vector<float>> kpss;

float *boxOutput = outputTensors[0].GetTensorMutableData<float>();

//[1,4+n,8400]=>[1,8400,4+n] or [1,4+n+32,8400]=>[1,8400,4+n+32]

cv::Mat output0 = cv::Mat(cv::Size((int)this->outputShapes[0][2], (int)this->outputShapes[0][1]), CV_32F, boxOutput).t();

float *output0ptr = (float *)output0.data;

int rows = (int)this->outputShapes[0][2];

int cols = (int)this->outputShapes[0][1];

// std::cout << rows << cols << std::endl;

// if hasMask

std::vector<std::vector<float>> picked_proposals;

cv::Mat mask_protos;

for (int i = 0; i < rows; i++)

{

std::vector<float> it(output0ptr + i * cols, output0ptr + (i + 1) * cols);

float confidence;

int classId;

this->getBestClassInfo(it.begin(), confidence, classId, classNums);

if (confidence > this->confThreshold)

{

std::vector<float> kps;

if (this->hasMask)

{

std::vector<float> temp(it.begin() + 4 + classNums, it.end());

picked_proposals.push_back(temp);

}

int centerX = (int)(it[0]);

int centerY = (int)(it[1]);

int width = (int)(it[2]);

int height = (int)(it[3]);

int left = centerX - width / 2;

int top = centerY - height / 2;

boxes.emplace_back(left, top, width, height);

confs.emplace_back(confidence);

classIds.emplace_back(classId);

//keypoint1

kps.emplace_back(it[5]);

kps.emplace_back(it[6]);

//keypoint2

kps.emplace_back(it[8]);

kps.emplace_back(it[9]);

//keypoint3

kps.emplace_back(it[11]);

kps.emplace_back(it[12]);

//keypoint4

kps.emplace_back(it[14]);

kps.emplace_back(it[15]);

//keypoint5

kps.emplace_back(it[17]);

kps.emplace_back(it[18]);

kpss.emplace_back(kps);

}

}

std::vector<int> indices;

cv::dnn::NMSBoxes(boxes, confs, this->confThreshold, this->iouThreshold, indices);

if (this->hasMask)

{

float *maskOutput = outputTensors[1].GetTensorMutableData<float>();

std::vector<int> mask_protos_shape = {1, (int)this->outputShapes[1][1], (int)this->outputShapes[1][2], (int)this->outputShapes[1][3]};

mask_protos = cv::Mat(mask_protos_shape, CV_32F, maskOutput);

}

std::vector<Yolov8Result> results;

for (int idx : indices)

{

Yolov8Result res;

res.box = cv::Rect(boxes[idx]);

if (this->hasMask)

res.boxMask = this->getMask(cv::Mat(picked_proposals[idx]).t(), mask_protos);

else

res.boxMask = cv::Mat::zeros((int)this->inputShapes[0][2], (int)this->inputShapes[0][3], CV_8U);

res.kps = kpss[idx];

utils::scaleCoords(res.box, res.boxMask, this->maskThreshold, resizedImageShape, originalImageShape, res.kps);

res.conf = confs[idx];

res.classId = classIds[idx];

results.emplace_back(res);

}

return results;

}

std::vector<Yolov8Result> YOLOPredictor::predict(cv::Mat &image)

{

float *blob = nullptr;

std::vector<int64_t> inputTensorShape{1, 3, -1, -1};

this->preprocessing(image, blob, inputTensorShape);

size_t inputTensorSize = utils::vectorProduct(inputTensorShape);

std::vector<float> inputTensorValues(blob, blob + inputTensorSize);

std::vector<Ort::Value> inputTensors;

Ort::MemoryInfo memoryInfo = Ort::MemoryInfo::CreateCpu(

OrtAllocatorType::OrtArenaAllocator, OrtMemType::OrtMemTypeDefault);

inputTensors.push_back(Ort::Value::CreateTensor<float>(

memoryInfo, inputTensorValues.data(), inputTensorSize,

inputTensorShape.data(), inputTensorShape.size()));

std::vector<Ort::Value> outputTensors = this->session.Run(Ort::RunOptions{nullptr},

this->inputNames.data(),

inputTensors.data(),

1,

this->outputNames.data(),

this->outputNames.size());

cv::Size resizedShape = cv::Size((int)inputTensorShape[3], (int)inputTensorShape[2]);

std::vector<Yolov8Result> result = this->postprocessing(resizedShape,

image.size(),

outputTensors);

delete[] blob;

return result;

}

注:原始的目标检测代码来自网上,在此基础上增加关键点检测代码。

文章来源:https://blog.csdn.net/huzhifei/article/details/135275638

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

最新文章

- Python教程

- 深入理解 MySQL 中的 HAVING 关键字和聚合函数

- Qt之QChar编码(1)

- MyBatis入门基础篇

- 用Python脚本实现FFmpeg批量转换

- [C#]OpenCvSharp利用微信二维码引擎实现二维码识别

- 深入JS中的原型链

- Ubuntu fcitx Install

- DiffMIC:融合局部和全局分析,基于扩散模型的医学图像分类方法

- 【51单片机Keil+Proteus8.9+ADC0804】ADC实验 模拟转数字实验

- VBA技术资料MF97:多字段多条件高级筛选

- 【计算机毕业设计】在线点餐(堂食)平台

- Java中的集合List、Set和Map三大类以及常用方法

- mysql数据库清除自增id并从1开始(详细一次成功)

- vue-vant组件库