03MARL-联合策略与期望回报

前言

多智能体强化学习问题中的博弈论知识——联合策略与期望回报

一、MARL问题组成

二、联合策略与期望回报

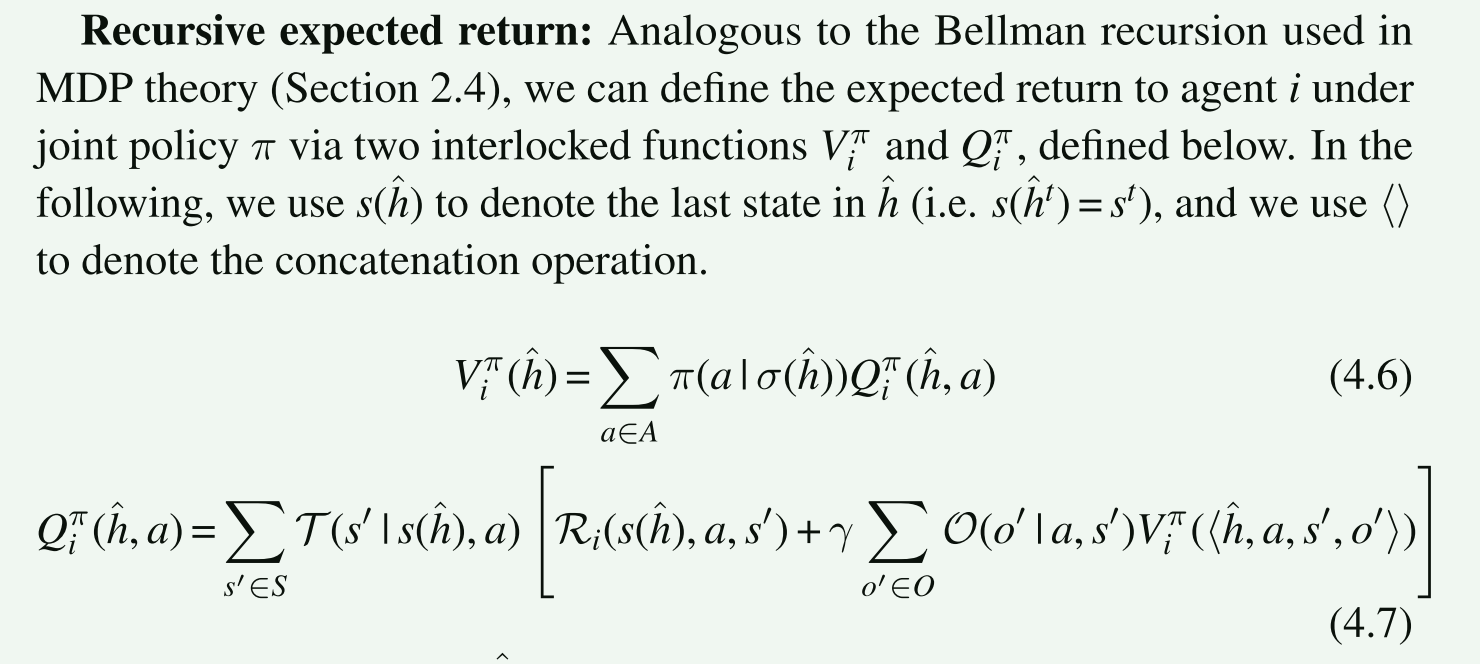

定义一种普遍的期望回报,能够用于所有的多智能体与环境的交互模型当中,因此在POSG的环境下定义,定义了两个等式计算期望回报,如下:

1.History-based expected return

在联合策略

π

\pi

π给定下,智能体i的期望回报为:

U

i

(

π

)

=

E

h

^

t

~

(

P

r

0

,

T

,

O

,

π

)

[

u

i

(

h

^

t

)

]

=

∑

h

^

t

∈

H

^

Pr

?

(

h

^

t

∣

π

)

u

i

(

h

^

t

)

\begin{aligned} U_i(\pi)& =\mathbb{E}_{\hat{h}^t\sim(\mathrm{Pr}^0,\mathcal{T},\mathcal{O},\pi)}\bigg[u_i(\hat{h}^t)\bigg] \\ &\begin{aligned}=\sum_{\hat{h}^t\in\hat{H}}\Pr(\hat{h}^t\mid\pi)u_i(\hat{h}^t)\end{aligned} \end{aligned}

Ui?(π)?=Eh^t~(Pr0,T,O,π)?[ui?(h^t)]=h^t∈H^∑?Pr(h^t∣π)ui?(h^t)??

其中,H包含所有时刻的历史观测序列,

Pr

?

(

h

^

t

∣

π

)

\Pr(\hat{h}^t\mid\pi)

Pr(h^t∣π)代表给定策略下的所有历史观测的概率,

Pr

?

(

h

^

t

∣

π

)

=

Pr

?

0

(

s

0

)

O

(

o

0

∣

?

,

s

0

)

∏

τ

=

0

t

?

1

π

(

a

τ

∣

h

τ

)

T

(

s

τ

+

1

∣

s

τ

,

a

τ

)

O

(

o

τ

+

1

∣

a

τ

,

s

τ

+

1

)

\begin{aligned}\Pr(\hat{h}^t\mid\pi)=&\Pr^0(s^0)\mathcal{O}(o^0\mid\emptyset,s^0)\prod_{\tau=0}^{t-1}\pi(a^\tau\mid h^\tau)\mathcal{T}(s^{\tau+1}\mid s^\tau,a^\tau)\mathcal{O}(o^{\tau+1}\mid a^\tau,s^{\tau+1})\end{aligned}

Pr(h^t∣π)=?Pr0(s0)O(o0∣?,s0)τ=0∏t?1?π(aτ∣hτ)T(sτ+1∣sτ,aτ)O(oτ+1∣aτ,sτ+1)?

u

i

(

h

^

t

)

u_i(\hat{h}^t)

ui?(h^t)是智能体i在观测序列的折扣回报,

u

i

(

h

^

t

)

=

∑

τ

=

0

t

?

1

γ

τ

R

i

(

s

τ

,

a

τ

,

s

τ

+

1

)

u_i(\hat{h}^t)=\sum_{\tau=0}^{t-1}\gamma^\tau\mathcal{R}_i(s^\tau,a^\tau,s^{\tau+1})

ui?(h^t)=∑τ=0t?1?γτRi?(sτ,aτ,sτ+1),使用

π

(

a

τ

∣

h

τ

)

\pi(a^\tau\mid h^\tau)

π(aτ∣hτ)表示观测序列条件下,联合动作的概率分布,前提的假设是智能体之间的动作是独立的,因此

π

(

a

τ

∣

h

τ

)

=

∏

j

∈

I

π

j

(

a

j

τ

∣

h

j

τ

)

\pi(a^\tau\mid h^\tau)=\prod_{j\in I}\pi_j(a_j^\tau\mid h_j^\tau)

π(aτ∣hτ)=∏j∈I?πj?(ajτ?∣hjτ?)。

2.Recursive expected return

类似于贝尔曼方程的形式定义期望回报,首先定义了联合策略下的状态价值函数与动作价值函数

在这里

V

i

π

(

h

^

)

V_i^\pi(\hat{h})

Viπ?(h^)代表智能体i在给定策略下,所有历史序列取得的值,可以当期望回报,而

Q

i

π

(

h

^

,

a

)

Q_i^\pi(\hat{h},a)

Qiπ?(h^,a)代表智能体i根据观测序列,在给定策略下,采取的联合动作带来的即使收益,进一步可以将回报期望写为:

U

i

(

π

)

=

E

s

0

~

P

r

0

,

o

0

~

O

(

?

∣

?

,

s

0

)

[

V

i

π

(

?

s

0

,

o

0

?

)

]

U_i(\pi){=}\mathbb{E}_{s^0\sim\mathrm{Pr}^0,o^0\sim\mathcal{O}(\cdot|\emptyset,s^0)}[V_i^\pi(\langle s^0,o^0\rangle)]

Ui?(π)=Es0~Pr0,o0~O(?∣?,s0)?[Viπ?(?s0,o0?)]

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

- Python教程

- 深入理解 MySQL 中的 HAVING 关键字和聚合函数

- Qt之QChar编码(1)

- MyBatis入门基础篇

- 用Python脚本实现FFmpeg批量转换

- Java中的锁(二)

- 什么是Vue.js的响应式系统(reactivity system)?如何实现数据的双向绑定?

- 星耀新春,集星探宝,卡奥斯开源社区双节活动上线啦!

- Java小案例-Sentinel的实现原理

- 大创项目推荐 图像识别-人脸识别与疲劳检测 - python opencv

- 文件处理的重定义,dup2函数

- flex 属性

- “SRP模型+”多技术融合在生态环境脆弱性评价模型构建、时空格局演变分析与RSEI 指数的生态质量评价及拓展应用

- 【css】实现渐变文字效果(linear-gradient&radial-gradient)

- Linux 网络设备 - TUN/TAP