15. Kubernetes Core Technologies - Probes


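1. Overview

In Kubernetes (k8s) we cannot judge whether an application is healthy just by checking whether it is running, because a running container does not always mean a healthy application. k8s therefore provides probes, which check whether the application inside a container is working properly, much like a heartbeat check.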

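2. Probe Types

Kubernetes has three types of probes: readiness, liveness, and startup probes. This article covers the first two: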

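2.1 Readiness Probe

A readiness probe determines whether a container has finished starting and can accept requests, that is, whether the container's Ready condition is True. If the readiness probe fails, Ready becomes False and the Pod's endpoint is removed from the Endpoints list of the corresponding Service, so no further requests are routed to the Pod until a later probe succeeds. With a readiness probe, Kubernetes can wait until the application has fully started before it lets a Service send traffic to a new replica.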
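The interaction with a Service described above can be sketched with a minimal manifest. The names `web` and `web-0` and the label `app: web` are hypothetical, chosen only for illustration; the probe targets `/` on port 80, which the stock nginx image serves by default.

```yaml
# Hypothetical Service that selects Pods labelled app=web.
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  selector:
    app: web
  ports:
  - port: 80
    targetPort: 80
---
# Pod behind the Service; it only receives traffic while its readiness probe passes.
apiVersion: v1
kind: Pod
metadata:
  name: web-0
  labels:
    app: web
spec:
  containers:
  - name: nginx
    image: nginx
    ports:
    - containerPort: 80
    readinessProbe:
      httpGet:
        path: /          # served by the default nginx image
        port: 80
      periodSeconds: 5
```

After applying it, `kubectl get endpoints web` should list the Pod IP only while the probe is passing.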

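2.2 Liveness Probe

A liveness probe determines whether a container is still alive, that is, whether the application inside it is still working. If the liveness probe finds the container unhealthy, the kubelet kills the container and then decides whether to restart it according to the Pod's restart policy. If a container defines no liveness probe, the kubelet treats the probe result as always successful.

Sometimes an application temporarily cannot serve requests for some reason (a backend failure, for example) while its process has not exited. k8s then cannot isolate the faulty Pod on its own, callers may still reach it, and the business becomes unstable. k8s provides livenessProbe to detect whether the application is running normally and to take remedial action when it is not.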

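3. Probe Methods

Each probe type supports three probe methods:

3.1 exec

Runs a command inside the container to check whether the service is healthy. It suits complex checks or services without an HTTP endpoint. An exit code of 0 means the container is healthy.

3.2 httpGet

Sends an HTTP request to check whether the service is healthy. A status code in the 200-399 range means the container is healthy.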
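As a minimal sketch of an httpGet probe, the fragment below spells out the optional `scheme` and `httpHeaders` fields next to `path` and `port`. The Pod name and the `X-Probe` header are hypothetical; the path `/` is assumed to return 200 because the stock nginx image serves a default page there.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: http-probe-demo        # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
    livenessProbe:
      httpGet:
        path: /                # default nginx page, expected to return 200
        port: 80
        scheme: HTTP           # HTTP is the default; HTTPS is the alternative
        httpHeaders:           # optional extra request headers
        - name: X-Probe        # hypothetical header, for illustration only
          value: liveness
      periodSeconds: 10
```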

3.3 tcpSocket

Performs a TCP check against the container's IP and port. If a TCP connection can be established, the container is considered healthy.

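4. Probe Configuration Fields

A probe has several optional fields that control its behavior more precisely:

- initialDelaySeconds: how many seconds to wait after the container starts before the first probe runs; the default is 0;

- periodSeconds: the interval between probes; the default is 10 seconds;

- timeoutSeconds: the probe timeout; a probe that takes longer than this counts as a failure; the default is 1 second;

- successThreshold: after a failure, the minimum number of consecutive successes required for the probe to count as successful; the default is 1, and it must be 1 for liveness probes;

- failureThreshold: after a success, the minimum number of consecutive failures required for the probe to count as failed; the default is 3.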
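To show how these fields fit together, here is a small sketch that sets all five on a single liveness probe. The Pod name is hypothetical and the values are illustrative, not recommendations.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probe-timing-demo      # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
    livenessProbe:
      httpGet:
        path: /
        port: 80
      initialDelaySeconds: 10  # first probe about 10s after the container starts
      periodSeconds: 10        # then one probe every 10s
      timeoutSeconds: 1        # a probe slower than 1s counts as a failure
      successThreshold: 1      # must be 1 for a liveness probe
      failureThreshold: 3      # 3 consecutive failures mark the container unhealthy
```

With these values, a container that stops responding is killed after roughly failureThreshold x periodSeconds, about 30 seconds of consecutive failures.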

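5. Using Probes

5.1 Readiness Probe

Create the Pod manifest: vim nginx-readiness-probe.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx-readiness-probe
spec:
  containers:
  - name: nginx-readiness-probe
    image: nginx
    readinessProbe:            # readiness probe
      httpGet:                 # send a GET request to the container's IP, port and URL path
        path: /healthz
        port: 80
      initialDelaySeconds: 10  # wait 10s before the first readiness check
      periodSeconds: 5         # probe every 5s
      successThreshold: 2      # after a failure, consecutive successes needed to count as ready

Here the probe method is httpGet: the kubelet sends an HTTP request to check the service, and a status code in the 200-399 range means the container is healthy.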

$ kubectl apply -f nginx-readiness-probe.yaml

pod/nginx-readiness-probe created

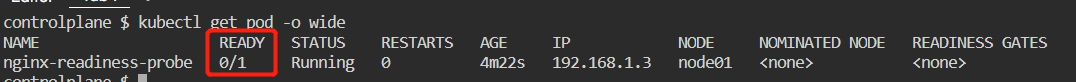

$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-readiness-probe 0/1 Running 0 32s 192.168.1.3 node01 <none> <none>

$ kubectl describe pod nginx-readiness-probe

Name: nginx-readiness-probe

Namespace: default

Priority: 0

Service Account: default

Node: node01/172.30.2.2

Start Time: Mon, 16 Jan 2023 03:23:11 +0000

Labels: <none>

Annotations: cni.projectcalico.org/containerID: 67b08cbc5b07020dcd7040cd47565c5405ee82641a9d3d68d9fd68b6b599c10f

cni.projectcalico.org/podIP: 192.168.1.3/32

cni.projectcalico.org/podIPs: 192.168.1.3/32

Status: Running

IP: 192.168.1.3

IPs:

IP: 192.168.1.3

Containers:

nginx-readiness-probe:

Container ID: containerd://23eca4eaeffce3e6801d3e7c26a60360d33b1fdb4046843ff9cf7c647adcf0a2

Image: nginx

Image ID: docker.io/library/nginx@sha256:b8f2383a95879e1ae064940d9a200f67a6c79e710ed82ac42263397367e7cc4e

Port: <none>

Host Port: <none>

State: Running

Started: Mon, 16 Jan 2023 03:23:16 +0000

Ready: False

Restart Count: 0

Readiness: http-get http://:80/healthz delay=10s timeout=1s period=5s #success=2 #failure=3

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-8xsm8 (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

kube-api-access-8xsm8:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 43s default-scheduler Successfully assigned default/nginx-readiness-probe to node01

Normal Pulling 43s kubelet Pulling image "nginx"

Normal Pulled 38s kubelet Successfully pulled image "nginx" in 4.968467021s (4.968471311s including waiting)

Normal Created 38s kubelet Created container nginx-readiness-probe

Normal Started 38s kubelet Started container nginx-readiness-probe

Warning Unhealthy 3s (x6 over 28s) kubelet Readiness probe failed: HTTP probe failed with statuscode: 404

The describe output shows that the readiness probe failed. The nginx container does not serve a /healthz path, so the response code is 404, which is outside the 200-399 range. That is why the Pod's READY column stays at 0/1.
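For comparison, a variant of the same manifest that should pass is sketched below. The only changes are a hypothetical Pod name and the probe path, which now points at `/`, a path the default nginx image is assumed to answer with 200.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-readiness-probe-ok   # hypothetical name
spec:
  containers:
  - name: nginx-readiness-probe-ok
    image: nginx
    readinessProbe:
      httpGet:
        path: /                    # exists in the default nginx image, unlike /healthz
        port: 80
      initialDelaySeconds: 10
      periodSeconds: 5
      successThreshold: 2          # ready only after two consecutive successes
```

Because successThreshold is 2, the Pod is expected to switch to READY 1/1 only after the second consecutive successful probe.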

5.2 Liveness Probe

Create the Pod manifest: vim centos-liveness-probe.yaml

apiVersion: v1
kind: Pod
metadata:
  name: centos-liveness-probe
spec:
  containers:
  - name: centos-liveness-probe
    image: centos
    args:                      # on start, run the command below; /tmp/healthy is deleted after 30s
    - /bin/sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
    livenessProbe:             # liveness probe
      exec:                    # run cat /tmp/healthy inside the container
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5   # wait 5s before the first liveness check
      periodSeconds: 5         # probe every 5s

In this manifest the Pod has a single container. The periodSeconds field tells the kubelet to run the liveness probe every 5 seconds, and initialDelaySeconds tells it to wait 5 seconds before the first probe. The kubelet runs cat /tmp/healthy inside the container as the probe. If the command succeeds and returns 0, the kubelet considers the container alive and healthy. If it returns a non-zero value, the kubelet kills the container and restarts it according to the restart policy.

When the container starts, it runs this command:

/bin/sh -c "touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600"

For the first 30 seconds of the container's life the file /tmp/healthy exists, so cat /tmp/healthy returns a success code. After 30 seconds, cat /tmp/healthy returns a failure code.

Create the Pod:

$ kubectl apply -f centos-liveness-probe.yaml

pod/centos-liveness-probe created

$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

centos-liveness-probe 1/1 Running 0 9s 192.168.1.6 node01 <none> <none>

# Within the first 30 seconds, view the Pod events

$ kubectl describe pod centos-liveness-probe

Name: centos-liveness-probe

Namespace: default

Priority: 0

Service Account: default

Node: node01/172.30.2.2

Start Time: Mon, 16 Jan 2023 03:42:49 +0000

Labels: <none>

Annotations: cni.projectcalico.org/containerID: 74ae52265e8236ec904a23c98f8eb6a929df6709c29643f8cf3a624274105ab6

cni.projectcalico.org/podIP: 192.168.1.6/32

cni.projectcalico.org/podIPs: 192.168.1.6/32

Status: Running

IP: 192.168.1.6

IPs:

IP: 192.168.1.6

Containers:

centos-liveness-probe:

Container ID: containerd://272c3f8bf293271f3657d98e1e23922312de46afebc3ed76a65104bbe4209e39

Image: centos

Image ID: docker.io/library/centos@sha256:a27fd8080b517143cbbbab9dfb7c8571c40d67d534bbdee55bd6c473f432b177

Port: <none>

Host Port: <none>

Args:

/bin/sh

-c

touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600

State: Running

Started: Mon, 16 Jan 2023 03:42:50 +0000

Ready: True

Restart Count: 0

Liveness: exec [cat /tmp/healthy] delay=5s timeout=1s period=5s #success=1 #failure=3

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-d69p9 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-d69p9:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 17s default-scheduler Successfully assigned default/centos-liveness-probe to node01

Normal Pulling 16s kubelet Pulling image "centos"

Normal Pulled 16s kubelet Successfully pulled image "centos" in 458.064227ms (458.070248ms including waiting)

Normal Created 16s kubelet Created container centos-liveness-probe

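Normal Started 16s kubelet Started container centos-liveness-probe

As shown above, within the first 30 seconds the output reports no liveness probe failures.

After waiting more than 30 seconds, view the Pod details again: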

$ kubectl describe pod centos-liveness-probe

Name: centos-liveness-probe

Namespace: default

Priority: 0

Service Account: default

Node: node01/172.30.2.2

Start Time: Mon, 16 Jan 2023 03:42:49 +0000

Labels: <none>

Annotations: cni.projectcalico.org/containerID: 74ae52265e8236ec904a23c98f8eb6a929df6709c29643f8cf3a624274105ab6

cni.projectcalico.org/podIP: 192.168.1.6/32

cni.projectcalico.org/podIPs: 192.168.1.6/32

Status: Running

IP: 192.168.1.6

IPs:

IP: 192.168.1.6

Containers:

centos-liveness-probe:

Container ID: containerd://b03f2aaf2b854071223aae43cdfee4b9d1d4d3dd03f8ee7270b857817d362ca7

Image: centos

Image ID: docker.io/library/centos@sha256:a27fd8080b517143cbbbab9dfb7c8571c40d67d534bbdee55bd6c473f432b177

Port: <none>

Host Port: <none>

Args:

/bin/sh

-c

touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600

State: Running

Started: Mon, 16 Jan 2023 03:44:05 +0000

Last State: Terminated

Reason: Error

Exit Code: 137

Started: Mon, 16 Jan 2023 03:42:50 +0000

Finished: Mon, 16 Jan 2023 03:44:04 +0000

Ready: True

Restart Count: 1

Liveness: exec [cat /tmp/healthy] delay=5s timeout=1s period=5s #success=1 #failure=3

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-d69p9 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-d69p9:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 109s default-scheduler Successfully assigned default/centos-liveness-probe to node01

Normal Pulled 108s kubelet Successfully pulled image "centos" in 458.064227ms (458.070248ms including waiting)

Warning Unhealthy 64s (x3 over 74s) kubelet Liveness probe failed: cat: /tmp/healthy: No such file or directory

Normal Killing 64s kubelet Container centos-liveness-probe failed liveness probe, will be restarted

Normal Pulling 33s (x2 over 108s) kubelet Pulling image "centos"

Normal Created 33s (x2 over 108s) kubelet Created container centos-liveness-probe

Normal Started 33s (x2 over 108s) kubelet Started container centos-liveness-probe

Normal Pulled 33s kubelet Successfully pulled image "centos" in 405.865965ms (405.870664ms including waiting)

Near the bottom of the output, the events show that the liveness probe failed (Liveness probe failed: cat: /tmp/healthy: No such file or directory) and that the failing container was killed and re-created.

Wait another 30 seconds and the container is restarted again; the output shows that RESTARTS has increased by 1. Note that the RESTARTS counter increments as soon as a failed container returns to the running state:

$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

centos-liveness-probe 1/1 Running 2 (69s ago) 3m39s 192.168.1.6 node01 <none> <none>

Because the default restartPolicy is Always, the centos-liveness-probe container will keep being restarted.
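To see the effect of the restart policy, the same Pod can be sketched with `restartPolicy: Never`. The Pod name below is hypothetical and the behavior described is the expected one, not output captured from the cluster used in this article.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: centos-liveness-probe-once   # hypothetical name
spec:
  restartPolicy: Never               # do not restart containers after they are killed
  containers:
  - name: centos-liveness-probe
    image: centos
    args:
    - /bin/sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
    livenessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5
      periodSeconds: 5
```

With Never, the kubelet should still kill the container once the probe has failed three times in a row, but it does not start it again, so RESTARTS is expected to stay at 0 instead of climbing.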

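5.3 TCP Readiness/Liveness Probes

The two previous examples demonstrated the httpGet and exec methods. This one demonstrates the tcpSocket method.

Create the manifest file: vim tcp-socket-probe.yaml

apiVersion: v1
kind: Pod
metadata:
  name: goproxy
  labels:
    app: goproxy
spec:
  containers:
  - name: goproxy
    image: registry.k8s.io/goproxy:0.1
    ports:
    - containerPort: 8080
    readinessProbe:            # readiness probe
      tcpSocket:
        port: 8080
      initialDelaySeconds: 5
      periodSeconds: 10
    livenessProbe:             # liveness probe
      tcpSocket:
        port: 8080
      initialDelaySeconds: 15
      periodSeconds: 20

With this configuration, the kubelet sends the first readiness probe 5 seconds after the container starts. The probe tries to connect to port 8080 of the goproxy container. If it succeeds, the Pod is marked ready, and the kubelet keeps running the probe every 10 seconds.

Besides the readiness probe, the configuration includes a liveness probe. The kubelet runs the first liveness probe 15 seconds after the container starts. Like the readiness probe, it tries to connect to port 8080 of the goproxy container. If the liveness probe fails, the container is restarted.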

$ kubectl apply -f tcp-socket-probe.yaml

pod/goproxy created

$ kubectl get pod/goproxy -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

goproxy 1/1 Running 0 99s 192.168.1.3 node01 <none> <none>

READY is 1/1, which means the readiness probe succeeded, and STATUS is Running with no restarts, which means the goproxy container is currently healthy.

$ kubectl describe pod/goproxy

Name: goproxy

Namespace: default

Priority: 0

Service Account: default

Node: node01/172.30.2.2

Start Time: Mon, 16 Jan 2023 05:15:18 +0000

Labels: app=goproxy

Annotations: cni.projectcalico.org/containerID: 24cf48d7ee8e5ea9fe846afb16510c46cd63c9214c3a54aa2d548e647aa162fb

cni.projectcalico.org/podIP: 192.168.1.3/32

cni.projectcalico.org/podIPs: 192.168.1.3/32

Status: Running

IP: 192.168.1.3

IPs:

IP: 192.168.1.3

Containers:

goproxy:

Container ID: containerd://c07e285dda94eb1ebc75c7aef01dc1816d4f027c007a7bb741b2a023ab4112d2

Image: registry.k8s.io/goproxy:0.1

Image ID: registry.k8s.io/goproxy@sha256:5334c7ad43048e3538775cb09aaf184f5e8acf4b0ea60e3bc8f1d93c209865a5

Port: 8080/TCP

Host Port: 0/TCP

State: Running

Started: Mon, 16 Jan 2023 05:15:21 +0000

Ready: True

Restart Count: 0

Liveness: tcp-socket :8080 delay=15s timeout=1s period=20s #success=1 #failure=3

Readiness: tcp-socket :8080 delay=5s timeout=1s period=10s #success=1 #failure=3

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-4bcg8 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-4bcg8:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 3m27s default-scheduler Successfully assigned default/goproxy to node01

Normal Pulling 3m26s kubelet Pulling image "registry.k8s.io/goproxy:0.1"

Normal Pulled 3m24s kubelet Successfully pulled image "registry.k8s.io/goproxy:0.1" in 2.531715761s (2.53172162s including waiting)

Normal Created 3m24s kubelet Created container goproxy

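Normal Started 3m24s kubelet Started container goproxy

6. Liveness Probe vs. Readiness Probe

| | Liveness probe | Readiness probe |
| --- | --- | --- |
| Purpose | Determine whether the container is alive | Determine whether the Pod is ready |
| Detection period | While the Pod is running | Most relevant at Pod startup, but keeps running for the container's lifetime |
| On failure | Kill the container | Stop sending traffic to the Pod |
| Probe methods | httpGet, exec, tcpSocket | httpGet, exec, tcpSocket |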
