高分辨率图像合成;可控运动合成;虚拟试衣;在FPGA上高效运行二值Transformer

本文首发于公众号:机器感知

高分辨率图像合成;可控运动合成;虚拟试衣;在FPGA上高效运行二值Transformer

Scalable High-Resolution Pixel-Space Image Synthesis with Hourglass Diffusion Transformers

We present the Hourglass Diffusion Transformer (HDiT), an image generative model that exhibits linear scaling with pixel count, supporting training at high-resolution (e.g. $1024 \times 1024$) directly in pixel-space. Building on the Transformer architecture, which is known to scale to billions of parameters, it bridges the gap between the efficiency of convolutional U-Nets and the scalability of Transformers. HDiT trains successfully without typical high-resolution training techniques such as multiscale architectures, latent autoencoders or self-conditioning. We demonstrate that HDiT performs competitively with existing models on ImageNet $256^2$, and sets a new state-of-the-art for diffusion models on FFHQ-$1024^2$.

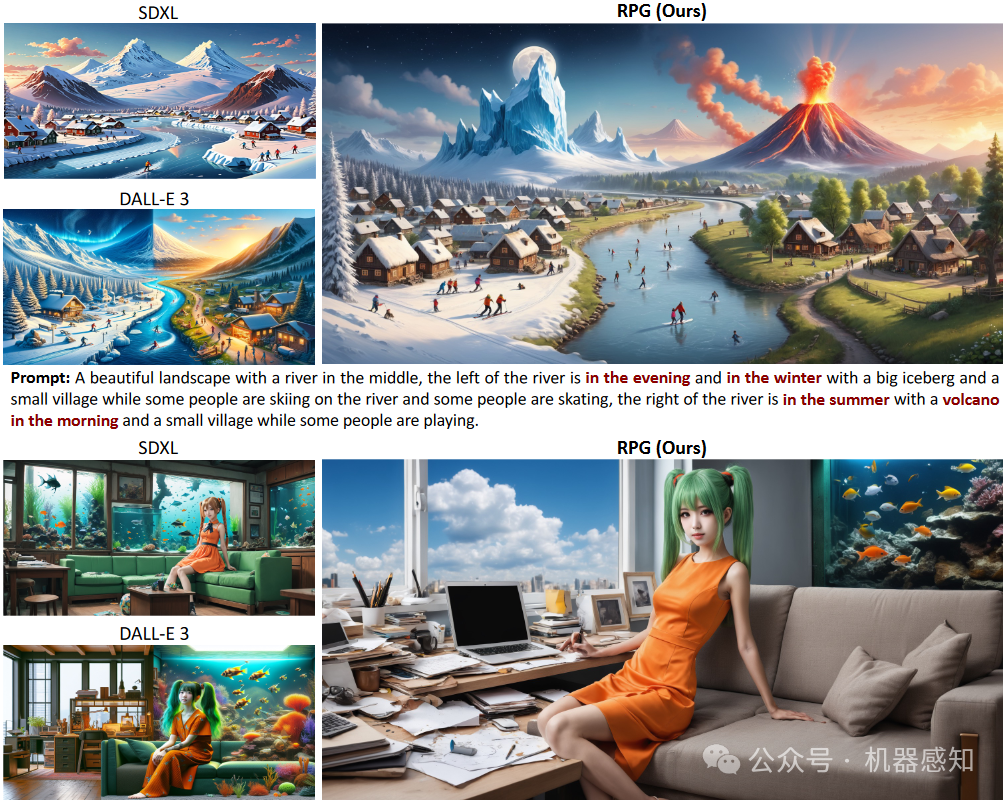

Mastering Text-to-Image Diffusion: Recaptioning, Planning, and Generating with Multimodal LLMs

In this paper, we propose a brand new training-free text-to-image generation/editing framework, namely Recaption, Plan and Generate (RPG), harnessing the powerful chain-of-thought reasoning ability of multimodal LLMs to enhance the compositionality of text-to-image diffusion models. Our approach employs the MLLM as a global planner to decompose the process of generating complex images into multiple simpler generation tasks within subregions. Extensive experiments demonstrate our RPG outperforms state-of-the-art text-to-image diffusion models, including DALL-E 3 and SDXL, particularly in multi-category object composition and text-image semantic alignment.

MotionMix: Weakly-Supervised Diffusion for Controllable Motion Generation

This paper proposed MotionMix, a simple yet effective weakly-supervised diffusion model that leverages both noisy and unannotated motion sequences. Extensive experiments on several benchmarks demonstrate that our MotionMix, as a versatile framework, consistently achieves state-of-the-art performances on text-to-motion, action-to-motion, and music-to-dance tasks.

Product-Level Try-on: Characteristics-preserving Try-on with Realistic Clothes Shading and Wrinkles

We propose a novel diffusion-based Product-level virtual try-on pipeline,\ie PLTON, which can preserve the fine details of logos and embroideries while producing realistic clothes shading and wrinkles. To enhance retention, a Two-stage Blended Denoising method is proposed to guide the diffusion process for correct spatial layout and color. PLTON is finetuned only with our collected small-size try-on dataset.

BETA: Binarized Energy-Efficient Transformer Accelerator at the Edge

Existing binary Transformers are promising in edge deployment due to their compact model size, low computational complexity, and considerable inference accuracy. However, deploying binary Transformers faces challenges on prior processors due to inefficient execution of quantized matrix multiplication (QMM) and the energy consumption overhead caused by multi-precision activations. To tackle the challenges above, we first develop a computation flow abstraction method for binary Transformers to improve QMM execution efficiency by optimizing the computation order. Furthermore, a binarized energy-efficient Transformer accelerator, namely BETA, is proposed to boost the efficient deployment at the edge. Experimental results evaluated on ZCU102 FPGA show BETA achieves an average energy efficiency of 174 GOPS/W, which is 1.76~21.92x higher than prior FPGA-based accelerators, showing BETA's good potential for edge Transformer acceleration.

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

- Python教程

- 深入理解 MySQL 中的 HAVING 关键字和聚合函数

- Qt之QChar编码(1)

- MyBatis入门基础篇

- 用Python脚本实现FFmpeg批量转换

- Nginx全面配置

- Shell:计算时间差 && 显示时分秒

- 进程的概念之进程的状态

- 芒果RT-DETR改进实验:深度集成版目标检测 RT-DETR 热力图来了!支持自定义数据集训练出来的模型

- 使用van-calendar手机上用手指没办法滑动。电脑浏览器鼠标可以滑动

- Kubernetes技术与架构-调度 1

- Echarts图表如何利用formatter自定义tooltip的内容和样式

- 目标检测YOLO实战应用案例100讲-基于目标检测的水稻病害识别(续)

- 弹性布局(Flex)

- 代码随想录算法训练营第三十天|332.重新安排行程、51. N皇后、37. 解数独