[PyTorch] Tensor Operations in PyTorch

Preface

This article introduces the APIs for working with Tensors.

I. Creating Tensors

1. TENSOR

torch.tensor(data, *, dtype=None, device=None, requires_grad=False, pin_memory=False) → Tensor

Parameters:

data (array_like) – Initial data for the tensor. Can be a list, tuple, NumPy ndarray, scalar, and other types.

Keyword Arguments

dtype (torch.dtype, optional) – the desired data type of returned tensor. Default: if None, infers data type from data.

device (torch.device, optional) – the device of the constructed tensor. If None and data is a tensor then the device of data is used. If None and data is not a tensor then the result tensor is constructed on the current device.

requires_grad (bool, optional) – If autograd should record operations on the returned tensor. Default: False.

pin_memory (bool, optional) – If set, returned tensor would be allocated in the pinned memory. Works only for CPU tensors. Default: False.

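Constructs a tensor with no autograd history (also known as a "leaf tensor"; see Autograd mechanics) by copying data.

WARNING:

When working with tensors, prefer torch.Tensor.clone(), torch.Tensor.detach(), and torch.Tensor.requires_grad_() for readability. If t is a tensor, torch.tensor(t) is equivalent to t.clone().detach(), and torch.tensor(t, requires_grad=True) is equivalent to t.clone().detach().requires_grad_(True).

Compared with torch.as_tensor() and torch.from_numpy():

torch.as_tensor() preserves autograd history and avoids copies where possible. torch.from_numpy() creates a tensor that shares storage with a NumPy array.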

import torch

torch.tensor([[0.1, 1.2], [2.2, 3.1], [4.9, 5.2]])

torch.tensor([0, 1]) # Type inference on data

torch.tensor([[0.11111, 0.222222, 0.3333333]],
             dtype=torch.float64,
             device=torch.device('cuda:0')) # creates a double tensor on a CUDA device

torch.tensor(3.14159) # Create a zero-dimensional (scalar) tensor

torch.tensor([]) # Create an empty tensor (of size (0,))
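The copy-versus-share distinction described above can be checked directly. The following is a minimal sketch, assuming NumPy is installed and everything runs on the CPU; the variable names are illustrative only.

```python
import numpy as np
import torch

arr = np.array([1, 2, 3])

copied = torch.tensor(arr)           # always copies the data
shared = torch.from_numpy(arr)       # shares memory with arr
maybe_shared = torch.as_tensor(arr)  # avoids a copy when it can

arr[0] = 100  # mutate the NumPy array in place

print(copied)        # unaffected by the mutation
print(shared)        # reflects the mutation
print(maybe_shared)  # reflects the mutation

# For an existing tensor t, t.clone().detach() is the recommended
# spelling of torch.tensor(t): a detached copy that is a leaf tensor.
t = torch.tensor([1.0, 2.0], requires_grad=True)
u = t.clone().detach()
print(u.requires_grad, u.is_leaf)
```

Mutating `arr` after construction is only a convenient way to reveal which tensors alias its memory.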

2. SPARSE_COO_TENSOR

torch.sparse_coo_tensor(indices, values, size=None, *, dtype=None, device=None, requires_grad=False, check_invariants=None, is_coalesced=None) → Tensor

Parameters:

indices (array_like) – Initial data for the tensor. Can be a list, tuple, NumPy ndarray, scalar, and other types. Will be cast to a torch.LongTensor internally. The indices are the coordinates of the non-zero values in the matrix, and thus should be two-dimensional where the first dimension is the number of tensor dimensions and the second dimension is the number of non-zero values.

values (array_like) – Initial values for the tensor. Can be a list, tuple, NumPy ndarray, scalar, and other types.

size (list, tuple, or torch.Size, optional) – Size of the sparse tensor. If not provided the size will be inferred as the minimum size big enough to hold all non-zero elements.

Keyword Arguments:

dtype (torch.dtype, optional) – the desired data type of returned tensor. Default: if None, infers data type from values.

device (torch.device, optional) – the desired device of returned tensor. Default: if None, uses the current device for the default tensor type (see torch.set_default_tensor_type()). device will be the CPU for CPU tensor types and the current CUDA device for CUDA tensor types.

requires_grad (bool, optional) – If autograd should record operations on the returned tensor. Default: False.

check_invariants (bool, optional) – If sparse tensor invariants are checked. Default: as returned by torch.sparse.check_sparse_tensor_invariants.is_enabled(), initially False.

is_coalesced (bool, optional) – When True, the caller is responsible for providing tensor indices that correspond to a coalesced tensor. If the check_invariants flag is False, no error will be raised if the prerequisites are not met and this will lead to silently incorrect results. To force coalescing, use coalesce() on the resulting Tensor. Default: None: except for trivial cases (e.g. nnz < 2) the resulting Tensor has is_coalesced set to False.

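Constructs a sparse tensor in COO (coordinate) format with the given indices and values.

When is_coalesced is unspecified or None, this function returns an uncoalesced tensor.

If the device argument is not specified, the devices of the given values and indices tensors must match. If it is specified, however, the input tensors are converted to the given device, which in turn determines the device of the constructed sparse tensor.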

i = torch.tensor([[0, 1, 1],
                  [2, 0, 2]])

v = torch.tensor([3, 4, 5], dtype=torch.float32)

torch.sparse_coo_tensor(i, v, [2, 4])

torch.sparse_coo_tensor(i, v) # Shape inference

torch.sparse_coo_tensor(i, v, [2, 4],
                        dtype=torch.float64,
                        device=torch.device('cuda:0'))

S = torch.sparse_coo_tensor(torch.empty([1, 0]), [], [1])

S = torch.sparse_coo_tensor(torch.empty([1, 0]), torch.empty([0, 2]), [1, 2])
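To illustrate what "uncoalesced" means in practice, here is a small sketch in which two entries deliberately share the same coordinate. The index and value data are made up for the illustration.

```python
import torch

# Two entries share the coordinate (0, 2); the third sits at (1, 0).
indices = torch.tensor([[0, 0, 1],
                        [2, 2, 0]])
values = torch.tensor([3.0, 4.0, 5.0])

s = torch.sparse_coo_tensor(indices, values, size=(2, 4))
print(s.is_coalesced())  # the constructor does not merge duplicates

c = s.coalesce()         # sums duplicate entries and sorts the indices
print(c.indices())
print(c.values())
print(c.to_dense())
```

After `coalesce()`, the duplicate entries at (0, 2) are summed into a single stored value.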

3. SPARSE_CSR_TENSOR

torch.sparse_csr_tensor(crow_indices, col_indices, values, size=None, *, dtype=None, device=None, requires_grad=False, check_invariants=None) → Tensor

Parameters:

crow_indices (array_like) – (B+1)-dimensional array of size (*batchsize, nrows + 1). The last element of each batch is the number of non-zeros. This tensor encodes the index in values and col_indices depending on where the given row starts. Each successive number in the tensor subtracted by the number before it denotes the number of elements in a given row.

col_indices (array_like) – Column co-ordinates of each element in values. (B+1)-dimensional tensor with the same length as values.

values (array_like) – Initial values for the tensor. Can be a list, tuple, NumPy ndarray, scalar, and other types that represents a (1+K)-dimensional tensor where K is the number of dense dimensions.

size (list, tuple, torch.Size, optional) – Size of the sparse tensor: (*batchsize, nrows, ncols, densesize). If not provided, the size will be inferred as the minimum size big enough to hold all non-zero elements.

Keyword Arguments:

dtype (torch.dtype, optional) – the desired data type of returned tensor. Default: if None, infers data type from values.

device (torch.device, optional) – the desired device of returned tensor. Default: if None, uses the current device for the default tensor type (see torch.set_default_tensor_type()). device will be the CPU for CPU tensor types and the current CUDA device for CUDA tensor types.

requires_grad (bool, optional) – If autograd should record operations on the returned tensor. Default: False.

check_invariants (bool, optional) – If sparse tensor invariants are checked. Default: as returned by torch.sparse.check_sparse_tensor_invariants.is_enabled(), initially False.

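Constructs a sparse tensor in CSR (Compressed Sparse Row) format with the given values at the given crow_indices and col_indices. Sparse matrix multiplication in CSR format is typically faster than for sparse tensors in COO format. Make sure to check the note on the data type of the indices.

If the device argument is not specified, the devices of the given values and indices tensors must match. If it is specified, however, the input tensors are converted to the given device, which in turn determines the device of the constructed sparse tensor.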

crow_indices = [0, 2, 4]

col_indices = [0, 1, 0, 1]

values = [1, 2, 3, 4]

torch.sparse_csr_tensor(torch.tensor(crow_indices, dtype=torch.int64),
                        torch.tensor(col_indices, dtype=torch.int64),
                        torch.tensor(values), dtype=torch.double)
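The meaning of crow_indices is easier to see once the result is converted back to a dense matrix. This sketch reuses the same data as the example above; the explicit `size=(2, 2)` is an addition for clarity.

```python
import torch

crow_indices = torch.tensor([0, 2, 4], dtype=torch.int64)
col_indices = torch.tensor([0, 1, 0, 1], dtype=torch.int64)
values = torch.tensor([1.0, 2.0, 3.0, 4.0])

csr = torch.sparse_csr_tensor(crow_indices, col_indices, values, size=(2, 2))

# Differences between consecutive crow_indices entries give the
# number of stored elements in each row.
nnz_per_row = crow_indices[1:] - crow_indices[:-1]
print(nnz_per_row)

# Row 0 holds values[0:2], row 1 holds values[2:4].
print(csr.to_dense())
```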

4. ASARRAY

torch.asarray(obj, *, dtype=None, device=None, copy=None, requires_grad=False) → Tensor

Parameters:

obj (object) – a tensor, NumPy array, DLPack Capsule, object that implements Python’s buffer protocol, scalar, or sequence of scalars.

Keyword Arguments:

dtype (torch.dtype, optional) – the datatype of the returned tensor. Default: None, which causes the datatype of the returned tensor to be inferred from obj.

copy (bool, optional) – controls whether the returned tensor shares memory with obj. Default: None, which causes the returned tensor to share memory with obj whenever possible. If True then the returned tensor does not share its memory. If False then the returned tensor shares its memory with obj and an error is thrown if it cannot.

device (torch.device, optional) – the device of the returned tensor. Default: None, which causes the device of obj to be used. Or, if obj is a Python sequence, the current default device will be used.

requires_grad (bool, optional) – whether the returned tensor requires grad. Default: False, which causes the returned tensor not to require a gradient. If True, then the returned tensor will require a gradient, and if obj is also a tensor with an autograd history then the returned tensor will have the same history.

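Converts obj to a tensor.

obj can be one of:

- a tensor

- a NumPy array or a NumPy scalar

- a DLPack capsule

- an object that implements Python's buffer protocol

- a scalar

- a sequence of scalars

When obj is a tensor, NumPy array, or DLPack capsule, the returned tensor will, by default, not require a gradient, have the same data type as obj, be on the same device, and share memory with it. These properties can be controlled with the dtype, device, copy, and requires_grad keyword arguments. If the returned tensor has a different data type, is on a different device, or a copy is requested, it will not share memory with obj. If requires_grad is True, the returned tensor will require a gradient, and if obj is also a tensor with an autograd history, the returned tensor will have the same history.

When obj is not a tensor, NumPy array, or DLPack capsule but implements Python's buffer protocol, the buffer is interpreted as an array of bytes grouped according to the size of the data type passed to the dtype keyword argument. (If no data type is passed, the default floating-point data type is used.) The returned tensor will have the specified data type (or the default floating-point data type if none is specified) and, by default, be on the CPU device and share memory with the buffer.

When obj is a NumPy scalar, the returned tensor will be a 0-dimensional tensor on the CPU that does not share memory (i.e. copy=True). By default, the data type will be the PyTorch data type corresponding to the NumPy scalar's data type.

When obj is none of the above but a scalar or a sequence of scalars, the returned tensor will, by default, infer its data type from the scalar values, be on the current default device, and not share memory.

Compared with torch.tensor(), torch.from_numpy(), torch.frombuffer(), and torch.from_dlpack():

torch.tensor() creates a tensor that always copies the data from the input object. torch.from_numpy() creates a tensor that always shares memory with a NumPy array. torch.frombuffer() creates a tensor that always shares memory with an object that implements the buffer protocol. torch.from_dlpack() creates a tensor that always shares memory with a DLPack capsule.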

a = torch.tensor([1, 2, 3])

# Shares memory with tensor 'a'

b = torch.asarray(a)

a.data_ptr() == b.data_ptr()

# Forces memory copy

c = torch.asarray(a, copy=True)

a.data_ptr() == c.data_ptr()

a = torch.tensor([1., 2., 3.], requires_grad=True)

b = a + 2

print(b)

# Shares memory with tensor 'b', with no grad

c = torch.asarray(b)

print(c)

# Shares memory with tensor 'b', retaining autograd history

d = torch.asarray(b, requires_grad=True)

print(d)

import numpy

array = numpy.array([1, 2, 3])

# Shares memory with array 'array'

t1 = torch.asarray(array)

array.__array_interface__['data'][0] == t1.data_ptr()

# Copies memory due to dtype mismatch

t2 = torch.asarray(array, dtype=torch.float32)

array.__array_interface__['data'][0] == t2.data_ptr()

scalar = numpy.float64(0.5)

print(torch.asarray(scalar))

5. AS_TENSOR

torch.as_tensor(data, dtype=None, device=None) → Tensor

Parameters:

data (array_like) – Initial data for the tensor. Can be a list, tuple, NumPy ndarray, scalar, and other types.

dtype (torch.dtype, optional) – the desired data type of returned tensor. Default: if None, infers data type from data.

device (torch.device, optional) – the device of the constructed tensor. If None and data is a tensor then the device of data is used. If None and data is not a tensor then the result tensor is constructed on the current device.

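Converts data into a tensor, sharing data and preserving autograd history where possible.

If data is already a tensor with the requested dtype and device, data itself is returned. If data is a tensor with a different dtype or device, it is copied as if by data.to(dtype=dtype, device=device).

If data is a NumPy array (an ndarray) with the same dtype and device, a tensor is constructed using torch.from_numpy().

Compared with torch.tensor():

torch.tensor() never shares its data and creates a new "leaf tensor" (see Autograd mechanics).

import numpy

a = numpy.array([1, 2, 3])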

t = torch.as_tensor(a)

print(t)

t[0] = -1

print(a)

a = numpy.array([1, 2, 3])

t = torch.as_tensor(a, device=torch.device('cuda'))

print(t)

t[0] = -1

print(a)
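The rules above also apply when the input is already a tensor. The following sketch, which assumes CPU tensors and uses illustrative names, compares data pointers to show when a copy is made and checks that autograd history is kept.

```python
import torch

t = torch.tensor([1.0, 2.0, 3.0])

same = torch.as_tensor(t)                            # dtype and device already match
converted = torch.as_tensor(t, dtype=torch.float64)  # dtype differs, so data is copied

print(same.data_ptr() == t.data_ptr())       # no copy was made
print(converted.data_ptr() == t.data_ptr())  # a new buffer was allocated

# Autograd history is preserved when the input is returned as is.
a = torch.tensor([1.0, 2.0], requires_grad=True)
b = a * 2
c = torch.as_tensor(b)
print(c.grad_fn is not None)
```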

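6. FROM_NUMPY

torch.from_numpy(ndarray) → Tensor

Creates a tensor from a numpy.ndarray.

The returned tensor and ndarray share the same memory. Modifications to the tensor are reflected in the ndarray and vice versa. The returned tensor is not resizable.

It currently accepts ndarrays with the following dtypes: numpy.float64, numpy.float32, numpy.float16, numpy.complex64, numpy.complex128, numpy.int64, numpy.int32, numpy.int16, numpy.int8, numpy.uint8, and bool.

import numpy

a = numpy.array([1, 2, 3])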

t = torch.from_numpy(a)

print(t)

t[0] = -1

print(a)

7. FROMBUFFER

torch.frombuffer(buffer, *, dtype, count=-1, offset=0, requires_grad=False) → Tensor

Parameters:

buffer (object) – a Python object that exposes the buffer interface.

Keyword Arguments:

dtype (torch.dtype) – the desired data type of returned tensor.

count (int, optional) – the number of desired elements to be read. If negative, all the elements (until the end of the buffer) will be read. Default: -1.

offset (int, optional) – the number of bytes to skip at the start of the buffer. Default: 0.

requires_grad (bool, optional) – If autograd should record operations on the returned tensor. Default: False.

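Creates a 1-dimensional tensor from an object that implements the Python buffer protocol.

Skips the first offset bytes in the buffer and interprets the remaining raw bytes as a 1-dimensional tensor of type dtype with count elements.

Note that one of the following must be true:

1. count is a positive non-zero number, and the total number of bytes in the buffer is at least offset plus count times the size (in bytes) of dtype.

2. count is negative, and the length (number of bytes) of the buffer minus offset is a multiple of the size (in bytes) of dtype.

The returned tensor and the buffer share the same memory. Modifications to the tensor are reflected in the buffer and vice versa. The returned tensor is not resizable.

This function increments the reference count of the object that owns the shared memory. Therefore, that memory will not be deallocated before the returned tensor goes out of scope.

Note:

1. The behavior of this function is undefined when it is passed an object that implements the buffer protocol and whose data is not on the CPU. Doing so is likely to cause a segmentation fault.

2. This function does not try to infer the dtype (hence, it is not optional). Passing a dtype different from that of the source may result in unexpected behavior.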

import array

a = array.array('i', [1, 2, 3])

t = torch.frombuffer(a, dtype=torch.int32)

print(t)

t[0] = -1

print(a)

# Interprets the signed char bytes as 32-bit integers.

# Each 4 signed char elements will be interpreted as

# 1 signed 32-bit integer.

a = array.array('b', [-1, 0, 0, 0])

torch.frombuffer(a, dtype=torch.int32)

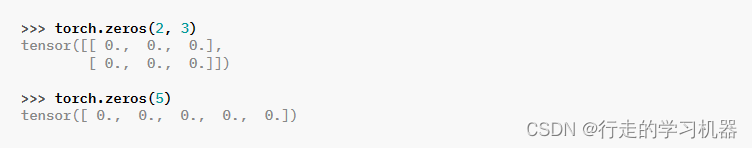
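The count and offset arguments described above can be combined to view a slice of a buffer. This is a minimal sketch that assumes the C int behind array type code 'i' is 4 bytes wide, which matches torch.int32 on common platforms.

```python
import array
import torch

buf = array.array('i', [10, 20, 30, 40, 50])
item = buf.itemsize  # assumed to be 4 bytes, matching torch.int32

# Skip the first element (offset is in bytes), then read three elements.
t = torch.frombuffer(buf, dtype=torch.int32, count=3, offset=item)
print(t)

# The tensor is a view on the buffer, so writes go through.
t[0] = -1
print(buf)
```

Note that offset is measured in bytes while count is measured in elements.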

8. ZEROS and ZEROS_LIKE

torch.zeros(*size, *, out=None, dtype=None, layout=torch.strided, device=None, requires_grad=False) → Tensor

Parameters:

size (int…) – a sequence of integers defining the shape of the output tensor. Can be a variable number of arguments or a collection like a list or tuple.

Keyword Arguments:

out (Tensor, optional) – the output tensor.

dtype (torch.dtype, optional) – the desired data type of returned tensor. Default: if None, uses a global default (see torch.set_default_tensor_type()).

layout (torch.layout, optional) – the desired layout of returned Tensor. Default: torch.strided.

device (torch.device, optional) – the desired device of returned tensor. Default: if None, uses the current device for the default tensor type (see torch.set_default_tensor_type()). device will be the CPU for CPU tensor types and the current CUDA device for CUDA tensor types.

requires_grad (bool, optional) – If autograd should record operations on the returned tensor. Default: False.

Returns a tensor filled with the scalar value 0, with the shape defined by the variable argument size.

torch.zeros_like(input, *, dtype=None, layout=None, device=None, requires_grad=False, memory_format=torch.preserve_format) → Tensor

Parameters:

input (Tensor) – the size of input will determine size of the output tensor.

Keyword Arguments:

dtype (torch.dtype, optional) – the desired data type of returned Tensor. Default: if None, defaults to the dtype of input.

layout (torch.layout, optional) – the desired layout of returned tensor. Default: if None, defaults to the layout of input.

device (torch.device, optional) – the desired device of returned tensor. Default: if None, defaults to the device of input.

requires_grad (bool, optional) – If autograd should record operations on the returned tensor. Default: False.

memory_format (torch.memory_format, optional) – the desired memory format of returned Tensor. Default: torch.preserve_format.

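Returns a tensor filled with the scalar value 0, with the same size as input. torch.zeros_like(input) is equivalent to torch.zeros(input.size(), dtype=input.dtype, layout=input.layout, device=input.device).

As of version 0.4, this function does not support the out keyword. As an alternative, the old torch.zeros_like(input, out=output) is equivalent to torch.zeros(input.size(), out=output).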

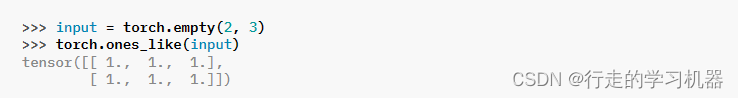
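A short sketch of both functions follows. The shapes and dtypes are arbitrary choices for illustration.

```python
import torch

a = torch.zeros(2, 3)                     # sizes as separate arguments
b = torch.zeros((2, 3))                   # the same shape from a tuple
c = torch.zeros(2, 3, dtype=torch.int32)  # explicit dtype

src = torch.ones(4, 2, dtype=torch.float64)
d = torch.zeros_like(src)                 # inherits shape and dtype from src

print(a.shape, b.shape, c.dtype)
print(d.shape, d.dtype)
```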

9. ONES and ONES_LIKE

torch.ones(*size, *, out=None, dtype=None, layout=torch.strided, device=None, requires_grad=False) → Tensor

Parameters:

size (int…) – a sequence of integers defining the shape of the output tensor. Can be a variable number of arguments or a collection like a list or tuple.

Keyword Arguments:

out (Tensor, optional) – the output tensor.

dtype (torch.dtype, optional) – the desired data type of returned tensor. Default: if None, uses a global default (see torch.set_default_tensor_type()).

layout (torch.layout, optional) – the desired layout of returned Tensor. Default: torch.strided.

device (torch.device, optional) – the desired device of returned tensor. Default: if None, uses the current device for the default tensor type (see torch.set_default_tensor_type()). device will be the CPU for CPU tensor types and the current CUDA device for CUDA tensor types.

requires_grad (bool, optional) – If autograd should record operations on the returned tensor. Default: False.

Returns a tensor filled with the scalar value 1, with the shape defined by the variable argument size.

torch.ones_like(input, *, dtype=None, layout=None, device=None, requires_grad=False, memory_format=torch.preserve_format) → Tensor

Parameters:

input (Tensor) – the size of input will determine size of the output tensor.

Keyword Arguments:

dtype (torch.dtype, optional) – the desired data type of returned Tensor. Default: if None, defaults to the dtype of input.

layout (torch.layout, optional) – the desired layout of returned tensor. Default: if None, defaults to the layout of input.

device (torch.device, optional) – the desired device of returned tensor. Default: if None, defaults to the device of input.

requires_grad (bool, optional) – If autograd should record operations on the returned tensor. Default: False.

memory_format (torch.memory_format, optional) – the desired memory format of returned Tensor. Default: torch.preserve_format.

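Returns a tensor filled with the scalar value 1, with the same size as input. torch.ones_like(input) is equivalent to torch.ones(input.size(), dtype=input.dtype, layout=input.layout, device=input.device).

As of version 0.4, this function does not support the out keyword. As an alternative, the old torch.ones_like(input, out=output) is equivalent to torch.ones(input.size(), out=output).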

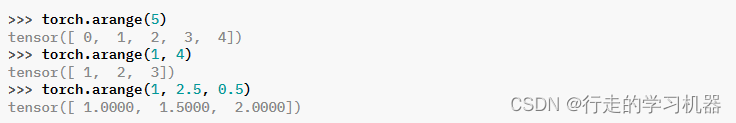
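The following sketch shows the same pattern for ones, including overriding the dtype that ones_like would otherwise inherit. The shapes are arbitrary.

```python
import torch

a = torch.ones(2, 3)
print(a)

src = torch.zeros(3, 2, dtype=torch.int64)
b = torch.ones_like(src)                       # int64, like src
c = torch.ones_like(src, dtype=torch.float32)  # dtype overridden

print(b.dtype, c.dtype)
```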

10. ARANGE

torch.arange(start=0, end, step=1, *, out=None, dtype=None, layout=torch.strided, device=None, requires_grad=False) → Tensor

Parameters:

start (Number) – the starting value for the set of points. Default: 0.

end (Number) – the ending value for the set of points

step (Number) – the gap between each pair of adjacent points. Default: 1.

Keyword Arguments:

out (Tensor, optional) – the output tensor.

dtype (torch.dtype, optional) – the desired data type of returned tensor. Default: if None, uses a global default (see torch.set_default_tensor_type()). If dtype is not given, infer the data type from the other input arguments. If any of start, end, or step are floating-point, the dtype is inferred to be the default dtype, see get_default_dtype(). Otherwise, the dtype is inferred to be torch.int64.

layout (torch.layout, optional) – the desired layout of returned Tensor. Default: torch.strided.

device (torch.device, optional) – the desired device of returned tensor. Default: if None, uses the current device for the default tensor type (see torch.set_default_tensor_type()). device will be the CPU for CPU tensor types and the current CUDA device for CUDA tensor types.

requires_grad (bool, optional) – If autograd should record operations on the returned tensor. Default: False.

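Returns a 1-D tensor of size ⌈(end - start)/step⌉ with values from the interval [start, end), starting at start and increasing by step.

Note that a non-integer step is subject to floating-point rounding errors when comparing against end. To avoid inconsistency, we advise subtracting a small epsilon from end in such cases.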

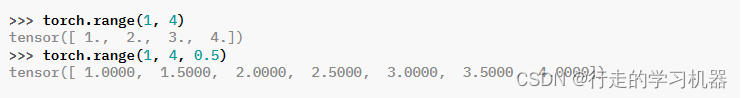

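Difference from torch.range:

torch.range() is closed on both ends, [start, end], whereas torch.arange() is half-open, [start, end). torch.range() is also deprecated in favor of torch.arange().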
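A brief sketch of the half-open interval and the dtype inference rules follows. The length check assumes the number of elements is (end - start)/step rounded up.

```python
import math
import torch

a = torch.arange(5)            # 0, 1, 2, 3, 4 (end is excluded)
b = torch.arange(1, 4)         # 1, 2, 3
c = torch.arange(1, 2.5, 0.5)  # 1.0, 1.5, 2.0

# Number of elements: (end - start) / step, rounded up.
expected_len = math.ceil((2.5 - 1) / 0.5)

print(a, b, c)
print(len(c), expected_len)
print(a.dtype, c.dtype)  # integer arguments give int64; floats give the default dtype
```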

11. LINSPACE

torch.linspace(start, end, steps, *, out=None, dtype=None, layout=torch.strided, device=None, requires_grad=False) → Tensor

Parameters:

start (float) – the starting value for the set of points

end (float) – the ending value for the set of points

steps (int) – size of the constructed tensor

Keyword Arguments:

out (Tensor, optional) – the output tensor.

dtype (torch.dtype, optional) – the data type to perform the computation in. Default: if None, uses the global default dtype (see torch.get_default_dtype()) when both start and end are real, and corresponding complex dtype when either is complex.

layout (torch.layout, optional) – the desired layout of returned Tensor. Default: torch.strided.

device (torch.device, optional) – the desired device of returned tensor. Default: if None, uses the current device for the default tensor type (see torch.set_default_tensor_type()). device will be the CPU for CPU tensor types and the current CUDA device for CUDA tensor types.

requires_grad (bool, optional) – If autograd should record operations on the returned tensor. Default: False.

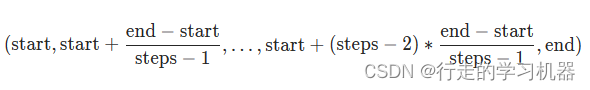

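Creates a one-dimensional tensor of size steps whose values are evenly spaced from start to end, inclusive. In other words, the values are: (start, start + (end - start)/(steps - 1), ..., start + (steps - 2)(end - start)/(steps - 1), end).

From PyTorch 1.11, linspace requires the steps argument. Use steps=100 to restore the previous behavior.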

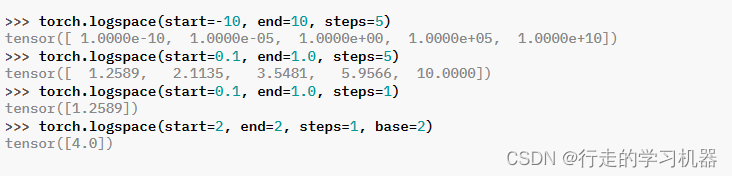
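As a quick illustration of the inclusive endpoints, here is a minimal sketch with arbitrarily chosen ranges.

```python
import torch

a = torch.linspace(3, 10, steps=5)    # spacing is (10 - 3) / (5 - 1) = 1.75
b = torch.linspace(-10, 10, steps=5)  # both endpoints are included
c = torch.linspace(0, 1, steps=1)     # a single step yields only start

print(a)
print(b)
print(c)
```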

12. LOGSPACE

torch.logspace(start, end, steps, base=10.0, *, out=None, dtype=None, layout=torch.strided, device=None, requires_grad=False) → Tensor

Parameters:

start (float) – the starting value for the set of points

end (float) – the ending value for the set of points

steps (int) – size of the constructed tensor

base (float, optional) – base of the logarithm function. Default: 10.0.

Keyword Arguments:

out (Tensor, optional) – the output tensor.

dtype (torch.dtype, optional) – the data type to perform the computation in. Default: if None, uses the global default dtype (see torch.get_default_dtype()) when both start and end are real, and corresponding complex dtype when either is complex.

layout (torch.layout, optional) – the desired layout of returned Tensor. Default: torch.strided.

device (torch.device, optional) – the desired device of returned tensor. Default: if None, uses the current device for the default tensor type (see torch.set_default_tensor_type()). device will be the CPU for CPU tensor types and the current CUDA device for CUDA tensor types.

requires_grad (bool, optional) – If autograd should record operations on the returned tensor. Default: False.
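torch.logspace() creates a one-dimensional tensor of size steps whose values are evenly spaced on a logarithmic scale, from base raised to start up to base raised to end, inclusive. A minimal sketch with arbitrarily chosen arguments:

```python
import torch

a = torch.logspace(0, 3, steps=4)          # 10**0, 10**1, 10**2, 10**3
b = torch.logspace(0, 4, steps=5, base=2)  # 2**0, 2**1, ..., 2**4

print(a)
print(b)
```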

13. EYE

torch.eye(n, m=None, *, out=None, dtype=None, layout=torch.strided, device=None, requires_grad=False) → Tensor

Parameters:

n (int) – the number of rows

m (int, optional) – the number of columns with default being n

Keyword Arguments:

out (Tensor, optional) – the output tensor.

dtype (torch.dtype, optional) – the desired data type of returned tensor. Default: if None, uses a global default (see torch.set_default_tensor_type()).

layout (torch.layout, optional) – the desired layout of returned Tensor. Default: torch.strided.

device (torch.device, optional) – the desired device of returned tensor. Default: if None, uses the current device for the default tensor type (see torch.set_default_tensor_type()). device will be the CPU for CPU tensor types and the current CUDA device for CUDA tensor types.

requires_grad (bool, optional) – If autograd should record operations on the returned tensor. Default: False.

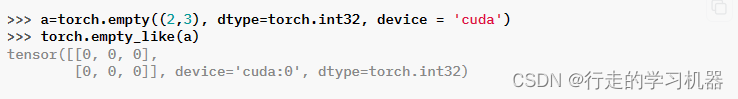
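torch.eye() returns a 2-D tensor with ones on the diagonal and zeros elsewhere. The sketch below shows the square case and a rectangular case using the optional m argument.

```python
import torch

square = torch.eye(3)   # 3 x 3 identity matrix
rect = torch.eye(2, 3)  # 2 rows, 3 columns, ones on the main diagonal

print(square)
print(rect)
```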

14. EMPTY and EMPTY_LIKE

torch.empty(*size, *, out=None, dtype=None, layout=torch.strided, device=None, requires_grad=False, pin_memory=False, memory_format=torch.contiguous_format) → Tensor

Parameters:

size (int…) – a sequence of integers defining the shape of the output tensor. Can be a variable number of arguments or a collection like a list or tuple.

Keyword Arguments:

out (Tensor, optional) – the output tensor.

dtype (torch.dtype, optional) – the desired data type of returned tensor. Default: if None, uses a global default (see torch.set_default_tensor_type()).

layout (torch.layout, optional) – the desired layout of returned Tensor. Default: torch.strided.

device (torch.device, optional) – the desired device of returned tensor. Default: if None, uses the current device for the default tensor type (see torch.set_default_tensor_type()). device will be the CPU for CPU tensor types and the current CUDA device for CUDA tensor types.

requires_grad (bool, optional) – If autograd should record operations on the returned tensor. Default: False.

pin_memory (bool, optional) – If set, returned tensor would be allocated in the pinned memory. Works only for CPU tensors. Default: False.

memory_format (torch.memory_format, optional) – the desired memory format of returned Tensor. Default: torch.contiguous_format.

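Returns a tensor filled with uninitialized data. Its shape is defined by size.

If torch.use_deterministic_algorithms() is set to True, the output tensor is initialized to prevent any possible nondeterministic behavior from using the data as an input to an operation. Floating-point and complex tensors are filled with NaN, and integer tensors are filled with the maximum value.

torch.empty_like(input, *, dtype=None, layout=None, device=None, requires_grad=False, memory_format=torch.preserve_format) → Tensor

Returns an uninitialized tensor with the same size as input. torch.empty_like(input) is equivalent to torch.empty(input.size(), dtype=input.dtype, layout=input.layout, device=input.device).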

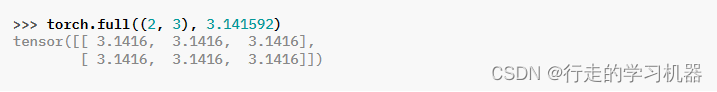
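Because the contents of an empty tensor are arbitrary, a typical pattern is to allocate first and fill afterwards. A minimal sketch, with illustrative shapes and fill value:

```python
import torch

e = torch.empty(2, 3)  # values are whatever happened to be in memory
e.fill_(0.5)           # overwrite before reading
print(e)

src = torch.zeros(4, 2, dtype=torch.int64)
el = torch.empty_like(src)  # same shape and dtype as src, contents undefined
print(el.shape, el.dtype)
```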

15. FULL and FULL_LIKE

torch.full(size, fill_value, *, out=None, dtype=None, layout=torch.strided, device=None, requires_grad=False) → Tensor

Parameters:

size (int…) – a list, tuple, or torch.Size of integers defining the shape of the output tensor.

fill_value (Scalar) – the value to fill the output tensor with.

Keyword Arguments:

out (Tensor, optional) – the output tensor.

dtype (torch.dtype, optional) – the desired data type of returned tensor. Default: if None, uses a global default (see torch.set_default_tensor_type()).

layout (torch.layout, optional) – the desired layout of returned Tensor. Default: torch.strided.

device (torch.device, optional) – the desired device of returned tensor. Default: if None, uses the current device for the default tensor type (see torch.set_default_tensor_type()). device will be the CPU for CPU tensor types and the current CUDA device for CUDA tensor types.

requires_grad (bool, optional) – If autograd should record operations on the returned tensor. Default: False.

Creates a tensor of size size filled with fill_value. The tensor's dtype is inferred from fill_value.

torch.full_like(input, fill_value, *, dtype=None, layout=torch.strided, device=None, requires_grad=False, memory_format=torch.preserve_format) → Tensor

Returns a tensor with the same size as input, filled with fill_value. torch.full_like(input, fill_value) is equivalent to torch.full(input.size(), fill_value, dtype=input.dtype, layout=input.layout, device=input.device).
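The dtype inference for full and the dtype inheritance for full_like can be seen in a short sketch. The fill values are arbitrary, and the first check assumes the global default dtype has not been changed.

```python
import torch

a = torch.full((2, 3), 3.14)  # float fill value: default floating-point dtype
b = torch.full((2,), 7)       # integer fill value: int64

src = torch.zeros(2, 2, dtype=torch.float64)
c = torch.full_like(src, 9)   # takes shape and dtype from src

print(a.dtype, b.dtype, c.dtype)
print(c)
```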
