Testing the Hidataplus-3.3.2.0-005 public beta on openEuler release 22.03 (LTS-SP2)

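1 Overview

This article describes deploying the hidataplus-3.3.2.0-005 public beta on openEuler release 22.03 (LTS-SP2), installed with the minimal profile.

The following components were tested:

- hadoop, yarn, zookeeper, hive, impala.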

1.1 Servers

| No. | IP | OS | Deployed software | Role | Notes |
|---|---|---|---|---|---|
| 01 | 10.10.0.3 | centos7.4 | nginx | HTTP yum repository | The yum repository is hosted on a server outside the Ambari cluster so that it does not have to be reconfigured for every reinstall during testing. |
| 02 | 10.10.0.45 | openEuler release 22.03 (LTS-SP2) | jdk, ambari-server, ambari-agent | ambari-server and ambari-agent | The agent and the server share one host to save machines. In production they are best kept separate. |
| 03 | 10.10.0.46 | openEuler release 22.03 (LTS-SP2) | jdk, ambari-agent | ambari-agent | |
| 04 | 10.10.0.47 | openEuler release 22.03 (LTS-SP2) | jdk, ambari-agent | ambari-agent | |

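1.2 Server initialization

In production, Ansible is recommended for applying this configuration in bulk.

The initialization covered: SSH trust between hosts, hostnames, disabling SELinux, disabling the firewall, and raising the open-file limit.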
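For a single node, the steps listed above might look like the sketch below. It is an illustration, not the author's Ansible playbook: the function name `init_node`, the limit value 65536, and the drop-in file name `99-nofile.conf` are assumptions, and SSH trust setup is left out. `DRY_RUN` defaults to 1, so the commands are only printed until it is set to 0.

```shell
# Sketch of the per-node initialization (hostname, SELinux, firewall, open-file limit).
# Assumptions: init_node, the 65536 limit and the 99-nofile.conf name are illustrative.

# Print the command when DRY_RUN=1 (the default); execute it otherwise.
run() {
  if [[ "${DRY_RUN:-1}" == 1 ]]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

init_node() {
  local name="$1" nofile="${2:-65536}"
  run hostnamectl set-hostname "$name"
  # Switch SELinux to permissive now and disable it from the next boot on.
  run setenforce 0
  run sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
  # Stop the firewall and keep it from starting at boot.
  run systemctl disable --now firewalld
  # Raise the open-file limit for all users.
  run sh -c "printf '* soft nofile %s\n* hard nofile %s\n' $nofile $nofile > /etc/security/limits.d/99-nofile.conf"
}

init_node node01
```

Running it with `DRY_RUN=0` as root applies the changes; the new file limit only takes effect for sessions opened afterwards.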


1.3 Installation files

Netdisk link: https://pan.baidu.com/s/1z_Yk-inzpZnOvtG8EHo_ow (extraction code: wj68)

- ambari: ambari-2.7.6.0-25-redhat9-x86_64.tar.gz. Note: the repodata bundled in this package is broken; regenerate it with createrepo.

- HDP-GPL: HDP-GPL-3.3.2.0-005-redhat789-x86_64.tar.gz

- HDP-UTILS: HDP-UTILS-1.1.0.22-centos7_8-x86_64.tar.gz

- HDP: HDP-3.3.2.0-005-redhat789-x86_64-2.tar.gz

1.4 HTTP yum repository

Run the steps in this section on 10.10.0.3.

1.4.1 nginx

1.4.1.1 Installation

rpm -ivh nginx-1.20.2-1.el7.ngx.x86_64.rpm

mkdir -p /opt/apps/nginx/temp

mkdir -p /opt/apps/nginx/cache

mkdir -p /opt/apps/nginx/html

1.4.1.2 Configuration files

/etc/nginx/nginx.conf

user nginx;

worker_processes 4;

error_log /var/log/nginx/error.log notice;

pid /var/run/nginx.pid;

events {

worker_connections 1000000;

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

log_format main '$remote_addr|$remote_user|$time_local'

'|$request|$status|$body_bytes_sent|$http_referer'

'|$http_user_agent|$http_x_forwarded_for|$upstream_addr'

'|$upstream_status|$upstream_response_time|$request_time';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

gzip on;

gzip_comp_level 6;

#gzip_types text/plain text/css application/json application/x-javascript text/xml application/xml application/xml+rss text/javascript image/svg+xml image/x-icon application/javascript;

gzip_types *;

gzip_proxied any;

proxy_connect_timeout 500;

proxy_read_timeout 600;

proxy_send_timeout 500;

proxy_buffer_size 128k;

proxy_buffers 4 128k;

proxy_busy_buffers_size 256k;

proxy_temp_file_write_size 128k;

proxy_temp_path /opt/apps/nginx/temp;

proxy_cache_path /opt/apps/nginx/cache levels=1:2 keys_zone=cache_one:2000m inactive=1d max_size=30g;

include /etc/nginx/conf.d/*.conf;

}

/etc/nginx/conf.d/default.conf

server {

listen 5000;

server_name localhost;

root html;

index index.html index.htm;

location / {

root /opt/apps/nginx/html;

autoindex on;

autoindex_exact_size off;

autoindex_localtime on;

charset utf-8;

}

}

1.4.1.3 Start and stop

Start: systemctl start nginx

Stop: systemctl stop nginx
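Before the first start it can help to let nginx check the two configuration files. The sketch below is a suggestion rather than part of the original procedure; `start_nginx` and the dry-run wrapper `run` are hypothetical helper names, and `DRY_RUN` defaults to 1 so nothing is executed until it is set to 0.

```shell
# Sketch: validate the nginx configuration, then start the service and enable it at boot.

# Print the command when DRY_RUN=1 (the default); execute it otherwise.
run() {
  if [[ "${DRY_RUN:-1}" == 1 ]]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

start_nginx() {
  # nginx -t parses the configuration and exits non-zero on errors.
  run nginx -t || return 1
  # enable --now starts the service and also enables it at boot.
  run systemctl enable --now nginx
}

start_nginx
```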

1.4.2 Configure the yum repositories

1.4.2.1 ambari repository

mkdir -p /opt/apps/nginx/html/ambari

tar -zxf ambari-2.7.6.0-25-redhat9-x86_64.tar.gz -C /opt/apps/nginx/html/ambari
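Section 1.3 notes that the repodata shipped in the ambari tarball is broken and has to be regenerated with createrepo. A minimal sketch of that step follows. It assumes the createrepo package is installed on 10.10.0.3 and that the repository root is the directory the baseurl in 2.1.1 points at; `rebuild_repodata` and `run` are hypothetical helper names. `DRY_RUN` defaults to 1, so the commands are only printed.

```shell
# Sketch: replace the broken repodata of the extracted ambari repository.
# Assumption: the repository root is /opt/apps/nginx/html/ambari/2.7.6.0-25.

# Print the command when DRY_RUN=1 (the default); execute it otherwise.
run() {
  if [[ "${DRY_RUN:-1}" == 1 ]]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

rebuild_repodata() {
  local repo_root="${1%/}"
  # Drop the metadata that came with the tarball, then generate fresh metadata.
  run rm -rf "$repo_root/repodata"
  run createrepo "$repo_root"
}

rebuild_repodata /opt/apps/nginx/html/ambari/2.7.6.0-25
```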

1.4.2.2 HDP repositories

mkdir -p /opt/apps/nginx/html/HDP

mkdir -p /opt/apps/nginx/html/HDP-GPL

mkdir -p /opt/apps/nginx/html/HDP-UTILS

tar -zxf HDP-GPL-3.3.2.0-005-redhat789-x86_64.tar.gz -C /opt/apps/nginx/html/HDP-GPL/

tar -zxf HDP-UTILS-1.1.0.22-centos7_8-x86_64.tar.gz -C /opt/apps/nginx/html/HDP-UTILS/

tar -zxf HDP-3.3.2.0-005-redhat789-x86_64-2.tar.gz -C /opt/apps/nginx/html/HDP/

- HDP: http://10.10.0.3:5000/HDP/3.3.2.0-005

- HDP-GPL: http://10.10.0.3:5000/HDP-GPL/gpl-3.3.2.0-005

- HDP-UTILS: http://10.10.0.3:5000/HDP-UTILS/HDP-UTILS/centos7/1.1.0.22
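A quick way to confirm that nginx serves the three repositories is to request each one's `repodata/repomd.xml`, the first file yum fetches. The sketch below assumes each URL listed above is a repository root that contains a `repodata` directory and that curl is available; `repomd_url` and `check_repos` are hypothetical helper names.

```shell
# Sketch: check that each repository base URL serves its yum metadata.

# Build the metadata URL for a repository base URL (a trailing slash is tolerated).
repomd_url() {
  printf '%s/repodata/repomd.xml\n' "${1%/}"
}

# Return 0 only if every repository serves repomd.xml (requires curl).
check_repos() {
  local base rc=0
  for base in "$@"; do
    if curl -fsS -o /dev/null "$(repomd_url "$base")"; then
      echo "OK   $base"
    else
      echo "FAIL $base"
      rc=1
    fi
  done
  return "$rc"
}
```

For example: `check_repos http://10.10.0.3:5000/HDP/3.3.2.0-005 http://10.10.0.3:5000/HDP-GPL/gpl-3.3.2.0-005 http://10.10.0.3:5000/HDP-UTILS/HDP-UTILS/centos7/1.1.0.22`.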

2 Ambari-server

Run the following steps on 10.10.0.45.

2.1 Configure the yum repository

2.1.1 ambari repository

cat > /etc/yum.repos.d/ambari.repo << EOF

#VERSION_NUMBER=2.7.6.0-25

[ambari-2.7.6.0-25]

name=ambari Version - ambari-2.7.6.0-25

baseurl=http://10.10.0.3:5000/ambari/2.7.6.0-25/

gpgcheck=0

gpgkey=http://10.10.0.3:5000/ambari/2.7.6.0-25/RPM-GPG-KEY/RPM-GPG-KEY-Jenkins

enabled=1

priority=1

EOF

2.2 Install ambari-server

yum install ambari-server -y

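2.3 Configure MySQL

The installation steps are omitted; the MySQL connection details are as follows. Version: MySQL 5.7

Host: 10.10.0.202; database: ambari_20240102; user: ambari; password: ambari123

2.4 Configure the JDBC driver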

mkdir -p /usr/share/java

cd /root && wget https://cdn.mysql.com/archives/mysql-connector-java-5.1/mysql-connector-java-5.1.49.tar.gz

tar -zxf mysql-connector-java-5.1.49.tar.gz

cp /root/mysql-connector-java-5.1.49/mysql-connector-java-5.1.49-bin.jar /usr/share/java/mysql-connector-java-5.1.49-bin.jar

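2.5 Import the schema into MySQL

Run this step on a server that has the mysql command-line client, after copying the SQL file to it (to /root in this example).

The SQL file is located at /var/lib/ambari-server/resources/Ambari-DDL-MySQL-CREATE.sql on the ambari-server host. Import it into the database from 2.3; the DDL script does not select a database itself, so select one before running source.

mysql -h10.10.0.202 -uambari -p

use ambari_20240102;

source /root/Ambari-DDL-MySQL-CREATE.sql;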
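The same import can be done in one command instead of an interactive session. This is a sketch under the assumption that the database from 2.3 already exists and the SQL file has been copied to /root; `import_ambari_ddl` and `run` are hypothetical helper names, and `DRY_RUN` defaults to 1 so the command is only printed.

```shell
# Sketch: import the Ambari DDL without opening an interactive mysql session.

# Print the command when DRY_RUN=1 (the default); execute it otherwise.
run() {
  if [[ "${DRY_RUN:-1}" == 1 ]]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

import_ambari_ddl() {
  local host="$1" user="$2" db="$3" sql="$4"
  # -p (with no value attached) prompts for the password; the next argument is the database.
  # -e runs the client's "source" command against that database.
  run mysql -h "$host" -u "$user" -p "$db" -e "source $sql"
}

import_ambari_ddl 10.10.0.202 ambari ambari_20240102 /root/Ambari-DDL-MySQL-CREATE.sql
```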

2.6 Run ambari-server setup

[root@node01 ~]# ambari-server setup

Using python /usr/bin/python

Setup ambari-server

Checking SELinux...

SELinux status is 'enabled'

SELinux mode is 'permissive'

WARNING: SELinux is set to 'permissive' mode and temporarily disabled.

OK to continue [y/n] (y)? y

Customize user account for ambari-server daemon [y/n] (n)? y

Enter user account for ambari-server daemon (root):

Adjusting ambari-server permissions and ownership...

Checking firewall status...

Checking JDK...

[1] Oracle JDK 1.8 + Java Cryptography Extension (JCE) Policy Files 8

[2] Custom JDK

==============================================================================

Enter choice (1): 2

WARNING: JDK must be installed on all hosts and JAVA_HOME must be valid on all hosts.

WARNING: JCE Policy files are required for configuring Kerberos security. If you plan to use Kerberos,please make sure JCE Unlimited Strength Jurisdiction Policy Files are valid on all hosts.

Path to JAVA_HOME: /opt/apps/java

Validating JDK on Ambari Server...done.

Check JDK version for Ambari Server...

JDK version found: 8

Minimum JDK version is 8 for Ambari. Skipping to setup different JDK for Ambari Server.

Checking GPL software agreement...

GPL License for LZO: https://www.gnu.org/licenses/old-licenses/gpl-2.0.en.html

Enable Ambari Server to download and install GPL Licensed LZO packages [y/n] (n)? y

Completing setup...

Configuring database...

Enter advanced database configuration [y/n] (n)? y

Configuring database...

==============================================================================

Choose one of the following options:

[1] - PostgreSQL (Embedded)

[2] - Oracle

[3] - MySQL / MariaDB

[4] - PostgreSQL

[5] - Microsoft SQL Server (Tech Preview)

[6] - SQL Anywhere

[7] - BDB

==============================================================================

Enter choice (1): 3

Hostname (localhost): 10.10.0.202

Port (3306):

Database name (ambari):

Username (ambari):

Enter Database Password (bigdata):

Re-enter password:

Configuring ambari database...

Enter full path to custom jdbc driver: /usr/share/java/mysql-connector-java-5.1.49-bin.jar

Configuring remote database connection properties...

WARNING: Before starting Ambari Server, you must run the following DDL directly from the database shell to create the schema: /var/lib/ambari-server/resources/Ambari-DDL-MySQL-CREATE.sql

Proceed with configuring remote database connection properties [y/n] (y)? y

Extracting system views...

ambari-admin-2.7.6.0.0.jar

..

Ambari repo file doesn't contain latest json url, skipping repoinfos modification

Adjusting ambari-server permissions and ownership...

Ambari Server 'setup' completed successfully.

[root@node01 ~]#
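The interactive answers above can in principle be passed on the command line, since ambari-server setup has a silent mode (`-s`). The sketch below is an assumption-laden illustration, not the author's procedure: the flags are the ones I understand Ambari 2.7 to accept and should be checked against `ambari-server setup --help`; it does not reproduce every answer in the transcript (for example the GPL/LZO prompt); and `silent_setup` and `run` are hypothetical helper names. `DRY_RUN` defaults to 1, so the command is only printed.

```shell
# Sketch: non-interactive ambari-server setup against the external MySQL database.
# Assumption: flag names as in Ambari 2.7; verify with "ambari-server setup --help".

# Print the command when DRY_RUN=1 (the default); execute it otherwise.
run() {
  if [[ "${DRY_RUN:-1}" == 1 ]]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

silent_setup() {
  local db_name="$1" db_password="$2"
  run ambari-server setup -s \
    -j /opt/apps/java \
    --database=mysql \
    --databasehost=10.10.0.202 \
    --databaseport=3306 \
    --databasename="$db_name" \
    --databaseusername=ambari \
    --databasepassword="$db_password"
}
```

The database name is a parameter because the transcript accepts the default name (ambari) while 2.3 lists ambari_20240102; pass whichever database actually holds the imported schema.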

2.7 Start ambari-server

[root@node01 ~]# ambari-server start

Using python /usr/bin/python

Starting ambari-server

Ambari Server running with administrator privileges.

Organizing resource files at /var/lib/ambari-server/resources...

Ambari database consistency check started...

Server PID at: /var/run/ambari-server/ambari-server.pid

Server out at: /var/log/ambari-server/ambari-server.out

Server log at: /var/log/ambari-server/ambari-server.log

Waiting for server start..............................

Server started listening on 8080

DB configs consistency check: no errors and warnings were found.

Ambari Server 'start' completed successfully.

[root@node01 ~]#

2.8 Open the ambari-server web UI

http://10.10.0.45:8080

Default credentials: admin/admin

3 Ambari-agent

3.1 Configure the ambari repository

cat > /etc/yum.repos.d/ambari.repo << EOF

#VERSION_NUMBER=2.7.6.0-25

[ambari-2.7.6.0-25]

name=ambari Version - ambari-2.7.6.0-25

baseurl=http://10.10.0.3:5000/ambari/2.7.6.0-25/

gpgcheck=0

gpgkey=http://10.10.0.3:5000/ambari/2.7.6.0-25/RPM-GPG-KEY/RPM-GPG-KEY-Jenkins

enabled=1

priority=1

EOF
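The same repo file is needed on the server and on every agent, so it can be convenient to generate it from a small function. This is a sketch; `write_ambari_repo` is a hypothetical helper name, and the generated content mirrors the heredoc above.

```shell
# Sketch: write the ambari yum repo file for a given base URL and version.
write_ambari_repo() {
  local dest="$1" base_url="${2%/}" version="$3"
  cat > "$dest" <<EOF
#VERSION_NUMBER=$version
[ambari-$version]
name=ambari Version - ambari-$version
baseurl=$base_url/
gpgcheck=0
gpgkey=$base_url/RPM-GPG-KEY/RPM-GPG-KEY-Jenkins
enabled=1
priority=1
EOF
}
```

For example: `write_ambari_repo /etc/yum.repos.d/ambari.repo http://10.10.0.3:5000/ambari/2.7.6.0-25 2.7.6.0-25`.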

3.2 Install ambari-agent

yum install ambari-agent -y

3.3 Set the ambari-server address

In /etc/ambari-agent/conf/ambari-agent.ini, set hostname in the [server] section to the ambari-server host:

[server]

hostname=node01

url_port=8440

secured_url_port=8441

connect_retry_delay=10

max_reconnect_retry_delay=30
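When several agents have to be configured, the edit can be scripted with sed. The sketch below assumes GNU sed (for `-i`) and that the server name contains no `/` characters; `set_agent_server` is a hypothetical helper name.

```shell
# Sketch: point an ambari-agent.ini at the ambari-server host.
set_agent_server() {
  local ini="$1" server="$2"
  # Only rewrite hostname= between the [server] header and the next section header,
  # so a hostname key in any other section is left alone.
  sed -i '/^\[server\]/,/^\[/ s/^hostname=.*/hostname='"$server"'/' "$ini"
}
```

For example: `set_agent_server /etc/ambari-agent/conf/ambari-agent.ini node01`.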

3.4 Start ambari-agent

ambari-agent start

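4 HDP installation through the web UI

4.1 Ambari login page

4.2 Set the cluster name: hdpdemo

4.3 Configure the local repository

4.4 Select hosts

4.5 Select the services to install

4.6 Assign services to hosts

4.7 Assign slaves and clients to hosts

4.8 Configure services (some require a database connection)

4.9 Set up the MySQL JDBC driver

Run this on the ambari-server host: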

ambari-server setup --jdbc-db=mysql --jdbc-driver=/usr/share/java/mysql-connector-java-5.1.49-bin.jar

[root@node01 java]# ambari-server setup --jdbc-db=mysql --jdbc-driver=/usr/share/java/mysql-connector-java-5.1.49-bin.jar

Using python /usr/bin/python

Setup ambari-server

Copying /usr/share/java/mysql-connector-java-5.1.49-bin.jar to /var/lib/ambari-server/resources/mysql-connector-java-5.1.49-bin.jar

Creating symlink /var/lib/ambari-server/resources/mysql-connector-java-5.1.49-bin.jar to /var/lib/ambari-server/resources/mysql-connector-java.jar

If you are updating existing jdbc driver jar for mysql with mysql-connector-java-5.1.49-bin.jar. Please remove the old driver jar, from all hosts. Restarting services that need the driver, will automatically copy the new jar to the hosts.

JDBC driver was successfully initialized.

Ambari Server 'setup' completed successfully.

[root@node01 java]#

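4.10 Configure users and groups

4.11 Configure installation paths

4.12 Start the installation

4.13 Installation complete

5 Configure service high availability

5.1 Configure ResourceManager HA

5.1.1 Click: Enable ResourceManager HA

5.1.2 The following dialog appears:

5.1.3 Select the hosts, as shown:

5.1.4 The next dialog shows the installation details; keep the defaults

5.1.5 The next dialog starts the installation

5.1.6 A startup failure is reported, as shown: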

See section 6.2 for the fix.

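5.1.7 Restart all services, as shown

5.2 Configure NameNode HA

5.2.1 Click: Enable NameNode HA

5.2.2 In the dialog that appears, enter the cluster name (the nameservice ID): mycluster, then click Next

5.2.3 Assign roles to hosts

5.2.4 The following dialog appears; run the commands it shows

Follow the instructions in the screenshot:

5.2.5 Start the deployment

5.2.6 Screenshot of the successful installation

6 Appendix

6.1 ResourceManager fails to start after installation

Selected services: hdfs, yarn, tez, hive, zookeeper, impala.

ambari dashboard:

Checking the services showed that the ResourceManager failed to start: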

2024-01-03 12:53:13,440 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":0,"fileId":16421,"group":"hdfs","length":0,"modificationTime":1704257332851,"owner":"hdfs","pathSuffix":"","permission":"555","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2024-01-03 12:53:13,441 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://node01:50070/webhdfs/v1/hdp/apps/3.3.2.0-005/hbase?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmptBPX9A 2>/tmp/tmp_e6OSj''] {'logoutput': None, 'quiet': False}

2024-01-03 12:53:13,638 - call returned (0, '')

2024-01-03 12:53:13,639 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":0,"fileId":16421,"group":"hdfs","length":0,"modificationTime":1704257332851,"owner":"hdfs","pathSuffix":"","permission":"555","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2024-01-03 12:53:13,641 - HdfsResource['/hdp/apps/3.3.2.0-005/hbase/hbase.tar.gz'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.3.2.0-005/hadoop/bin', 'keytab': [EMPTY], 'source': '/var/lib/ambari-agent/tmp/yarn-ats/3.3.2.0-005/hbase.tar.gz', 'dfs_type': 'HDFS', 'default_fs': 'hdfs://node01:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': [EMPTY], 'user': 'hdfs', 'owner': 'hdfs', 'group': 'hadoop', 'hadoop_conf_dir': '/usr/hdp/3.3.2.0-005/hadoop/conf', 'type': 'file', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0444}

2024-01-03 12:53:13,642 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://node01:50070/webhdfs/v1/hdp/apps/3.3.2.0-005/hbase/hbase.tar.gz?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpBluQmv 2>/tmp/tmpx2yGsO''] {'logoutput': None, 'quiet': False}

2024-01-03 12:53:13,804 - call returned (0, '')

2024-01-03 12:53:13,805 - get_user_call_output returned (0, u'{"RemoteException":{"exception":"FileNotFoundException","javaClassName":"java.io.FileNotFoundException","message":"File does not exist: /hdp/apps/3.3.2.0-005/hbase/hbase.tar.gz"}}404', u'')

2024-01-03 12:53:13,806 - Creating new file /hdp/apps/3.3.2.0-005/hbase/hbase.tar.gz in DFS

2024-01-03 12:53:13,807 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT --data-binary @/var/lib/ambari-agent/tmp/yarn-ats/3.3.2.0-005/hbase.tar.gz -H '"'"'Content-Type: application/octet-stream'"'"' '"'"'http://node01:50070/webhdfs/v1/hdp/apps/3.3.2.0-005/hbase/hbase.tar.gz?op=CREATE&user.name=hdfs&overwrite=True&permission=444'"'"' 1>/tmp/tmph6UrLZ 2>/tmp/tmpuwh1hD''] {'logoutput': None, 'quiet': False}

2024-01-03 12:53:16,989 - call returned (2, '')

2024-01-03 12:53:16,990 - Error occured while copying hbase tarball into hdfs '/hdp/apps/3.3.2.0-005/hbase'.

Command failed after 1 tries

Fix:

cp /var/lib/ambari-agent/tmp/yarn-ats/3.3.2.0-005/hbase.tar.gz /tmp/hbase.tar.gz

chmod 777 /tmp/hbase.tar.gz

sudo su hdfs -l -c "hadoop fs -put /tmp/hbase.tar.gz /hdp/apps/3.3.2.0-005/hbase"

sudo su hdfs -l -c "hadoop fs -chmod 777 /hdp/apps/3.3.2.0-005/hbase/hbase.tar.gz"

Return to the Ambari web UI and start the ResourceManager service.

6.2 ResourceManager fails to start while configuring ResourceManager HA

Log from ResourceManager01:

Wed Jan 3 13:17:09 CST 2024 Exitting from script..

2024-01-03 13:17:09,816 - Copying hbase tarball into hdfs path'/hdp/apps/3.3.2.0-005/hbase/rm1'.

2024-01-03 13:17:09,816 - HdfsResource['/hdp/apps/3.3.2.0-005/hbase/rm1'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.3.2.0-005/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://node01:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': [EMPTY], 'user': 'hdfs', 'owner': 'hdfs', 'group': 'hdfs', 'hadoop_conf_dir': '/usr/hdp/3.3.2.0-005/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0555}

2024-01-03 13:17:09,818 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://node01:50070/webhdfs/v1/hdp/apps/3.3.2.0-005/hbase/rm1?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmp_BtyU8 2>/tmp/tmpKfAeCQ''] {'logoutput': None, 'quiet': False}

2024-01-03 13:17:09,996 - call returned (0, '')

2024-01-03 13:17:09,996 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":0,"fileId":16533,"group":"hdfs","length":0,"modificationTime":1704258899086,"owner":"hdfs","pathSuffix":"","permission":"555","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2024-01-03 13:17:09,999 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://node01:50070/webhdfs/v1/hdp/apps/3.3.2.0-005/hbase/rm1?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpQyDxXF 2>/tmp/tmpweKVWJ''] {'logoutput': None, 'quiet': False}

2024-01-03 13:17:10,156 - call returned (0, '')

2024-01-03 13:17:10,157 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":0,"fileId":16533,"group":"hdfs","length":0,"modificationTime":1704258899086,"owner":"hdfs","pathSuffix":"","permission":"555","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2024-01-03 13:17:10,158 - HdfsResource['/hdp/apps/3.3.2.0-005/hbase/rm1/hbase.tar.gz'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.3.2.0-005/hadoop/bin', 'keytab': [EMPTY], 'source': '/var/lib/ambari-agent/tmp/yarn-ats/3.3.2.0-005/hbase.tar.gz', 'dfs_type': 'HDFS', 'default_fs': 'hdfs://node01:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': [EMPTY], 'user': 'hdfs', 'owner': 'hdfs', 'group': 'hadoop', 'hadoop_conf_dir': '/usr/hdp/3.3.2.0-005/hadoop/conf', 'type': 'file', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0444}

2024-01-03 13:17:10,160 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://node01:50070/webhdfs/v1/hdp/apps/3.3.2.0-005/hbase/rm1/hbase.tar.gz?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpI2eg6H 2>/tmp/tmpva7P_I''] {'logoutput': None, 'quiet': False}

2024-01-03 13:17:10,318 - call returned (0, '')

2024-01-03 13:17:10,319 - get_user_call_output returned (0, u'{"RemoteException":{"exception":"FileNotFoundException","javaClassName":"java.io.FileNotFoundException","message":"File does not exist: /hdp/apps/3.3.2.0-005/hbase/rm1/hbase.tar.gz"}}404', u'')

2024-01-03 13:17:10,320 - Creating new file /hdp/apps/3.3.2.0-005/hbase/rm1/hbase.tar.gz in DFS

2024-01-03 13:17:10,322 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT --data-binary @/var/lib/ambari-agent/tmp/yarn-ats/3.3.2.0-005/hbase.tar.gz -H '"'"'Content-Type: application/octet-stream'"'"' '"'"'http://node01:50070/webhdfs/v1/hdp/apps/3.3.2.0-005/hbase/rm1/hbase.tar.gz?op=CREATE&user.name=hdfs&overwrite=True&permission=444'"'"' 1>/tmp/tmpYglg7g 2>/tmp/tmpr6vLoQ''] {'logoutput': None, 'quiet': False}

2024-01-03 13:17:13,001 - call returned (2, '')

2024-01-03 13:17:13,002 - Error occured while copying hbase tarball into hdfs '/hdp/apps/3.3.2.0-005/hbase/rm1'.

Command failed after 1 tries

Fix: on the rm01 node (node01), run:

rm -rf /tmp/hbase.tar.gz

cp /var/lib/ambari-agent/tmp/yarn-ats/3.3.2.0-005/hbase.tar.gz /tmp/hbase.tar.gz

chmod 777 /tmp/hbase.tar.gz

sudo su hdfs -l -c "hadoop fs -put /tmp/hbase.tar.gz /hdp/apps/3.3.2.0-005/hbase/rm1"

sudo su hdfs -l -c "hadoop fs -chmod 777 /hdp/apps/3.3.2.0-005/hbase/rm1/hbase.tar.gz"

Return to the Ambari web UI and start the ResourceManager service.

Likewise, on the rm02 node (node03):

rm -rf /tmp/hbase.tar.gz

cp /var/lib/ambari-agent/tmp/yarn-ats/3.3.2.0-005/hbase.tar.gz /tmp/hbase.tar.gz

chmod 777 /tmp/hbase.tar.gz

sudo su hdfs -l -c "hadoop fs -put /tmp/hbase.tar.gz /hdp/apps/3.3.2.0-005/hbase/rm2"

sudo su hdfs -l -c "hadoop fs -chmod 777 /hdp/apps/3.3.2.0-005/hbase/rm2/hbase.tar.gz"
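The workaround in 6.1 and 6.2 is the same four commands with a different HDFS target directory, so it can be wrapped in one function. This is a sketch of the commands above, not a fix for the underlying WebHDFS upload failure; `put_hbase_tarball` and `run` are hypothetical helper names, and the `-f` option on `hadoop fs -put` (overwrite an existing file) is an addition to the original commands. `DRY_RUN` defaults to 1, so the commands are only printed.

```shell
# Sketch: upload the yarn-ats hbase tarball to a given HDFS directory by hand.

# Print the command when DRY_RUN=1 (the default); execute it otherwise.
run() {
  if [[ "${DRY_RUN:-1}" == 1 ]]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

put_hbase_tarball() {
  local target_dir="${1%/}"
  local src=/var/lib/ambari-agent/tmp/yarn-ats/3.3.2.0-005/hbase.tar.gz
  local tmp=/tmp/hbase.tar.gz
  # Copy to /tmp so that the hdfs user can read the file.
  run cp -f "$src" "$tmp"
  run chmod 777 "$tmp"
  # -f overwrites anything left behind by the failed upload attempt.
  run sudo su hdfs -l -c "hadoop fs -put -f $tmp $target_dir/"
  run sudo su hdfs -l -c "hadoop fs -chmod 777 $target_dir/hbase.tar.gz"
}

put_hbase_tarball /hdp/apps/3.3.2.0-005/hbase/rm1
```

On the rm02 node the call would be `put_hbase_tarball /hdp/apps/3.3.2.0-005/hbase/rm2`, and for the case in 6.1 the target is `/hdp/apps/3.3.2.0-005/hbase`.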

6.3 Test the HDFS service

touch /tmp/1.txt

chmod 777 /tmp/1.txt

sudo su hdfs -l -c "hadoop fs -put /tmp/1.txt /tmp"

6.4 Test hive

su hive

[hive@node01 ~]$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/hdp/3.3.2.0-005/hive/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hdp/3.3.2.0-005/hadoop/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = 04e89152-666e-47b4-9396-9b29d280be10

Logging initialized using configuration in file:/etc/hive/3.3.2.0-005/0/hive-log4j2.properties Async: true

Hive Session ID = 61c02e22-ad5c-4148-933f-658d3b5d78ed

hive> show databases;

OK

default

information_schema

sys

Time taken: 1.003 seconds, Fetched: 3 row(s)

hive> exit;

[hive@node01 ~]$

6.5 Test impala

su impala

[impala@node01 ~]$ impala-shell

Starting Impala Shell with no authentication using Python 2.7.18

Opened TCP connection to node01:21050

Connected to node01:21050

Server version: impalad version 4.2.0-RELEASE RELEASE (build d3c3ae41c4aeb2dec9f55dacb3dfc357d16713a3)

***********************************************************************************

Welcome to the Impala shell.

(Impala Shell v4.2.0-RELEASE (d3c3ae4) built on Tue Sep 26 13:29:42 UTC 2023)

Want to know what version of Impala you're connected to? Run the VERSION command to

find out!

***********************************************************************************

[node01:21050] default> show databases;

Query: show databases

+------------------+----------------------------------------------+

| name | comment |

+------------------+----------------------------------------------+

| _impala_builtins | System database for Impala builtin functions |

| default | Default Hive database |

+------------------+----------------------------------------------+

Fetched 2 row(s) in 0.07s

[node01:21050] default> show tables;

Query: show tables

Fetched 0 row(s) in 0.20s

[node01:21050] default> exit;

Goodbye impala

[impala@node01 ~]$

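6.6 Issues on RockyLinux9

6.6.1 Missing dependencies

1. Configure the EPEL repository: yum install epel-release

2. Install libtirpc-devel: yum install https://dl.rockylinux.org/pub/rocky/9/CRB/x86_64/os/Packages/l/libtirpc-devel-1.3.3-2.el9.x86_64.rpm -y

3. The sh-utils package is missing. After discussion with the community, they built one: sh-utils-1.0.0-001.x86_64.rpm. Because a PDF cannot carry attachments, the file is reproduced below as base64: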

[root@localhost ambari]# base64 sh-utils-1.0.0-001.x86_64.rpm

7avu2wMAAAAAAXNoLXV0aWxzLTEuMC4wLTAwMQAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

AAAAAAAAAAAAAAAAAAAAAAAAAAABAAUAAAAAAAAAAAAAAAAAAAAAjq3oAQAAAAAAAAAFAAAAVAAA

AD4AAAAHAAAARAAAABAAAAENAAAABgAAAAAAAAABAAAD6AAAAAQAAAAsAAAAAQAAA+wAAAAHAAAA

MAAAABAAAAPvAAAABAAAAEAAAAABZGEyMzQwNTZkMDRmNTA4ZTUyN2ZhOGUyNjE4YjAxODgyMTk0

M2JmMgAAAAAAAASwQJOtCAf4mCraYSS8dus7QgAAAHwAAAA+AAAAB7AAAAAQAAAAAI6t6AEA

AAAAAAAAHQAAAlwAAAA/AAAABwAAAkwAAAAQAAAAZAAAAAgAAAAAAAAAAQAAA+gAAAAGAAAAAgAA

AAEAAAPpAAAABgAAAAsAAAABAAAD6gAAAAYAAAARAAAAAQAAA+wAAAAJAAAAFQAAAAEAAAPtAAAA

CQAAADcAAAABAAAD7gAAAAQAAABAAAAAAQAAA+8AAAAGAAAARAAAAAEAAAPxAAAABAAAAFAAAAAB

AAAD9gAAAAYAAABUAAAAAQAAA/gAAAAJAAAAXAAAAAEAAAP8AAAABgAAAHIAAAABAAAD/QAAAAYA

AACEAAAAAQAAA/4AAAAGAAAAigAAAAEAAAQUAAAABgAAAJEAAAABAAAEFwAAAAgAAACsAAAAAgAA

BBgAAAAEAAAAyAAAAAUAAAQZAAAACAAAANwAAAAFAAAEGgAAAAgAAAFJAAAABQAABCgAAAAGAAAB

ZgAAAAEAAARYAAAABAAAAXAAAAACAAAEWQAAAAgAAAF4AAAAAgAABGIAAAAGAAABjAAAAAEAAARk

AAAABgAAAiMAAAABAAAEZQAAAAYAAAIoAAAAAQAABGYAAAAGAAACKwAAAAEAAARsAAAABgAAAi0A

AAABAAATkwAAAAQAAAJIAAAAAUMAc2gtdXRpbHMAMS4wLjAAMDAxAEFwcGxpY2F0aW9uIHRvIGxh

dW5jaCBqYXZhIGRhZW1vbgBzaC11dGlscwBlirbKYmRwLXRlc3QwMQAAAAAAAEFTTCAyLjAARGV2

ZWxvcG1lbnQvTGlicmFyaWVzAGh0dHA6Ly93d3cuaGRwLmNuAGxpbnV4AHg4Nl82NABzaC11dGls

cy0xLjAuMC0wMDEuc3JjLnJwbQBzaC11dGlscwBzaC11dGlscyh4ODYtNjQpAAAAAAAAAAEAAAoB

AAAKAQAACgEAAApjb3JldXRpbHMAcnBtbGliKEZpbGVEaWdlc3RzKQBycG1saWIoUGF5bG9hZEZp

bGVzSGF2ZVByZWZpeCkAcnBtbGliKENvbXByZXNzZWRGaWxlTmFtZXMpAHJwbWxpYihQYXlsb2Fk

SXNYeikAADQuNi4wLTEANC4wLTEAMy4wLjQtMQA1LjItMQA0LjExLjMAAAAAAAAACAAAAAgxLjAu

MC0wMDEAMS4wLjAtMDAxAC1PMiAtZyAtcGlwZSAtV2FsbCAtV3AsLURfRk9SVElGWV9TT1VSQ0U9

MiAtZmV4Y2VwdGlvbnMgLWZzdGFjay1wcm90ZWN0b3Itc3Ryb25nIC0tcGFyYW09c3NwLWJ1ZmZl

ci1zaXplPTQgLWdyZWNvcmQtZ2NjLXN3aXRjaGVzICAgLW02NCAtbXR1bmU9Z2VuZXJpYwBjcGlv

AHh6ADIAeDg2XzY0LXJlZGhhdC1saW51eC1nbnUAAAAAAAAACAAAAD8AAAAH///+MAAAABD9N3pY

WgAACuH7DKECACEBEgAAACO4hyzgAHsAHV0AGA3dBGIzAjtB5VAHdHFCtKSplybex7HJLhz/GAAA

AAAAI9BCK0/qKPdx6HJ0G7NweQs80FOOr7RhIz6CC4S1ei4AAVF8KeSV2hibS5oBAAAAAApZWg==

[root@localhost ambari]#

# Save the base64 text above to a file named 1

# Decode it with the following command

[root@localhost tmp]# cat 1 |base64 -d > sh-utils-1.0.0-001.x86_64.rpm

# Compare the md5 checksum of the generated file

[root@localhost tmp]# md5sum sh-utils-1.0.0-001.x86_64.rpm

3c9a91bafe54a3e3c12920d4e546a9dc sh-utils-1.0.0-001.x86_64.rpm

[root@localhost tmp]# md5sum /opt/apps/nginx/html/ambari/2.7.6.0-25/ambari/sh-utils-1.0.0-001.x86_64.rpm

3c9a91bafe54a3e3c12920d4e546a9dc /opt/apps/nginx/html/ambari/2.7.6.0-25/ambari/sh-utils-1.0.0-001.x86_64.rpm

[root@localhost tmp]#
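The decode and checksum comparison above can be combined so that a corrupted copy of the base64 text is caught automatically. This is a sketch using coreutils `base64` and `md5sum`; `decode_and_verify` is a hypothetical helper name.

```shell
# Sketch: decode a base64 file and fail unless the result has the expected md5.
decode_and_verify() {
  local b64_file="$1" out_file="$2" expected_md5="$3" actual_md5
  base64 -d "$b64_file" > "$out_file" || return 1
  actual_md5="$(md5sum "$out_file" | awk '{print $1}')"
  if [[ "$actual_md5" != "$expected_md5" ]]; then
    echo "md5 mismatch: got $actual_md5, expected $expected_md5" >&2
    return 1
  fi
  echo "md5 OK: $out_file"
}
```

For the package above: `decode_and_verify 1 sh-utils-1.0.0-001.x86_64.rpm 3c9a91bafe54a3e3c12920d4e546a9dc`.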

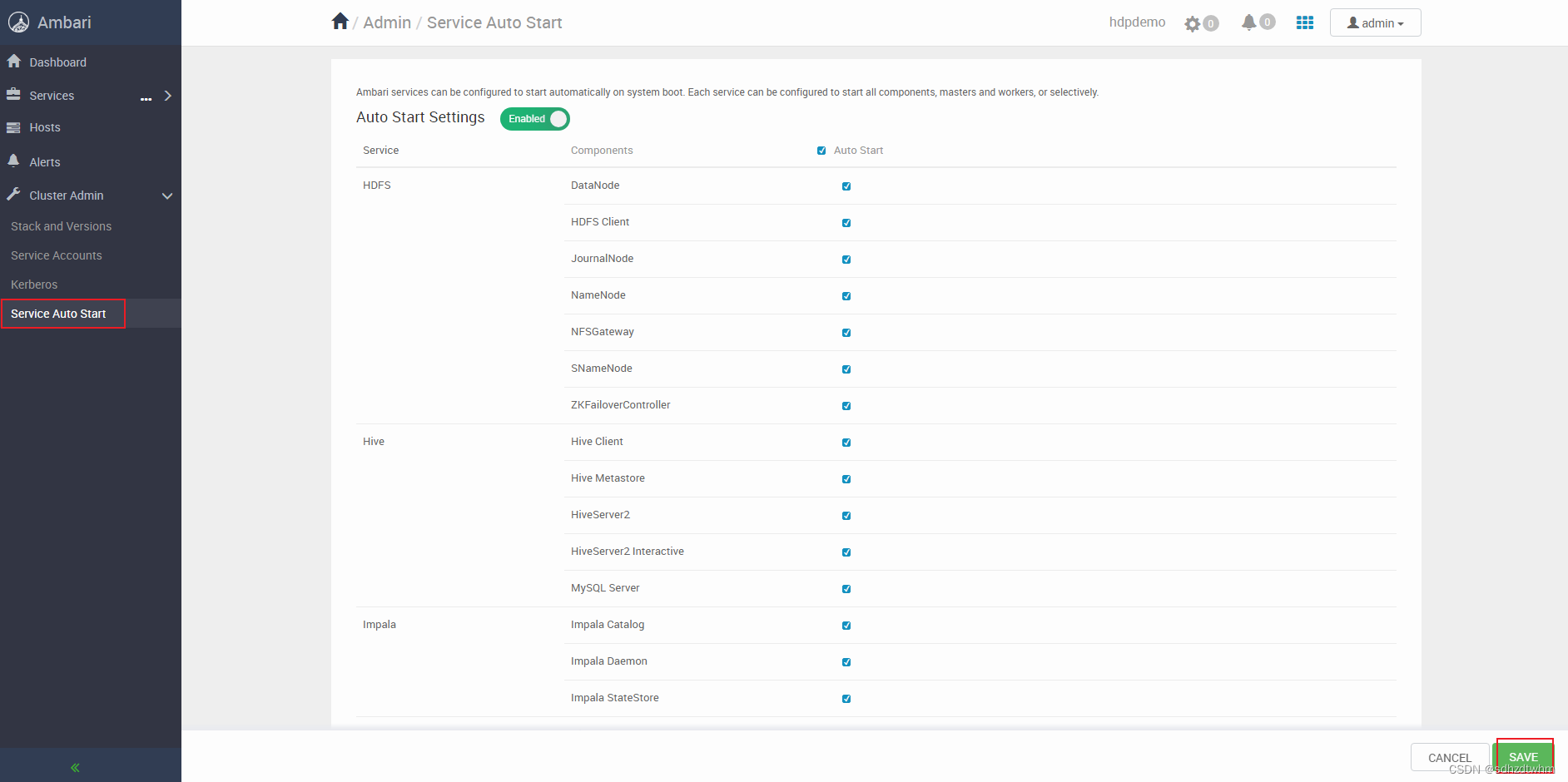

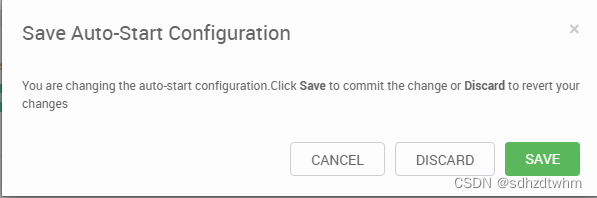

6.7 Start services at boot

6.8 Dolphinscheduler errors

6.8.1 Startup error

Fix:

datasource:
  driver-class-name: {{ dolphin_database_config['dolphin_database_driver'] }}
  url: {{ dolphin_database_config['dolphin_database_url'] }}
  username: {{ dolphin_database_config['dolphin_database_username'] }}
  password: {{ dolphin_database_config['dolphin_database_password'] }}
  hikari:
    connection-test-query: select 1
    minimum-idle: 5
    auto-commit: true
    validation-timeout: 3000
    pool-name: DolphinScheduler
    maximum-pool-size: 50
    connection-timeout: 30000
    idle-timeout: 600000
    leak-detection-threshold: 0
    initialization-fail-timeout: 1

Explanation: this is an upstream bug, see https://github.com/apache/dolphinscheduler/pull/15169
