Image fusion paper reading: Real-time infrared and visible image fusion network using adaptive pixel weighting strategy

@article{zhang2023real,
  title={Real-time infrared and visible image fusion network using adaptive pixel weighting strategy},
  author={Zhang, Xuchong and Zhai, Han and Liu, Jiaxing and Wang, Zhiping and Sun, Hongbin},
  journal={Information Fusion},
  pages={101863},
  year={2023},
  publisher={Elsevier}
}

Paper tier: SCI A1

Impact factor: 18.6


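🌻 [If this post infringes any rights, please message me privately and I will remove it]

📖 Paper walkthrough

The authors propose a [lightweight, real-time] infrared and visible image fusion (IVF) network that adaptively learns pixel weights for fusing the two images. They also take [object detection as the downstream task] and [jointly optimize] the network parameters.

🔑 Keywords

Multispectral image fusion

Lightweight model

Joint optimization

Real-time

Embedded platform

💭 Core idea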

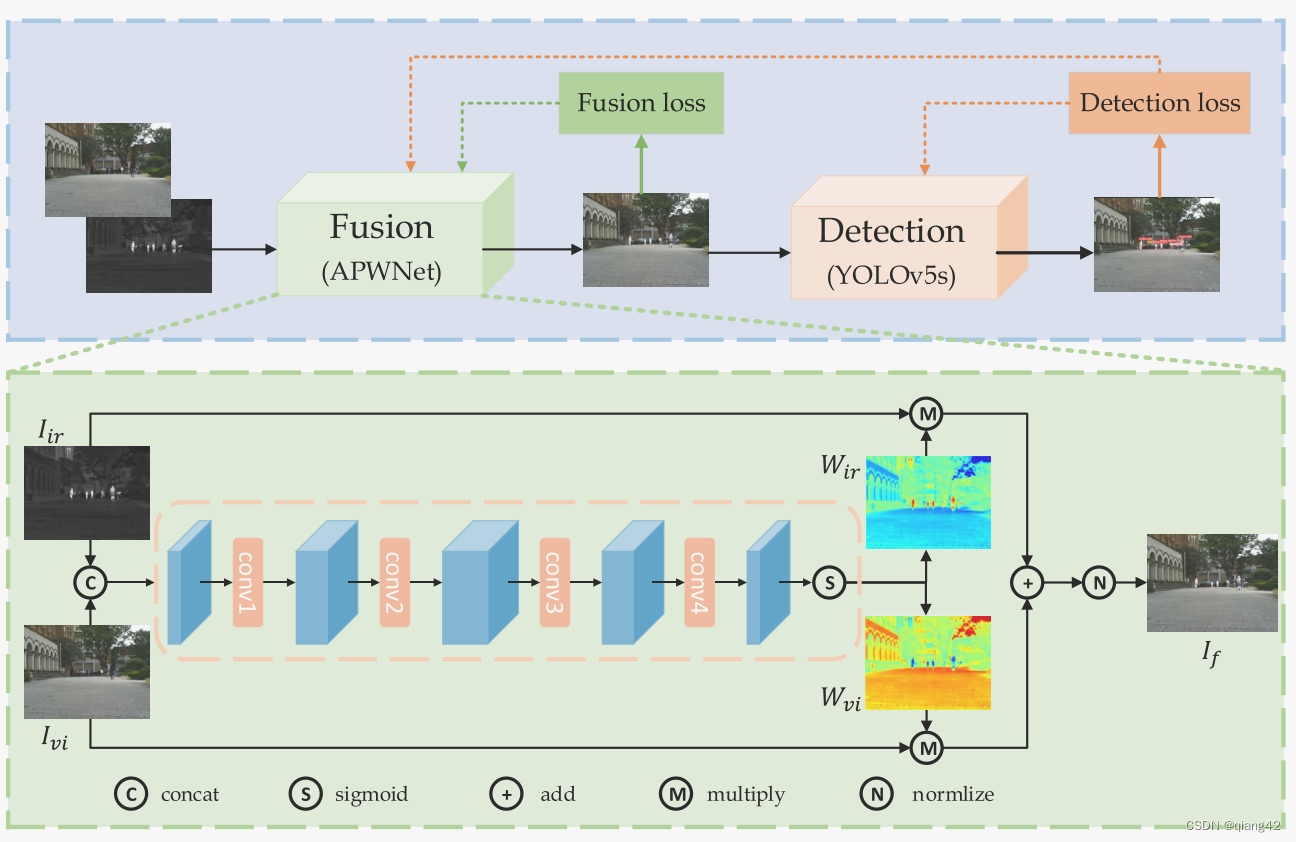

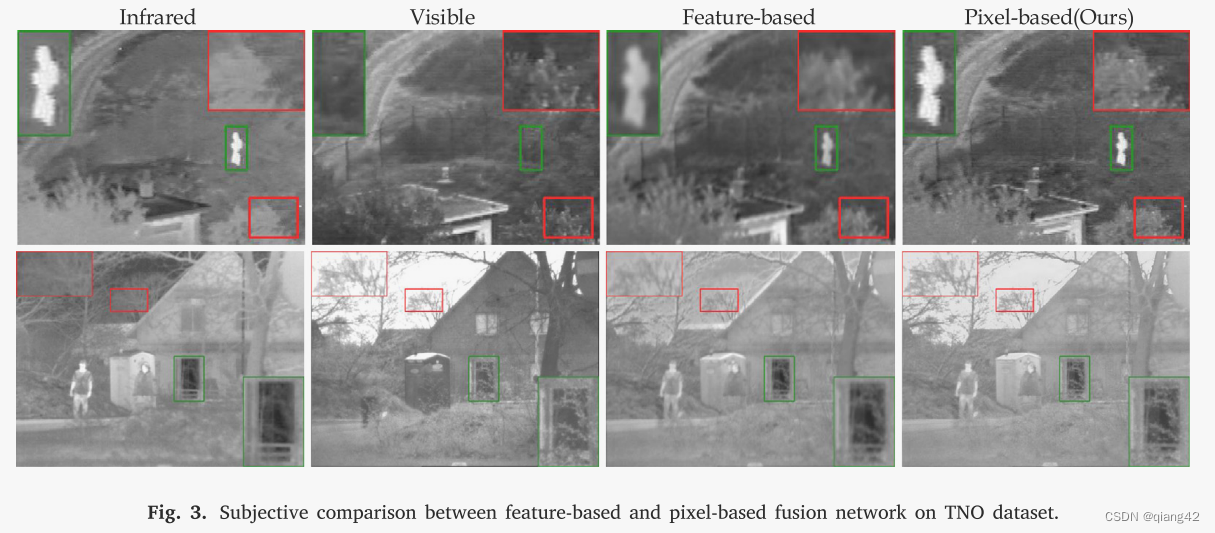

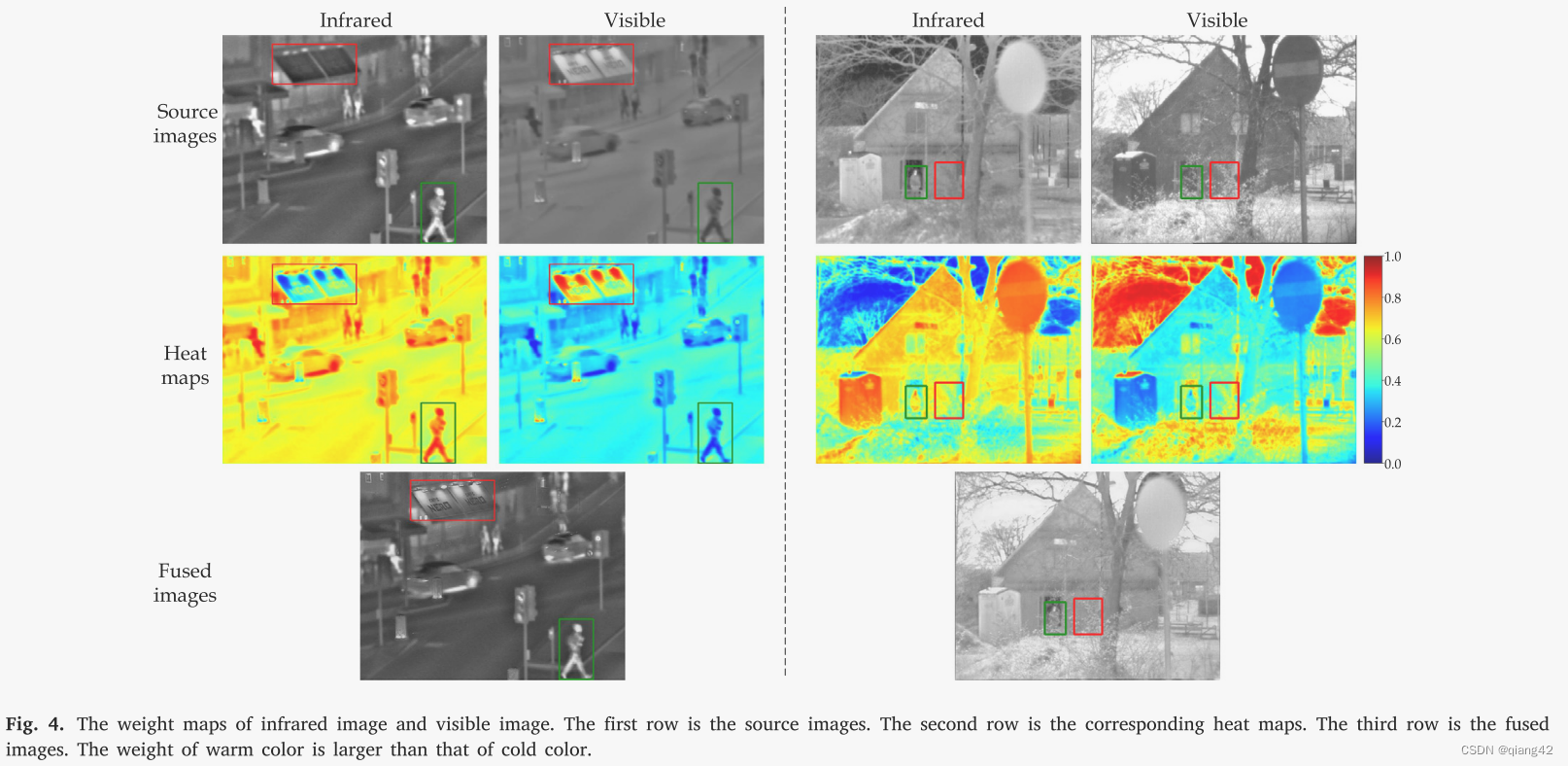

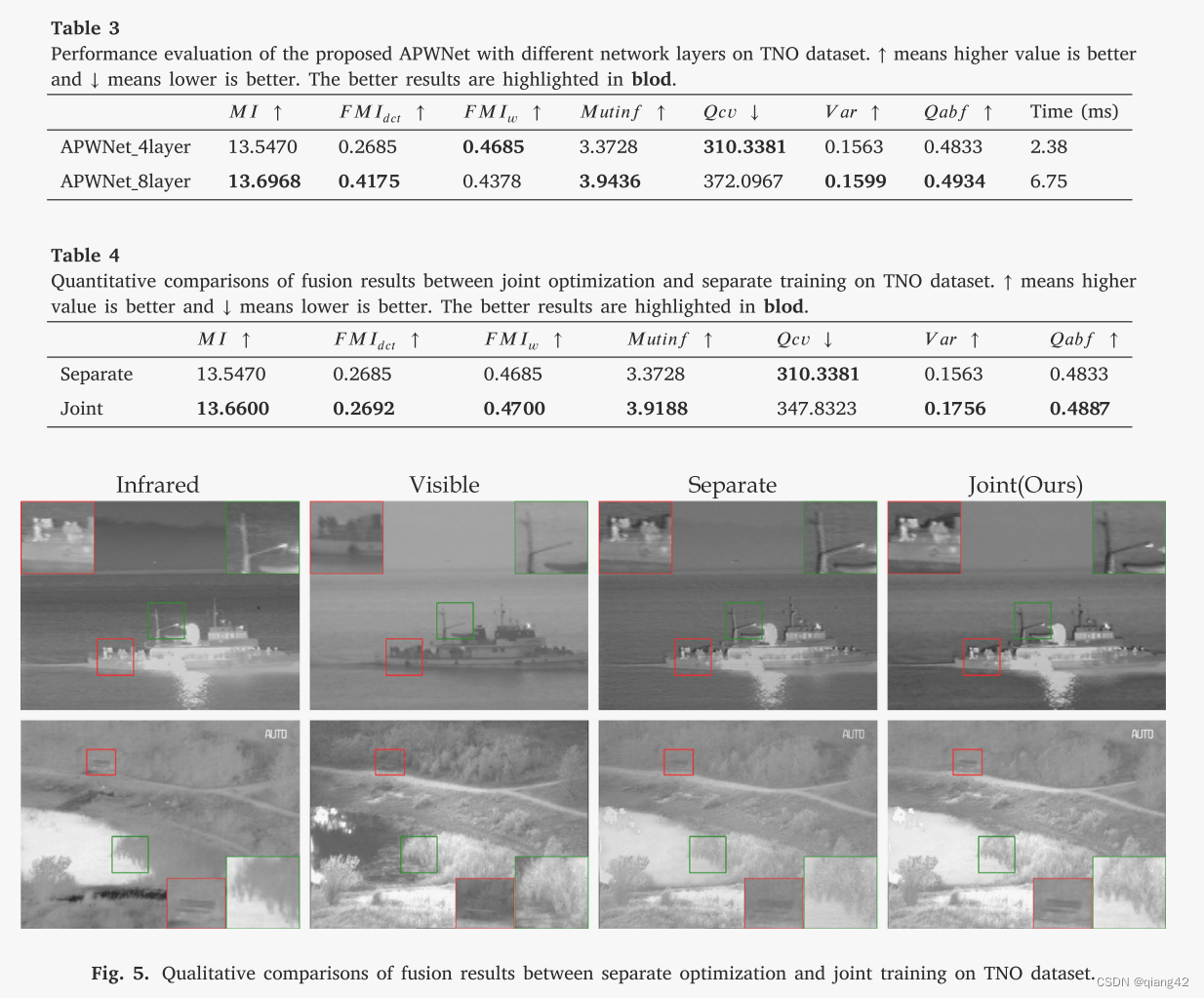

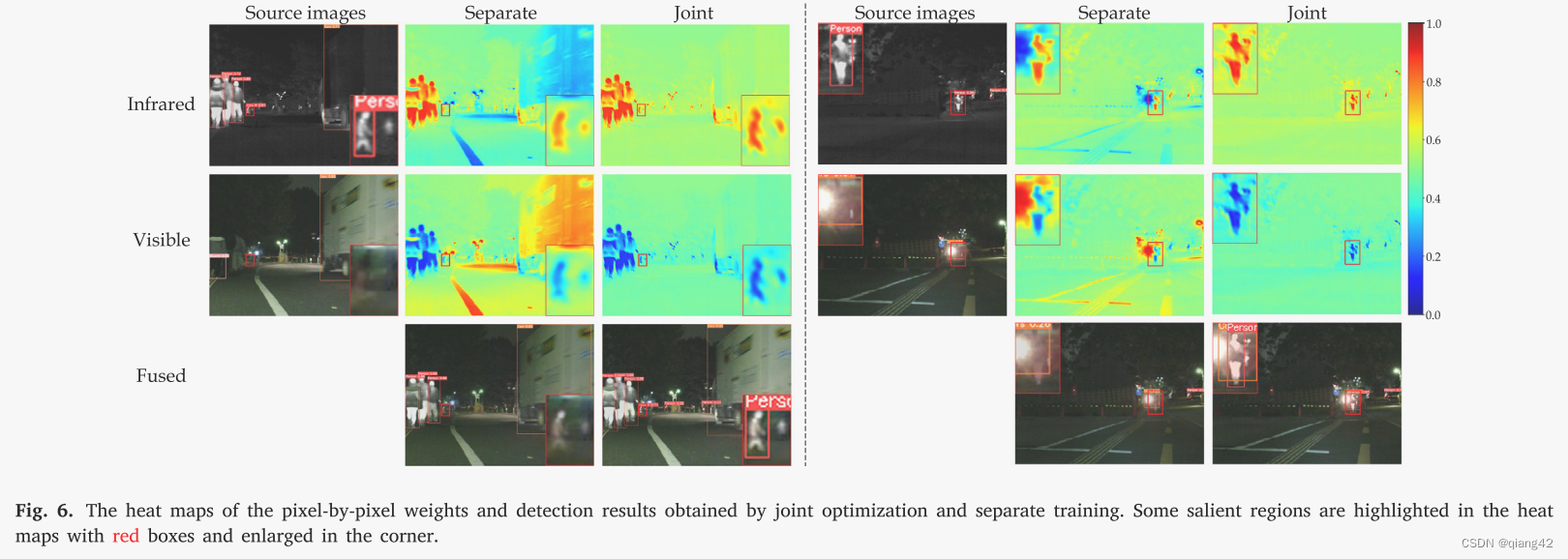

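The method fuses images with an [adaptive pixel weighting] strategy (APWNet) and couples fusion with the downstream [object detection] task. Concretely, the visible and infrared images are concatenated and fed into convolutional layers to extract weight maps. Each weight map is multiplied element-wise with its source image, and the two products are added to give the fused image, which is then passed to YOLOv5s for object detection. (During training, the detection results are back-propagated to optimize the network parameters.)

🪢 Network architecture

The network proposed by the authors is shown below.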

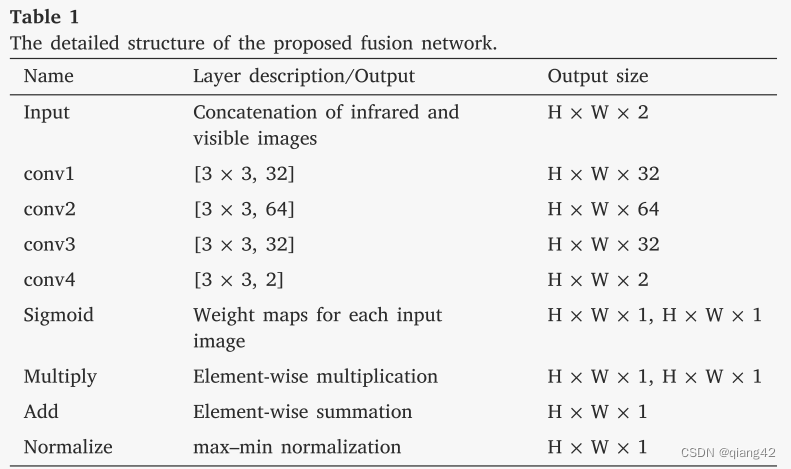

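First, the concatenated infrared and visible images pass through a series of convolutional layers and a sigmoid layer, which produce weight maps for the input images. The fused image is then obtained by element-wise operations followed by min-max normalization. Finally, the fused image is fed to the detector, and the whole network is trained jointly end to end.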
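The pipeline described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions, not the authors' implementation: `toy_weight_module` is a hypothetical stand-in for the real stack of convolutional layers (here a single 1x1 convolution plus sigmoid), `kernel` and `bias` are made-up parameters, and the detector is left out entirely. How the paper constrains the two weight maps relative to each other is not stated in this post, so they are treated as independent here.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def toy_weight_module(stacked, kernel, bias):
    """Hypothetical stand-in for the weight generator: 1x1 conv + sigmoid.

    stacked: (2, H, W) array holding the concatenated [I_ir, I_vi]
    kernel:  (2, 2) channel-mixing matrix, bias: (2,)
    returns: (2, H, W) weight maps with values in (0, 1)
    """
    logits = np.einsum("oc,chw->ohw", kernel, stacked) + bias[:, None, None]
    return sigmoid(logits)


def fuse(ir, vi, kernel, bias, eps=1e-8):
    stacked = np.stack([ir, vi], axis=0)       # channel-wise concatenation
    w_ir, w_vi = toy_weight_module(stacked, kernel, bias)
    fused = w_ir * ir + w_vi * vi              # element-wise multiply, then add
    lo, hi = fused.min(), fused.max()
    return (fused - lo) / (hi - lo + eps)      # min-max normalization


# Random 4x4 "images" in [0, 1) stand in for a registered IR/visible pair.
rng = np.random.default_rng(0)
ir = rng.random((4, 4))
vi = rng.random((4, 4))
kernel = rng.normal(size=(2, 2))
bias = np.zeros(2)

fused = fuse(ir, vi, kernel, bias)
print(fused.shape, float(fused.min()), float(fused.max()))
```

In the real network the fused image would go on to the detector, and the detection loss would update the weight generator's parameters; that training loop is omitted here.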

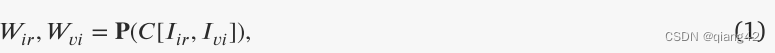

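$I_{ir}$ and $I_{vi}$ denote the infrared image and the visible image, and $W_{ir}$ and $W_{vi}$ denote the fusion weights of the corresponding source images.

$C[\cdot]$ is concatenation, that is, stacking along the channel dimension.

$P(\cdot)$ denotes the adaptive pixel weight generation module.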

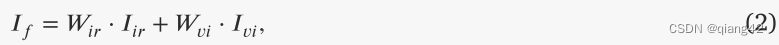

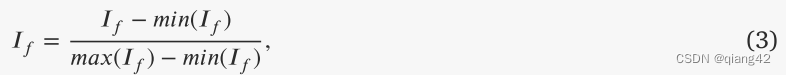

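Each source image is then multiplied by its weight map, the products are summed, and the fused image is normalized.

Finally, the fused image is fed to the object detection network to obtain the detection results.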
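Read together with the symbol definitions above, the three steps can be summarized in one place. This is a reconstruction from the prose, since the equations appear only as images in this post; $I_f$, $\mathrm{Norm}$, $D$ and $R$ are names introduced here for illustration, and the paper's own notation may differ.

```latex
\begin{aligned}
(W_{ir},\, W_{vi}) &= P\big(C[I_{ir},\, I_{vi}]\big) \\
I_f &= \mathrm{Norm}\big(W_{ir} \odot I_{ir} + W_{vi} \odot I_{vi}\big),
\qquad \mathrm{Norm}(X) = \frac{X - \min X}{\max X - \min X} \\
R &= D(I_f)
\end{aligned}
```

Here $\odot$ is the element-wise product, $\mathrm{Norm}$ is min-max normalization, and $D$ stands for the detector (YOLOv5s in this work).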

The detailed network structure is shown below:

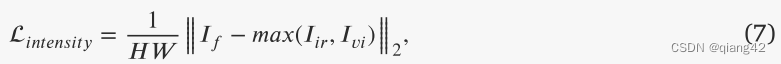

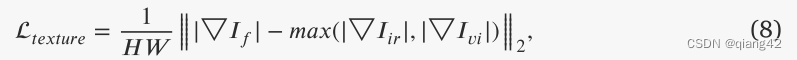

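📉 Loss function

The loss function is clear and simple, so there is little to add. If anything is unclear, see my earlier posts.

🔢 Datasets

- TNO, RoadScene, MSRS

Links to image fusion datasets

[A roundup of commonly used image fusion datasets]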

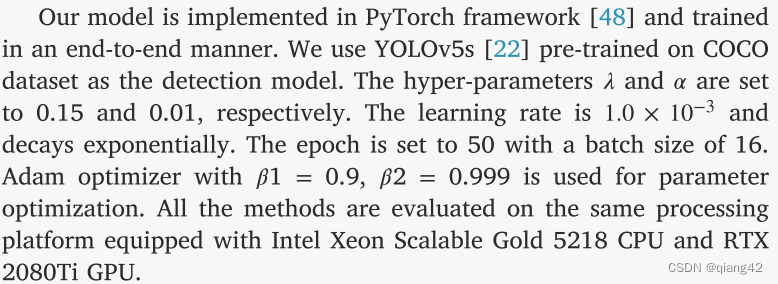

🎢 Training setup

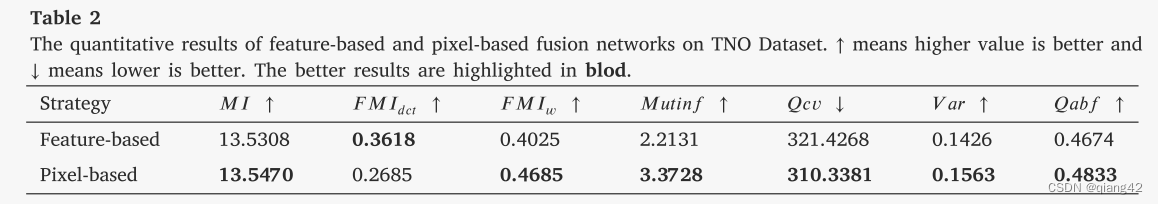

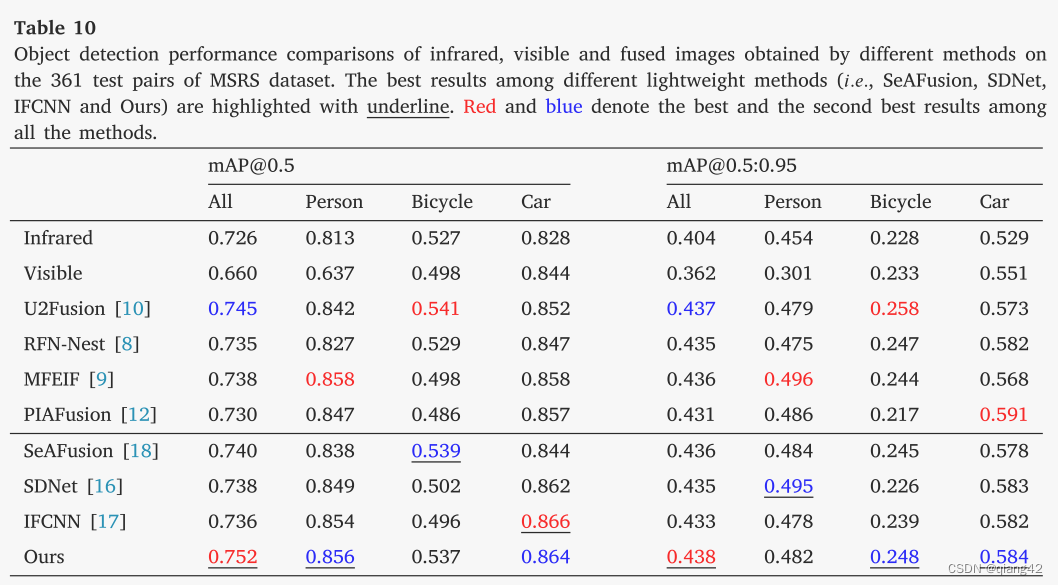

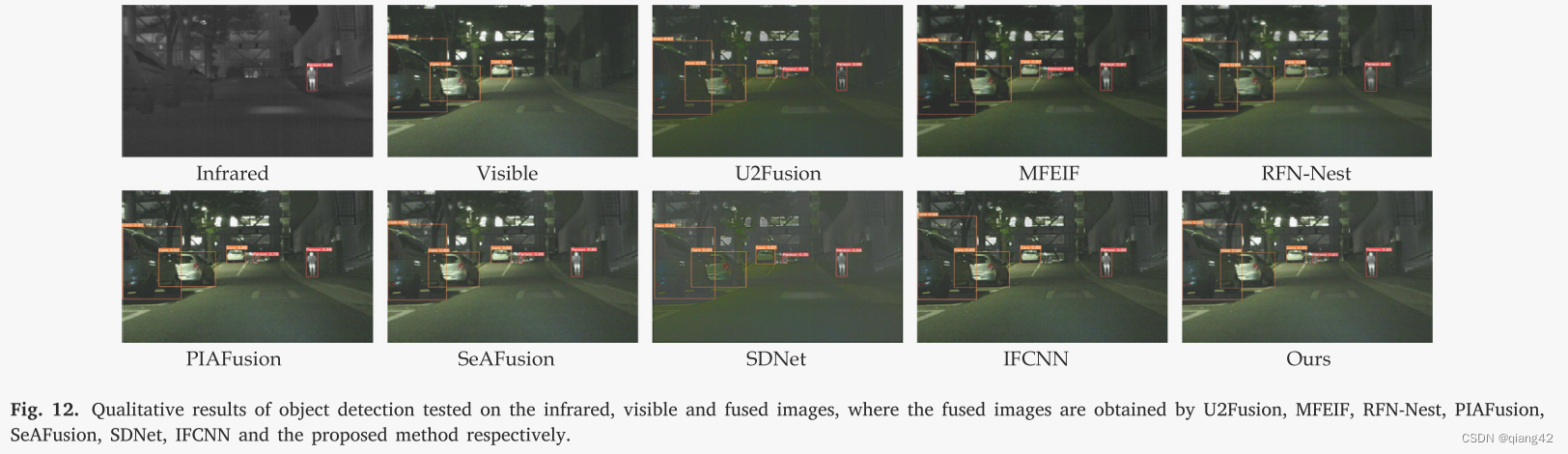

🔬 Experiments

📏 Evaluation metrics

- MI

- FMIdct

- FMIw

- Mutinf

- Qcv

- Var

- Qabf

- mAP@0.5

- mAP@[0.5:0.95]
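The two detection metrics at the end of the list differ only in the IoU threshold used to decide whether a predicted box matches a ground-truth box: mAP@0.5 uses a single threshold of 0.5, while mAP@[0.5:0.95] averages AP over thresholds from 0.5 to 0.95 in steps of 0.05 (the COCO convention). The sketch below shows just that matching step for one box pair; the boxes are made-up values, the `>=` comparison is an assumption (toolkits differ on the boundary case), and the full AP computation (score ranking, precision-recall curve) is omitted.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# IoU thresholds averaged by mAP@[0.5:0.95]: 0.50, 0.55, ..., 0.95
COCO_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

gt = (0, 0, 10, 10)      # hypothetical ground-truth box
pred = (2, 0, 12, 10)    # hypothetical prediction, shifted 2 px to the right

score = iou(gt, pred)                          # 80 / 120 = 0.666...
passed = [t for t in COCO_THRESHOLDS if score >= t]
print(round(score, 3), passed)                 # 0.667 [0.5, 0.55, 0.6, 0.65]
```

The same prediction counts as a hit for mAP@0.5 but as a miss at the stricter thresholds, which is why mAP@[0.5:0.95] rewards tighter localization.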

Reference

[Analysis of quantitative metrics for image fusion]

🥅Baseline

- U2Fusion, RFN-Nest, MFEIF, PIAFusion

and three lightweight networks:

- SeAFusion, SDNet, IFCNN

Reference

Strongly recommended blog post: [Baselines in image fusion papers and their network models]

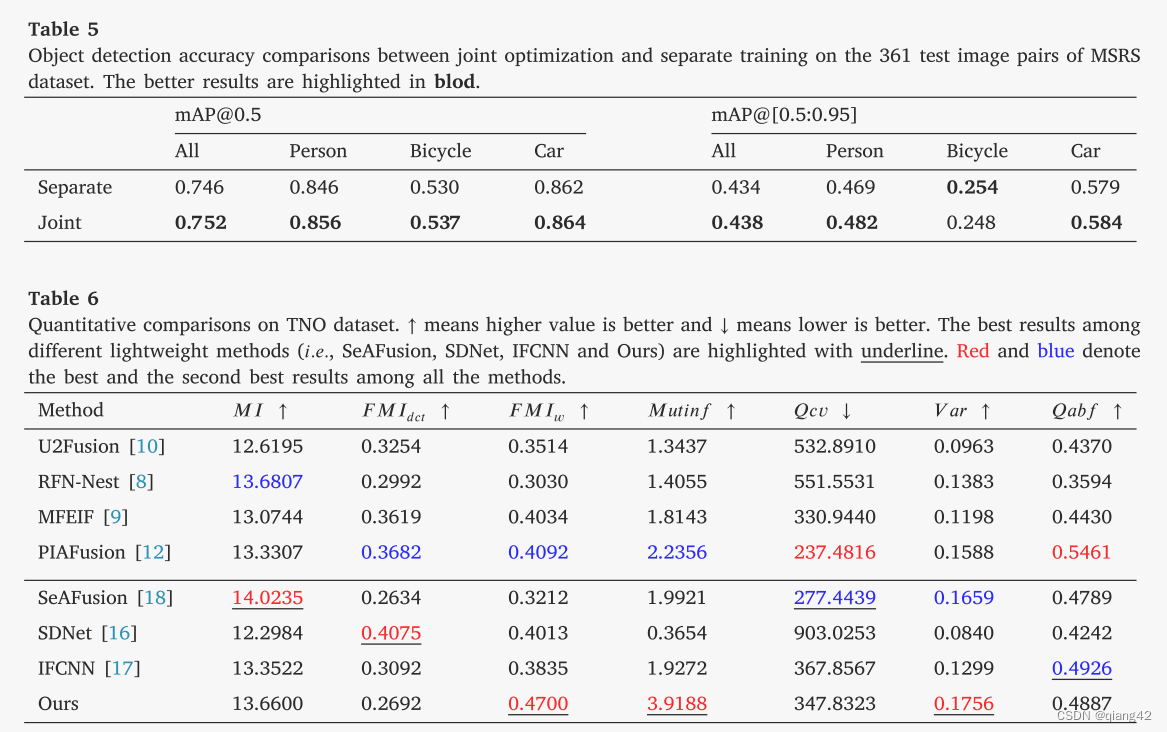

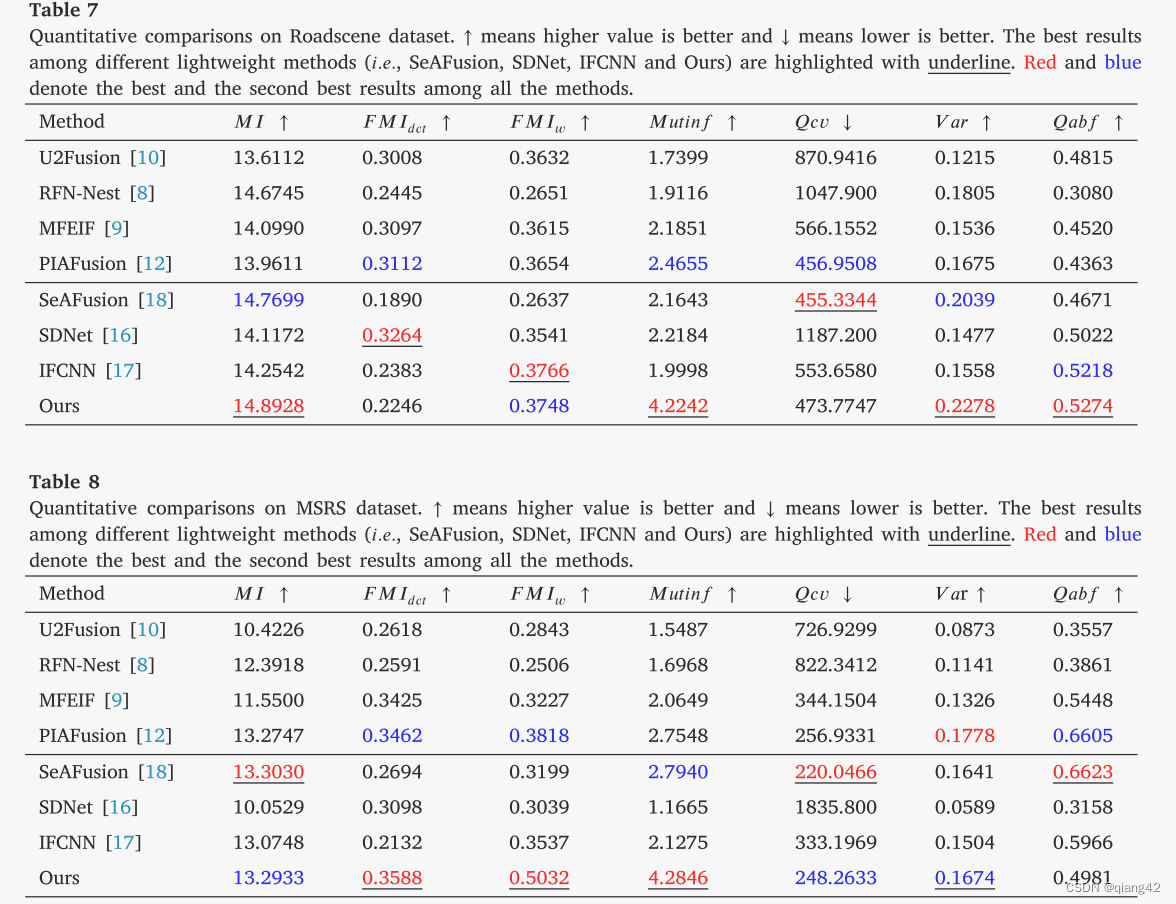

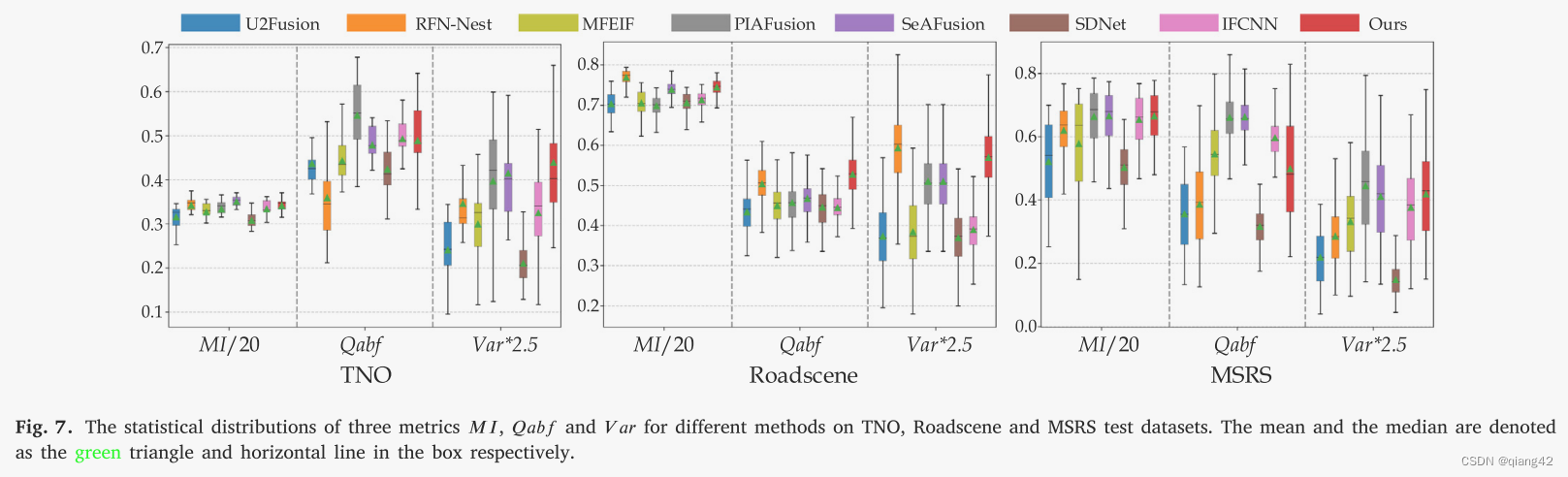

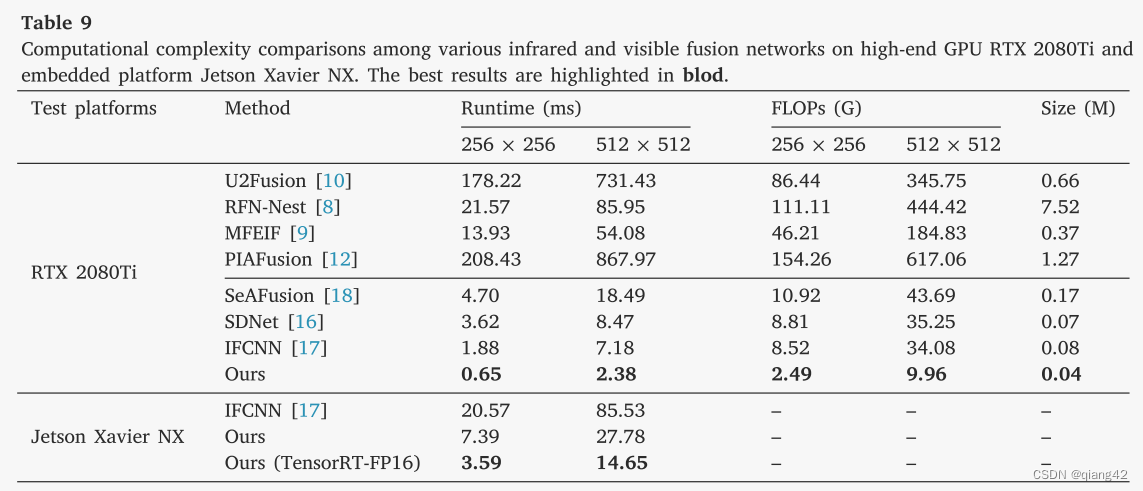

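🔬 Experimental results

For more experimental results and analysis, see the original paper:

📖[Paper download link]

🚀 Quick links

📑 Reading notes on image fusion papers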

📑[Dif-fusion: Towards high color fidelity in infrared and visible image fusion with diffusion models]

📑[Coconet: Coupled contrastive learning network with multi-level feature ensemble for multi-modality image fusion]

📑[LRRNet: A Novel Representation Learning Guided Fusion Network for Infrared and Visible Images]

📑[(DeFusion)Fusion from decomposition: A self-supervised decomposition approach for image fusion]

📑[ReCoNet: Recurrent Correction Network for Fast and Efficient Multi-modality Image Fusion]

📑[RFN-Nest: An end-to-end residual fusion network for infrared and visible images]

📑[SwinFuse: A Residual Swin Transformer Fusion Network for Infrared and Visible Images]

📑[SwinFusion: Cross-domain Long-range Learning for General Image Fusion via Swin Transformer]

📑[(MFEIF)Learning a Deep Multi-Scale Feature Ensemble and an Edge-Attention Guidance for Image Fusion]

📑[DenseFuse: A fusion approach to infrared and visible images]

📑[DeepFuse: A Deep Unsupervised Approach for Exposure Fusion with Extreme Exposure Image Pair]

📑[GANMcC: A Generative Adversarial Network With Multiclassification Constraints for IVIF]

📑[DIDFuse: Deep Image Decomposition for Infrared and Visible Image Fusion]

📑[IFCNN: A general image fusion framework based on convolutional neural network]

📑[(PMGI) Rethinking the image fusion: A fast unified image fusion network based on proportional maintenance of gradient and intensity]

📑[SDNet: A Versatile Squeeze-and-Decomposition Network for Real-Time Image Fusion]

📑[DDcGAN: A Dual-Discriminator Conditional Generative Adversarial Network for Multi-Resolution Image Fusion]

📑[FusionGAN: A generative adversarial network for infrared and visible image fusion]

📑[PIAFusion: A progressive infrared and visible image fusion network based on illumination aw]

📑[CDDFuse: Correlation-Driven Dual-Branch Feature Decomposition for Multi-Modality Image Fusion]

📑[U2Fusion: A Unified Unsupervised Image Fusion Network]

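📑 Survey: [Visible and Infrared Image Fusion Using Deep Learning]

📚 Summary of baselines in image fusion papers

📑 Other papers

📑[3D object detection survey: Multi-Modal 3D Object Detection in Autonomous Driving: A Survey]

🎈 Other roundups

🎈[CVPR 2023 and ICCV 2023 paper titles with word-frequency statistics]

Featured posts

[The most complete collection of image fusion papers and code]

[A roundup of commonly used image fusion datasets]

For questions, contact: 420269520@qq.com

Writing these posts takes effort. A [follow, bookmark, like] is what keeps me updating. Wishing everyone an early paper and a smooth graduation~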
