爬虫之牛刀小试(三):爬取中国天气网全国天气

发布时间:2024年01月11日

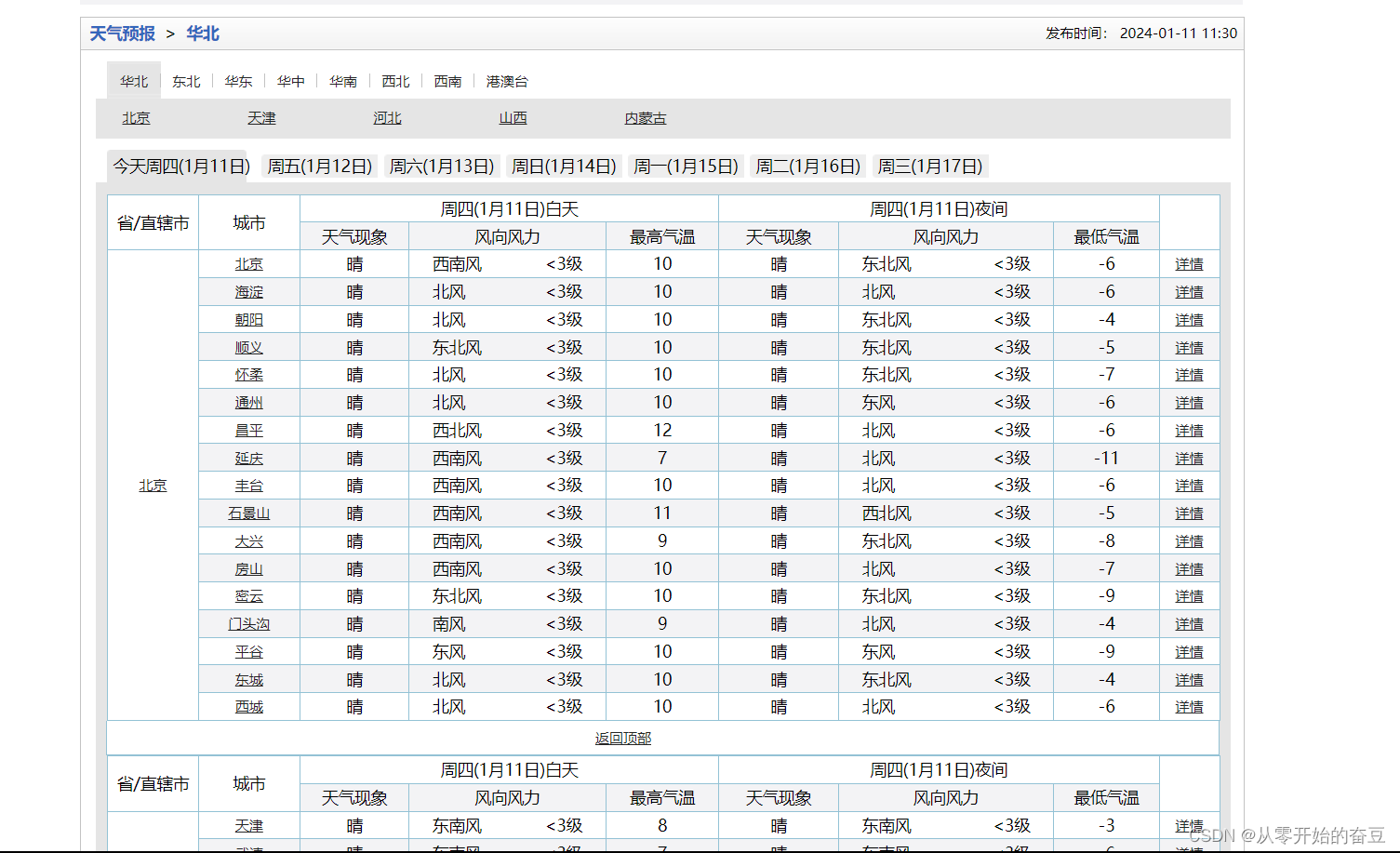

天气网:

import requests

from bs4 import BeautifulSoup

import time

from pyecharts.charts import Bar

from pyecharts import options as opts

url_hb = 'http://www.weather.com.cn/textFC/hb.shtml'

url_db = 'http://www.weather.com.cn/textFC/db.shtml'

url_hd = 'http://www.weather.com.cn/textFC/hd.shtml'

url_hz = 'http://www.weather.com.cn/textFC/hz.shtml'

url_hn = 'http://www.weather.com.cn/textFC/hn.shtml'

url_xb = 'http://www.weather.com.cn/textFC/xb.shtml'

url_xn = 'http://www.weather.com.cn/textFC/xn.shtml'

url_gat = 'http://www.weather.com.cn/textFC/gat.shtml'

url_areas = [url_hb, url_db, url_hd, url_hz, url_hn, url_xb, url_xn, url_gat]

HEADERS = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',

'Referer': 'http://www.weather.com.cn/textFC/hb.shtml'

}

ALL_DATA = []

def paser_url(url):

response = requests.get(url, headers=HEADERS)

text = response.content.decode('utf-8')

soup = BeautifulSoup(text, 'html5lib')

conMidtab = soup.find('div', class_='conMidtab')

tabels = conMidtab.find_all('table')

for tabel in tabels:

trs = tabel.find_all('tr')[2:]

for index, tr in enumerate(trs):

tds = tr.find_all('td')

city_td = tds[0]

if index == 0:

city_td = tds[1]

city = list(city_td.stripped_strings)[0]

min_temp_td = tds[-2]

wind_td = tds[-3]

tq_td = tds[-4]

max_temp_td = tds[-5]

soup = BeautifulSoup(str(wind_td), 'html.parser')

spans = soup.find_all('span')

wind_direction = spans[0].text # 北风

wind_level = spans[1].text.strip('<>级') # 3

min_temp = list(min_temp_td.stripped_strings)[0]

tq_data = list(tq_td.stripped_strings)[0]

max_temp = list(max_temp_td.stripped_strings)[0]

ALL_DATA.append({'city': city, 'max_temp':int(max_temp),'min_temp': int(min_temp), 'wind_direction': wind_direction, 'wind_level': wind_level, 'tq_data': tq_data})

def spider():

for index, url in enumerate(url_areas):

paser_url(url)

print('第{}个区域爬取完毕'.format(index + 1))

time.sleep(1)

def main():

spider()

print(ALL_DATA)

if __name__ == '__main__':

main()

paser_url(url) 函数用于解析每个区域页面的 HTML,提取出城市名、最高温度、最低温度、风向、风级和天气数据,并将这些数据以字典的形式添加到 ALL_DATA 列表中。

spider() 函数遍历所有区域的 URL,对每个 URL 调用 paser_url(url) 函数,并在每次调用后暂停 1 秒。

使用了 requests 库来发送 HTTP 请求,使用 BeautifulSoup 库来解析 HTML,使用 time 库来暂停执行。

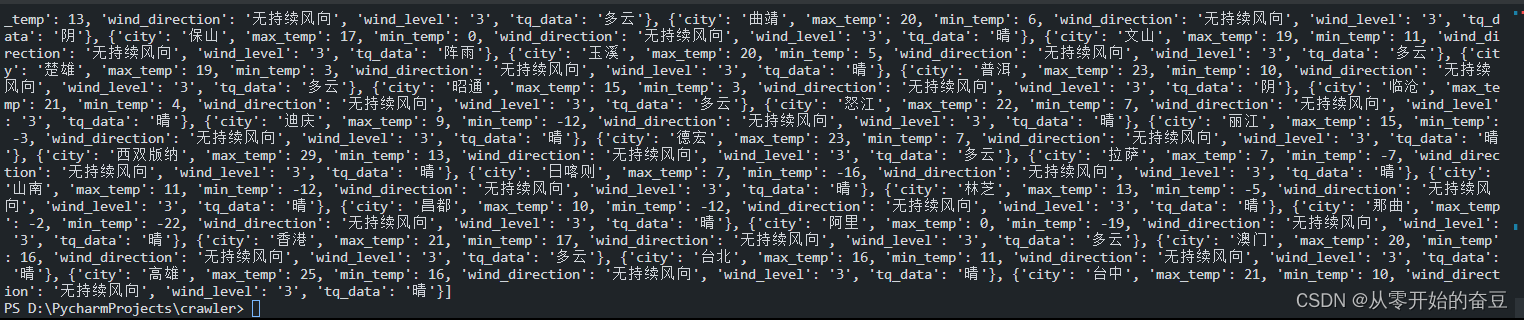

结果:

注意不要爬太多。

最近新开了公众号,请大家关注一下。

文章来源:https://blog.csdn.net/m0_68926749/article/details/135533192

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

最新文章

- Python教程

- 深入理解 MySQL 中的 HAVING 关键字和聚合函数

- Qt之QChar编码(1)

- MyBatis入门基础篇

- 用Python脚本实现FFmpeg批量转换

- (c语言进阶)动态内存管理

- spark:RDD编程(Python版)

- ROS学习笔记(七)---参数服务器

- 第16章 网络io与io多路复用select/pool/epool

- 2023.12.27 关于 Redis 数据类型 List 常用命令

- “关爱长者 托起夕阳无限美好”清峰公益开展寒冬暖心慰问活动

- 【Java+SpringBoot】房屋交易系统(源码+项目定制开发+代码讲解+答辩教学+计算机毕设选题)

- 飞致云开源社区月度动态报告(2023年12月)

- Docker-harbor私有仓库

- flutter设置windows是否显示标题栏和状态栏和全屏显示