Docker Networking

1 Docker network commands

docker network COMMAND

COMMAND:

- connect Connect a container to a network

- create Create a network

- disconnect Disconnect a container from a network

- inspect Display detailed information on one or more networks

- ls List networks

- prune Remove all unused networks

- rm Remove one or more networks

List the three networks that Docker creates by default when it starts:

$ docker network ls

NETWORK ID NAME DRIVER SCOPE

c2c9bb7a06a5 bridge bridge local

7abdab89da53 host host local

a3a93d075f92 none null local

Create a network named mywork. If no driver is specified, the network uses the bridge driver by default:

$ docker network create mywork

125ce095e4ceebbe305641d42a949c36d3f8746d8571c7a98933752484e9c09b

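Options for setting the network mode of a container:

- bridge mode: --network bridge

- host mode: --network host

- none mode: --network none

- container mode: --network container:NAME (or the container ID)

List the networks again. The newly created network, mywork, appears with the bridge driver: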

$ docker network ls

NETWORK ID NAME DRIVER SCOPE

c2c9bb7a06a5 bridge bridge local

7abdab89da53 host host local

125ce095e4ce mywork bridge local

a3a93d075f92 none null local

Inspect the details of a network:

$ docker network inspect mywork

[

{

"Name": "mywork",

"Id": "125ce095e4ceebbe305641d42a949c36d3f8746d8571c7a98933752484e9c09b",

"Created": "2024-01-13T13:10:39.926239688+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

...

Remove a network:

$ docker network rm mywork

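2 Network modes

Libnetwork is the networking library Docker uses to connect containers to a network when they start. It gives containers network access and lets the host and the containers communicate with each other.

Libnetwork offers four typical network modes, which together cover most single-host container scenarios.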

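- bridge mode: assigns each container its own IP address and connects the container to a virtual bridge on the host named docker0. Containers use this mode by default.

- host mode: the container does not get its own virtual network interface or IP address. Processes in the container use the host's network directly, that is, the host's IP address and ports.

- none mode: no network configuration is applied to the container. This is useful for building a container with no network access, for example to protect data.

- container mode: the new container does not create its own network interface or configure its own IP address. It shares the IP address and port range of a specified existing container.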
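The mapping from the four modes to the --network option can be sketched as a small helper. This is only an illustration: the function name docker_run_args and its parameters are hypothetical, and it builds the command line without running Docker.

```python
from typing import List, Optional


def docker_run_args(name: str, image: str, mode: str = "bridge",
                    target: Optional[str] = None) -> List[str]:
    """Build the argv for `docker run` with the given network mode.

    `mode` is bridge, host, none, container, or the name of a
    user-defined network. `target` is only used for container mode.
    (Hypothetical helper, not part of Docker.)
    """
    if mode == "container":
        if not target:
            raise ValueError("container mode needs a container name or ID")
        network = f"container:{target}"
    else:
        network = mode
    return ["docker", "run", "-it", "--name", name, "--network", network, image]


# Second container sharing the network of an existing container "alpine1".
print(" ".join(docker_run_args("alpine2", "alpine:latest", "container", "alpine1")))
```

Only container mode needs a second value (the container to share with); the other modes pass the mode name straight through.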

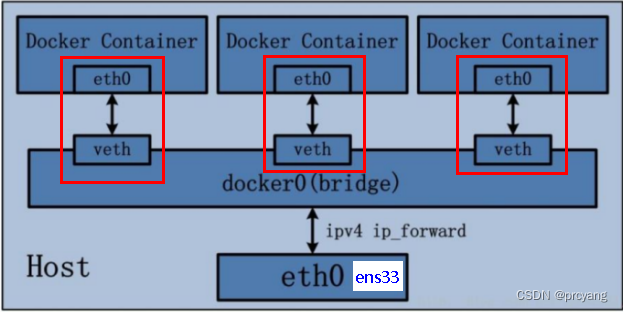

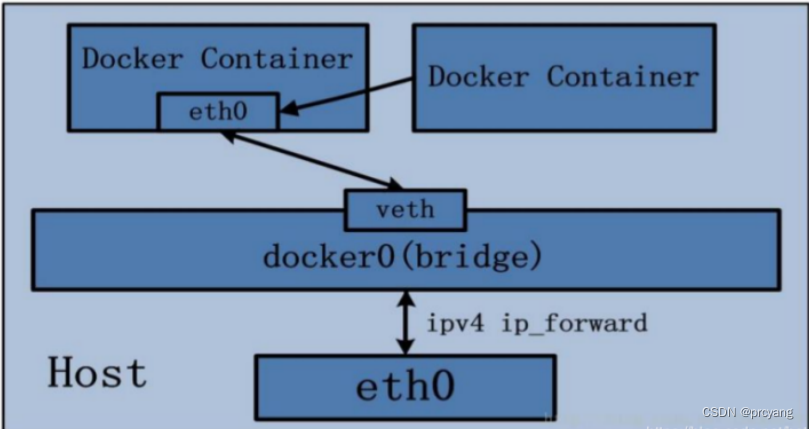

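2.1 bridge mode

When the Docker service starts, it creates a virtual bridge named docker0 by default. The bridge connects other physical or virtual network interfaces at the kernel level, which effectively places all containers and the local host on the same network. Docker assigns docker0 a default IP address and subnet mask so that the host and the containers, as well as the containers themselves, can communicate through the bridge.

Check docker0 on the host: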

$ ifconfig

docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

inet6 fe80::42:c1ff:fe52:a0c3 prefixlen 64 scopeid 0x20<link>

ether 02:42:c1:52:a0:c3 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 5 bytes 526 (526.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

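- Docker uses a Linux bridge: it creates a virtual container bridge (docker0) on the host. When Docker starts a container, it assigns the container an IP address from the bridge's subnet, called the Container-IP, and the bridge acts as each container's default gateway. Because all containers on the same host attach to the same bridge, they can communicate directly using their Container-IPs.

- If docker run is given no --network option, the container uses bridge mode and attaches to docker0. Running ifconfig on the host shows docker0 and any networks you created yourself. In that output, eth0, eth1, eth2, ... are the first, second, third, ... network interfaces, and lo is the loopback interface (127.0.0.1, i.e. localhost).

- For each container, a pair of peer virtual interfaces is created on the docker0 bridge: one end is a veth interface, the other is named eth0, and the two are matched as a pair.

(Note: on a host with a single network interface, the host's eth0 may have a different name, such as ens33; the name varies by system.)

- docker0 is the bridge for the whole host. It behaves like a switch with many ports, each of which is a veth interface. Docker creates one virtual interface on the host and one inside the container and links the two together (such a pair is called a veth pair);

- each container instance also has a network interface inside it, named eth0;

- each veth on docker0 is matched one-to-one with the eth0 inside one container.

In this way, all containers on the host are connected to this internal network. Two containers on the same network each receive an IP address from the bridge's subnet and use the bridge as their gateway, so they can reach each other.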
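The addressing described above can be checked with Python's standard ipaddress module. This is a minimal sketch that uses the docker0 address and netmask from the ifconfig output; the helper name on_docker0 is made up for illustration.

```python
import ipaddress

# docker0 address and netmask as shown in the ifconfig output.
docker0 = ipaddress.ip_interface("172.17.0.1/255.255.0.0")
network = docker0.network

print(network)                    # 172.17.0.0/16
print(network.broadcast_address)  # 172.17.255.255


def on_docker0(ip: str) -> bool:
    """Return True if `ip` belongs to the docker0 subnet."""
    return ipaddress.ip_address(ip) in network


print(on_docker0("172.17.0.2"))    # True: a container address on the bridge
print(on_docker0("172.25.54.84"))  # False: the host's eth0 address
```

Any address that passes this check can use 172.17.0.1 as its gateway without routing, which is why containers on the default bridge reach each other directly.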

Hands-on

Check the host's network before creating any containers. Only three interfaces are present: lo, eth0, and docker0.

$ ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:15:5d:c2:a9:cf brd ff:ff:ff:ff:ff:ff

inet 172.25.54.84/20 brd 172.25.63.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::215:5dff:fec2:a9cf/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:c1:52:a0:c3 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:c1ff:fe52:a0c3/64 scope link

valid_lft forever preferred_lft forever

Start two bridge-mode containers:

# Explicitly set the network mode to bridge

$ docker run -it --name alpine1 --network bridge alpine:latest

# No network mode specified; defaults to bridge

$ docker run -it --name alpine2 alpine:latest

Use inspect to check each container's network. Both containers are in bridge mode and both have "Gateway": "172.17.0.1" (docker0 on the host), but their addresses differ: "IPAddress": "172.17.0.2" and "IPAddress": "172.17.0.3".

# Network of alpine1

$ docker inspect alpine1 | tail -n 20

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "c2c9bb7a06a52ef763a1fbad5b16fcdaa3e4d8b4f61e1b403a397d05a4de7c3a",

"EndpointID": "19a5e289931f363c68d823861a957f39ad1449150fb12f196e3f31b3b349776a",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.2",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:11:00:02",

"DriverOpts": null

}

}

}

}

]

# Network of alpine2

$ docker inspect alpine2 | tail -n 20

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "c2c9bb7a06a52ef763a1fbad5b16fcdaa3e4d8b4f61e1b403a397d05a4de7c3a",

"EndpointID": "e1f72bd0d0679c4d475aabc3e981e441f9c6bd8e424c3c79e5f60873cdefc98d",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.3",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:11:00:03",

"DriverOpts": null

}

}

}

}

]
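In the two inspect outputs above, the last four bytes of each MacAddress are the container's IPv4 address written in hexadecimal (172.17.0.2 becomes ac:11:00:02). The sketch below reproduces that relationship. The "02:42" prefix is taken from the output shown here, the function name is hypothetical, and other Docker versions or configurations may assign MAC addresses differently.

```python
import ipaddress


def mac_from_ipv4(ip: str, prefix: str = "02:42") -> str:
    """Append the four IPv4 octets, in hex, to a two-byte prefix.

    Mirrors the pattern visible in this article's output; it is an
    observation, not a guaranteed Docker rule.
    """
    octets = ipaddress.IPv4Address(ip).packed
    return prefix + ":" + ":".join(f"{b:02x}" for b in octets)


print(mac_from_ipv4("172.17.0.2"))  # 02:42:ac:11:00:02
print(mac_from_ipv4("172.17.0.3"))  # 02:42:ac:11:00:03
```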

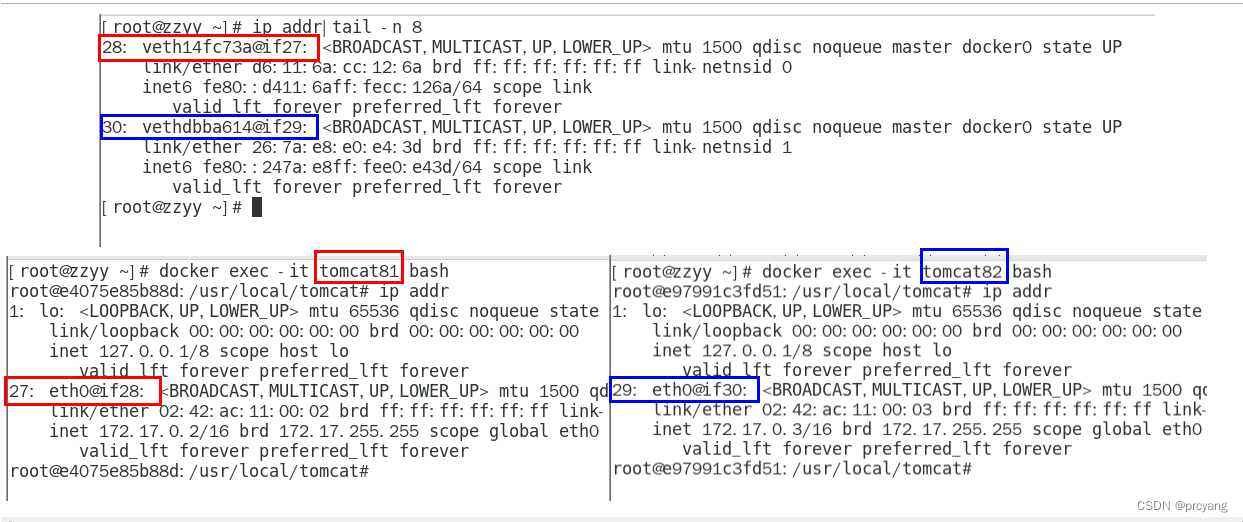

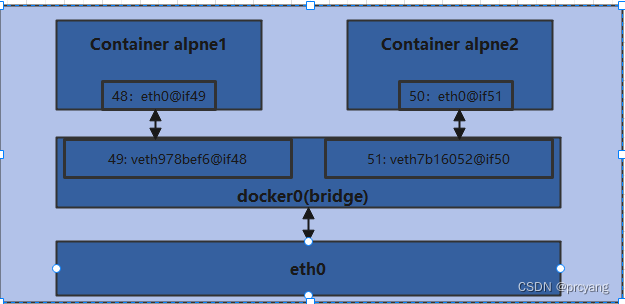

Check the host's network after creating the two containers. Two interfaces have been added: 49: veth978bef6@if48 and 51: veth7b16052@if50:

$ ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:15:5d:c2:a9:cf brd ff:ff:ff:ff:ff:ff

inet 172.25.54.84/20 brd 172.25.63.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::215:5dff:fec2:a9cf/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:c1:52:a0:c3 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:c1ff:fe52:a0c3/64 scope link

valid_lft forever preferred_lft forever

49: veth978bef6@if48: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 66:b9:fe:aa:1c:6a brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::64b9:feff:feaa:1c6a/64 scope link

valid_lft forever preferred_lft forever

51: veth7b16052@if50: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether fe:c1:8c:92:b9:89 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::fcc1:8cff:fe92:b989/64 scope link

valid_lft forever preferred_lft forever

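Check the network inside each container. Besides lo, each container has one more interface:

alpine1 has "48: eth0@if49", which is paired with "49: veth978bef6@if48" on the host.

alpine2 has "50: eth0@if51", which is paired with "51: veth7b16052@if50" on the host.

# Network of alpine1 (ip addr run inside the container)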

$ ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

48: eth0@if49: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

# Network of alpine2 (ip addr run inside the container)

$ ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

50: eth0@if51: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever
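The pairing rule used above (an interface "N: name@ifM" is the peer of the interface with index M on the other side) can be sketched as a small parser. The interface lines are copied from the outputs in this section and shortened; the function names are hypothetical.

```python
import re
from typing import Dict, Tuple

# Matches lines such as "49: veth978bef6@if48: <...>".
LINK_RE = re.compile(r"^(\d+): ([\w.-]+)@if(\d+):")


def parse_links(ip_addr_output: str) -> Dict[int, Tuple[str, int]]:
    """Map interface index -> (interface name, peer index)."""
    links = {}
    for line in ip_addr_output.splitlines():
        m = LINK_RE.match(line.strip())
        if m:
            links[int(m.group(1))] = (m.group(2), int(m.group(3)))
    return links


def find_pairs(host: Dict[int, Tuple[str, int]],
               containers: Dict[str, Dict[int, Tuple[str, int]]]) -> Dict[str, str]:
    """Return container name -> host veth whose indexes point at each other."""
    pairs = {}
    for cname, links in containers.items():
        for idx, (_ifname, peer) in links.items():
            if peer in host and host[peer][1] == idx:
                pairs[cname] = host[peer][0]
    return pairs


host = parse_links("""
49: veth978bef6@if48: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500
51: veth7b16052@if50: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500
""")
containers = {
    "alpine1": parse_links("48: eth0@if49: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500"),
    "alpine2": parse_links("50: eth0@if51: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500"),
}
print(find_pairs(host, containers))
# {'alpine1': 'veth978bef6', 'alpine2': 'veth7b16052'}
```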

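At this point, each container's eth0 is paired with a veth interface on the host, and both veth interfaces are attached to docker0.

A container's IP address may change after a restart

Stop both containers, then start alpine2 first:

$ docker stop alpine1

$ docker stop alpine2

$ docker start alpine2

# Network of alpine2 (ip addr run inside the container)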

$ ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

50: eth0@if51: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

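Checking alpine2, which was started first, shows that its IP address changed from 172.17.0.3 (before the restart) to 172.17.0.2 (previously alpine1's address). In bridge mode, container IP addresses are assigned automatically and can change when a container restarts.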
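The behaviour observed above is what you would expect if addresses are handed out from the lowest free one and released when a container stops. The toy model below makes that assumption explicit. It is not Docker's actual IPAM code, and the class ToyBridgeIpam is hypothetical.

```python
import ipaddress
from typing import Dict


class ToyBridgeIpam:
    """Toy allocator: always hands out the lowest free host address.

    Assumption for illustration only; Docker's real allocator may
    behave differently.
    """

    def __init__(self, subnet: str, gateway: str) -> None:
        self.network = ipaddress.ip_network(subnet)
        self.in_use = {ipaddress.ip_address(gateway)}
        self.leases: Dict[str, object] = {}

    def start(self, container: str) -> str:
        for addr in self.network.hosts():
            if addr not in self.in_use:
                self.in_use.add(addr)
                self.leases[container] = addr
                return str(addr)
        raise RuntimeError("subnet exhausted")

    def stop(self, container: str) -> None:
        # A stopped container gives its address back to the pool.
        self.in_use.discard(self.leases.pop(container))


ipam = ToyBridgeIpam("172.17.0.0/16", "172.17.0.1")
print(ipam.start("alpine1"))  # 172.17.0.2
print(ipam.start("alpine2"))  # 172.17.0.3
ipam.stop("alpine1")
ipam.stop("alpine2")
print(ipam.start("alpine2"))  # 172.17.0.2, alpine1's old address
```

If a service needs a stable way to reach a container, the container name on a user-defined network is a safer handle than the IP address.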

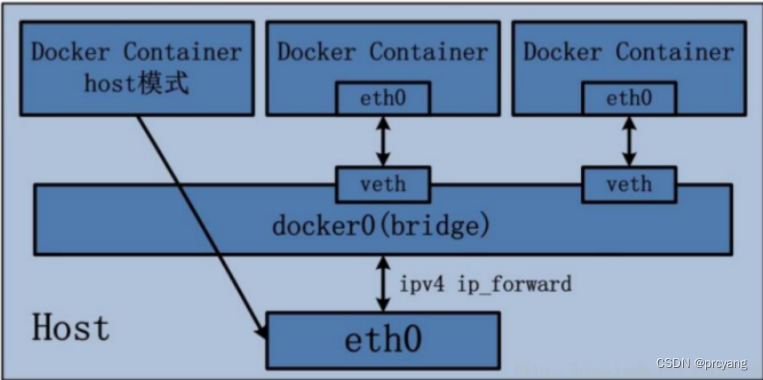

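2.2 host mode

The container uses the host's IP address directly to communicate with the outside world, so no NAT is needed.

The container does not get its own network namespace; it shares the host's network namespace. It does not create a virtual network interface of its own and uses the host's IP address and ports instead.

Start two host-mode containers and compare the network of the host with that of the two containers:

The ip addr output on the host (both before and after starting the containers) and inside both containers is identical (only the three interfaces lo, eth0, and docker0).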

$ docker run -it --name alpne1 --network host alpine:latest

$ docker run -it --name alpne2 --network host alpine:latest

$ ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP qlen 1000

link/ether 00:15:5d:c2:a9:cf brd ff:ff:ff:ff:ff:ff

inet 172.25.54.84/20 brd 172.25.63.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::215:5dff:fec2:a9cf/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

link/ether 02:42:c1:52:a0:c3 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:c1ff:fe52:a0c3/64 scope link

valid_lft forever preferred_lft forever

Inspect the container details. IPAddress and Gateway are empty because the container shares the host's IP address:

$ docker inspect alpne1| tail -n 20

"Networks": {

"host": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "7abdab89da53b175ba407fa6dd7d589fdede9bb2e9ae65a3b8b983786eba40eb",

"EndpointID": "6a0d67612804ff89b2518f1fdbbec33c7fdcb5f2b3cecbd4c97faae55cfd5ae9",

"Gateway": "",

"IPAddress": "",

"IPPrefixLen": 0,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "",

"DriverOpts": null

}

}

}

}

]

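In host mode, publishing ports when starting a container has no effect: whatever port the process in the container listens on is used directly on the host. As a result, you cannot start several containers from the same image if they need the same port, and no two host-mode containers can listen on the same port.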
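The port restriction follows from ordinary socket rules: within one network namespace, a second socket cannot bind an address and port that is already bound. The sketch below demonstrates this with two plain TCP sockets on the loopback interface. It involves no Docker at all, and the function name is hypothetical.

```python
import socket


def second_bind_fails(host: str = "127.0.0.1") -> bool:
    """Return True if a second socket cannot bind an already-bound port."""
    first = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    second = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        first.bind((host, 0))  # port 0: let the OS pick a free port
        first.listen()
        port = first.getsockname()[1]
        try:
            second.bind((host, port))
        except OSError:
            return True  # "address already in use"
        return False
    finally:
        first.close()
        second.close()


print(second_bind_fails())
```

Two host-mode containers are in exactly this situation, because both run in the host's network namespace.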

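Start one bridge-mode container and one host-mode container. Starting the host-mode container with a published port prints a warning that the port mapping is ignored, and the PORTS column of docker ps is empty for the host-mode container: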

$ docker run -it --name tomcat-host-1 --network host -p 8082:8080 billygoo/tomcat8-jdk8:latest /bin/bash

WARNING: Published ports are discarded when using host network mode

$ docker run -it --name tomcat-bridge-1 --network bridge -p 8083:8080 billygoo/tomcat8-jdk8:latest /bin/bash

$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

52d9c9a45063 billygoo/tomcat8-jdk8:latest "/bin/bash" About a minute ago Up About a minute 0.0.0.0:8083->8080/tcp, :::8083->8080/tcp tomcat-bridge-1

7d9a73179000 billygoo/tomcat8-jdk8:latest "/bin/bash" 3 minutes ago Up 3 minutes tomcat-host-1

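2.3 none mode

In none mode, networking is disabled: the container is not attached to any network and has only the lo interface (127.0.0.1, the local loopback). This suits containers that need no network access and helps protect data.

Start a none-mode container and run ip addr inside it. Only lo is present, and no new interface appears on the host.

$ docker run -it --name tomcat-none-1 --network none billygoo/tomcat8-jdk8 /bin/bash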

root@d8d3864f2f1b:/usr/local/tomcat# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

Inspecting the container's network shows no Gateway or IPAddress:

$ docker inspect d8| tail -n 20

"Networks": {

"none": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "a3a93d075f922cc6411dc39871c0144a7156b7b717f2b8c1e6aae5c20f9089b2",

"EndpointID": "4dd3a04cfc918c44ebded0d569e6f43cb230c857da4a58d8b56bcede75035b16",

"Gateway": "",

"IPAddress": "",

"IPPrefixLen": 0,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "",

"DriverOpts": null

}

}

}

}

]

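2.4 container mode

The new container does not create its own network interface or configure its own IP address. It shares the IP address, port range, and so on of a specified container. Apart from networking, the two containers remain isolated from each other, for example in their file systems and process lists.

Option format: --network container:<container name>

Start a bridge-mode container and run ip addr inside it: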

$ docker run -it --name alpine1 --network bridge alpine:latest /bin/sh

/ # ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

35: eth0@if36: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

Start a second container that shares the first container's network:

$ docker run -it --name alpine2 --network container:alpine1 alpine:latest /bin/sh

/ # ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

35: eth0@if36: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

Check ip addr on the host:

$ ip addr

36: veth5a2fd75@if35: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether ae:67:f6:07:e9:c7 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::ac67:f6ff:fe07:e9c7/64 scope link

valid_lft forever preferred_lft forever

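The host has only one veth, and eth0 is identical inside both containers, which means they share a single IP address.

Stop the first container and run ip addr in the second container. Only lo remains:

$ docker stop alpine1

# ip addr run inside the second container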

$ ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

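In container mode, the second container cannot publish ports of its own, and processes in the two containers cannot listen on the same port because they share one network namespace.

Start two containers and try to publish container port 8080 from both. Docker rejects the -p option on the second container, so the second container fails to start: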

$ docker run -it --network bridge --name alpine1 -p 8081:8080 alpine:latest

$ docker run -it --network container:alpine1 --name alpine2 -p 8082:8080 alpine:latest

docker: Error response from daemon: conflicting options: port publishing and the container type network mode.
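How -p interacts with the network mode, as seen in this article's output, can be summarized in a few lines. This is a hedged sketch: check_publish is a hypothetical function that mirrors only the cases demonstrated here (bridge, host, container) and does not call Docker.

```python
from typing import List


def check_publish(network_mode: str, ports: List[str]) -> str:
    """Classify a -p request by network mode, following the messages
    shown in this article. Hypothetical helper for illustration."""
    if not ports:
        return "ok"
    if network_mode == "host":
        # WARNING: Published ports are discarded when using host network mode
        return "discarded"
    if network_mode.startswith("container:"):
        # conflicting options: port publishing and the container type network mode
        return "error"
    if network_mode == "none":
        return "not covered"  # this case is not demonstrated in the article
    return "published"  # default bridge or a user-defined bridge network


print(check_publish("bridge", ["8081:8080"]))             # published
print(check_publish("host", ["8082:8080"]))               # discarded
print(check_publish("container:alpine1", ["8082:8080"]))  # error
```

To expose a service that runs in the second container, publish the port on the first container, since both share its network.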

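2.5 User-defined networks

Key feature: a user-defined network maintains the mapping between container names and IP addresses, so containers can reach each other both by IP address and by container name.

Command to create a user-defined network: docker network create <network name>

Option to attach a container to it: --network <network name>

First, start two containers on the default bridge network: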

$ docker run -it --network bridge --name alpine1 -p 8081:8080 alpine:latest

$ docker run -it --network bridge --name alpine2 -p 8082:8080 alpine:latest

From inside the containers, pinging the other container by IP address works, but pinging it by container name fails:

$ ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2): 56 data bytes

64 bytes from 172.17.0.2: seq=0 ttl=64 time=0.073 ms

$ ping alpine1

ping: bad address 'alpine1'

Create a user-defined network. It also uses the bridge driver by default:

$ docker network create mynetwork

$ docker network ls

NETWORK ID NAME DRIVER SCOPE

c2c9bb7a06a5 bridge bridge local

7abdab89da53 host host local

a3a93d075f92 none null local

68a4f0aac475 mynetwork bridge local

Create two containers on the new user-defined network:

# Container 1

$ docker run -it --name alpine1 --network mynetwork alpine:latest

$ ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

44: eth0@if45: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP

link/ether 02:42:ac:13:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.19.0.2/16 brd 172.19.255.255 scope global eth0

valid_lft forever preferred_lft forever

# Container 2

$ docker run -it --name alpine2 --network mynetwork alpine:latest

/ # ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

46: eth0@if47: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP

link/ether 02:42:ac:13:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.19.0.3/16 brd 172.19.255.255 scope global eth0

valid_lft forever preferred_lft forever

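The two containers have the same kind of network setup as with --network bridge (lo plus an eth0 paired with a veth on the host), only on a different subnet.

Ping between the two containers. Both the IP address and the container name work.

# Ping by IP address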

$ ping 172.19.0.2

PING 172.19.0.2 (172.19.0.2): 56 data bytes

64 bytes from 172.19.0.2: seq=0 ttl=64 time=0.060 ms

# Ping by container name

$ ping alpine1

PING alpine1 (172.19.0.2): 56 data bytes

64 bytes from 172.19.0.2: seq=0 ttl=64 time=0.112 ms

64 bytes from 172.19.0.2: seq=1 ttl=64 time=0.078 ms
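The name-to-IP mapping that makes the ping by name work can be read from the output of docker network inspect mynetwork, which lists attached containers under "Containers". The sketch below parses a trimmed sample of that JSON. The container IDs in the sample are placeholders, the addresses are the ones shown above, and name_table is a hypothetical helper.

```python
import json
from typing import Dict

# Trimmed sample shaped like `docker network inspect mynetwork` output.
# The keys "aaaa1111" and "bbbb2222" stand in for real container IDs.
SAMPLE = """
[
  {
    "Name": "mynetwork",
    "Driver": "bridge",
    "Containers": {
      "aaaa1111": {"Name": "alpine1", "IPv4Address": "172.19.0.2/16"},
      "bbbb2222": {"Name": "alpine2", "IPv4Address": "172.19.0.3/16"}
    }
  }
]
"""


def name_table(inspect_json: str) -> Dict[str, str]:
    """Return container name -> IPv4 address (prefix length removed)."""
    table = {}
    for network in json.loads(inspect_json):
        for endpoint in (network.get("Containers") or {}).values():
            table[endpoint["Name"]] = endpoint["IPv4Address"].split("/")[0]
    return table


print(name_table(SAMPLE))
# {'alpine1': '172.19.0.2', 'alpine2': '172.19.0.3'}
```

In practice you would feed the function the real command output instead of SAMPLE.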
