Adding an FPPI evaluation metric to YOLOv7

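In academic work, object detection models are mostly evaluated with mAP. mAP works well for comparing models, but it is not very intuitive. What does mAP = 0.9 actually mean? How many false detections occur per image on average? Which confidence threshold should be used? mAP cannot answer these questions, so sometimes we need other, more intuitive metrics.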

FPPI

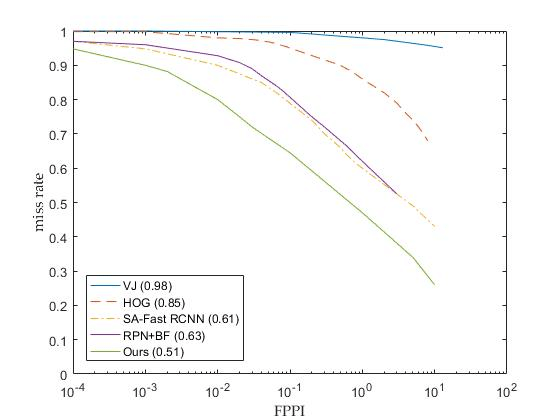

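FPPI stands for false positives per image: as the name suggests, it is the average number of false detections per image. It is a fairly common metric in object detection. Plotting the miss rate against FPPI gives a curve like the one in the figure shown here; the lower the curve, the better.

From the FPPI curve we can read off the miss rate at a given FPPI, which can guide the choice of the confidence threshold.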
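To make the definition concrete, here is a minimal sketch in plain Python. It assumes every detection has already been matched against the ground truth, so each one carries a confidence and a true-positive flag. The function name `fppi_and_miss_rate` and the toy numbers are illustrative only and are not part of YOLOv7.

```python
def fppi_and_miss_rate(detections, num_labels, num_images, conf_thres):
    """detections: list of (confidence, is_true_positive) pairs for one class."""
    kept = [is_tp for conf, is_tp in detections if conf >= conf_thres]
    tp = sum(kept)                    # detections that matched a ground-truth box
    fp = len(kept) - tp               # detections that matched nothing
    fppi = fp / num_images            # false positives per image
    miss_rate = 1 - tp / num_labels   # fraction of ground-truth objects missed
    return fppi, miss_rate


# Toy data (made up): 4 images, 5 ground-truth objects, 8 detections.
detections = [(0.95, True), (0.90, True), (0.80, False), (0.70, True),
              (0.60, False), (0.50, True), (0.30, False), (0.20, False)]

for thres in (0.25, 0.55, 0.85):
    fppi, mr = fppi_and_miss_rate(detections, num_labels=5, num_images=4, conf_thres=thres)
    print(f"conf >= {thres:.2f}: FPPI = {fppi:.2f}, miss rate = {mr:.2f}")
```

Raising the threshold lowers FPPI but raises the miss rate. That trade-off is exactly what the curve visualizes.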

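Adding FPPI to YOLOv7

FPPI implementation

In YOLOv7 the evaluation metrics are implemented in utils/metrics.py, so we only need to add the FPPI code there, modeled on the mAP metric: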

    # Add to utils/metrics.py, which already imports numpy as np, matplotlib.pyplot as plt and Path.
    def fppi_per_class(tp, conf, pred_cls, target_cls, image_num, plot=False, save_dir='.', names=(), return_plt=False):
        """ Compute the miss rate versus false positives per image (FPPI) curve for each class.
        # Arguments
            tp:  True positives (nparray, nx1 or nx10).
            conf:  Objectness value from 0-1 (nparray).
            pred_cls:  Predicted object classes (nparray).
            target_cls:  True object classes (nparray).
            image_num:  Number of images in the evaluation set.
            plot:  Plot the miss rate vs. FPPI curve
            save_dir:  Plot save directory
        # Returns
            miss_rate, fppi, miss_rate_at_fppi (see the note on return_plt at the end)
        """

        # Sort by objectness
        i = np.argsort(-conf)
        tp, conf, pred_cls = tp[i], conf[i], pred_cls[i]

        # Find unique classes
        unique_classes = np.unique(target_cls)
        nc = unique_classes.shape[0]  # number of classes

        # Build the miss rate vs. FPPI curve for each class
        px = np.linspace(0, 1, 1000)  # confidence grid used for interpolation
        r = np.zeros((nc, 1000))
        # Start from a miss rate of 1: a class with labels but no predictions misses everything
        miss_rate = np.ones((nc, 1000))
        fppi = np.zeros((nc, 1000))
        miss_rate_at_fppi = np.ones((nc, 3))  # miss rate at FPPI = 1, 0.1, 0.01
        p_miss_rate = np.array([1, 0.1, 0.01])  # the reference FPPI values
        for ci, c in enumerate(unique_classes):
            i = pred_cls == c
            n_l = (target_cls == c).sum()  # number of labels
            n_p = i.sum()  # number of predictions

            if n_p == 0 or n_l == 0:
                continue
            else:
                # Accumulate FPs and TPs
                fpc = (1 - tp[i]).cumsum(0)
                tpc = tp[i].cumsum(0)

                # Recall (column 0 is the IoU = 0.5 threshold)
                recall = tpc / (n_l + 1e-16)  # recall curve
                r[ci] = np.interp(-px, -conf[i], recall[:, 0], left=0)  # negative x, xp because xp decreases
                miss_rate[ci] = 1 - r[ci]

                # False positives per image
                fp_per_image = fpc / image_num
                fppi[ci] = np.interp(-px, -conf[i], fp_per_image[:, 0], left=0)

                # fppi[ci] decreases along px, so negate it to get increasing xp
                miss_rate_at_fppi[ci] = np.interp(-p_miss_rate, -fppi[ci], miss_rate[ci])

        fig = None  # stays None unless plot=True
        if plot:
            fig = plot_fppi_curve(fppi, miss_rate, miss_rate_at_fppi, Path(save_dir) / 'fppi_curve.png', names)

        if return_plt:
            # Note: fppi comes first here, unlike the default return below
            return fppi, miss_rate, miss_rate_at_fppi, fig

        return miss_rate, fppi, miss_rate_at_fppi

The computation is similar to that of mAP:

$$\mathrm{fppi} = \frac{\mathrm{fp}}{\mathrm{image\_num}}$$

$$\mathrm{missrate} = 1 - \mathrm{recall}$$

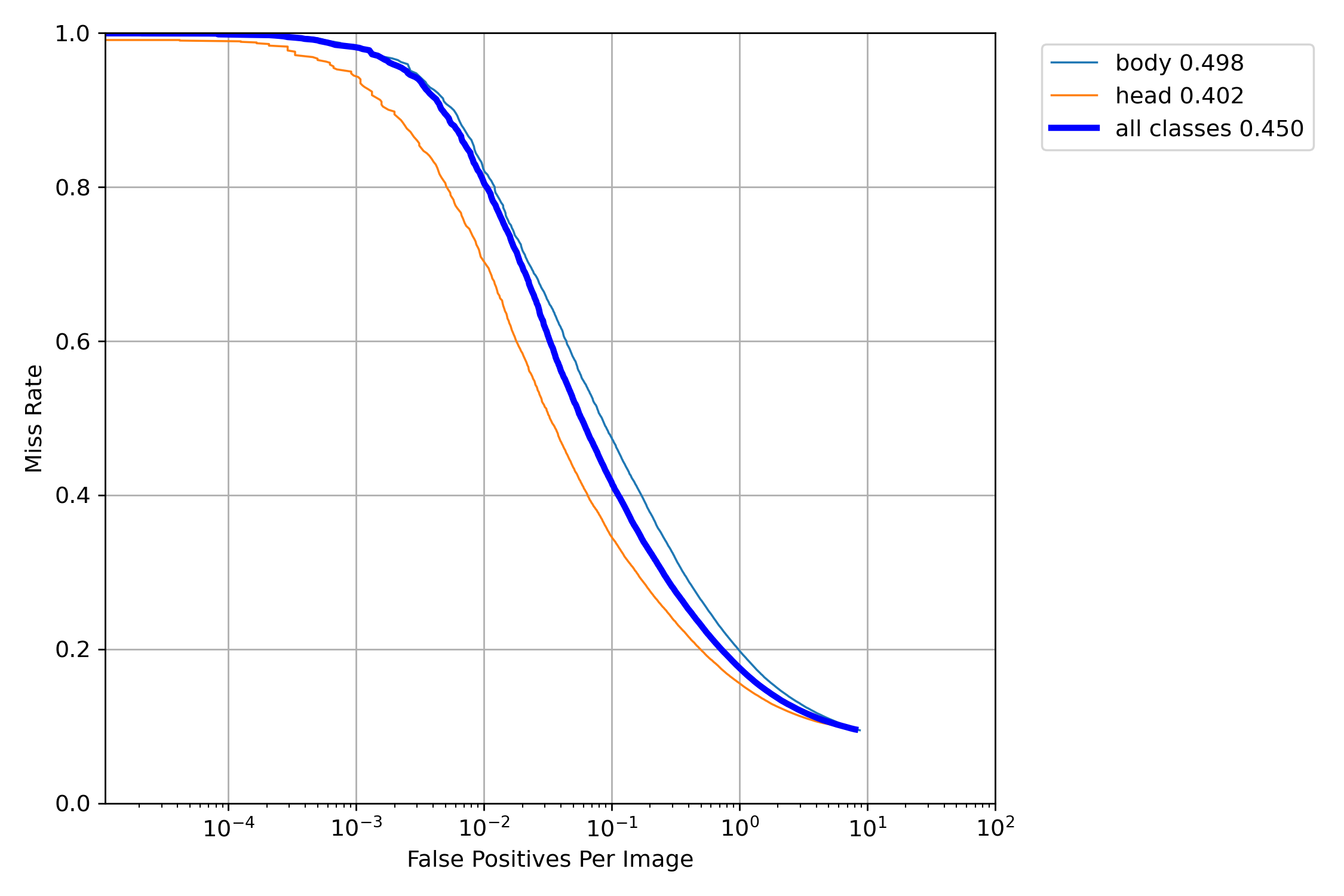

Plot the curve with FPPI on a logarithmic axis:

    def plot_fppi_curve(px, py, missrate_at_fppi, save_dir='fppi_curve.png', names=()):
        # px: FPPI per class, shape (nc, 1000); py: miss rate per class, shape (nc, 1000)
        fig, ax = plt.subplots(1, 1, figsize=(9, 6), tight_layout=True)
        py = np.stack(py, axis=1)  # -> shape (1000, nc)

        # Semi-log plot: FPPI on a logarithmic x-axis
        for i, y in enumerate(py.T):
            ax.semilogx(px[i], y, linewidth=1, label=f'{names[i]} {missrate_at_fppi[i].mean():.3f}')  # plot(fppi, miss rate)

        ax.semilogx(px.mean(0), py.mean(1), linewidth=3, color='blue', label='all classes %.3f' % missrate_at_fppi.mean())
        ax.set_xlabel('False Positives Per Image')
        ax.set_ylabel('Miss Rate')
        ax.set_xlim(1e-3, 100)  # a log axis cannot start at 0; 1e-3 is an arbitrary positive lower bound
        ax.set_ylim(0, 1)
        ax.grid(True)
        plt.legend(bbox_to_anchor=(1.04, 1), loc="upper left")
        fig.savefig(Path(save_dir), dpi=250)
        return fig

Calling it during training

In test.py, add the FPPI computation right below the mAP computation:

    # fppi_per_class must also be imported from utils.metrics at the top of test.py
    p, r, f1, mp, mr, map50, map, t0, t1, mfppi_1 = 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0
    ........
    ........
    stats = [np.concatenate(x, 0) for x in zip(*stats)]  # to numpy
    if len(stats) and stats[0].any():
        p, r, ap, f1, ap_class = ap_per_class(*stats, plot=plots, save_dir=save_dir, names=names)
        # image_num is the total number of evaluated images; it must be set earlier in test.py (not shown here)
        miss_rate, fppi, miss_rate_at_fppi = fppi_per_class(*stats, plot=plots, image_num=image_num, save_dir=save_dir, names=names)
        mfppi_1 = miss_rate_at_fppi[:, 0].mean()  # mean miss rate at FPPI = 1
        ap50, ap = ap[:, 0], ap.mean(1)  # AP@0.5, AP@0.5:0.95
        mp, mr, map50, map = p.mean(), r.mean(), ap50.mean(), ap.mean()
        nt = np.bincount(stats[3].astype(np.int64), minlength=nc)  # number of targets per class
    else:
        nt = torch.zeros(1)

    # Print results
    pf = "%20s" + "%12i" * 2 + "%12.3g" * 5  # print format (one extra column for mfppi_1)
    print(pf % ("all", seen, nt.sum(), mp, mr, map50, map, mfppi_1))
    ········
    # Return fppi as well
    return (mp, mr, map50, map, *(loss.cpu() / len(dataloader)).tolist(), mfppi_1), maps, t
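One detail in the snippet above is easy to get wrong: the print format needs exactly one conversion specifier per value, so adding `mfppi_1` requires raising the `"%12.3g"` repeat count to 5. The short check below illustrates this with made-up numbers; `num_targets` and `map_` are hypothetical stand-ins for `nt.sum()` and `map`.

```python
# Made-up values standing in for the real evaluation results.
seen, num_targets = 500, 1234
mp, mr, map50, map_, mfppi_1 = 0.81, 0.77, 0.83, 0.61, 0.12

pf = "%20s" + "%12i" * 2 + "%12.3g" * 5  # name, 2 integer counts, 5 float metrics
values = ("all", seen, num_targets, mp, mr, map50, map_, mfppi_1)

# One conversion specifier per value; a mismatch would raise TypeError when formatting.
assert pf.count("%") == len(values)

line = pf % values
print(line)
```

If the repeat count were left at 4, the `%` formatting would fail with a `TypeError` because not all arguments are converted.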

Adding it to wandb. I usually use wandb, so I added this step. If you do not use wandb, you can leave the return value of the function above unchanged (without fppi), and then train.py does not need to be modified.

In train.py:

    # Log
    tags = [
        "train/box_loss",
        "train/obj_loss",
        "train/cls_loss",  # train loss
        "metrics/precision",
        "metrics/recall",
        "metrics/mAP_0.5",
        "metrics/mAP_0.5:0.95",
        "val/box_loss",
        "val/obj_loss",
        "val/cls_loss",  # val loss
        "val/missrate@fppi=1",
        "x/lr0",
        "x/lr1",
        "x/lr2",
    ]  # params
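The position of the new tag matters. The sketch below assumes that train.py pairs these tags with the logged values purely by position (for example with `zip`), so `"val/missrate@fppi=1"` has to sit right after the validation losses, where `mfppi_1` appears in the tuple returned by test.py. All numbers and the variable names `train_losses`, `results` and `lr` are made up for illustration.

```python
tags = [
    "train/box_loss", "train/obj_loss", "train/cls_loss",
    "metrics/precision", "metrics/recall", "metrics/mAP_0.5", "metrics/mAP_0.5:0.95",
    "val/box_loss", "val/obj_loss", "val/cls_loss",
    "val/missrate@fppi=1",
    "x/lr0", "x/lr1", "x/lr2",
]

# Made-up numbers, in the order the code in this article produces them.
train_losses = [0.040, 0.020, 0.010]                           # box, obj, cls
results = [0.81, 0.77, 0.83, 0.61, 0.035, 0.018, 0.009, 0.12]  # test.py output, mfppi_1 last
lr = [0.01, 0.01, 0.01]

values = train_losses + results + lr
assert len(values) == len(tags)  # zip() would silently drop unmatched items otherwise

logged = dict(zip(tags, values))
print(logged["val/missrate@fppi=1"])
```

Because `zip` truncates silently, a missing or misplaced tag shifts every later metric onto the wrong name without raising an error, so the length check is worth keeping.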

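Results

During training, the FPPI metric (miss rate at FPPI = 1) is printed after mAP. The FPPI curve is plotted once training finishes and whenever test.py is run.

Conclusion

This article briefly described how to add the FPPI evaluation metric to YOLOv7. The metric gives an intuitive picture of model performance and helps guide threshold selection.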
