Common kubectl Commands (Global Overview)

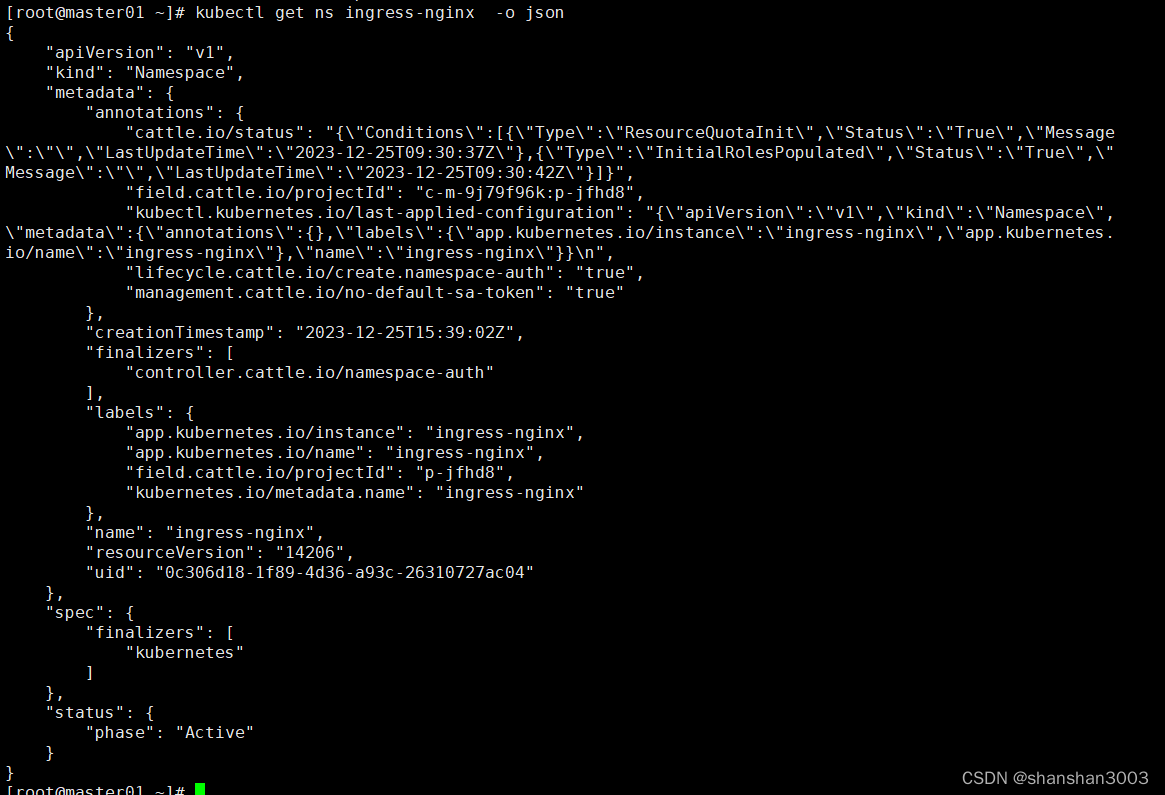

Output format: -o

[cmd] -o json|yaml|wide

For example, to print a resource as JSON:

kubectl get ns ingress-nginx -o json
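Besides json, yaml and wide, kubectl also accepts `-o jsonpath=...`, which extracts a single field from the same JSON document. The following is a minimal sketch, not the author's method: the helper name `ns_phase` and the `KUBECTL` override variable are assumptions introduced here for illustration (setting `KUBECTL=echo` previews the command instead of running it).

```shell
# Hypothetical helper: print the phase (e.g. Active) of one namespace.
# KUBECTL is an assumed override; it defaults to the real kubectl binary.
ns_phase() {
  "${KUBECTL:-kubectl}" get ns "$1" -o jsonpath='{.status.phase}'
}

# Usage against a live cluster:
#   ns_phase ingress-nginx
```

Using jsonpath avoids piping the full JSON through another tool when only one value is needed.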

Getting basic information: get

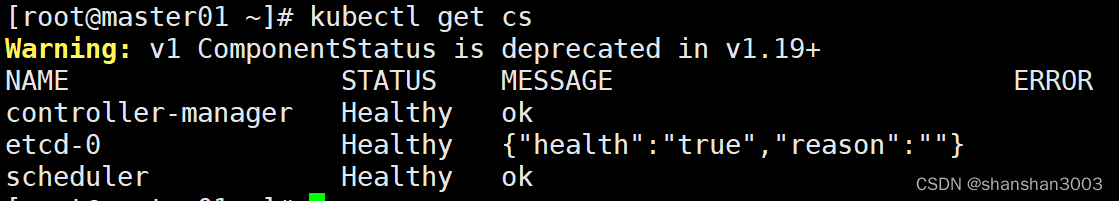

# View basic cluster information

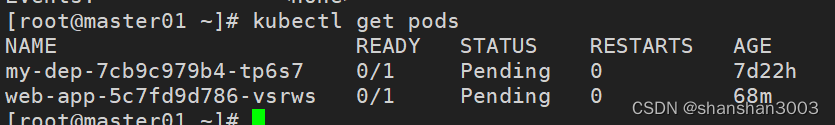

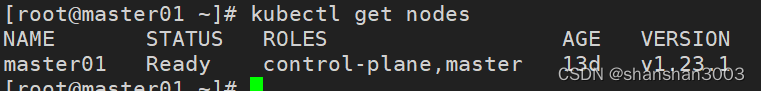

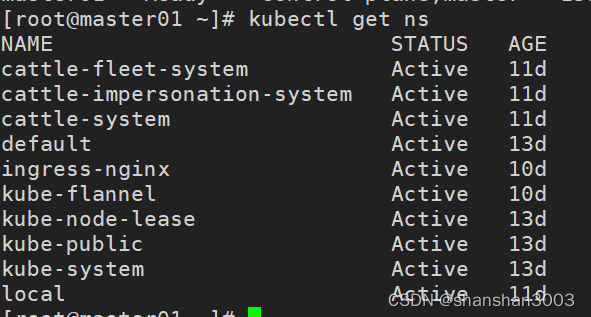

kubectl get cs|pods|nodes|ns|svc|rc|deployments

kubectl get cs

kubectl get pods

kubectl get nodes

kubectl get ns

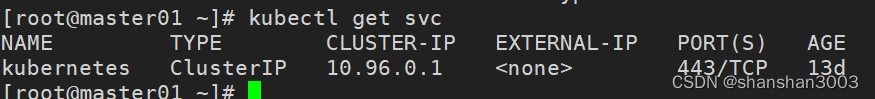

kubectl get svc

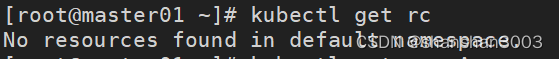

kubectl get rc

kubectl get deployments

Viewing detailed information: describe|logs

Viewing descriptive information: describe

kubectl describe node|pod|deployment node-name|pod-name|deployment-name

kubectl describe node master01

[root@master01 ~]# kubectl describe node master01
Name:               master01
Roles:              control-plane,master
Labels:             beta.kubernetes.io/arch=amd64
                    beta.kubernetes.io/os=linux
                    kubernetes.io/arch=amd64
                    kubernetes.io/hostname=master01
                    kubernetes.io/os=linux
                    node-role.kubernetes.io/control-plane=
                    node-role.kubernetes.io/master=
                    node.kubernetes.io/exclude-from-external-load-balancers=
Annotations:        flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"a6:4d:30:a1:32:aa"}
                    flannel.alpha.coreos.com/backend-type: vxlan
                    flannel.alpha.coreos.com/kube-subnet-manager: true
                    flannel.alpha.coreos.com/public-ip: 192.168.182.127
                    kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock
                    management.cattle.io/pod-limits: {"memory":"340Mi"}
                    management.cattle.io/pod-requests: {"cpu":"950m","memory":"290Mi","pods":"9"}
                    node.alpha.kubernetes.io/ttl: 0
                    volumes.kubernetes.io/controller-managed-attach-detach: true
CreationTimestamp:  Fri, 22 Dec 2023 23:20:54 +0800
Taints:             node-role.kubernetes.io/master:NoSchedule
Unschedulable:      false
Lease:
  HolderIdentity:  master01
  AcquireTime:     <unset>
  RenewTime:       Fri, 05 Jan 2024 22:28:05 +0800
Conditions:
  Type                 Status  LastHeartbeatTime                 LastTransitionTime                Reason                       Message
  ----                 ------  -----------------                 ------------------                ------                       -------
  NetworkUnavailable   False   Fri, 05 Jan 2024 18:35:20 +0800   Fri, 05 Jan 2024 18:35:20 +0800   FlannelIsUp                  Flannel is running on this node
  MemoryPressure       False   Fri, 05 Jan 2024 22:25:12 +0800   Wed, 03 Jan 2024 22:40:27 +0800   KubeletHasSufficientMemory   kubelet has sufficient memory available
  DiskPressure         False   Fri, 05 Jan 2024 22:25:12 +0800   Wed, 03 Jan 2024 22:40:27 +0800   KubeletHasNoDiskPressure     kubelet has no disk pressure
  PIDPressure          False   Fri, 05 Jan 2024 22:25:12 +0800   Wed, 03 Jan 2024 22:40:27 +0800   KubeletHasSufficientPID      kubelet has sufficient PID available
  Ready                True    Fri, 05 Jan 2024 22:25:12 +0800   Wed, 03 Jan 2024 22:40:27 +0800   KubeletReady                 kubelet is posting ready status
Addresses:
  InternalIP:  192.168.182.127
  Hostname:    master01
Capacity:
  cpu:                2
  ephemeral-storage:  17394Mi
  hugepages-1Gi:      0
  hugepages-2Mi:      0
  memory:             3990120Ki
  pods:               110
Allocatable:
  cpu:                2
  ephemeral-storage:  16415037823
  hugepages-1Gi:      0
  hugepages-2Mi:      0
  memory:             3887720Ki
  pods:               110
System Info:
  Machine ID:                 b5c63e049a3344f9a3bb0d4147e8237d
  System UUID:                45da4d56-daf6-0055-e7a7-b414b6f8c4ac
  Boot ID:                    960d557f-4c01-48fd-b67d-ed3ce46a2935
  Kernel Version:             5.4.265-1.el7.elrepo.x86_64
  OS Image:                   CentOS Linux 7 (Core)
  Operating System:           linux
  Architecture:               amd64
  Container Runtime Version:  docker://24.0.7
  Kubelet Version:            v1.23.1
  Kube-Proxy Version:         v1.23.1
PodCIDR:                      10.244.0.0/24
PodCIDRs:                     10.244.0.0/24
Non-terminated Pods:          (9 in total)
  Namespace      Name                                    CPU Requests  CPU Limits  Memory Requests  Memory Limits  Age
  ---------      ----                                    ------------  ----------  ---------------  -------------  ---
  cattle-system  cattle-cluster-agent-694c664f67-m26qw   0 (0%)        0 (0%)      0 (0%)           0 (0%)         8d
  kube-flannel   kube-flannel-ds-554jc                   100m (5%)     0 (0%)      50Mi (1%)        0 (0%)         10d
  kube-system    coredns-6d8c4cb4d-8qkwj                 100m (5%)     0 (0%)      70Mi (1%)        170Mi (4%)     13d
  kube-system    coredns-6d8c4cb4d-kbj92                 100m (5%)     0 (0%)      70Mi (1%)        170Mi (4%)     13d
  kube-system    etcd-master01                           100m (5%)     0 (0%)      100Mi (2%)       0 (0%)         13d
  kube-system    kube-apiserver-master01                 250m (12%)    0 (0%)      0 (0%)           0 (0%)         13d
  kube-system    kube-controller-manager-master01        200m (10%)    0 (0%)      0 (0%)           0 (0%)         13d
  kube-system    kube-proxy-r2l4s                        0 (0%)        0 (0%)      0 (0%)           0 (0%)         13d
  kube-system    kube-scheduler-master01                 100m (5%)     0 (0%)      0 (0%)           0 (0%)         13d
Allocated resources:
  (Total limits may be over 100 percent, i.e., overcommitted.)
  Resource           Requests    Limits
  --------           --------    ------
  cpu                950m (47%)  0 (0%)
  memory             290Mi (7%)  340Mi (8%)
  ephemeral-storage  0 (0%)      0 (0%)
  hugepages-1Gi      0 (0%)      0 (0%)
  hugepages-2Mi      0 (0%)      0 (0%)
Events:              <none>

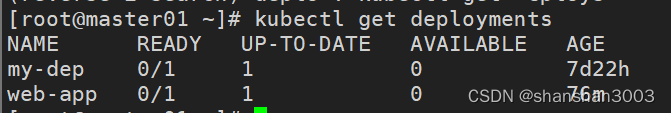

kubectl describe pod my-dep-7cb9c979b4-tp6s7

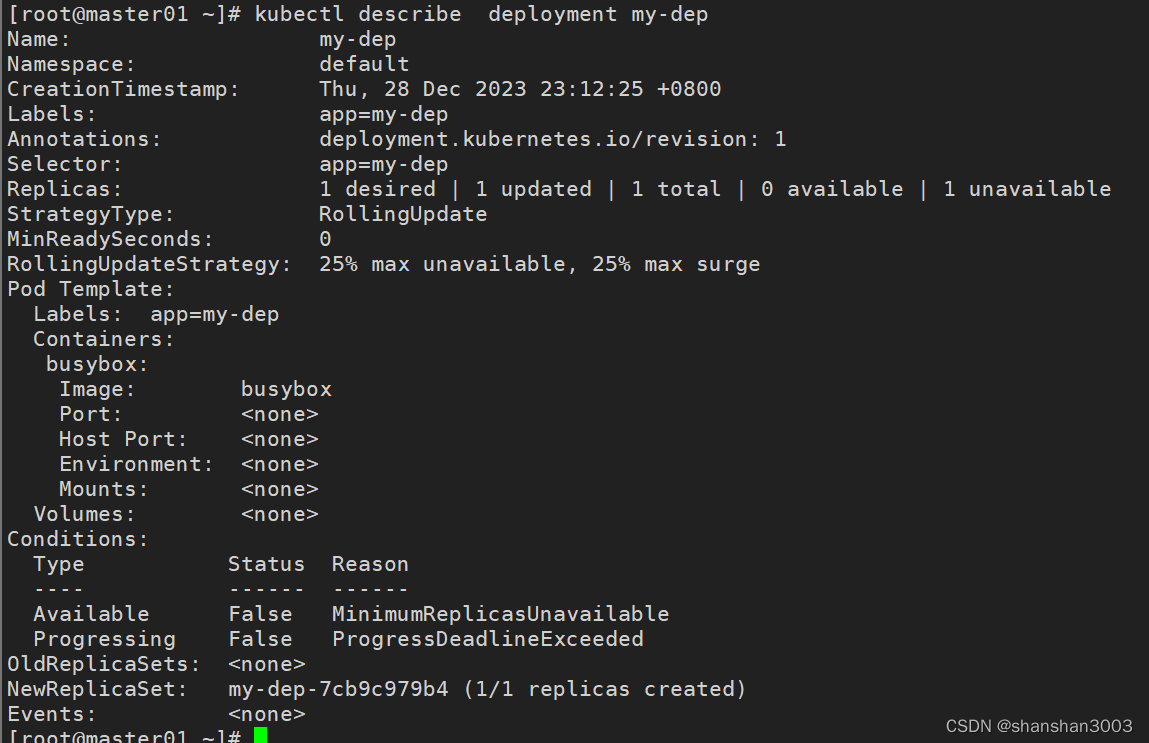

kubectl describe deployment my-dep

Viewing runtime logs

kubectl logs [-f] <pod_name> -n <namespace> # with -f, the log output is streamed continuously

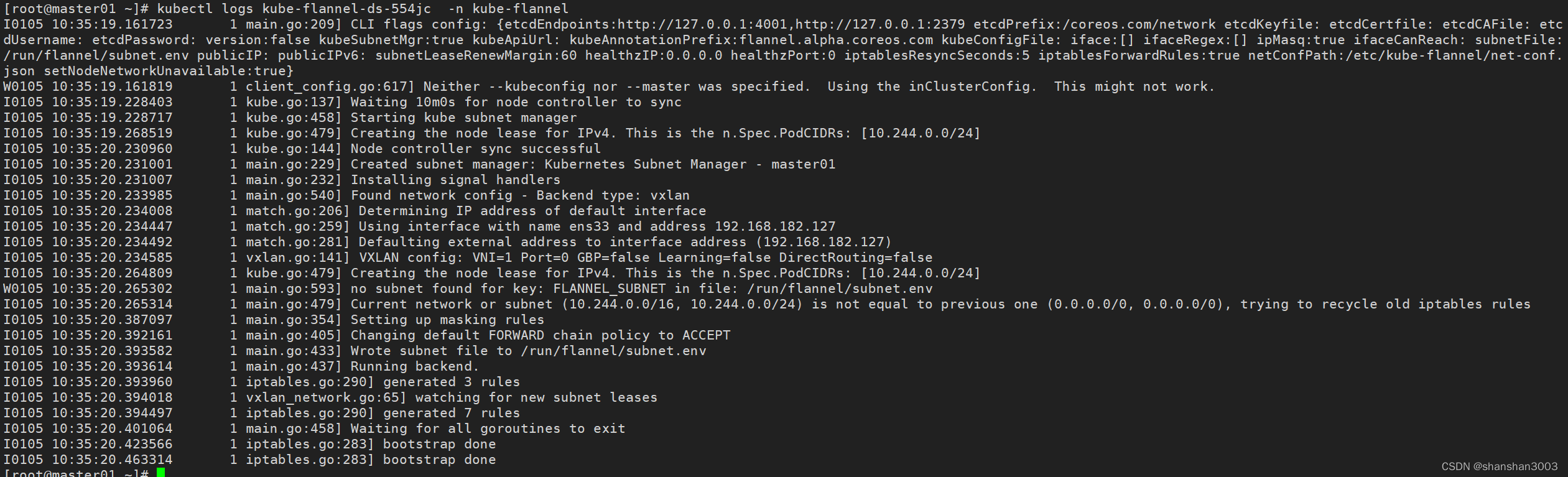

kubectl logs kube-flannel-ds-554jc -n kube-flannel
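On a long-running pod the full log can be very large. As a hedged sketch, the `--tail` and `--since` flags of `kubectl logs` limit the output to recent entries. The helper name `recent_logs`, the chosen limits (50 lines, 1 hour) and the `KUBECTL` override variable are assumptions made for this example.

```shell
# Hypothetical helper: show only the last 50 log lines from the past hour.
# $1 = pod name, $2 = namespace. KUBECTL is an assumed override variable.
recent_logs() {
  "${KUBECTL:-kubectl}" logs "$1" -n "$2" --tail=50 --since=1h
}

# Usage against a live cluster:
#   recent_logs kube-flannel-ds-554jc kube-flannel
```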

Explaining resource types: explain

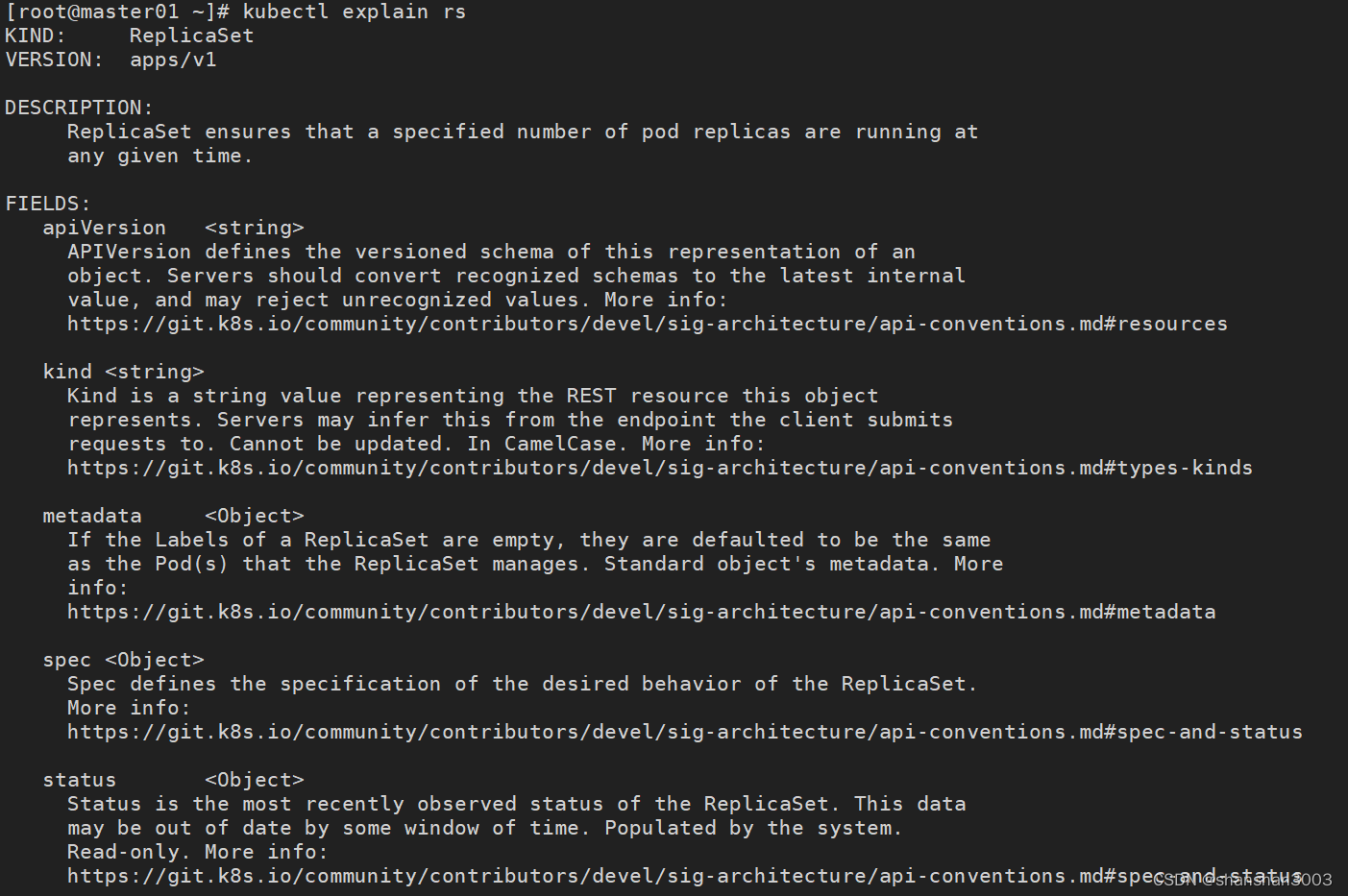

kubectl explain rs

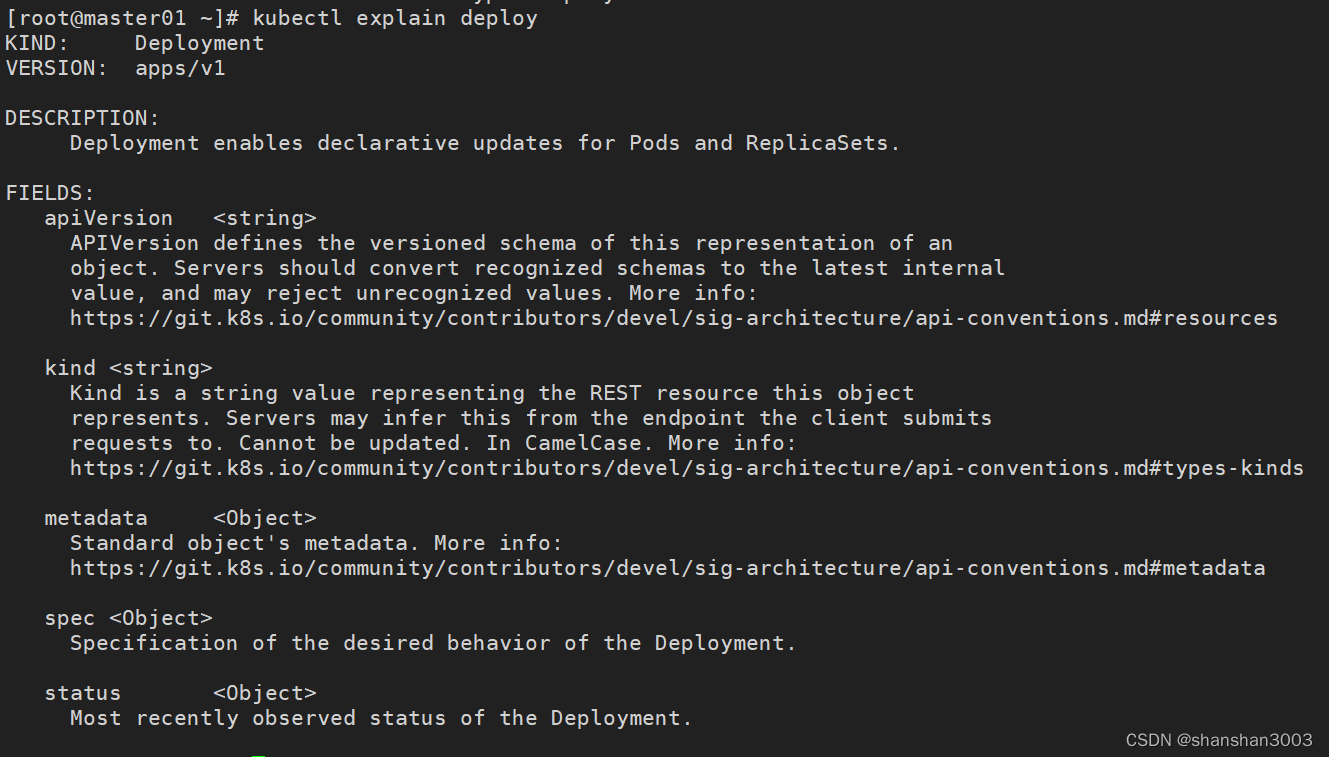

kubectl explain deploy

操作 create|run|delete|exec|exit

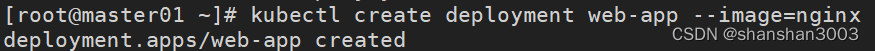

创建create

kubectl create deployment web-app --image=nginx

kubectl create -f deployment.yaml # create resources from the specified file

For example, a minimal YAML file (this one defines a single Pod):

apiVersion: v1
kind: Pod
metadata:
  name: demo
  labels:
    app: nginx
spec:
  containers:
    - name: nginx
      image: nginx
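YAML is indentation-sensitive, so the manifest above only works when the nesting is preserved. The sketch below writes the same Pod definition to a temporary file with a heredoc and, if kubectl is installed, asks it to validate the file with `--dry-run=client` rather than creating anything. The variable name `manifest` and the use of a temporary file are choices made for this example only.

```shell
# Write the minimal Pod manifest to a temporary file, keeping indentation intact.
manifest="$(mktemp)"
cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: demo
  labels:
    app: nginx
spec:
  containers:
    - name: nginx
      image: nginx
EOF

# Validate without creating the Pod; skip gracefully if kubectl is unavailable.
if command -v kubectl >/dev/null 2>&1; then
  kubectl create -f "$manifest" --dry-run=client -o name \
    || echo "dry run failed (is the cluster reachable?)"
else
  echo "kubectl not found; manifest saved at $manifest"
fi
```

Dropping `--dry-run=client` turns the same command into a real create.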

Deleting: delete

kubectl delete deployment web-app

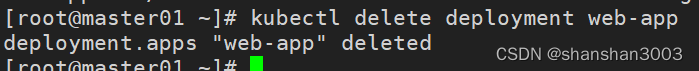

Running: run

kubectl run nginx --image=nginx

kubectl run nginx --image=nginx --port=8088


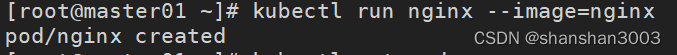

Deleting: delete

kubectl delete <type> <name>

kubectl delete pod demo

kubectl delete pods --all # deletes every pod in the current namespace; the author has not tried it, so use with caution

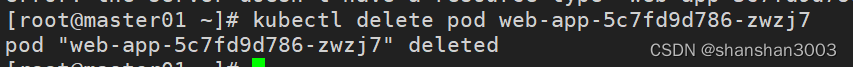

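Note: creating a deployment automatically creates its pods. If you delete one of those pods by hand, the system starts a replacement automatically. To remove them for good, delete the deployment itself, as in the example below.

Deleting a specific pod appears to work, but a new pod is created right after the deletion: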

kubectl delete pod <pod_name>

kubectl delete pod web-app-5c7fd9d786-zwzj7
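The reason the pod comes back is that it is owned by a ReplicaSet, which the deployment manages and which keeps the desired number of replicas running. A hedged way to see this is to read the pod's `ownerReferences` field. The helper name `pod_owner` and the `KUBECTL` override variable are assumptions for this sketch.

```shell
# Hypothetical helper: print the kind of the controller that owns a pod.
# For a pod created by a deployment this is expected to be ReplicaSet.
pod_owner() {
  "${KUBECTL:-kubectl}" get pod "$1" -o jsonpath='{.metadata.ownerReferences[0].kind}'
}

# Usage against a live cluster:
#   pod_owner web-app-5c7fd9d786-zwzj7
```

A pod started directly with `kubectl run` has no owner, which is why deleting it is permanent.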

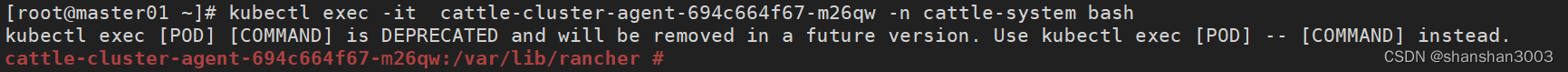

Entering a pod: exec

kubectl exec -it cattle-cluster-agent-694c664f67-m26qw -n cattle-system -- bash

Leaving the pod: exit

exit

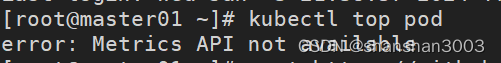

Viewing resource usage: top

kubectl top [flags] [options]

kubectl top pod

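If the metrics API service that this command depends on is not enabled, it has to be enabled first.

To fix this, install the service by following the link below: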

Releases · kubernetes-sigs/metrics-server · GitHub

kubectl apply -f components.yaml

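After the deployment finished, a new problem appeared, possibly a version mismatch. It will be resolved later.

kubectl top node # same situation as above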

Exposing: expose

Create a Service for an existing resource and expose a port so that it can be reached from outside the cluster.

kubectl expose deployment nginx --port=88 --type=NodePort --target-port=80 --name=nginx-service

Setting: set

Setting resources: resources

# Limit the nginx container of the deployment to 200m of CPU and 512Mi of memory

kubectl set resources deployment nginx -c=nginx --limits=cpu=200m,memory=512Mi

# Set Requests and Limits on all containers of the nginx deployment

kubectl set resources deployment nginx --limits=cpu=200m,memory=512Mi --requests=cpu=100m,memory=256Mi

# Remove the compute resource values from the containers in nginx

kubectl set resources deployment nginx --limits=cpu=0,memory=0 --requests=cpu=0,memory=0

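The unit m: CPU is measured in millicores (m). Multiplying a node's number of CPU cores by 1000 gives the node's total CPU capacity in millicores. For example, a node with two cores has a total of 2000m.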
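The millicore arithmetic can be sketched in plain shell. The function names `cores_to_millicores` and `millicore_percent` are made up for this example. Integer division truncates, which happens to reproduce the "950m (47%)" figure shown for the two-core node in the describe output earlier.

```shell
# Convert whole CPU cores to millicores: 1 core = 1000m.
cores_to_millicores() {
  echo $(( $1 * 1000 ))
}

# Percentage of a node's CPU taken by a request.
# $1 = requested millicores, $2 = number of cores on the node.
millicore_percent() {
  total_m=$(cores_to_millicores "$2")
  echo $(( $1 * 100 / total_m ))
}

cores_to_millicores 2      # prints 2000
millicore_percent 950 2    # prints 47
```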

Setting images: image

Set the image for specific containers:

kubectl set image deployment/nginx busybox=busybox nginx=nginx:1.9.1

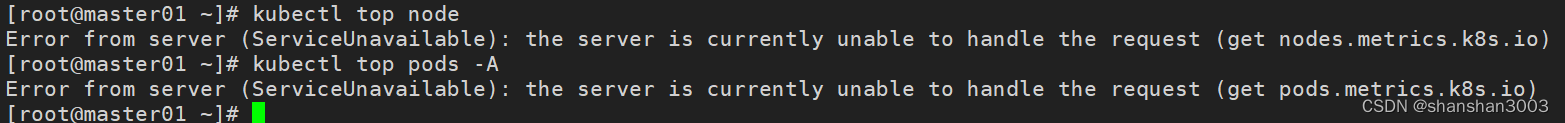

Rolling back: rollout

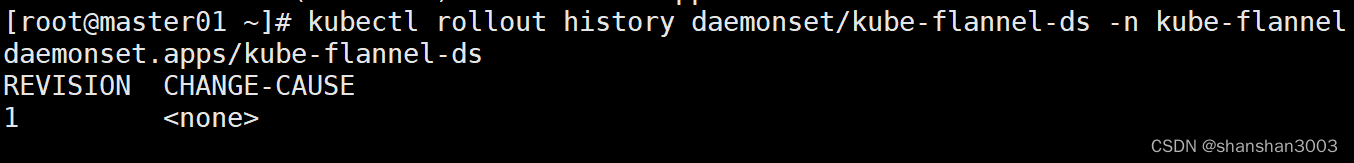

View the revision history of a resource:

kubectl rollout history deployment/my-dep

kubectl rollout history daemonset/kube-flannel-ds -n kube-flannel

Roll back to the previous revision:

kubectl rollout undo deployment/nginx

Roll back to a specific revision:

kubectl rollout undo deployment/nginx-service --to-revision=2

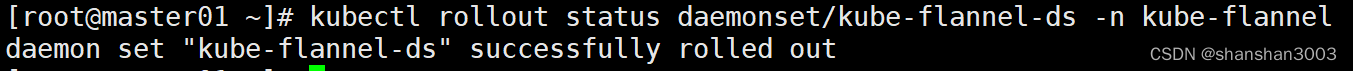

Check the rollout status:

kubectl rollout status daemonset/kube-flannel-ds -n kube-flannel

Pausing: pause

kubectl rollout pause deployment my-dep # pause the rollout


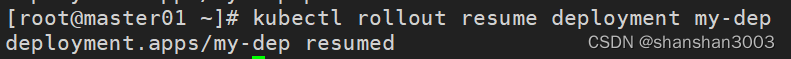

Resuming: resume

kubectl rollout resume deployment my-dep # resume the rollout
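Pause and resume are typically used together: while a deployment is paused, changes to its pod template are recorded but not rolled out, and resuming applies them in a single rollout. The following is a sketch under stated assumptions: the helper name `staged_update`, the `KUBECTL` override variable, and the container name passed in are all hypothetical, since the article does not say which containers my-dep runs.

```shell
# Hypothetical helper: change an image while paused, then roll out once.
# $1 = deployment name, $2 = container name, $3 = new image.
staged_update() {
  k="${KUBECTL:-kubectl}"
  "$k" rollout pause "deployment/$1" &&
  "$k" set image "deployment/$1" "$2=$3" &&
  "$k" rollout resume "deployment/$1" &&
  "$k" rollout status "deployment/$1"
}

# Usage against a live cluster (container name nginx is an assumption):
#   staged_update my-dep nginx nginx:1.9.1
```

Chaining with `&&` stops the sequence at the first failing step, so a failed `set image` leaves the deployment paused rather than resuming it unchanged.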
