Getting Started with the Fun Demos of the InternLM (书生·浦语) Large Model

Published: January 6, 2024


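Introduction to Large Models and InternLM

Large models are AI models with an enormous number of parameters, which demand massive computing power to train and run.

Characteristics and applications:

- Trained on massive amounts of data

- Have billions or even hundreds of billions of parameters

- Show impressive performance across a wide range of tasks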

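InternLM: a fully open-source, end-to-end toolchain

- InternLM is an open-source, lightweight training framework.

- Lagent is a lightweight, open-source agent framework based on large language models; it lets users quickly turn a large language model into various types of agents.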

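InternLM-Chat-7B Chat Demo

Model overview

With a single codebase, InternLM supports pre-training on large clusters of thousands of GPUs as well as fine-tuning on a single GPU, while delivering strong performance optimizations. When training on 1024 GPUs, InternLM reaches 90% acceleration efficiency.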
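To make the 90% figure concrete: acceleration (scaling) efficiency is commonly defined as the measured speedup divided by the ideal linear speedup. The original does not say exactly how it was measured, so the sketch below assumes that definition and computes what it implies for 1024 GPUs.

```python
def scaling_efficiency(throughput_n: float, throughput_1: float, n_gpus: int) -> float:
    """Measured speedup divided by the ideal linear speedup (assumed definition)."""
    speedup = throughput_n / throughput_1
    return speedup / n_gpus


def effective_speedup(efficiency: float, n_gpus: int) -> float:
    """Speedup implied by a given efficiency on n_gpus devices."""
    return efficiency * n_gpus


# 90% efficiency on 1024 GPUs behaves like roughly 921.6 perfectly scaled GPUs.
print(effective_speedup(0.90, 1024))  # → 921.6
```

In other words, about 10% of the ideal throughput is lost to communication and synchronization overhead at that scale.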

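InternLM-7B includes a base model with 7 billion parameters and a chat model tailored to practical scenarios. The model has the following features:

- It is trained on trillions of high-quality tokens, which gives it a strong knowledge base.

- It supports a context window of 8K tokens, which allows longer input sequences and strengthens reasoning.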
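As a rough sizing aid (an illustration, not from the original): cli_demo.py below loads the weights with torch_dtype=torch.bfloat16, which stores each parameter in 2 bytes. Assuming a nominal 7 billion parameters and ignoring activations, the KV cache, and framework overhead, the weights alone need about 13 GiB:

```python
# Bytes per parameter for common storage formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gib(n_params: float, dtype: str) -> float:
    """Memory needed for the model weights only, in GiB (2**30 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 2**30


for dtype in ("fp32", "bf16", "int8"):
    print(f"{dtype}: {weight_memory_gib(7e9, dtype):.1f} GiB")
# fp32: 26.1 GiB
# bf16: 13.0 GiB
# int8: 6.5 GiB
```

The real footprint at run time is higher, and grows with the length of the context actually used.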

Model deployment

Install the dependencies needed to run the demo in your environment:

```shell
# upgrade pip
python -m pip install --upgrade pip

pip install modelscope==1.9.5
pip install transformers==4.35.2
pip install streamlit==1.24.0
pip install sentencepiece==0.1.99
pip install accelerate==0.24.1
```
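Because these commands pin exact versions, a quick check after installation can catch mismatches early. This sketch uses the standard-library importlib.metadata; the PINNED dictionary simply restates the pins above, and it assumes the installed distribution names match the pip package names.

```python
from importlib.metadata import PackageNotFoundError, version

# Pins restated from the install commands above.
PINNED = {
    "modelscope": "1.9.5",
    "transformers": "4.35.2",
    "streamlit": "1.24.0",
    "sentencepiece": "0.1.99",
    "accelerate": "0.24.1",
}


def find_mismatches(pins, get_version=version):
    """Return {package: (expected, installed_or_None)} for every pin that does not match."""
    mismatches = {}
    for name, expected in pins.items():
        try:
            installed = get_version(name)
        except PackageNotFoundError:
            installed = None  # package is not installed at all
        if installed != expected:
            mismatches[name] = (expected, installed)
    return mismatches


if __name__ == "__main__":
    problems = find_mismatches(PINNED)
    for name, (expected, installed) in problems.items():
        print(f"{name}: expected {expected}, found {installed}")
    if not problems:
        print("All pinned packages match.")
```

The get_version parameter exists only so the check can be exercised without the real packages installed.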

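Model download

```python
from modelscope import snapshot_download

# Download InternLM-Chat-7B at revision v1.0.3 into /root/model;
# snapshot_download returns the local directory that holds the model files
model_dir = snapshot_download('Shanghai_AI_Laboratory/internlm-chat-7b', cache_dir='/root/model', revision='v1.0.3')
print(model_dir)
```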
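Before loading the model it can help to confirm that the download actually landed where cli_demo.py expects it (/root/model/Shanghai_AI_Laboratory/internlm-chat-7b). A minimal sketch, assuming a Hugging Face-style layout with config.json at the top of the model directory; read_model_config is a hypothetical helper, not part of modelscope.

```python
import json
from pathlib import Path
from typing import Optional


def read_model_config(model_dir) -> Optional[dict]:
    """Return the parsed config.json of a downloaded model, or None if it is missing.

    Assumes a Hugging Face-style layout with config.json at the top level.
    """
    config_path = Path(model_dir) / "config.json"
    if not config_path.is_file():
        return None
    with config_path.open(encoding="utf-8") as f:
        return json.load(f)
```

For example, calling read_model_config(model_dir) right after snapshot_download returns, and stopping if it gives None, avoids a less obvious error later from from_pretrained.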

First, create a code directory under /root, switch into it, and clone the code:

```shell
mkdir -p /root/code
cd /root/code
git clone https://gitee.com/internlm/InternLM.git
```

Create a new file named cli_demo.py in the directory and paste the following code into it:

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

model_name_or_path = "/root/model/Shanghai_AI_Laboratory/internlm-chat-7b"

# trust_remote_code=True is needed because InternLM ships its own modeling code
tokenizer = AutoTokenizer.from_pretrained(model_name_or_path, trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(model_name_or_path, trust_remote_code=True, torch_dtype=torch.bfloat16, device_map='auto')
model = model.eval()

system_prompt = """You are an AI assistant whose name is InternLM (书生·浦语).
- InternLM (书生·浦语) is a conversational language model that is developed by Shanghai AI Laboratory (上海人工智能实验室). It is designed to be helpful, honest, and harmless.
- InternLM (书生·浦语) can understand and communicate fluently in the language chosen by the user such as English and 中文.
"""

# History is a list of (user, assistant) pairs; the system prompt is seeded as the first pair
messages = [(system_prompt, '')]

print("=============Welcome to InternLM chatbot, type 'exit' to exit.=============")

while True:
    input_text = input("User >>> ")
    # String methods return a new string, so the result must be assigned;
    # strip() trims surrounding whitespace so that e.g. "exit " still exits
    input_text = input_text.strip()
    if input_text == "exit":
        break
    response, history = model.chat(tokenizer, input_text, history=messages)
    messages.append((input_text, response))
    print(f"robot >>> {response}")
```
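The loop above passes the whole messages list to model.chat on every turn, so the prompt keeps growing toward the 8K-token window. The sketch below reproduces that bookkeeping without a GPU: fake_chat is a hypothetical stand-in for model.chat (simplified signature, no tokenizer), and trim_history is an optional, hypothetical helper (not part of InternLM) that keeps the system pair plus only the most recent turns.

```python
from typing import List, Tuple

History = List[Tuple[str, str]]


def fake_chat(query: str, history: History) -> Tuple[str, History]:
    """Hypothetical stand-in for model.chat(tokenizer, query, history=...)."""
    response = f"echo: {query}"
    return response, history + [(query, response)]


def trim_history(history: History, max_turns: int) -> History:
    """Keep the first (system prompt) pair plus the last max_turns pairs."""
    system, turns = history[:1], history[1:]
    return system + turns[-max_turns:] if max_turns > 0 else system


def run_session(inputs, chat=fake_chat, system_prompt="You are InternLM.", max_turns=4):
    """Replay scripted user inputs through the same loop structure as cli_demo.py."""
    messages: History = [(system_prompt, "")]
    replies = []
    for raw in inputs:
        text = raw.strip()
        if text == "exit":
            break
        response, _ = chat(text, trim_history(messages, max_turns))
        messages.append((text, response))
        replies.append(response)
    return replies, messages


replies, messages = run_session(["hi", " there ", "exit", "ignored"])
print(replies)  # → ['echo: hi', 'echo: there']
```

Trimming by turn count is only a crude proxy; counting tokens with the real tokenizer would track the 8K limit more precisely.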

Running the web demo

In VS Code, you can forward the port directly.

```shell
streamlit run web_demo.py --server.address 127.0.0.1 --server.port 6006
```
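With --server.address 127.0.0.1, Streamlit listens only on the loopback interface, which is why the port has to be forwarded before a browser on your own machine can reach it. If the launch fails because port 6006 is already taken, a quick check with the standard socket module can confirm it; port_is_free is an illustrative helper, not part of the original demo, and it only approximates what Streamlit's server does when binding.

```python
import socket


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if host:port can be bound right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        try:
            s.bind((host, port))
        except OSError:
            return False  # e.g. EADDRINUSE: another process holds the port
        return True


if __name__ == "__main__":
    print("6006 free:", port_is_free(6006))
```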

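Lagent Agent Tool-Calling Demo

About Lagent

Lagent is a lightweight, open-source agent framework based on large language models. It lets users quickly turn a large language model into various types of agents, and it provides a set of typical tools that extend what large language models can do.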
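The react_web_demo.py launched below runs a ReAct-style agent, in which the model alternates between reasoning, calling a tool, and reading the tool's output. The following is a framework-free sketch of that loop under stated assumptions, not Lagent's API: the scripted LLM, the "Action: tool[arg]" / "Final Answer:" text protocol, and the calculator tool are all hypothetical.

```python
import ast
import operator
import re

_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}


def calculator(expr: str) -> str:
    """Hypothetical tool: evaluate numeric + - * / expressions only (no names, no calls)."""
    def ev(node):
        if isinstance(node, ast.Expression):
            return ev(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -ev(node.operand)
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        raise ValueError(f"unsupported expression: {expr!r}")
    return str(ev(ast.parse(expr, mode="eval")))


TOOLS = {"calculator": calculator}
# Hypothetical text protocol: the model requests a tool as "Action: name[argument]".
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")


def react_loop(llm, question, tools, max_steps=5):
    """Alternate LLM steps and tool calls until the LLM emits a final answer."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += step + "\n"
        if step.startswith("Final Answer:"):
            return step[len("Final Answer:"):].strip()
        match = ACTION_RE.search(step)
        if match is None:
            raise ValueError(f"cannot parse step: {step!r}")
        name, arg = match.groups()
        transcript += f"Observation: {tools[name](arg)}\n"  # feed tool output back
    raise RuntimeError("no final answer within max_steps")


def scripted_llm(transcript):
    """Hypothetical stand-in for an LLM: call the calculator once, then answer."""
    if "Observation:" not in transcript:
        return "Thought: I should compute this.\nAction: calculator[12*7]"
    last = transcript.strip().splitlines()[-1]
    return "Final Answer: " + last.split("Observation:")[1].strip()


print(react_loop(scripted_llm, "What is 12*7?", TOOLS))  # → 84
```

In a real agent the scripted_llm function is replaced by calls to the language model, and the prompt describes the available tools and the expected output format.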

First, switch to /root/code, clone the lagent repository, and install Lagent from source with pip install -e .

```shell
cd /root/code
git clone https://gitee.com/internlm/lagent.git
cd /root/code/lagent
git checkout 511b03889010c4811b1701abb153e02b8e94fb5e  # stay on the same commit as the tutorial where possible
pip install -e .  # install from source
```

Run the code:


```shell
streamlit run /root/code/lagent/examples/react_web_demo.py --server.address 127.0.0.1 --server.port 6006
```

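InternLM-XComposer (浦语·灵笔) Image-Text Creation and Understanding Demo

- Creates personalized articles that combine images and text for users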

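General environment configuration

- Switch the pip mirror: upgrade pip to the latest version, then set pip's default index (mirror) URL:

```shell
python -m pip install --upgrade pip
pip config set global.index-url <mirror-url>
```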

- Quickly switch conda mirrors by writing a new ~/.condarc:

```shell
cat <<'EOF' > ~/.condarc
```

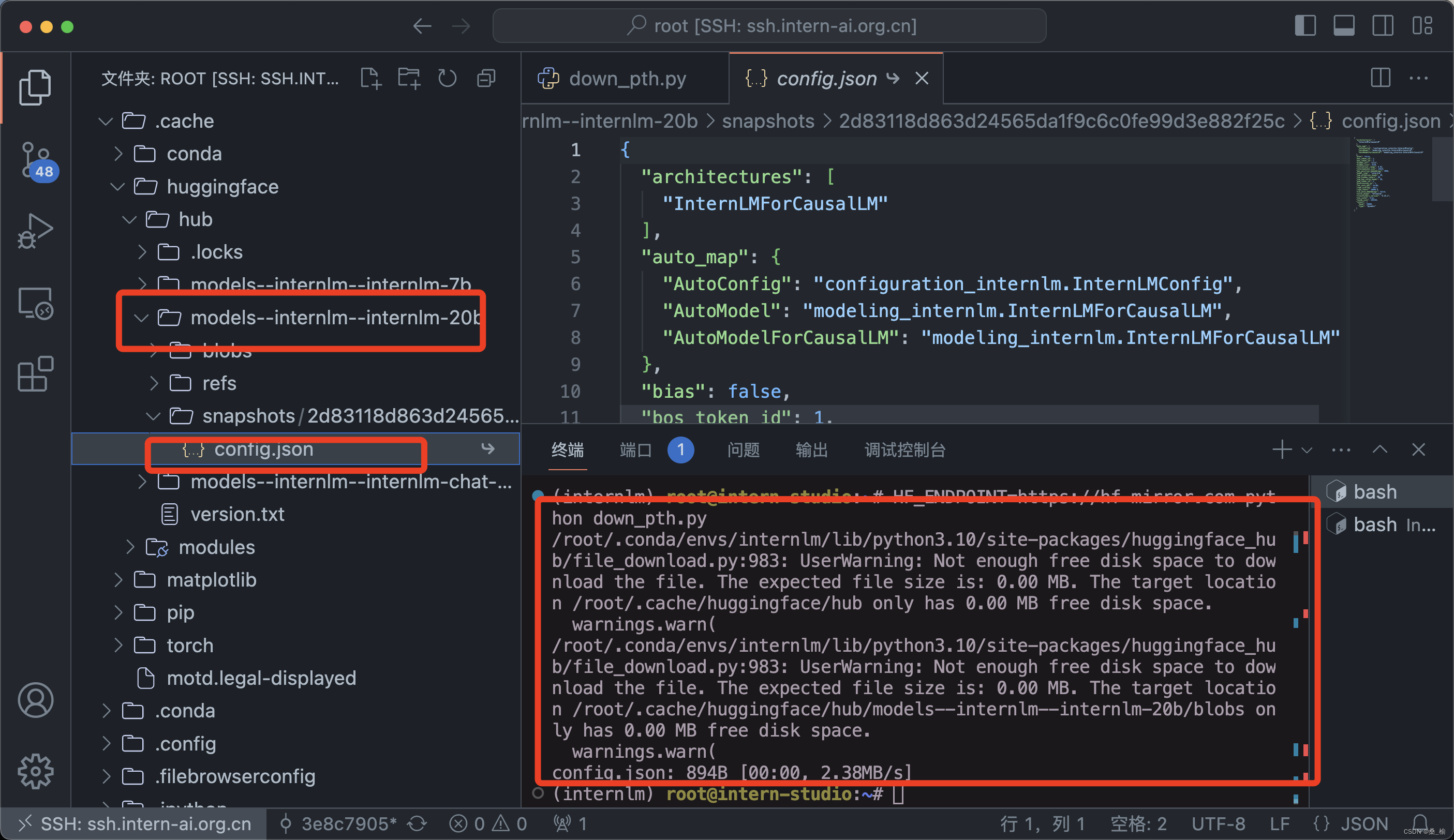
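For reference, pip config set global.index-url stores an index-url entry under the [global] section of an INI-style pip configuration file (by default, outside a virtual environment, the user file, typically ~/.config/pip/pip.conf on Linux). A sketch that produces the same INI content with the standard configparser module, for example when preparing a config file for another machine; render_pip_conf is a hypothetical helper and the mirror URL is a placeholder.

```python
import configparser
import io


def render_pip_conf(index_url: str) -> str:
    """Return pip.conf text equivalent to `pip config set global.index-url <index_url>`."""
    parser = configparser.ConfigParser()
    parser["global"] = {"index-url": index_url}
    buf = io.StringIO()
    parser.write(buf)
    return buf.getvalue()


# Placeholder mirror URL, for illustration only.
print(render_pip_conf("https://mirror.example.com/pypi/simple"))
# [global]
# index-url = https://mirror.example.com/pypi/simple
```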

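Model download

- Hugging Face: use the official huggingface-cli command-line tool provided by Hugging Face. First install the dependency:

```shell
pip install -U huggingface_hub
```

Then download the model:

```shell
huggingface-cli download --resume-download internlm/internlm-chat-7b --local-dir your_path
```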

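- OpenXLab: you can download model weight files directly by specifying the model repository address, the names of the files to download, and where to save them. To download the model with a Python script, first install the dependency:

```shell
pip install -U openxlab
```

```python
from openxlab.model import download

download(model_repo='OpenLMLab/InternLM-7b', model_name='InternLM-7b', output='your local path')
```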

- ModelScope: use the snapshot_download function from modelscope. The first argument is the model name, and the cache_dir argument sets the download path.

```shell
pip install modelscope
pip install transformers
```

```python
from modelscope import snapshot_download

model_dir = snapshot_download('Shanghai_AI_Laboratory/internlm-chat-7b', cache_dir='your path', revision='master')
```

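To download through the hf-mirror.com mirror instead, set the HF_ENDPOINT environment variable when running the download script:

```shell
HF_ENDPOINT=https://hf-mirror.com python down_pth.py
```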
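In current versions huggingface_hub reads HF_ENDPOINT when it is imported, so the variable must be in place before the download script starts, as the shell command does. The sketch below does the same from Python by launching a child process with a modified copy of the environment; mirror_env and run_with_mirror are hypothetical helpers, and down_pth.py is the script named in the command (its contents are not shown in the original).

```python
import os
import subprocess
import sys

MIRROR = "https://hf-mirror.com"


def mirror_env(base=None, endpoint=MIRROR):
    """Return a copy of the environment with HF_ENDPOINT pointing at the mirror."""
    env = dict(os.environ if base is None else base)  # copy; never mutate the original
    env["HF_ENDPOINT"] = endpoint
    return env


def run_with_mirror(args, endpoint=MIRROR, **kwargs):
    """Run `python <args...>` in a child process that sees HF_ENDPOINT=endpoint."""
    return subprocess.run([sys.executable, *args], env=mirror_env(endpoint=endpoint),
                          check=True, **kwargs)


# Equivalent of: HF_ENDPOINT=https://hf-mirror.com python down_pth.py
# run_with_mirror(["down_pth.py"])
```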

Source: https://blog.csdn.net/shengweiit/article/details/135423659

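This article was submitted by an Internet user; its views are the author's own and do not represent the position of this site. This site only provides information storage space, does not own the copyright, and bears no related legal liability. If the content is infringing, illegal, or factually incorrect, please contact 我的编程经验分享网 by email at chenni525@qq.com to file a complaint; it will be deleted promptly once verified.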

