大模型系列:OpenAI使用技巧_FinTunging微调做文本分类

我们将微调一个ada分类器,以区分两种运动:棒球和曲棍球。

# 导入fetch_20newsgroups函数,用于获取20个新闻组数据集

from sklearn.datasets import fetch_20newsgroups

# 导入pandas库,用于数据处理

import pandas as pd

# 导入openai库,用于人工智能相关操作

import openai

# 定义要获取的新闻组类别

categories = ['rec.sport.baseball', 'rec.sport.hockey']

# 使用fetch_20newsgroups函数获取训练集数据,subset参数指定为'train',shuffle参数指定为True,random_state参数指定为42,categories参数指定为上面定义的类别

sports_dataset = fetch_20newsgroups(subset='train', shuffle=True, random_state=42, categories=categories)

数据探索

可以使用sklearn加载新闻组数据集。首先,我们将查看数据本身:

# 打印出体育数据集中的第一条数据

print(sports_dataset['data'][0])

From: dougb@comm.mot.com (Doug Bank)

Subject: Re: Info needed for Cleveland tickets

Reply-To: dougb@ecs.comm.mot.com

Organization: Motorola Land Mobile Products Sector

Distribution: usa

Nntp-Posting-Host: 145.1.146.35

Lines: 17

In article <1993Apr1.234031.4950@leland.Stanford.EDU>, bohnert@leland.Stanford.EDU (matthew bohnert) writes:

|> I'm going to be in Cleveland Thursday, April 15 to Sunday, April 18.

|> Does anybody know if the Tribe will be in town on those dates, and

|> if so, who're they playing and if tickets are available?

The tribe will be in town from April 16 to the 19th.

There are ALWAYS tickets available! (Though they are playing Toronto,

and many Toronto fans make the trip to Cleveland as it is easier to

get tickets in Cleveland than in Toronto. Either way, I seriously

doubt they will sell out until the end of the season.)

--

Doug Bank Private Systems Division

dougb@ecs.comm.mot.com Motorola Communications Sector

dougb@nwu.edu Schaumburg, Illinois

dougb@casbah.acns.nwu.edu 708-576-8207

# 获取第一条新闻的目标类别名称

sports_dataset.target_names[sports_dataset['target'][0]] # 返回值为字符串,表示第一条新闻所属的类别名称

'rec.sport.baseball'

# 统计数据集中的样本数量

len_all, len_baseball, len_hockey = len(sports_dataset.data), len([e for e in sports_dataset.target if e == 0]), len([e for e in sports_dataset.target if e == 1])

# 打印总样本数、棒球样本数和曲棍球样本数

print(f"Total examples: {len_all}, Baseball examples: {len_baseball}, Hockey examples: {len_hockey}")

Total examples: 1197, Baseball examples: 597, Hockey examples: 600

棒球类别中的一个样本如上所示。这是一封发给邮件列表的电子邮件。我们可以观察到,我们总共有1197个例子,这些例子在两个运动之间均匀分布。

数据准备

我们将数据集转换为一个pandas dataframe,其中包含一个用于提示的列和一个用于完成的列。提示包含邮件列表中的电子邮件,完成是一个运动的名称,可以是冰球或棒球。仅用于演示目的和微调速度,我们只选择了300个示例。在实际使用中,示例越多,性能越好。

# 导入pandas库

# 从sports_dataset中获取target_names,并将其转换为只包含最后一个元素的列表

labels = [sports_dataset.target_names[x].split('.')[-1] for x in sports_dataset['target']]

# 从sports_dataset中获取data,并去除每个文本的前后空格

texts = [text.strip() for text in sports_dataset['data']]

# 使用zip函数将texts和labels合并为一个元组,并使用DataFrame函数将其转换为DataFrame对象

df = pd.DataFrame(zip(texts, labels), columns = ['prompt','completion']) #[:300]

# 打印DataFrame的前几行数据

df.head()

| prompt | completion | |

|---|---|---|

| 0 | From: dougb@comm.mot.com (Doug Bank)\nSubject:... | baseball |

| 1 | From: gld@cunixb.cc.columbia.edu (Gary L Dare)... | hockey |

| 2 | From: rudy@netcom.com (Rudy Wade)\nSubject: Re... | baseball |

| 3 | From: monack@helium.gas.uug.arizona.edu (david... | hockey |

| 4 | Subject: Let it be Known\nFrom: <ISSBTL@BYUVM.... | baseball |

棒球和曲棍球都是单个标记。我们将数据集保存为jsonl文件。

# 将DataFrame数据保存为JSON格式的文件

df.to_json("sport2.jsonl", orient='records', lines=True)

数据准备工具

现在我们可以使用一个数据准备工具,在微调之前对我们的数据集提出一些建议的改进。在启动工具之前,我们会更新openai库,以确保我们使用的是最新的数据准备工具。我们还额外指定了-q选项,以自动接受所有建议。

# 安装openai库的最新版本

!pip install --upgrade openai

# 导入openai工具包中的fine_tunes.prepare_data模块

# 调用prepare_data模块中的函数,对名为sport2.jsonl的数据文件进行处理

# -f参数指定要处理的数据文件名,-q参数指定要处理的数据文件所在的路径

!openai tools fine_tunes.prepare_data -f sport2.jsonl -q

Analyzing...

- Your file contains 1197 prompt-completion pairs

- Based on your data it seems like you're trying to fine-tune a model for classification

- For classification, we recommend you try one of the faster and cheaper models, such as `ada`

- For classification, you can estimate the expected model performance by keeping a held out dataset, which is not used for training

- There are 11 examples that are very long. These are rows: [134, 200, 281, 320, 404, 595, 704, 838, 1113, 1139, 1174]

For conditional generation, and for classification the examples shouldn't be longer than 2048 tokens.

- Your data does not contain a common separator at the end of your prompts. Having a separator string appended to the end of the prompt makes it clearer to the fine-tuned model where the completion should begin. See https://beta.openai.com/docs/guides/fine-tuning/preparing-your-dataset for more detail and examples. If you intend to do open-ended generation, then you should leave the prompts empty

- The completion should start with a whitespace character (` `). This tends to produce better results due to the tokenization we use. See https://beta.openai.com/docs/guides/fine-tuning/preparing-your-dataset for more details

Based on the analysis we will perform the following actions:

- [Recommended] Remove 11 long examples [Y/n]: Y

- [Recommended] Add a suffix separator `\n\n###\n\n` to all prompts [Y/n]: Y

- [Recommended] Add a whitespace character to the beginning of the completion [Y/n]: Y

- [Recommended] Would you like to split into training and validation set? [Y/n]: Y

Your data will be written to a new JSONL file. Proceed [Y/n]: Y

Wrote modified files to `sport2_prepared_train.jsonl` and `sport2_prepared_valid.jsonl`

Feel free to take a look!

Now use that file when fine-tuning:

> openai api fine_tunes.create -t "sport2_prepared_train.jsonl" -v "sport2_prepared_valid.jsonl" --compute_classification_metrics --classification_positive_class " baseball"

After you’ve fine-tuned a model, remember that your prompt has to end with the indicator string `\n\n###\n\n` for the model to start generating completions, rather than continuing with the prompt.

Once your model starts training, it'll approximately take 30.8 minutes to train a `curie` model, and less for `ada` and `babbage`. Queue will approximately take half an hour per job ahead of you.

工具会有帮助性地对数据集提出一些建议,并将数据集分成训练集和验证集。

在提示和完成之间需要一个后缀来告诉模型输入文本已经结束,现在需要预测类别。由于我们在每个示例中使用相同的分隔符,模型能够学习到它应该在分隔符后面预测棒球或曲棍球。

在完成中使用空格前缀是有用的,因为大多数单词标记都是以空格前缀进行标记化。

工具还识别出这很可能是一个分类任务,因此建议将数据集分成训练集和验证集。这将使我们能够轻松地测量对新数据的预期性能。

微调

该工具建议我们运行以下命令来训练数据集。由于这是一个分类任务,我们想知道在提供的验证集上的泛化性能如何,以满足我们的分类用例。该工具建议添加 --compute_classification_metrics --classification_positive_class " baseball" 以计算分类指标。

我们可以直接从CLI工具中复制建议的命令。我们特别添加了 -m ada 来微调一个更便宜和更快的ada模型,通常在分类用例上与更慢和更昂贵的模型在性能上相当。

# Fine-tuning OpenAI API for sport classification

# This code is used to fine-tune the OpenAI API for sport classification. It takes in two input files, "sport2_prepared_train.jsonl" and "sport2_prepared_valid.jsonl", which contain the training and validation data respectively.

# The "--compute_classification_metrics" flag is used to compute classification metrics during the fine-tuning process.

# The "--classification_positive_class" flag is set to "baseball" to specify that "baseball" is the positive class for the classification task.

# The "-m ada" flag specifies the model to be used for fine-tuning, in this case, the Ada model.

!openai api fine_tunes.create -t "sport2_prepared_train.jsonl" -v "sport2_prepared_valid.jsonl" --compute_classification_metrics --classification_positive_class " baseball" -m ada

Upload progress: 100%|████████████████████| 1.52M/1.52M [00:00<00:00, 1.81Mit/s]

Uploaded file from sport2_prepared_train.jsonl: file-Dxx2xJqyjcwlhfDHpZdmCXlF

Upload progress: 100%|███████████████████████| 388k/388k [00:00<00:00, 507kit/s]

Uploaded file from sport2_prepared_valid.jsonl: file-Mvb8YAeLnGdneSAFcfiVcgcN

Created fine-tune: ft-2zaA7qi0rxJduWQpdvOvmGn3

Streaming events until fine-tuning is complete...

(Ctrl-C will interrupt the stream, but not cancel the fine-tune)

[2021-07-30 13:15:50] Created fine-tune: ft-2zaA7qi0rxJduWQpdvOvmGn3

[2021-07-30 13:15:52] Fine-tune enqueued. Queue number: 0

[2021-07-30 13:15:56] Fine-tune started

[2021-07-30 13:18:55] Completed epoch 1/4

[2021-07-30 13:20:47] Completed epoch 2/4

[2021-07-30 13:22:40] Completed epoch 3/4

[2021-07-30 13:24:31] Completed epoch 4/4

[2021-07-30 13:26:22] Uploaded model: ada:ft-openai-2021-07-30-12-26-20

[2021-07-30 13:26:27] Uploaded result file: file-6Ki9RqLQwkChGsr9CHcr1ncg

[2021-07-30 13:26:28] Fine-tune succeeded

Job complete! Status: succeeded 🎉

Try out your fine-tuned model:

openai api completions.create -m ada:ft-openai-2021-07-30-12-26-20 -p <YOUR_PROMPT>

这个模型在大约十分钟内成功训练。我们可以看到模型名称是ada:ft-openai-2021-07-30-12-26-20,我们可以用它来进行推理。

我们现在可以下载结果文件,观察在一个保留的验证集上的预期性能。

# Fine-tuning OpenAI API

# This code is used to fine-tune the OpenAI API model using the provided dataset.

# The command "!openai api fine_tunes.results -i ft-2zaA7qi0rxJduWQpdvOvmGn3 > result.csv" is used to fine-tune the model and save the results in a CSV file named "result.csv".

!openai api fine_tunes.results -i ft-2zaA7qi0rxJduWQpdvOvmGn3 > result.csv

# 读取result.csv文件并将其存储在results变量中

results = pd.read_csv('result.csv')

# 使用条件筛选,选择classification/accuracy列不为空的行,并返回最后一行

last_row = results[results['classification/accuracy'].notnull()].tail(1)

| step | elapsed_tokens | elapsed_examples | training_loss | training_sequence_accuracy | training_token_accuracy | classification/accuracy | classification/precision | classification/recall | classification/auroc | classification/auprc | classification/f1.0 | validation_loss | validation_sequence_accuracy | validation_token_accuracy | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 929 | 930 | 3027688 | 3720 | 0.044408 | 1.0 | 1.0 | 0.991597 | 0.983471 | 1.0 | 1.0 | 1.0 | 0.991667 | NaN | NaN | NaN |

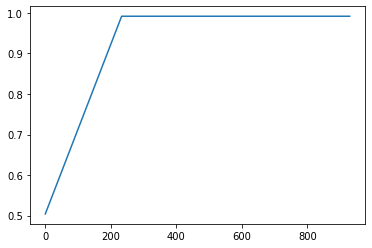

准确率达到了99.6%。在下面的图表中,我们可以看到验证集上的准确率在训练过程中如何增加。

results[results['classification/accuracy'].notnull()]['classification/accuracy'].plot()

<AxesSubplot:>

使用模型

现在我们可以调用模型来获取预测结果。

# 读取json文件,并将每行数据转换为一个DataFrame

test = pd.read_json('sport2_prepared_valid.jsonl', lines=True)

# 显示DataFrame的前几行数据

test.head()

| prompt | completion | |

|---|---|---|

| 0 | From: gld@cunixb.cc.columbia.edu (Gary L Dare)... | hockey |

| 1 | From: smorris@venus.lerc.nasa.gov (Ron Morris ... | hockey |

| 2 | From: golchowy@alchemy.chem.utoronto.ca (Geral... | hockey |

| 3 | From: krattige@hpcc01.corp.hp.com (Kim Krattig... | baseball |

| 4 | From: warped@cs.montana.edu (Doug Dolven)\nSub... | baseball |

我们需要在继续使用时使用与微调期间相同的分隔符。在这种情况下,它是\n\n###\n\n。由于我们关心的是分类,我们希望温度尽可能低,并且我们只需要一个令牌完成来确定模型的预测。

# 定义预训练模型ft_model

# 使用openai库的Completion.create方法创建一个新的完成请求

# 参数model指定使用的模型为ft_model

# 参数prompt指定输入的提示文本为test['prompt'][0] + '\n\n###\n\n'

# 参数max_tokens指定生成的文本长度为1个token

# 参数temperature指定生成文本的多样性为0

# 将生成的结果保存在res变量中

ft_model = 'ada:ft-openai-2021-07-30-12-26-20'

res = openai.Completion.create(model=ft_model, prompt=test['prompt'][0] + '\n\n###\n\n', max_tokens=1, temperature=0)

# 从res中获取生成的文本结果

res['choices'][0]['text']

' hockey'

为了获得对数概率,我们可以在完成请求中指定logprobs参数。

# 调用openai.Completion.create方法,创建一个Completion对象,并传入参数model、prompt、max_tokens、temperature和logprobs

# model参数指定了使用的模型,ft_model是一个模型的名称

# prompt参数指定了输入的文本,test['prompt'][0]是一个字符串,表示输入的文本

# max_tokens参数指定了生成的文本的最大长度,这里设置为1

# temperature参数指定了生成文本的多样性,这里设置为0,表示生成的文本是确定性的

# logprobs参数指定了返回的结果中是否包含每个token的概率值,这里设置为2,表示返回结果中包含每个token的概率值

res = openai.Completion.create(model=ft_model, prompt=test['prompt'][0] + '\n\n###\n\n', max_tokens=1, temperature=0, logprobs=2)

# 从返回的结果中获取生成的文本的概率值

res['choices'][0]['logprobs']['top_logprobs'][0] #表示返回结果中第一个choice的top_logprobs中的第一个概率值

<OpenAIObject at 0x7fe114e435c8> JSON: {

" baseball": -7.6311407,

" hockey": -0.0006307676

}

我们可以看到,模型预测冰球比棒球更有可能,这是正确的预测。通过请求log_probs,我们可以看到每个类别的预测(log)概率。

概括

有趣的是,我们经过优化的分类器非常灵活。尽管是在不同邮件列表的邮件上进行训练,它也成功地预测了推文。

# 给代码添加中文注释

# 定义一个字符串变量,存储一条关于冰球的推文

sample_hockey_tweet = """Thank you to the

@Canes

and all you amazing Caniacs that have been so supportive! You guys are some of the best fans in the NHL without a doubt! Really excited to start this new chapter in my career with the

@DetroitRedWings

!!"""

# 调用OpenAI的Completion API,使用指定的模型和参数生成文本

# 使用之前定义的字符串变量作为输入的prompt

# 设置max_tokens为1,表示只生成一个token

# 设置temperature为0,表示生成的文本更加确定和保守

# 设置logprobs为2,表示返回生成文本的log probabilities

res = openai.Completion.create(model=ft_model, prompt=sample_hockey_tweet + '\n\n###\n\n', max_tokens=1, temperature=0, logprobs=2)

# 获取生成文本的结果

generated_text = res['choices'][0]['text']

# 返回生成的文本

generated_text

' hockey'

# 导入openai模块

# sample_baseball_tweet为一个字符串,描述了一则棒球消息,其中包含了球队交易的信息

sample_baseball_tweet="""BREAKING: The Tampa Bay Rays are finalizing a deal to acquire slugger Nelson Cruz from the Minnesota Twins, sources tell ESPN."""

# 调用openai模块中的Completion.create()方法,生成一个文本自动补全的结果

# ft_model为一个预训练模型,用于生成文本

# prompt参数为输入的文本,即上述的棒球消息

# max_tokens参数为生成的文本长度,此处为1

# temperature参数为生成文本的随机度,此处为0,即不加随机性

# logprobs参数为生成文本的概率值,此处为2,即返回生成文本的概率值

res = openai.Completion.create(model=ft_model, prompt=sample_baseball_tweet + '\n\n###\n\n', max_tokens=1, temperature=0, logprobs=2)

# 输出生成的文本结果

# res['choices'][0]['text']为生成的文本

print(res['choices'][0]['text'])

' baseball'

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

- Python教程

- 深入理解 MySQL 中的 HAVING 关键字和聚合函数

- Qt之QChar编码(1)

- MyBatis入门基础篇

- 用Python脚本实现FFmpeg批量转换

- FID score

- 强化学习AI构建实战 - 基于“黄金点”游戏(二)

- android studio设置gradle和gradle JDK版本

- nginx转发ingress-nginx问题记录

- 线上展览馆可以展示哪些内容,线上展览馆如何搭建

- 「模问题」AI原生小游戏强势来袭!

- 8路DI高速计数器,8路DO支持PWM输出,Modbus TCP模块 YL93 开关量输入输出

- 抖店爆品之后,为什么流量一蹶不振?

- C语言实现崩铁“寻径指津”(有源码),不看后悔37天

- 基于SpringBoot+Vue的儿童书法机构管理系统