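Simulating and testing Docker macvlan networks on a single Linux host

This note records how to simulate and test Docker macvlan networks on a single host by using a Linux bridge to connect several network namespaces.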

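References

- Link: Macvlan network driver

- Link: Docker network models: macvlan in detail, with diagrams and complete experiments

- Link: Linux iptables in detail

- Link: iptables in detail (illustrated)

- Link: Linux virtual network devices: veth pairs in detail

- Link: Illustrated guide to virtual NICs used in Linux network virtualization: VETH/MACVLAN/MACVTAP/IPVLAN

- Link: Linux Macvlan

- Link: Linux namespaces: network namespace

- Link: Linux virtual network devices: VLAN configuration in detail

- Link: Linux network devices: Bridge in detail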

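Environment

Ubuntu 22.04

Tests

Background knowledge for these tests:

1. Macvlan network concepts (see References)

2. Docker macvlan networks (see References)

3. Linux bridge concepts (see References)

4. Linux namespace concepts (see References)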

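1. Test contents

1.1 Use a Linux bridge and namespaces to simulate a Docker macvlan network reaching external devices

- Create two separate network namespaces on the host, and create a VLAN 1 sub-interface in one and a VLAN 2 sub-interface in the other.

- Create two Docker macvlan networks on the host whose subnets match those of VLAN 1 and VLAN 2.

- Create two containers and attach one to each Docker macvlan network.

- From each container, reach the VLAN 1 and VLAN 2 IPs in the separate namespaces (equivalent to reaching other devices on the network).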

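1.2 Test communication between a container on a Docker macvlan network and that network's parent interface

- By default, a container on a Docker macvlan network cannot communicate with the parent interface (see the macvlan concepts).

- Create a separate Linux macvlan interface (bridge mode) on the parent interface of the Docker macvlan network, and add a forwarding route.

- Test that the container and the parent interface can communicate through the Linux macvlan interface.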

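1.3 Test cross-subnet communication between two Docker macvlan networks through layer 3 forwarding

- Create one more namespace on the host.

- In the new namespace, create VLAN 1 and VLAN 2 sub-interfaces and give each an IP in the same subnet as the corresponding container, to act as that subnet's gateway.

- In the new namespace, enable IP forwarding (plain routing, without NAT) so that it forwards between the VLAN 1 and VLAN 2 subnets at layer 3.

- Test cross-subnet communication between the two containers on the Docker macvlan networks.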

2. Using a bridge and namespaces to simulate a Docker macvlan network reaching external devices

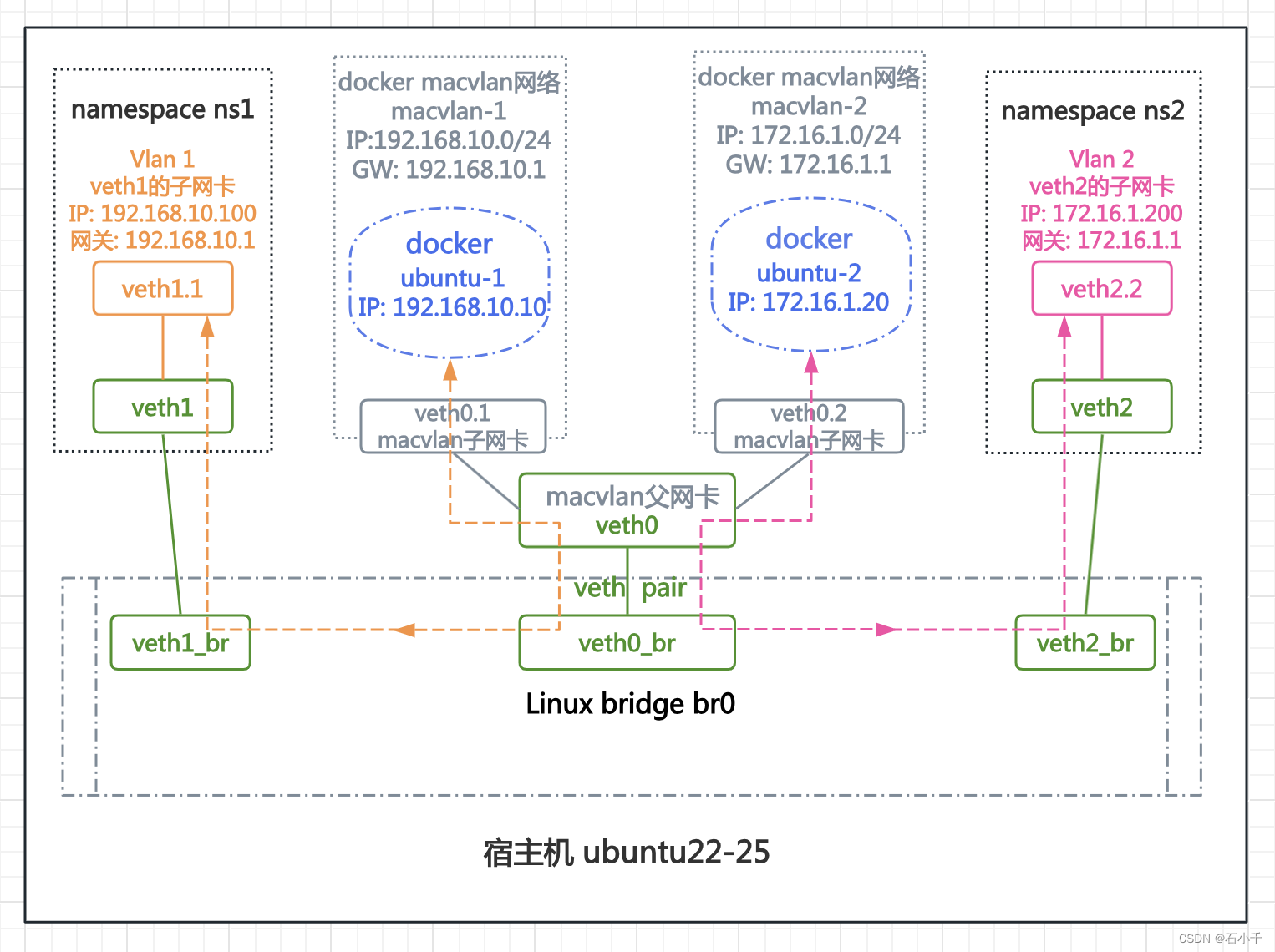

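- As shown in the figure:

- The Linux bridge connects three veth pairs. veth0 is the parent of two 802.1Q trunk bridge macvlan networks, each running one container. veth1 and veth2 sit in two separate namespaces (ns1 and ns2) and carry the VLAN 1 sub-interface (veth1.1) and the VLAN 2 sub-interface (veth2.2).

- The container ubuntu-1 and the VLAN 1 sub-interface (veth1.1) are in the same subnet but in isolated namespaces; they communicate at layer 2 through the Linux bridge br0.

- The container ubuntu-2 and the VLAN 2 sub-interface (veth2.2) are in the same subnet but in isolated namespaces; they communicate at layer 2 through the Linux bridge br0.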

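- Test steps

- Create three veth pairs: veth0 and veth0_br, veth1 and veth1_br, veth2 and veth2_br

- Create the Linux bridge br0 and add veth0_br, veth1_br, and veth2_br to it

- Create two namespaces, ns1 and ns2; move veth1 into ns1 and veth2 into ns2

- In ns1, create the VLAN 1 sub-interface, assign the IP 192.168.10.100, and bring it up

- In ns2, create the VLAN 2 sub-interface, assign the IP 172.16.1.200, and bring it up

- Create two 802.1Q trunk bridge Docker macvlan networks: macvlan-1 and macvlan-2

- Create two containers, ubuntu-1 and ubuntu-2, and attach one to each Docker macvlan network

- Test ubuntu-1 (192.168.10.10) against ns1 (192.168.10.100), and ubuntu-2 (172.16.1.20) against ns2 (172.16.1.200)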
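The non-Docker part of these steps can be collected into one script. The following is a minimal sketch, not the exact commands used in this note: it uses iproute2 (`ip link ... type bridge`, `master br0`) in place of `brctl`, and the `DRY_RUN` variable and the `run`, `add_vlan_ns`, and `build_topology` helpers are names introduced here for illustration. By default it only prints the commands; setting `DRY_RUN=0` would execute them and requires root.

```shell
#!/bin/sh
# Sketch of the bridge + namespace topology, using iproute2 only.
# DRY_RUN, run, add_vlan_ns and build_topology are illustrative names.
DRY_RUN="${DRY_RUN:-1}"   # 1 = print the commands, 0 = execute them (needs root)

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# usage: add_vlan_ns NAMESPACE DEVICE VLAN_ID CIDR
add_vlan_ns() (
    ns="$1"; dev="$2"; vid="$3"; cidr="$4"
    run ip netns add "$ns"
    run ip link set "$dev" netns "$ns"
    run ip netns exec "$ns" ip link add link "$dev" name "$dev.$vid" type vlan id "$vid"
    run ip netns exec "$ns" ip addr add "$cidr" dev "$dev.$vid"
    run ip netns exec "$ns" ip link set lo up
    run ip netns exec "$ns" ip link set "$dev" up
    run ip netns exec "$ns" ip link set "$dev.$vid" up
)

build_topology() (
    # br0 plays the role of the external switch
    run ip link add br0 type bridge
    run ip link set br0 up
    for i in 0 1 2; do
        # vethN is the endpoint, vethN_br is the port plugged into br0
        run ip link add name "veth$i" type veth peer name "veth${i}_br"
        run ip link set "veth${i}_br" master br0
        run ip link set "veth${i}_br" up
    done
    run ip link set veth0 up
    # ns1 and ns2 stand in for other devices on VLAN 1 and VLAN 2
    add_vlan_ns ns1 veth1 1 192.168.10.100/24
    add_vlan_ns ns2 veth2 2 172.16.1.200/24
)

build_topology
```

Printing the commands first makes it easy to compare them with the step-by-step sections that follow before running anything as root.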

2.1 Create three veth pairs: veth0 and veth0_br, veth1 and veth1_br, veth2 and veth2_br

// Create the veth pairs

root@ubuntu22-25:~# ip link add name veth0 type veth peer veth0_br

root@ubuntu22-25:~# ip link add name veth1 type veth peer veth1_br

root@ubuntu22-25:~# ip link add name veth2 type veth peer veth2_br

// Bring up the veth interfaces

root@ubuntu22-25:~# ip link set veth0 up

root@ubuntu22-25:~# ip link set veth0_br up

root@ubuntu22-25:~# ip link set veth1 up

root@ubuntu22-25:~# ip link set veth1_br up

root@ubuntu22-25:~# ip link set veth2 up

root@ubuntu22-25:~# ip link set veth2_br up

2.2 Create the Linux bridge br0 and add veth0_br, veth1_br, and veth2_br to it

// Create the Linux bridge br0

root@ubuntu22-25:~# brctl addbr br0

root@ubuntu22-25:~# ip link set br0 up

// Add veth0_br, veth1_br, and veth2_br

root@ubuntu22-25:~# brctl addif br0 veth0_br

root@ubuntu22-25:~# brctl addif br0 veth1_br

root@ubuntu22-25:~# brctl addif br0 veth2_br

root@ubuntu22-25:~# brctl show

bridge name bridge id STP enabled interfaces

br0 8000.ca19426b60e5 no veth0_br

veth1_br

veth2_br

docker0 8000.02429a5bdfc4 no

2.3 Create two namespaces, ns1 and ns2; move veth1 into ns1 and veth2 into ns2

// Create the namespaces

root@ubuntu22-25:~# ip netns add ns1

root@ubuntu22-25:~# ip netns add ns2

// Move the veth interfaces into the namespaces

root@ubuntu22-25:~# ip link set veth1 netns ns1

root@ubuntu22-25:~# ip link set veth2 netns ns2

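2.4 In ns1, create the VLAN 1 sub-interface, assign an IP, and bring it up

- In ns1, create a VLAN sub-interface named veth1.1 with VLAN ID 1

// Create the VLAN 1 sub-interface in ns1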

root@ubuntu22-25:~# ip netns exec ns1 ip link add link veth1 name veth1.1 type vlan id 1

- Assign the IP 192.168.10.100 to veth1.1 and bring it up

// Assign the IP and bring the interfaces up

root@ubuntu22-25:~# ip netns exec ns1 ip addr add 192.168.10.100/24 dev veth1.1

root@ubuntu22-25:~# ip netns exec ns1 ip link set lo up

root@ubuntu22-25:~# ip netns exec ns1 ip link set veth1 up

root@ubuntu22-25:~# ip netns exec ns1 ip link set veth1.1 up

- Check the interfaces in ns1

// Show the interfaces in ns1

root@ubuntu22-25:~# ip netns exec ns1 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: veth1.1@veth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 9a:72:89:8d:28:29 brd ff:ff:ff:ff:ff:ff

inet 192.168.10.100/24 scope global veth1.1

valid_lft forever preferred_lft forever

inet6 fe80::9872:89ff:fe8d:2829/64 scope link

valid_lft forever preferred_lft forever

7: veth1@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 9a:72:89:8d:28:29 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::9872:89ff:fe8d:2829/64 scope link

valid_lft forever preferred_lft forever

root@ubuntu22-25:~#

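2.5 In ns2, create the VLAN 2 sub-interface, assign an IP, and bring it up

- In ns2, create a VLAN sub-interface named veth2.2 with VLAN ID 2

// Create the VLAN 2 sub-interface in ns2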

root@ubuntu22-25:~# ip netns exec ns2 ip link add link veth2 name veth2.2 type vlan id 2

- Assign the IP 172.16.1.200 to veth2.2 and bring it up

// Assign the IP 172.16.1.200 and bring the interfaces up

root@ubuntu22-25:~# ip netns exec ns2 ip addr add 172.16.1.200/24 dev veth2.2

root@ubuntu22-25:~# ip netns exec ns2 ip link set lo up

root@ubuntu22-25:~# ip netns exec ns2 ip link set veth2 up

root@ubuntu22-25:~# ip netns exec ns2 ip link set veth2.2 up

root@ubuntu22-25:~#

- Check the interfaces in ns2

root@ubuntu22-25:~# ip netns exec ns2 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: veth2.2@veth2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 3a:bf:7f:75:16:89 brd ff:ff:ff:ff:ff:ff

inet 172.16.1.200/24 scope global veth2.2

valid_lft forever preferred_lft forever

inet6 fe80::38bf:7fff:fe75:1689/64 scope link

valid_lft forever preferred_lft forever

9: veth2@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 3a:bf:7f:75:16:89 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::38bf:7fff:fe75:1689/64 scope link

valid_lft forever preferred_lft forever

root@ubuntu22-25:~#
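veth1.1 and veth2.2 are 802.1Q sub-interfaces, so the frames they send through veth1, veth2, and br0 carry a VLAN tag, and only interfaces configured with the same VLAN ID accept them. As a small illustration of what that tag contains, the sketch below builds the 4-byte tag (TPID 0x8100 followed by the 16-bit TCI) for a given VLAN ID. `vlan_tag_hex` is a hypothetical helper written for this note, and the sketch assumes br0 forwards tagged frames unchanged, which is the default when bridge VLAN filtering is off.

```shell
#!/bin/sh
# vlan_tag_hex is an illustrative helper, not a system command.
# usage: vlan_tag_hex VLAN_ID [PRIORITY]
vlan_tag_hex() (
    vid="$1"; pcp="${2:-0}"
    # TCI = 3-bit priority (PCP), 1-bit DEI (left at 0), 12-bit VLAN ID
    printf '8100%04x\n' $(( ($pcp << 13) | ($vid & 0xfff) ))
)

vlan_tag_hex 1   # tag on frames sent by veth1.1
vlan_tag_hex 2   # tag on frames sent by veth2.2
```

Because the two tags differ, a frame from veth1.1 is not delivered to veth2.2 even though both cross the same bridge.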

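2.6 Create two 802.1Q trunk bridge Docker macvlan networks: macvlan-1 and macvlan-2

- Create the Docker macvlan network macvlan-1

- Parent interface veth0, sub-interface veth0.1

- Subnet 192.168.10.0/24, gateway 192.168.10.1 (same subnet as the VLAN 1 sub-interface in ns1)

- macvlan mode: bridge

- Create the Docker macvlan network macvlan-2

- Parent interface veth0, sub-interface veth0.2

- Subnet 172.16.1.0/24, gateway 172.16.1.1 (same subnet as the VLAN 2 sub-interface in ns2)

- macvlan mode: bridge

// Create macvlan-1 (--aux-address reserves 192.168.10.100, already used by veth1.1, so Docker does not assign it to a container)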

root@ubuntu22-25:~# docker network create -d macvlan --subnet 192.168.10.0/24 --gateway 192.168.10.1 --aux-address used_ip1=192.168.10.100 -o parent=veth0.1 -o macvlan_mode=bridge macvlan-1

319a68a47f96f72e2ee261644ca3bacadf88179ced583f5212b07ff39a0ed7ee

// Create macvlan-2 (--aux-address reserves 172.16.1.200, already used by veth2.2)

root@ubuntu22-25:~# docker network create -d macvlan --subnet 172.16.1.0/24 --gateway 172.16.1.1 --aux-address used_ip2=172.16.1.200 -o parent=veth0.2 -o macvlan_mode=bridge macvlan-2

7a292c3731731ece096de819f9c4fb9912a79175d7ed8420194d70e333d523ae

root@ubuntu22-25:~#

- List the Docker networks

// List the Docker networks

root@ubuntu22-25:~# docker network ls

NETWORK ID NAME DRIVER SCOPE

b87f126980a5 bridge bridge local

52518594eab6 host host local

319a68a47f96 macvlan-1 macvlan local

7a292c373173 macvlan-2 macvlan local

14101777743d none null local
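The parent names veth0.1 and veth0.2 passed to `-o parent=` did not exist beforehand. According to the Docker macvlan documentation, a parent name containing a dot is treated as a VLAN sub-interface of the part before the dot, and Docker creates it if needed. The sketch below only mimics that naming convention; `split_parent` is a hypothetical helper, not a Docker command.

```shell
#!/bin/sh
# split_parent is an illustrative helper: it shows how a dotted parent name
# such as "veth0.1" maps to a parent interface and a VLAN ID.
split_parent() (
    name="$1"
    parent="${name%.*}"   # text before the last dot
    vid="${name##*.}"     # text after the last dot
    echo "parent=$parent vlan_id=$vid"
)

split_parent veth0.1
split_parent veth0.2
```

Creating the same sub-interface by hand would look like `ip link add link veth0 name veth0.1 type vlan id 1`, the same form used for veth1.1 and veth2.2 in the namespaces.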

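2.7 Create two containers, ubuntu-1 and ubuntu-2, and attach them to macvlan-1 and macvlan-2

- Create the container ubuntu-1, attach it to macvlan-1, and assign the IP 192.168.10.10

- Create the container ubuntu-2, attach it to macvlan-2, and assign the IP 172.16.1.20

// Create ubuntu-1, attach it to macvlan-1, and assign the IP 192.168.10.10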

root@ubuntu22-25:~# docker run -itd --rm --network macvlan-1 --ip 192.168.10.10 --name ubuntu-1 ubuntu22:latest /bin/bash

7ee98218c2aa1dbcaae1284f289f6f2d813da9c7beeb670727a8cfe65f3a2cc7

// Create ubuntu-2, attach it to macvlan-2, and assign the IP 172.16.1.20

root@ubuntu22-25:~# docker run -itd --rm --network macvlan-2 --ip 172.16.1.20 --name ubuntu-2 ubuntu22:latest /bin/bash

5caaaa0e7232a8cd60795cad0a69b186c021f0a114e4af9a7afe14fe9b082a73

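2.8 Test ubuntu-1 against the VLAN 1 sub-interface in ns1, and ubuntu-2 against the VLAN 2 sub-interface in ns2

ubuntu-1 is in the same subnet as veth1.1 in ns1 (192.168.10.0/24), and ubuntu-2 is in the same subnet as veth2.2 in ns2 (172.16.1.0/24). The Linux bridge br0 forwards at layer 2, so hosts in the same subnet and on the same VLAN can communicate.

Note: ns1 and ns2 are separate namespaces, so veth1.1 and veth2.2 behave like the NICs of other devices on the network.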
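Whether two addresses can talk at layer 2 without a gateway comes down to whether they share a network prefix. The following sketch checks that for the addresses used in this test; `ip_to_int` and `same_subnet` are hypothetical helpers written for illustration.

```shell
#!/bin/sh
# ip_to_int and same_subnet are illustrative helpers, not system commands.
ip_to_int() (
    # split the dotted quad on "." into $1..$4 (IFS change stays in this subshell)
    IFS=.; set -- $1
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# usage: same_subnet IP1 IP2 PREFIX_LENGTH
same_subnet() (
    mask=$(( (0xffffffff << (32 - $3)) & 0xffffffff ))
    a=$(ip_to_int "$1"); b=$(ip_to_int "$2")
    [ $(( $a & $mask )) -eq $(( $b & $mask )) ]
)

same_subnet 192.168.10.10 192.168.10.100 24 && echo "ubuntu-1 and veth1.1: same subnet"
same_subnet 192.168.10.10 172.16.1.200 24 || echo "ubuntu-1 and veth2.2: different subnets"
```

The second pair is the case covered in section 4, where a layer 3 hop is needed.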

- Container ubuntu-1 and veth1.1 in ns1: reachable

- Container ubuntu-2 and veth2.2 in ns2: reachable

veth1.1 (192.168.10.100) pings ubuntu-1 (192.168.10.10): succeeds

veth2.2 (172.16.1.200) pings ubuntu-2 (172.16.1.20): succeeds

root@ubuntu22-25:~# ip netns exec ns1 ping 192.168.10.10 -c2

PING 192.168.10.10 (192.168.10.10): 56 data bytes

64 bytes from 192.168.10.10: icmp_seq=0 ttl=64 time=0.142 ms

64 bytes from 192.168.10.10: icmp_seq=1 ttl=64 time=0.145 ms

--- 192.168.10.10 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max/stddev = 0.142/0.143/0.145/0.000 ms

root@ubuntu22-25:~#

root@ubuntu22-25:~# ip netns exec ns2 ping 172.16.1.20 -c2

PING 172.16.1.20 (172.16.1.20): 56 data bytes

64 bytes from 172.16.1.20: icmp_seq=0 ttl=64 time=0.085 ms

64 bytes from 172.16.1.20: icmp_seq=1 ttl=64 time=0.111 ms

--- 172.16.1.20 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max/stddev = 0.085/0.098/0.111/0.000 ms

ubuntu-1 (192.168.10.10) pings veth1.1 (192.168.10.100): succeeds

ubuntu-2 (172.16.1.20) pings veth2.2 (172.16.1.200): succeeds

root@ubuntu22-25:~# docker exec -it ubuntu-1 ping 192.168.10.100 -c2

PING 192.168.10.100 (192.168.10.100): 56 data bytes

64 bytes from 192.168.10.100: icmp_seq=0 ttl=64 time=0.116 ms

64 bytes from 192.168.10.100: icmp_seq=1 ttl=64 time=0.104 ms

--- 192.168.10.100 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max/stddev = 0.104/0.110/0.116/0.000 ms

root@ubuntu22-25:~#

root@ubuntu22-25:~# docker exec -it ubuntu-2 ping 172.16.1.200 -c2

PING 172.16.1.200 (172.16.1.200): 56 data bytes

64 bytes from 172.16.1.200: icmp_seq=0 ttl=64 time=0.148 ms

64 bytes from 172.16.1.200: icmp_seq=1 ttl=64 time=0.109 ms

--- 172.16.1.200 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max/stddev = 0.109/0.129/0.148/0.000 ms

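3. Communication between a container on a Docker macvlan network and the network's parent interface

Note: the macvlan networks in this test use bridge mode. With vepa mode, the parent must connect to an external Linux bridge with hairpin mode enabled; see my other note: Simulating and testing macvlan vepa mode with hairpin enabled on a Linux bridge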

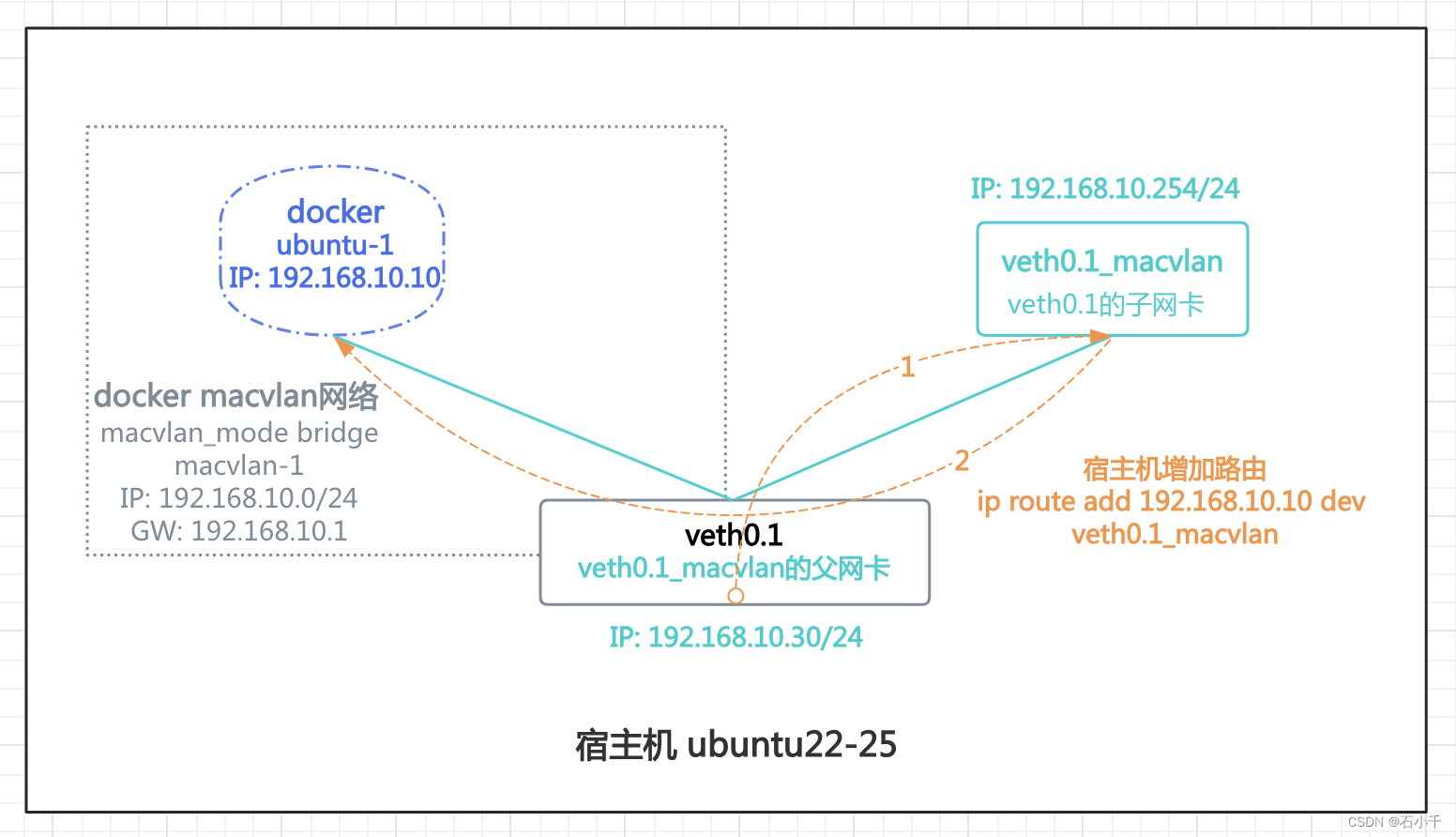

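As shown in the figure:

- The parent interface veth0.1 carries both the Docker macvlan network and veth0.1_macvlan (a Linux macvlan sub-interface). The container ubuntu-1 runs on the Docker macvlan network.

- The parent interface veth0.1 and the container ubuntu-1 are in the same subnet, but macvlan does not let them communicate directly; traffic has to go through veth0.1_macvlan.

Test steps

- Assign the IP 192.168.10.30 to the parent interface veth0.1

- Verify that the container ubuntu-1 on the Docker macvlan network cannot reach the parent interface veth0.1 directly

- Create veth0.1_macvlan, a Linux macvlan sub-interface (bridge mode) of veth0.1

- Assign the IP 192.168.10.254 to veth0.1_macvlan and bring it up

- Add a route: the parent interface veth0.1 reaches the container ubuntu-1 via veth0.1_macvlan

- Test communication between the container ubuntu-1 (192.168.10.10) and the parent interface veth0.1 (192.168.10.30)

3.1 Assign the IP 192.168.10.30 to the parent interface veth0.1

// Assign the IP 192.168.10.30 to the parent interface veth0.1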

root@ubuntu22-25:~# ip addr add 192.168.10.30/24 dev veth0.1

root@ubuntu22-25:~# ip a | grep -A3 "veth0\."

11: veth0.1@veth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether e2:a9:88:2f:bb:43 brd ff:ff:ff:ff:ff:ff

inet 192.168.10.30/24 scope global veth0.1

valid_lft forever preferred_lft forever

inet6 fe80::e0a9:88ff:fe2f:bb43/64 scope link

valid_lft forever preferred_lft forever

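3.2 Verify that the container ubuntu-1 on the Docker macvlan network cannot reach the parent interface veth0.1

Normally, a macvlan sub-interface cannot communicate directly with its parent interface (see the macvlan concepts).

- From ns1, confirm that the IP on veth0.1 (192.168.10.30) is active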

root@ubuntu22-25:~# ip netns exec ns1 ping 192.168.10.30 -c2

PING 192.168.10.30 (192.168.10.30): 56 data bytes

64 bytes from 192.168.10.30: icmp_seq=0 ttl=64 time=0.089 ms

64 bytes from 192.168.10.30: icmp_seq=1 ttl=64 time=0.169 ms

--- 192.168.10.30 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max/stddev = 0.089/0.129/0.169/0.040 ms

root@ubuntu22-25:~#

- The container ubuntu-1 (192.168.10.10) cannot reach the parent interface veth0.1 (192.168.10.30)

root@ubuntu22-25:~# docker exec -it ubuntu-1 ping 192.168.10.30 -c2

PING 192.168.10.30 (192.168.10.30): 56 data bytes

92 bytes from 7ee98218c2aa (192.168.10.10): Destination Host Unreachable

92 bytes from 7ee98218c2aa (192.168.10.10): Destination Host Unreachable

--- 192.168.10.30 ping statistics ---

2 packets transmitted, 0 packets received, 100% packet loss

root@ubuntu22-25:~#

3.3 Create veth0.1_macvlan, a Linux macvlan sub-interface of veth0.1

// Create the Linux macvlan sub-interface

root@ubuntu22-25:~# ip link add link veth0.1 name veth0.1_macvlan type macvlan mode bridge

root@ubuntu22-25:~#

3.4 Assign the IP 192.168.10.254 to veth0.1_macvlan and bring it up

root@ubuntu22-25:~# ip addr add 192.168.10.254/24 dev veth0.1_macvlan

root@ubuntu22-25:~# ip link set veth0.1_macvlan up

root@ubuntu22-25:~# ip a | grep -A4 veth0.1_macvlan

15: veth0.1_macvlan@veth0.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 06:16:bf:f7:2c:f8 brd ff:ff:ff:ff:ff:ff

inet 192.168.10.254/24 scope global veth0.1_macvlan

valid_lft forever preferred_lft forever

inet6 fe80::416:bfff:fef7:2cf8/64 scope link

root@ubuntu22-25:~#

3.5 Add a route: the parent interface veth0.1 reaches the container ubuntu-1 via veth0.1_macvlan

// Add the route

root@ubuntu22-25:~# ip route add 192.168.10.10 dev veth0.1_macvlan

// Show the routes

root@ubuntu22-25:~# ip route

default via 10.211.55.1 dev enp0s5 proto static

10.211.55.0/24 dev enp0s5 proto kernel scope link src 10.211.55.25

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 linkdown

192.168.10.0/24 dev veth0.1 proto kernel scope link src 192.168.10.30

192.168.10.0/24 dev veth0.1_macvlan proto kernel scope link src 192.168.10.254

192.168.10.10 dev veth0.1_macvlan scope link

root@ubuntu22-25:~#
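The routing table above now has three entries that cover 192.168.10.10: two /24 routes and the new host route. The host route is chosen because it is the most specific match. The sketch below reproduces that longest-prefix selection for these three entries. `ip_to_int` and `pick_route` are hypothetical helpers; writing the host route as /32 and letting ties go to the first entry are simplifying assumptions of this sketch, not a description of the kernel's full lookup.

```shell
#!/bin/sh
# ip_to_int and pick_route are illustrative helpers, not system commands.
ip_to_int() (
    IFS=.; set -- $1
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# usage: pick_route DEST "PREFIX/LEN DEV" ...
pick_route() (
    dst=$(ip_to_int "$1"); shift
    best_len=-1; best_dev=none
    for route in "$@"; do
        prefix=${route%% *}; dev=${route##* }
        net=${prefix%/*}; len=${prefix#*/}
        mask=$(( (0xffffffff << (32 - $len)) & 0xffffffff ))
        # keep the matching route with the longest prefix (first one wins a tie)
        if [ $(( $dst & $mask )) -eq $(( $(ip_to_int "$net") & $mask )) ] &&
           [ "$len" -gt "$best_len" ]; then
            best_len=$len; best_dev=$dev
        fi
    done
    echo "$best_dev"
)

pick_route 192.168.10.10 \
    "192.168.10.0/24 veth0.1" \
    "192.168.10.0/24 veth0.1_macvlan" \
    "192.168.10.10/32 veth0.1_macvlan"
```

On the real host, `ip route get 192.168.10.10` reports which device the kernel actually selects.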

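3.6 Test communication between the container ubuntu-1 (192.168.10.10) and the parent interface veth0.1 (192.168.10.30)

- The container ubuntu-1 (192.168.10.10) pings the parent interface veth0.1 (192.168.10.30): succeeds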

root@ubuntu22-25:~# docker exec -it ubuntu-1 ping 192.168.10.30 -c2

PING 192.168.10.30 (192.168.10.30): 56 data bytes

64 bytes from 192.168.10.30: icmp_seq=0 ttl=64 time=0.437 ms

64 bytes from 192.168.10.30: icmp_seq=1 ttl=64 time=0.092 ms

--- 192.168.10.30 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max/stddev = 0.092/0.265/0.437/0.173 ms

root@ubuntu22-25:~#

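Open question: it is commonly said that two sub-interfaces under the same macvlan parent need promiscuous mode (promisc) enabled on the parent in order to communicate. This test used virtual interfaces and worked without enabling it; the reason is still to be investigated. One likely factor is that in bridge mode the macvlan driver switches frames between sub-interfaces of the same parent internally.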

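4. Cross-subnet communication between two Docker macvlan networks through layer 3 forwarding

Topology for this test:

- Keep the lab environment from the two tests above.

- Add one more veth pair. veth_forward_br connects to the Linux bridge br0; veth_forward belongs to the namespace ns_forward and carries two VLAN sub-interfaces: veth_forward.1 (VLAN 1) and veth_forward.2 (VLAN 2).

- The containers ubuntu-1 and ubuntu-2 are in different subnets. They communicate through br0, with the namespace ns_forward forwarding between the subnets at layer 3 (IP forwarding, not NAT).

- The container ubuntu-1 and the sub-interface veth2.2 are in different subnets and isolated namespaces. They also communicate through br0, with ns_forward forwarding at layer 3.

Test steps

- Create one veth pair: veth_forward and veth_forward_br

- Add veth_forward_br to the bridge br0

- Create the namespace ns_forward and move veth_forward into it

- In ns_forward, create the VLAN 1 and VLAN 2 sub-interfaces, assign IPs, and bring them up

- Test that containers on the two Docker macvlan networks (different subnets) communicate through layer 3 forwarding

- Test that a namespace IP and a container IP in different subnets communicate through layer 3 forwarding

4.1 Create one veth pair: veth_forward and veth_forward_br

// Create the veth pair veth_forward and veth_forward_br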

root@ubuntu22-25:~# ip link add name veth_forward type veth peer veth_forward_br

// Bring up the interfaces

root@ubuntu22-25:~# ip link set veth_forward up

root@ubuntu22-25:~# ip link set veth_forward_br up

// Show the interfaces

root@ubuntu22-25:~# ip a | grep -A5 veth_forward

16: veth_forward_br@veth_forward: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 72:50:65:6a:ef:bc brd ff:ff:ff:ff:ff:ff

inet6 fe80::7050:65ff:fe6a:efbc/64 scope link

valid_lft forever preferred_lft forever

17: veth_forward@veth_forward_br: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fa:35:0f:5b:70:74 brd ff:ff:ff:ff:ff:ff

inet6 fe80::f835:fff:fe5b:7074/64 scope link

valid_lft forever preferred_lft forever

root@ubuntu22-25:~#

4.2 Add veth_forward_br to the bridge br0

// Attach it to the Linux bridge br0

root@ubuntu22-25:~# brctl addif br0 veth_forward_br

root@ubuntu22-25:~# brctl show

bridge name bridge id STP enabled interfaces

br0 8000.ca19426b60e5 no veth0_br

veth1_br

veth2_br

veth_forward_br

docker0 8000.02429a5bdfc4 no

root@ubuntu22-25:~#

4.3 Create the namespace ns_forward and move veth_forward into it

// Create ns_forward, list the namespaces, then move veth_forward into it

root@ubuntu22-25:~# ip netns add ns_forward

root@ubuntu22-25:~# ip netns list

ns_forward

ns2 (id: 1)

ns1 (id: 0)

root@ubuntu22-25:~#

root@ubuntu22-25:~# ip link set veth_forward netns ns_forward

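4.4 In ns_forward, create the VLAN 1 and VLAN 2 sub-interfaces, assign IPs, and bring them up

- Create the VLAN 1 sub-interface

- Name: veth_forward.1

- VLAN ID: 1

- IP: 192.168.10.1/24, used as the gateway

- Create the VLAN 2 sub-interface

- Name: veth_forward.2

- VLAN ID: 2

- IP: 172.16.1.1/24, used as the gateway

// Create the VLAN sub-interfaces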

root@ubuntu22-25:~# ip netns exec ns_forward ip link add link veth_forward name veth_forward.1 type vlan id 1

root@ubuntu22-25:~# ip netns exec ns_forward ip link add link veth_forward name veth_forward.2 type vlan id 2

// Assign IPs to the VLAN sub-interfaces

root@ubuntu22-25:~# ip netns exec ns_forward ip addr add 192.168.10.1/24 dev veth_forward.1

root@ubuntu22-25:~# ip netns exec ns_forward ip addr add 172.16.1.1/24 dev veth_forward.2

// Bring up the VLAN sub-interfaces

root@ubuntu22-25:~# ip netns exec ns_forward ip link set lo up

root@ubuntu22-25:~# ip netns exec ns_forward ip link set veth_forward up

root@ubuntu22-25:~# ip netns exec ns_forward ip link set veth_forward.1 up

root@ubuntu22-25:~# ip netns exec ns_forward ip link set veth_forward.2 up

- Check the resulting interface configuration in ns_forward

root@ubuntu22-25:~# ip netns exec ns_forward ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: veth_forward.1@veth_forward: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fa:35:0f:5b:70:74 brd ff:ff:ff:ff:ff:ff

inet 192.168.10.1/24 scope global veth_forward.1

valid_lft forever preferred_lft forever

inet6 fe80::f835:fff:fe5b:7074/64 scope link

valid_lft forever preferred_lft forever

3: veth_forward.2@veth_forward: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fa:35:0f:5b:70:74 brd ff:ff:ff:ff:ff:ff

inet 172.16.1.1/24 scope global veth_forward.2

valid_lft forever preferred_lft forever

inet6 fe80::f835:fff:fe5b:7074/64 scope link

valid_lft forever preferred_lft forever

17: veth_forward@if16: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fa:35:0f:5b:70:74 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::f835:fff:fe5b:7074/64 scope link

valid_lft forever preferred_lft forever

root@ubuntu22-25:~#

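- Check that ns_forward can reach the containers ubuntu-1 and ubuntu-2

- ping ubuntu-1 at 192.168.10.10: succeeds, so the gateway IP is active

- ping ubuntu-2 at 172.16.1.20: succeeds, so the gateway IP is active

// ping ubuntu-1 at 192.168.10.10: succeeds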

root@ubuntu22-25:~# ip netns exec ns_forward ping 192.168.10.10 -c2

PING 192.168.10.10 (192.168.10.10): 56 data bytes

64 bytes from 192.168.10.10: icmp_seq=0 ttl=64 time=0.089 ms

64 bytes from 192.168.10.10: icmp_seq=1 ttl=64 time=0.084 ms

--- 192.168.10.10 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max/stddev = 0.084/0.086/0.089/0.000 ms

// ping ubuntu-2 at 172.16.1.20: succeeds

root@ubuntu22-25:~# ip netns exec ns_forward ping 172.16.1.20 -c2

PING 172.16.1.20 (172.16.1.20): 56 data bytes

64 bytes from 172.16.1.20: icmp_seq=0 ttl=64 time=0.173 ms

64 bytes from 172.16.1.20: icmp_seq=1 ttl=64 time=0.087 ms

--- 172.16.1.20 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max/stddev = 0.087/0.130/0.173/0.043 ms

root@ubuntu22-25:~#

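4.5 Test that containers on two Docker macvlan networks in different subnets communicate through layer 3 forwarding

- The two containers, ubuntu-1 (192.168.10.10) and ubuntu-2 (172.16.1.20), are on Docker macvlan networks in different subnets and cannot communicate by default.

// ubuntu-1 (192.168.10.10) cannot reach ubuntu-2 (172.16.1.20)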

root@ubuntu22-25:~# docker exec -it ubuntu-1 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

13: eth0@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:c0:a8:0a:0a brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.10.10/24 brd 192.168.10.255 scope global eth0

valid_lft forever preferred_lft forever

root@ubuntu22-25:~#

root@ubuntu22-25:~# docker exec -it ubuntu-1 ping 172.16.1.20 -c2

PING 172.16.1.20 (172.16.1.20): 56 data bytes

--- 172.16.1.20 ping statistics ---

2 packets transmitted, 0 packets received, 100% packet loss

root@ubuntu22-25:~#

// ubuntu-2 (172.16.1.20) cannot reach ubuntu-1 (192.168.10.10)

root@ubuntu22-25:~# docker exec -it ubuntu-2 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

14: eth0@if12: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:10:01:14 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.16.1.20/24 brd 172.16.1.255 scope global eth0

valid_lft forever preferred_lft forever

root@ubuntu22-25:~# docker exec -it ubuntu-2 ping 192.168.10.10 -c2

PING 192.168.10.10 (192.168.10.10): 56 data bytes

--- 192.168.10.10 ping statistics ---

2 packets transmitted, 0 packets received, 100% packet loss

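- Enable IP forwarding in the namespace ns_forward

Note: the setting is per network namespace, so run sysctl -w net.ipv4.ip_forward=1 inside the namespace. This enables plain layer 3 forwarding; no NAT rules are configured.

// Enable IP forwarding in the namespace ns_forward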

root@ubuntu22-25:~# ip netns exec ns_forward sysctl -w net.ipv4.ip_forward=1

net.ipv4.ip_forward = 1

// Check the setting

root@ubuntu22-25:~# ip netns exec ns_forward sysctl net.ipv4.ip_forward

net.ipv4.ip_forward = 1

- Test again: ubuntu-1 (192.168.10.10) pings ubuntu-2 (172.16.1.20); with layer 3 forwarding it succeeds

- Test again: ubuntu-2 (172.16.1.20) pings ubuntu-1 (192.168.10.10); with layer 3 forwarding it succeeds

// ubuntu-1 (192.168.10.10) pings ubuntu-2 (172.16.1.20): succeeds

root@ubuntu22-25:~# docker exec -it ubuntu-1 ping 172.16.1.20 -c2

PING 172.16.1.20 (172.16.1.20): 56 data bytes

64 bytes from 172.16.1.20: icmp_seq=0 ttl=63 time=0.370 ms

64 bytes from 172.16.1.20: icmp_seq=1 ttl=63 time=0.131 ms

--- 172.16.1.20 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max/stddev = 0.131/0.251/0.370/0.120 ms

// ubuntu-2 (172.16.1.20) pings ubuntu-1 (192.168.10.10): succeeds

root@ubuntu22-25:~# docker exec -it ubuntu-2 ping 192.168.10.10 -c2

PING 192.168.10.10 (192.168.10.10): 56 data bytes

64 bytes from 192.168.10.10: icmp_seq=0 ttl=63 time=0.085 ms

64 bytes from 192.168.10.10: icmp_seq=1 ttl=63 time=0.124 ms

--- 192.168.10.10 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max/stddev = 0.085/0.105/0.124/0.000 ms

root@ubuntu22-25:~#
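The replies in this section show ttl=63, while the same-subnet replies in sections 2.8 and 3.6 show ttl=64. The difference of one is the hop through ns_forward, which decrements the TTL when it forwards a packet. The sketch below extracts that hop count from a reply line. `hops_from_reply` is a hypothetical helper, and it assumes the sender started with a TTL of 64, as the same-subnet replies suggest.

```shell
#!/bin/sh
# hops_from_reply is an illustrative helper, not a system command.
# Assumption: the replying host sends with an initial TTL of 64.
hops_from_reply() (
    ttl=$(printf '%s\n' "$1" | sed -n 's/.*ttl=\([0-9][0-9]*\).*/\1/p')
    [ -n "$ttl" ] || exit 1          # no ttl= field found in the line
    echo $(( 64 - $ttl ))
)

hops_from_reply "64 bytes from 192.168.10.100: icmp_seq=0 ttl=64 time=0.116 ms"
hops_from_reply "64 bytes from 172.16.1.20: icmp_seq=0 ttl=63 time=0.370 ms"
```

A result of 0 means the reply arrived at layer 2 within the subnet; 1 means it crossed one router, here ns_forward.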

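4.6 Test that a namespace IP and a container IP in different subnets communicate through layer 3 forwarding

- In ns1, add a default route via 192.168.10.1

- In ns2, add a default route via 172.16.1.1

// Add default routes in ns1 and ns2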

root@ubuntu22-25:~# ip netns exec ns1 ip route add default via 192.168.10.1

root@ubuntu22-25:~# ip netns exec ns2 ip route add default via 172.16.1.1

// Show the routing tables of ns1 and ns2

root@ubuntu22-25:~# ip netns exec ns1 ip route

default via 192.168.10.1 dev veth1.1

192.168.10.0/24 dev veth1.1 proto kernel scope link src 192.168.10.100

root@ubuntu22-25:~# ip netns exec ns2 ip route

default via 172.16.1.1 dev veth2.2

172.16.1.0/24 dev veth2.2 proto kernel scope link src 172.16.1.200

- ns1 (192.168.10.100) pings ubuntu-2 (172.16.1.20): forwarded at layer 3, succeeds

- ns2 (172.16.1.200) pings ubuntu-1 (192.168.10.10): forwarded at layer 3, succeeds

// ns1 (192.168.10.100) pings ubuntu-2 (172.16.1.20): succeeds

root@ubuntu22-25:~# ip netns exec ns1 ping 172.16.1.20 -c2

PING 172.16.1.20 (172.16.1.20): 56 data bytes

64 bytes from 172.16.1.20: icmp_seq=0 ttl=63 time=0.119 ms

64 bytes from 172.16.1.20: icmp_seq=1 ttl=63 time=0.197 ms

--- 172.16.1.20 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max/stddev = 0.119/0.158/0.197/0.039 ms

// ns2 (172.16.1.200) pings ubuntu-1 (192.168.10.10): succeeds

root@ubuntu22-25:~# ip netns exec ns2 ping 192.168.10.10 -c2

PING 192.168.10.10 (192.168.10.10): 56 data bytes

64 bytes from 192.168.10.10: icmp_seq=0 ttl=63 time=0.135 ms

64 bytes from 192.168.10.10: icmp_seq=1 ttl=63 time=0.126 ms

--- 192.168.10.10 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max/stddev = 0.126/0.131/0.135/0.000 ms

root@ubuntu22-25:~#

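Summary

Testing Docker macvlan networks on a single host with a Linux bridge and several namespaces confirmed the following:

- Docker macvlan exposes containers directly on the network, where they communicate with other devices at layer 2.

- A Docker macvlan sub-interface cannot communicate directly with its parent interface; adding a macvlan sub-interface on the parent and routing through it makes indirect communication possible.

- Docker macvlan networks in different subnets can communicate across subnets through layer 3 forwarding.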
