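DataX Sync (High-Availability HDFS Version)

Published: January 15, 2024

This is a DataX JSON job script that syncs a MySQL table to Hive. Hive stores its data on HDFS, so writing to Hive has to go through hdfswriter.

MySQL -> Hive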

    {
      "job": {
        "setting": {
          "speed": {
            "channel": 3,
            "byte": 1048576
          },
          "errorLimit": {
            "record": 0,
            "percentage": 0.02
          }
        },
        "content": [
          {
            "reader": {
              "name": "mysqlreader",
              "parameter": {
                "username": "root",
                "password": "******",
                "column": [
                  "`DEPTNO`",
                  "`DNAME`",
                  "`LOC`"
                ],
                "splitPk": "",
                "connection": [
                  {
                    "table": [
                      "dept"
                    ],
                    "jdbcUrl": [
                      "jdbc:mysql://192.168.88.101:3306/scott"
                    ]
                  }
                ]
              }
            },
            "writer": {
              "name": "hdfswriter",
              "parameter": {
                "hadoopConfig": {
                  "dfs.nameservices": "hdfs-xxy",
                  "dfs.ha.namenodes.hdfs-xxy": "nn1,nn2",
                  "dfs.namenode.rpc-address.hdfs-xxy.nn1": "node01:8020",
                  "dfs.namenode.rpc-address.hdfs-xxy.nn2": "node02:8020",
                  "dfs.client.failover.proxy.provider.hdfs-xxy": "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"
                },
                "defaultFS": "hdfs://hdfs-xxy",
                "fileType": "text",
                "path": "/hive/warehouse/test.db/dept_test/dt=${dt}",
                "fileName": "test_dept",
                "writeMode": "append",
                "fieldDelimiter": "|",
                "column": [
                  {
                    "name": "DEPTNO",
                    "type": "bigint"
                  },
                  {
                    "name": "DNAME",
                    "type": "string"
                  },
                  {
                    "name": "LOC",
                    "type": "string"
                  },
                  {
                    "name": "dt",
                    "type": "string"
                  }
                ]
              }
            }
          }
        ]
      }
    }
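The `${dt}` placeholder in the writer's `path` has to be filled in when the job is launched. DataX's `datax.py` launcher accepts `-p "-Dname=value"` for this. Below is a minimal sketch of building that command from Python. The install directory `/opt/datax` and the job file name `mysql2hive.json` are assumptions for illustration, not values from this article.

```python
import subprocess
from datetime import date


def build_datax_command(datax_home, job_file, dt):
    """Return the argv list that launches DataX with ${dt} substituted."""
    return [
        "python",                       # interpreter used to run the launcher
        f"{datax_home}/bin/datax.py",   # DataX launcher script
        "-p", f"-Ddt={dt}",             # -D variables replace ${dt} in the job JSON
        job_file,
    ]


def run_job(datax_home, job_file, dt):
    """Launch the job and raise CalledProcessError if DataX exits non-zero."""
    subprocess.run(build_datax_command(datax_home, job_file, dt), check=True)


if __name__ == "__main__":
    # Hypothetical paths: adjust to your own installation.
    cmd = build_datax_command("/opt/datax", "mysql2hive.json", date.today().isoformat())
    print(" ".join(cmd))
```

As far as I know, hdfswriter expects the target directory to exist already, so the `dt=...` partition directory likely needs to be created first (for example by adding the partition in Hive) before `run_job` is called.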

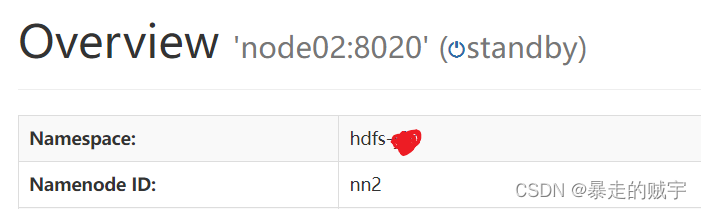

This is the information shown on my HDFS page. Adjust the JSON to match the values of your own cluster.
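The `hadoopConfig` keys all derive from the nameservice name and the NameNode IDs, so they can be generated rather than typed by hand. A minimal sketch follows. The helper name `build_ha_hadoop_config` is hypothetical, and the sample values are the ones from this article's cluster.

```python
import json


def build_ha_hadoop_config(nameservice, namenodes):
    """Build the hadoopConfig block for an HA HDFS cluster.

    namenodes maps each NameNode id to its "host:rpc_port" address.
    """
    config = {
        "dfs.nameservices": nameservice,
        # Comma-separated NameNode ids, in the order they were given.
        f"dfs.ha.namenodes.{nameservice}": ",".join(namenodes),
        f"dfs.client.failover.proxy.provider.{nameservice}":
            "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider",
    }
    # One rpc-address entry per NameNode.
    for nn_id, address in namenodes.items():
        config[f"dfs.namenode.rpc-address.{nameservice}.{nn_id}"] = address
    return config


if __name__ == "__main__":
    cfg = build_ha_hadoop_config(
        "hdfs-xxy", {"nn1": "node01:8020", "nn2": "node02:8020"}
    )
    # defaultFS must point at the nameservice, not at a single NameNode.
    print(json.dumps({"hadoopConfig": cfg, "defaultFS": "hdfs://hdfs-xxy"}, indent=2))
```

Replace the nameservice, NameNode IDs, and addresses with the ones shown on your own HDFS page or in your `hdfs-site.xml`.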

Hive -> MySQL

    {
      "job": {
        "setting": {
          "speed": {
            "channel": 3,
            "byte": 1048576
          },
          "errorLimit": {
            "record": 0,
            "percentage": 0.02
          }
        },
        "content": [
          {
            "reader": {
              "name": "hdfsreader",
              "parameter": {
                "column": ["*"],
                "hadoopConfig": {
                  "dfs.nameservices": "hdfs-xxy",
                  "dfs.ha.namenodes.hdfs-xxy": "nn1,nn2",
                  "dfs.namenode.rpc-address.hdfs-xxy.nn1": "node01:8020",
                  "dfs.namenode.rpc-address.hdfs-xxy.nn2": "node02:8020",
                  "dfs.client.failover.proxy.provider.hdfs-xxy": "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"
                },
                "defaultFS": "hdfs://hdfs-xxy",
                "fileType": "text",
                "path": "/hive/warehouse/testads/test_ads_scott",
                "fieldDelimiter": "|"
              }
            },
            "writer": {
              "name": "mysqlwriter",
              "parameter": {
                "username": "root",
                "password": "123456",
                "column": ["empno", "ename", "job", "sal", "dt"],
                "writeMode": "replace",
                "connection": [
                  {
                    "table": ["ads_test"],
                    "jdbcUrl": "jdbc:mysql://192.168.88.101:3306/scott"
                  }
                ]
              }
            }
          }
        ]
      }
    }
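With `"column": ["*"]`, hdfsreader reads every field of each text line as a string, and the fields are matched to the mysqlwriter `column` list by position, not by name. The sketch below only illustrates that positional mapping. It is not DataX code, the function name `map_line_to_row` is hypothetical, and the sample line is made-up data.

```python
def map_line_to_row(line, columns, delimiter="|"):
    """Split one text line and pair its fields with the writer columns by position."""
    fields = line.rstrip("\n").split(delimiter)
    if len(fields) != len(columns):
        # A field-count mismatch like this is what makes a sync job fail.
        raise ValueError(
            f"line has {len(fields)} fields but writer expects {len(columns)}"
        )
    return dict(zip(columns, fields))


if __name__ == "__main__":
    writer_columns = ["empno", "ename", "job", "sal", "dt"]
    # Made-up sample line in the same "|"-delimited layout as the Hive text file.
    sample = "7369|SMITH|CLERK|800|2024-01-15"
    print(map_line_to_row(sample, writer_columns))
```

If the Hive file's field order differs from the MySQL table, reorder the writer's `column` list to match the file rather than the table definition.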

Source: https://blog.csdn.net/xxy1022_/article/details/135593248
