Java Can Do This Too! Video Compositing with JavaCV: Concatenating Videos Back to Back and Merging Their Pictures

Published: December 18, 2023

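Preface

This chapter shows how to use Java code to either merge the pictures of two videos into a single frame or concatenate two videos back to back. It relies on the open-source JavaCV library. The code has been revised repeatedly and is the fastest implementation I could come up with; if you have a better or faster one, please leave a comment.

First, a look at the results:

Two videos concatenated back to back

Two videos merged into one picture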

JAVACV

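Introduction

JavaCV is a wrapper library built on JavaCPP (a layer of encapsulation over JNI). It bundles several open-source computer vision libraries, wrapping commonly used libraries and utility classes in the field, including FFmpeg, OpenCV, TensorFlow, Caffe, Tesseract, libdc1394, OpenKinect, videoInput, and ARToolKitPlus.

JavaCV is available under both the Apache License, Version 2.0 and GPLv2, and its interfaces can be called from Java on Windows, Linux, macOS, Android, and iOS.

JavaCV is a Java computer vision library that provides interfaces to and wrappers around multiple computer vision libraries, so Java developers can use computer vision techniques conveniently. Computer vision is an important branch of artificial intelligence that spans image processing, video analysis, pattern recognition, machine learning, and other fields. Many applications depend on it, for example face recognition, object detection, object tracking, and gesture recognition.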

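Features of JavaCV

- Cross-platform: JavaCV supports Windows, Linux, macOS, and other operating systems, as well as mobile platforms such as Android and iOS, so Java developers can use computer vision techniques on different platforms.

- Rich interfaces and wrappers: JavaCV provides interfaces to and wrappers around multiple computer vision libraries, such as FFmpeg, OpenCV, TensorFlow, Caffe, and Tesseract. These are commonly used libraries and utility classes in the field, and JavaCV's wrappers make their functionality readily available to Java developers.

- Easy to use: in most cases you only need to call the corresponding interface to get the functionality you want. JavaCV also ships sample code and documentation to help developers learn and use it.

- Extensible: JavaCV's design is flexible, so new interfaces and features can be added easily. It is also open source with community support, which makes customization and discussion easier.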

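Use cases for JavaCV

- Image processing: JavaCV provides image processing functions such as image conversion, image enhancement, and image segmentation. These can be used in applications such as face recognition, object detection, and image recognition.

- Video analysis: JavaCV provides video analysis functions such as frame extraction, video stream processing, and object tracking in video. These can be used in video surveillance, video analysis, video editing, and similar applications.

- Machine learning: through the libraries it wraps, JavaCV provides machine learning functions such as feature extraction, classifier training, and model evaluation. These can be used for tasks such as image classification, object detection, and sentiment analysis.

- 3D reconstruction: JavaCV provides 3D reconstruction functions such as 3D point cloud processing and 3D model reconstruction. These can be used in 3D scanning, 3D modeling, 3D printing, and similar applications.

- Other applications: beyond the scenarios above, JavaCV can be used for other computer vision tasks, such as gesture recognition and object recognition.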

Add the jar dependency

<!-- https://mvnrepository.com/artifact/org.bytedeco/javacv-platform -->

<dependency>

<groupId>org.bytedeco</groupId>

<artifactId>javacv-platform</artifactId>

<version>1.5.5</version>

</dependency>

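Complete code

The core code is implemented in a single class. In IDEA or another editor, create a class named VideoMergeJoin, paste in the code below, then right-click and choose Run to execute the main method.

- The mergeVideo method merges two videos with the same frame rate into one picture, placing them side by side.

- The joinVideo method concatenates two videos back to back: the video at the first path argument comes first and the video at the second path argument follows it.

- Change VIDEO_PATH1 and VIDEO_PATH2 to the paths of the video files you want to merge or concatenate.

- Change MERGE_PATH and JOIN_PATH to the output file paths for the merged and concatenated videos.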
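The mergeVideo method in the class below enforces the "same frame rate" requirement by comparing the two `getFrameRate()` values with an exact `!=`. Exact comparison of doubles can reject two videos whose rates differ only by rounding, so a tolerant check may be preferable in some cases. The following is a minimal sketch of that idea in plain Java; the class name `FrameRateCheckSketch`, the helper `sameFrameRate`, and the 0.01 fps tolerance are all assumptions made for illustration and are not part of the original code or of JavaCV.

```java
class FrameRateCheckSketch {

    /**
     * Hypothetical helper: returns true when the two frame rates differ
     * by at most the given tolerance (in frames per second).
     */
    static boolean sameFrameRate(double fps1, double fps2, double tolerance) {
        return Math.abs(fps1 - fps2) <= tolerance;
    }

    public static void main(String[] args) {
        double ntsc = 30000.0 / 1001.0; // about 29.97 fps

        // Exact comparison treats these two values as different rates.
        System.out.println(ntsc != 29.97);                      // prints "true"

        // The tolerant comparison accepts them as the same rate...
        System.out.println(sameFrameRate(ntsc, 29.97, 0.01));   // prints "true"

        // ...but still rejects a genuinely different rate.
        System.out.println(sameFrameRate(ntsc, 25.0, 0.01));    // prints "false"
    }
}
```

If you adopt something like this, the tolerance is a judgment call: too large and genuinely mismatched videos drift out of sync over time.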

import org.bytedeco.javacv.*;

import org.bytedeco.javacv.Frame;

import java.awt.*;

import java.awt.image.BufferedImage;

/**

* @author tarzan

*/

public class VideoMergeJoin {

final static String VIDEO_PATH1 = "E:\\test.mp4";

final static String VIDEO_PATH2 = "E:\\aivideo\\20231217-190828_with_snd.mp4";

final static String MERGE_PATH= "E:\\test_merge.mp4";

final static String JOIN_PATH= "E:\\test_join.mp4";

public static void main(String[] args) throws Exception {

long start=System.currentTimeMillis();

mergeVideo(VIDEO_PATH1,VIDEO_PATH2,MERGE_PATH);

joinVideo(VIDEO_PATH1,VIDEO_PATH2,JOIN_PATH);

System.out.println("Elapsed: "+(System.currentTimeMillis()-start)+" ms");

}

public static void joinVideo(String videoPath1,String videoPath2, String outPath) throws Exception {

// Initialize the video sources

FFmpegFrameGrabber grabber1 = new FFmpegFrameGrabber(videoPath1);

FFmpegFrameGrabber grabber2 = new FFmpegFrameGrabber(videoPath2);

grabber1.start();

grabber2.start();

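// Initialize the output video, copying the frame rate and audio sample rate from the first source

FFmpegFrameRecorder recorder = new FFmpegFrameRecorder(outPath, grabber1.getImageWidth(), grabber1.getImageHeight(),grabber1.getAudioChannels());

recorder.setFrameRate(grabber1.getFrameRate());

recorder.setSampleRate(grabber1.getSampleRate());

// Record the video

recorder.start();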

while (true){

Frame frame= grabber1.grab();

if (frame == null) {

break;

}

recorder.record(frame);

}

while (true){

Frame frame= grabber2.grab();

if (frame == null) {

break;

}

recorder.record(frame);

}

// Release resources

grabber1.stop();

grabber2.stop();

recorder.stop();

}

public static void mergeVideo(String videoPath1,String videoPath2, String outPath) throws Exception {

// Initialize the video sources

FFmpegFrameGrabber grabber1 = new FFmpegFrameGrabber(videoPath1);

FFmpegFrameGrabber grabber2 = new FFmpegFrameGrabber(videoPath2);

grabber1.start();

grabber2.start();

// Check that both videos have the same frame rate

if (grabber1.getFrameRate() != grabber2.getFrameRate()) {

throw new Exception("Video frame rates are not the same!");

}

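// Initialize the output video: the width is the sum of both sources; the frame rate and audio sample rate are copied from the first source

FFmpegFrameRecorder recorder = new FFmpegFrameRecorder(outPath, (grabber1.getImageWidth()+grabber2.getImageWidth()), grabber1.getImageHeight(),grabber1.getAudioChannels());

recorder.setFrameRate(grabber1.getFrameRate());

recorder.setSampleRate(grabber1.getSampleRate());

// Record the video

recorder.start();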

int i=1;

int videoSize = grabber1.getLengthInVideoFrames();

while (true){

Frame frame1= grabber1.grab();

if (frame1 == null) {

break;

}

if (frame1.image != null) {

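// Grab the matching image frame from the second video; stop if the second video has run out of frames

Frame frame2=grabber2.grabImage();

if (frame2 == null) {

break;

}

System.out.println("Video has " + videoSize + " frames, processing frame " + i);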

// Create a new BufferedImage to hold the merged picture

BufferedImage combinedImage = new BufferedImage(grabber1.getImageWidth()+grabber2.getImageWidth(), grabber1.getImageHeight(), BufferedImage.TYPE_3BYTE_BGR);

Graphics2D g2d = combinedImage.createGraphics();

// Draw the two video frames side by side on the merged picture

g2d.drawImage(Java2DFrameUtils.toBufferedImage(frame1), 0, 0, null);

g2d.drawImage(Java2DFrameUtils.toBufferedImage(frame2), grabber1.getImageWidth(), 0, null);

g2d.dispose();

// ImageIO.write(combinedImage,"png",new File("E:\\images1\\combinedImage"+i+".png"));

// Convert the merged BufferedImage back to a Frame and record it to the output video

recorder.record(Java2DFrameUtils.toFrame(combinedImage));

i++;

}

if (frame1.samples != null) {

recorder.recordSamples(frame1.sampleRate, frame1.audioChannels, frame1.samples);

}

}

// Release resources

grabber1.stop();

grabber2.stop();

recorder.stop();

}

}
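The heart of mergeVideo is the step that draws two images next to each other on one wider canvas. That step can be tried on its own with only the JDK, without any video files. The sketch below is an illustration under stated assumptions: the class name `SideBySideSketch` and the helpers `combine` and `solid` are hypothetical names, and the solid-color test images stand in for decoded video frames. Like mergeVideo, it uses the first image's height for the canvas.

```java
import java.awt.Color;
import java.awt.Graphics2D;
import java.awt.image.BufferedImage;

class SideBySideSketch {

    /** Hypothetical helper: draws left and right next to each other on a new canvas. */
    static BufferedImage combine(BufferedImage left, BufferedImage right) {
        // Canvas width is the sum of both widths; height follows the left image.
        BufferedImage canvas = new BufferedImage(
                left.getWidth() + right.getWidth(),
                left.getHeight(),
                BufferedImage.TYPE_3BYTE_BGR);
        Graphics2D g2d = canvas.createGraphics();
        try {
            g2d.drawImage(left, 0, 0, null);                 // left picture at x = 0
            g2d.drawImage(right, left.getWidth(), 0, null);  // right picture starts where left ends
        } finally {
            g2d.dispose();                                   // always release the graphics context
        }
        return canvas;
    }

    /** Hypothetical helper: builds a solid-color image that stands in for a video frame. */
    static BufferedImage solid(int width, int height, Color color) {
        BufferedImage image = new BufferedImage(width, height, BufferedImage.TYPE_3BYTE_BGR);
        Graphics2D g2d = image.createGraphics();
        try {
            g2d.setColor(color);
            g2d.fillRect(0, 0, width, height);
        } finally {
            g2d.dispose();
        }
        return image;
    }

    public static void main(String[] args) {
        BufferedImage merged = combine(solid(4, 3, Color.RED), solid(2, 3, Color.BLUE));
        System.out.println(merged.getWidth() + "x" + merged.getHeight()); // prints "6x3"
    }
}
```

Because the canvas takes its height from the first image, a taller second image is cropped at the bottom and a shorter one leaves a black band beneath it; scaling one input first would be one way to handle mismatched heights.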

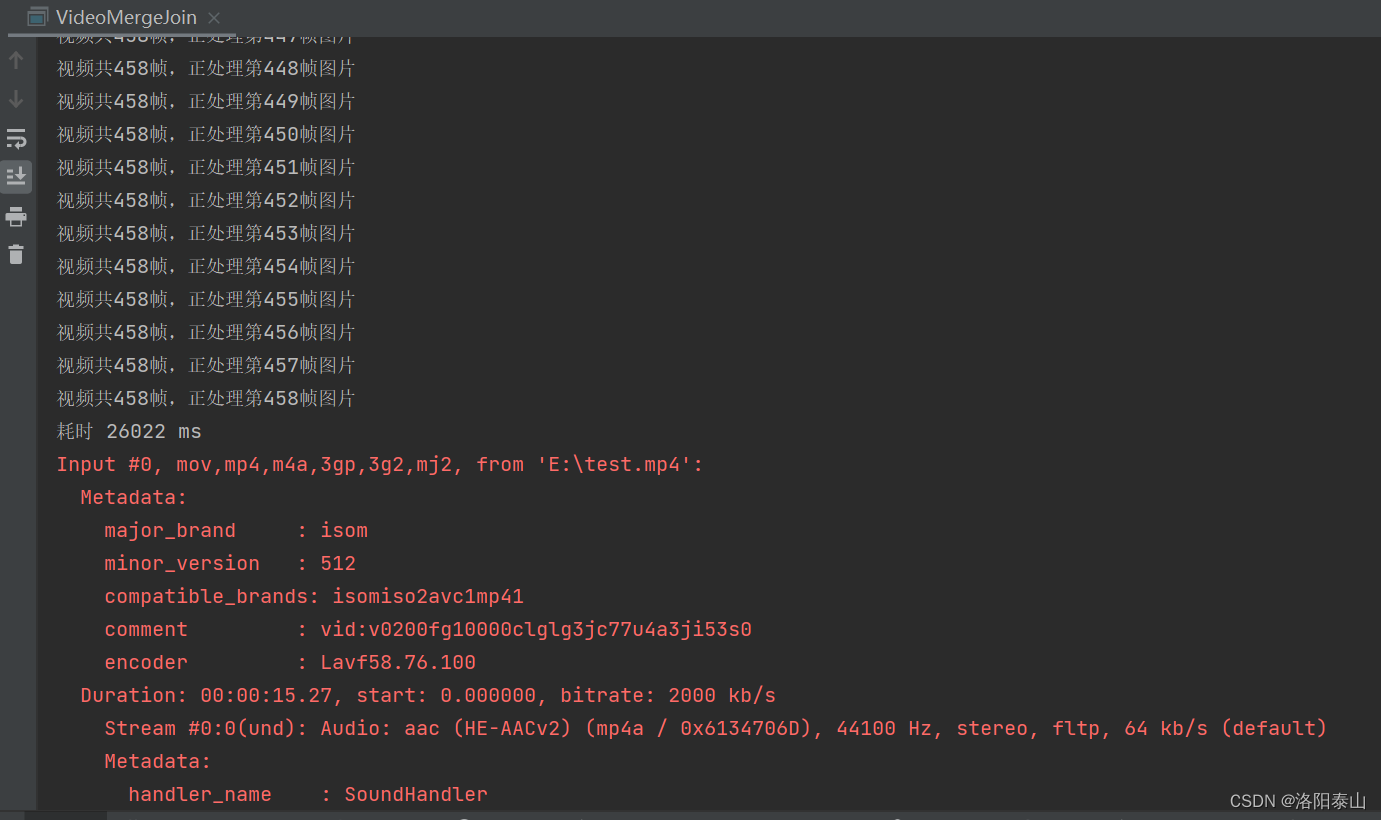

Console output from the run

- Two 15 s, 458-frame videos: merging the pictures took 26 s

- Two 15 s, 458-frame videos: concatenating back to back took 6.6 s
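To put these two timings on a comparable footing, they can be converted to a rough cost per output frame. The sketch below only does the arithmetic on the figures reported above; it assumes each input has 458 video frames, so merging writes 458 combined frames while concatenation writes 2 × 458. The class name `TimingSketch` and the helper `msPerFrame` are hypothetical, and the totals also include start-up and audio handling, so the results are only indicative.

```java
class TimingSketch {

    /** Hypothetical helper: average milliseconds spent per output frame. */
    static double msPerFrame(double totalSeconds, int outputFrames) {
        return totalSeconds * 1000.0 / outputFrames;
    }

    public static void main(String[] args) {
        int framesPerVideo = 458; // from the figures reported above

        // Merging writes one combined frame per pair of input frames.
        double mergeCost = msPerFrame(26.0, framesPerVideo);

        // Concatenation writes every frame of both inputs.
        double joinCost = msPerFrame(6.6, 2 * framesPerVideo);

        System.out.println("merge ms per output frame: " + mergeCost);
        System.out.println("join ms per output frame: " + joinCost);
    }
}
```

This works out to roughly 57 ms per merged frame versus roughly 7 ms per concatenated frame. One plausible explanation, not measured here, is that merging converts every frame to a BufferedImage, draws it, and converts it back, whereas concatenation passes grabbed frames straight to the recorder.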

Source: https://blog.csdn.net/weixin_40986713/article/details/135056989

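This article was submitted by an internet user. The views expressed are the author's alone and do not represent the position of this site. This site provides information storage services only, claims no ownership, and assumes no related legal liability. If any content infringes rights, violates laws or regulations, or is factually inaccurate, please send a complaint to this site (我的编程经验分享网) at chenni525@qq.com; once verified, the content will be removed immediately.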
