Putting Open-Source Models into Practice: Trying Out the Qwen Model, Beginner Series (Part 4)

Published: January 19, 2024

1. Introduction

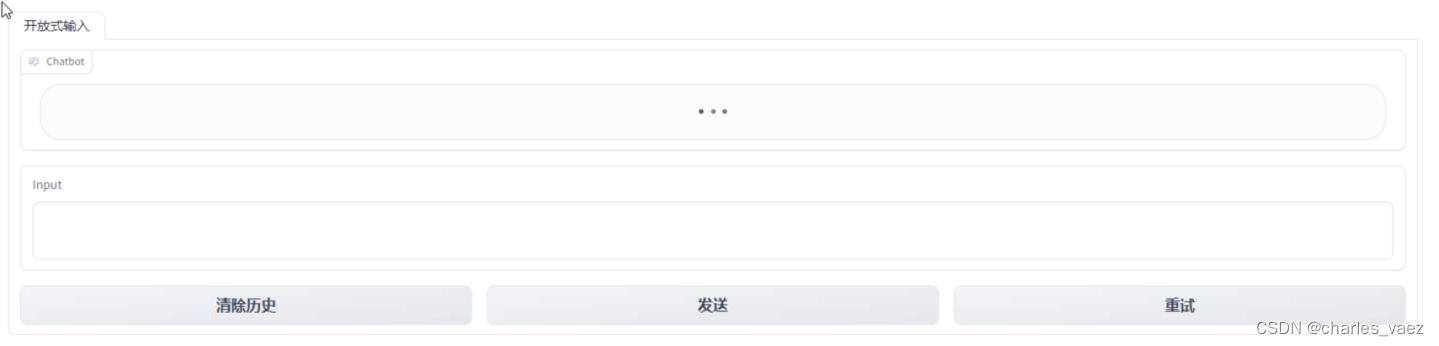

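Having worked through the previous three articles, you should now be comfortable with the basics of using the Qwen model. This time we use Gradio to put a clean interface in front of Qwen, so that people can chat with the model interactively.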

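2. Terminology

2.1. Gradio

Gradio is a Python library that makes it easy to build user interfaces for machine learning models. It offers a simple but powerful way to interact with a model through a graphical interface. With Gradio, a model can be deployed quickly as a web application (or run locally) without writing complex front-end code. It supports several input types, including text, images and live webcam input, so users can easily chat with a model or test it.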
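As a minimal illustration of that idea, separate from the Qwen app later in this article, the sketch below wraps an ordinary Python function in `gr.Interface`. The function name `shout` and the text-in/text-out setup are illustrative choices that do not come from the original article. Gradio is imported lazily inside `build_demo`, so the plain function can be used without Gradio installed.

```python
def shout(text: str) -> str:
    """Plain Python function; Gradio only needs a callable like this one."""
    return text.strip().upper() + "!"


def build_demo():
    # Imported here so that shout() can be used and tested without Gradio installed
    import gradio as gr

    # The "text" shortcuts create a Textbox for input and a Textbox for output
    return gr.Interface(fn=shout, inputs="text", outputs="text")
```

Calling `build_demo().launch()` starts a local web server, which listens on port 7860 by default. The generated page has an input box, a submit button and an output box, and nothing was written in HTML or JavaScript.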

3. Prerequisites

3.1. Windows operating system

3.2. Download the Qwen-1_8B-Chat model

Download from Hugging Face: https://huggingface.co/Qwen/Qwen-1_8B-Chat/tree/main

Download from ModelScope: https://modelscope.cn/models/qwen/Qwen-1_8B-Chat/files
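If you prefer to script the download, one option is the `huggingface_hub` package. This is an assumption: the article itself only links to the web pages. `snapshot_download` fetches every file in the repository into `local_dir`. The helper `looks_like_model_dir` is a hypothetical sanity check that the folder contains a `config.json` and at least one weight file before `from_pretrained` is pointed at it.

```python
from pathlib import Path


def download_qwen(local_dir: str) -> str:
    """Download Qwen/Qwen-1_8B-Chat from Hugging Face into local_dir (needs network access)."""
    from huggingface_hub import snapshot_download  # pip install huggingface_hub

    return snapshot_download(repo_id="Qwen/Qwen-1_8B-Chat", local_dir=local_dir)


def looks_like_model_dir(path: str) -> bool:
    """Hypothetical check: a config.json plus at least one *.safetensors or *.bin weight file."""
    p = Path(path)
    if not (p / "config.json").is_file():
        return False
    weights = list(p.glob("*.safetensors")) + list(p.glob("*.bin"))
    return len(weights) > 0
```

For example, `download_qwen(r"E:\model\qwen-1_8b-chat")` would place the files at the Windows path that the script in section 4 expects. `looks_like_model_dir` on the same path then gives a quick check before loading the model.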

4. Implementation

```python
# -*- coding: utf-8 -*-
import os
import sys
import logging
import warnings

import gradio as gr
import torch
import mdtex2html
from transformers import GenerationConfig, AutoTokenizer, AutoModelForCausalLM

warnings.filterwarnings("ignore")

logging.basicConfig(
    level=logging.INFO,
    format='%(asctime)s [%(levelname)s]: %(message)s',  # log line format
    datefmt='%Y-%m-%d %H:%M:%S'  # date/time format
)

root_path = os.path.dirname(os.path.dirname(os.path.abspath(__file__)))
sys.path.append(root_path)
logging.info(f'root_path: {root_path}')

# Local directory containing the downloaded Qwen-1_8B-Chat files
model_name = "qwen-1_8b-chat"
if sys.platform == "linux":
    model_path = f"/data/model/{model_name}"
else:
    model_path = f"E:\\model\\{model_name}"

# System prompt sent with every request
system = 'You are a helpful AI assistant, willing to solve all kinds of problems people raise.'


def loadTokenizer(modelPath):
    tokenizer = AutoTokenizer.from_pretrained(modelPath, trust_remote_code=True)
    return tokenizer


def loadModel(modelPath, config):
    if torch.cuda.is_available():
        # GPU: device_map="auto" places the weights; fp16=True is an option of Qwen's remote code
        model = AutoModelForCausalLM.from_pretrained(modelPath, device_map="auto", trust_remote_code=True, fp16=True).eval()
    else:
        model = AutoModelForCausalLM.from_pretrained(modelPath, device_map="cpu", trust_remote_code=True).eval()
    model.generation_config = config
    return model


def predict(_query, _chatbot, _task_history):
    global model, tokenizer
    _chatbot.append((_parse_text(_query), ""))
    full_response = ""
    # chat_stream yields the whole answer generated so far, not just the new tokens
    for response in model.chat_stream(tokenizer=tokenizer, query=_query, history=_task_history, system=system):
        _chatbot[-1] = (_parse_text(_query), _parse_text(response))
        yield _chatbot  # each yield refreshes the Chatbot component
        full_response = response
    # Keep the raw text in the history fed back to the model; the HTML version is for display only
    _task_history.append((_query, full_response))


def postprocess(self, y):
    # Render Markdown/LaTeX in chat messages as HTML (monkey-patches the Gradio 3.x Chatbot)
    if y is None:
        return []
    for i, (message, response) in enumerate(y):
        y[i] = (
            None if message is None else mdtex2html.convert(message),
            None if response is None else mdtex2html.convert(response),
        )
    return y


gr.Chatbot.postprocess = postprocess


def _parse_text(text):
    # Turn ``` fences into <pre><code> blocks and escape special characters inside code as HTML entities
    lines = text.split("\n")
    lines = [line for line in lines if line != ""]
    count = 0
    for i, line in enumerate(lines):
        if "```" in line:
            count += 1
            items = line.split("`")
            if count % 2 == 1:
                lines[i] = f'<pre><code class="language-{items[-1]}">'
            else:
                lines[i] = f"<br></code></pre>"
        else:
            if i > 0:
                if count % 2 == 1:
                    line = line.replace("`", r"\`")
                    line = line.replace("<", "&lt;")
                    line = line.replace(">", "&gt;")
                    line = line.replace(" ", "&nbsp;")
                    line = line.replace("*", "&ast;")
                    line = line.replace("_", "&lowbar;")
                    line = line.replace("-", "&#45;")
                    line = line.replace(".", "&#46;")
                    line = line.replace("!", "&#33;")
                    line = line.replace("(", "&#40;")
                    line = line.replace(")", "&#41;")
                    line = line.replace("$", "&#36;")
                lines[i] = "<br>" + line
    text = "".join(lines)
    return text


def _gc():
    import gc
    gc.collect()
    if torch.cuda.is_available():
        torch.cuda.empty_cache()


def regenerate(_chatbot, _task_history):
    # Drop the last turn and ask the same question again
    if not _task_history:
        yield _chatbot
        return
    item = _task_history.pop(-1)
    _chatbot.pop(-1)
    yield from predict(_query=item[0], _chatbot=_chatbot, _task_history=_task_history)


def reset_user_input():
    return gr.update(value="")


def reset_state(_chatbot, _task_history):
    _task_history.clear()
    _chatbot.clear()
    _gc()
    return _chatbot


with gr.Blocks(css=".app.svelte-1kyws56.svelte-1kyws56 {width: 50%}") as interface:
    # Tab page
    with gr.Tab("Open input"):
        chatbot = gr.Chatbot()
        query = gr.Textbox(lines=2, label='Input')
        task_history = gr.State([])
        with gr.Row():
            empty_btn = gr.Button("Clear history")
            submit_btn = gr.Button("Send")
            regen_btn = gr.Button("Retry")
        # Chat event handlers
        submit_btn.click(predict, [query, chatbot, task_history], [chatbot], show_progress=True)
        submit_btn.click(reset_user_input, [], [query])
        empty_btn.click(reset_state, [chatbot, task_history], outputs=[chatbot], show_progress=True)
        regen_btn.click(regenerate, [chatbot, task_history], [chatbot], show_progress=True)

if __name__ == "__main__":
    config = GenerationConfig.from_pretrained(model_path, trust_remote_code=True, top_p=0.9,
                                              temperature=0.45, repetition_penalty=1.1, do_sample=True,
                                              max_new_tokens=8192)
    tokenizer = loadTokenizer(model_path)
    model = loadModel(model_path, config)
    # queue(concurrency_count=...) and launch(width=...) are Gradio 3.x options (concurrency_count was removed in 4.x)
    app, local_url, share_url = interface.queue(concurrency_count=5).launch(debug=True, share=False, show_api=False,
                                                                           inbrowser=False, server_name='0.0.0.0',
                                                                           auth=("zhangsan", '123456'), width='70%')
```
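The `GenerationConfig` values in the `__main__` block (`repetition_penalty=1.1`, `temperature=0.45`, `top_p=0.9`) are easier to grasp on a toy example. The pure-Python sketch below shows what they do to a single decoding step. It follows the common formulation rather than Qwen's or transformers' exact code:

- A CTRL-style repetition penalty divides positive logits of already-seen tokens and multiplies negative ones.
- Temperature scaling is applied next.
- Nucleus (top-p) filtering comes last.

The five-token vocabulary and its logits are invented for illustration.

```python
import math


def apply_repetition_penalty(logits, seen_ids, penalty):
    # Tokens already generated become less likely: positive logits are divided
    # by the penalty, negative logits are multiplied by it
    out = list(logits)
    for i in set(seen_ids):
        out[i] = out[i] / penalty if out[i] > 0 else out[i] * penalty
    return out


def softmax_with_temperature(logits, temperature):
    # temperature < 1 sharpens the distribution, temperature > 1 flattens it
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    # Keep the smallest set of most likely tokens whose cumulative probability
    # reaches top_p, renormalise them, and give every other token probability 0
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return [probs[i] / mass if i in kept else 0.0 for i in range(len(probs))]


logits = [2.0, 1.5, 0.5, -1.0, -2.0]  # toy vocabulary of 5 tokens
logits = apply_repetition_penalty(logits, seen_ids=[0], penalty=1.1)
probs = softmax_with_temperature(logits, temperature=0.45)
probs = top_p_filter(probs, top_p=0.9)
print([round(p, 3) for p in probs])
```

With a low temperature such as 0.45, most of the probability mass lands on the top two tokens. The 0.9 nucleus then discards the remaining three entirely, so sampling stays varied but rarely picks unlikely tokens.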

Launch:

5. Testing

5.1. Access

Open in a browser: http://127.0.0.1:7860

5.2. Interaction
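The streaming reply in the chat window works because `predict` is a generator. `chat_stream` yields the full answer produced so far, and every `yield _chatbot` makes Gradio redraw the Chatbot. The sketch below reproduces that pattern without a model. `fake_chat_stream` is a hypothetical stand-in for Qwen's `chat_stream`, and the HTML escaping done by `_parse_text` is left out.

```python
from typing import Iterator, List, Tuple

History = List[Tuple[str, str]]


def fake_chat_stream(query: str, history: History) -> Iterator[str]:
    # Hypothetical stand-in for model.chat_stream: yields the growing answer,
    # not only the newly generated piece
    answer = f"echo: {query}"
    for end in range(1, len(answer) + 1):
        yield answer[:end]


def predict(query: str, chatbot: History, task_history: History) -> Iterator[History]:
    chatbot.append((query, ""))  # placeholder row shown immediately
    full_response = ""
    for response in fake_chat_stream(query, task_history):
        chatbot[-1] = (query, response)  # overwrite the last row with the longer text
        yield chatbot  # in Gradio, every yield triggers a re-render
        full_response = response
    task_history.append((query, full_response))  # history is updated once, at the end


chatbot, history = [], []
frames = [list(c) for c in predict("hi", chatbot, history)]
print(len(frames), chatbot[-1], history)
```

Because the history is appended only after the loop finishes, an interrupted stream leaves `task_history` unchanged. Meanwhile, the UI has already shown the partial answer.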

5.3. Clear history

5.4. Retry
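Two functions sit behind the buttons. For Retry, `regenerate` removes the last turn from both the display list and the model history, then replays that question through `predict`. For Clear history, `reset_state` empties both lists. The model-free sketch below shows only that bookkeeping and is non-streaming. `ask` is a simplified, hypothetical version of `predict`, and `numbered_answer` is a hypothetical model stand-in that numbers each call so a retry is visible.

```python
import itertools
from typing import Callable, List, Tuple

History = List[Tuple[str, str]]
_attempt = itertools.count(1)


def numbered_answer(query: str) -> str:
    # Hypothetical model stand-in: numbers each call so that a retry is visible
    return f"answer {next(_attempt)} to {query}"


def ask(query: str, chatbot: History, task_history: History,
        answer_fn: Callable[[str], str]) -> History:
    # Non-streaming simplification of predict(): one UI row plus one history turn
    answer = answer_fn(query)
    chatbot.append((query, answer))
    task_history.append((query, answer))
    return chatbot


def regenerate(chatbot: History, task_history: History,
               answer_fn: Callable[[str], str]) -> History:
    if not task_history:  # nothing to retry yet
        return chatbot
    query, _ = task_history.pop(-1)  # forget the last answer in the model history
    chatbot.pop(-1)  # and remove its row from the chat window
    return ask(query, chatbot, task_history, answer_fn)


def reset_state(chatbot: History, task_history: History) -> History:
    task_history.clear()
    chatbot.clear()
    return chatbot


chatbot, history = [], []
ask("hello", chatbot, history, numbered_answer)
regenerate(chatbot, history, numbered_answer)
print(chatbot)
```

Popping from both lists matters. If only the Chatbot row were removed, the old answer would still be sent to the model as context, and the retry would not be a clean second attempt.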

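6. Additional notes

6.1. Login account

The login credentials are specified in the code: `auth=("zhangsan", '123456')`

To apply a custom validation rule, pass a validation function instead. For example: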

```python
# Login succeeds when the username and password are identical
# (the function must be defined before launch() references it)
def same_auth(username, password):
    return username == password


app, local_url, share_url = interface.queue().launch(debug=True, share=False, show_api=False, inbrowser=False,
                                                     server_name='0.0.0.0', auth=same_auth,
                                                     auth_message="username and password must be the same")
```
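Since `auth` accepts any callable that takes `(username, password)` and returns a bool, the check can be stricter than `same_auth`. The sketch below assumes a small in-memory user table; `USERS` and `table_auth` are hypothetical names, and real credentials would normally come from a config file or environment variables. It uses `hmac.compare_digest` so that the comparison time does not depend on how many leading characters match.

```python
import hmac

# Hypothetical user table; do not hard-code real credentials like this
USERS = {"zhangsan": "123456", "lisi": "s3cret"}


def table_auth(username: str, password: str) -> bool:
    expected = USERS.get(username)
    if expected is None:
        return False
    # Constant-time comparison of the supplied and stored passwords
    return hmac.compare_digest(password.encode("utf-8"), expected.encode("utf-8"))
```

It is passed the same way as `same_auth`: `launch(..., auth=table_auth)`.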

Source: https://blog.csdn.net/qq839019311/article/details/135672748

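This article was submitted by an internet user. Its views are the author's own and do not represent this site's position. This site only provides information storage space, does not own the copyright, and bears no related legal liability. If the content infringes rights, is illegal, or is factually incorrect, please email 我的编程经验分享网 (My Programming Experience Sharing site) at chenni525@qq.com to report it. It will be removed promptly once verified.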

