ML Design Patterns: Checkpoints

In the ML context

Introduction: Machine learning (ML) algorithms are at the core of intelligent systems that make decisions and predictions based on data. Developing efficient and accurate ML models requires a solid understanding of design patterns and techniques. Checkpoints provide a mechanism for saving and loading model training progress and serve as a powerful tool for experimentation, optimization, and debugging.

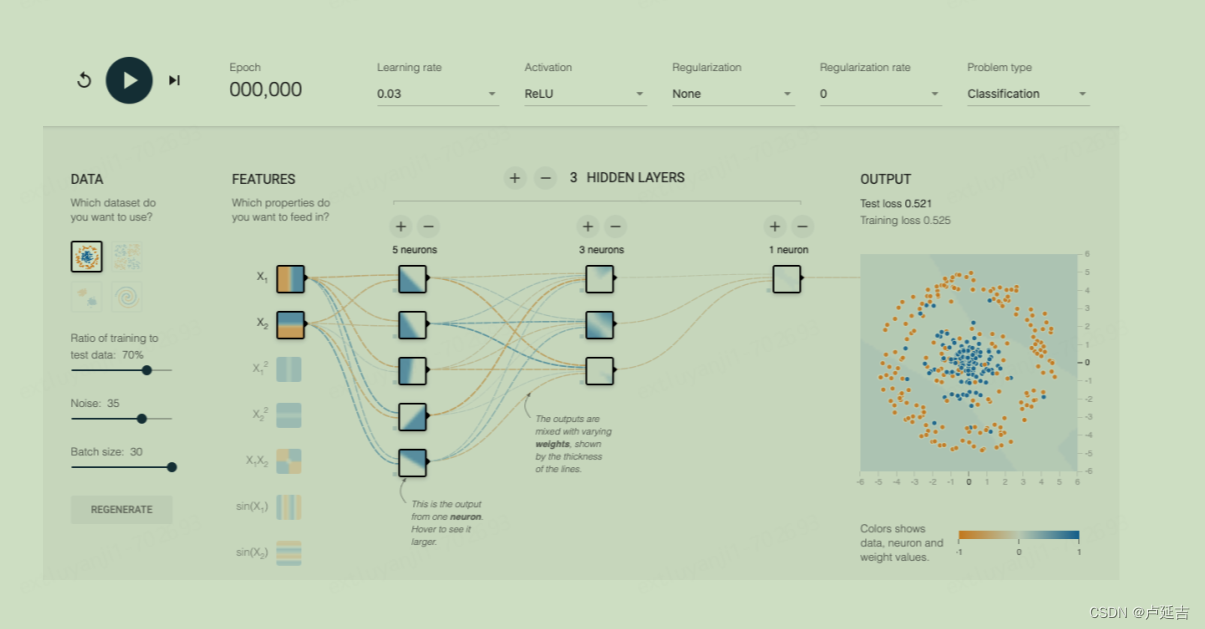

Understanding Design Patterns in ML: Design patterns provide reusable solutions to common problems that software developers encounter. In the context of ML, they are general strategies and principles that guide the development and deployment of ML systems. ML design patterns are still a developing field, but they already matter for building scalable, maintainable, and efficient ML solutions.

The Role of Checkpoints in ML: Checkpoints are an essential component of ML design patterns. They allow developers to save and load the state of a model during the training process. Checkpoints enable various functionalities, such as resuming training from a saved state, performing model evaluations at different stages, fine-tuning models, and facilitating distributed training. Checkpoints are especially critical when training deep learning models, which can be computationally expensive and time-consuming.
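As a rough illustration of what "saving and loading the state of a model" can mean, the sketch below stores a toy model's parameters, optimizer state, and step counter in one file and reads them back. The function names `save_checkpoint` and `load_checkpoint`, the dictionary layout, and the use of `pickle` are assumptions made for this example; real frameworks ship their own checkpoint APIs and formats.

```python
import pickle
import tempfile
from pathlib import Path


def save_checkpoint(path, step, params, optimizer_state):
    """Write everything needed to resume training into a single file."""
    state = {"step": step, "params": params, "optimizer_state": optimizer_state}
    with open(path, "wb") as f:
        pickle.dump(state, f)


def load_checkpoint(path):
    """Read back the dictionary written by save_checkpoint."""
    with open(path, "rb") as f:
        return pickle.load(f)


# Toy "model": two weights, with a learning rate standing in for optimizer state.
ckpt_path = Path(tempfile.mkdtemp()) / "step_100.pkl"
save_checkpoint(ckpt_path, step=100, params={"w": 0.5, "b": -1.0},
                optimizer_state={"lr": 0.01})
restored = load_checkpoint(ckpt_path)
```

Saving the step counter and optimizer state alongside the weights is what makes resuming possible; weights alone are usually enough only for inference.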

Training Workflow with Checkpoints: To use checkpoints effectively, it is crucial to establish a well-defined training workflow. This includes:

- a. Model Initialization: Defining the architecture and initializing the model parameters.

- b. Data Loading: Preparing and loading the training data.

- c. Training Loop: Iterating over the data to update the model parameters.

- d. Validation and Monitoring: Interleaving validation steps to monitor model performance and stopping early if required.

- e. Checkpointing: Saving the model state at regular intervals or based on a predefined condition.

- f. Restoring from Checkpoints: Loading the model state to resume training or for inference purposes.
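The workflow above can be sketched with a deliberately tiny example: fitting a single weight by gradient descent, saving the state every few steps, and resuming from the saved file after a simulated interruption. The `train` function, the JSON file layout, and the toy data are all assumptions made for illustration, and the validation step (d) is left out to keep the sketch short.

```python
import json
import os
import tempfile


def train(ckpt_path, total_steps, save_every=10, lr=0.05):
    """Fit w in y = w * x by gradient descent, checkpointing as it goes."""
    # (b) Data loading: toy data generated with a true weight of 3.
    data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]

    # (f) Restore from a checkpoint if one exists, otherwise (a) initialize.
    if os.path.exists(ckpt_path):
        with open(ckpt_path) as f:
            state = json.load(f)
    else:
        state = {"step": 0, "w": 0.0}

    # (c) Training loop, continuing from the restored step.
    while state["step"] < total_steps:
        grad = sum(2 * (state["w"] * x - y) * x for x, y in data) / len(data)
        state["w"] -= lr * grad
        state["step"] += 1

        # (e) Checkpoint at a regular interval.
        if state["step"] % save_every == 0:
            with open(ckpt_path, "w") as f:
                json.dump(state, f)
    return state


workdir = tempfile.mkdtemp()
full = train(os.path.join(workdir, "a.json"), total_steps=30)

# Simulate an interruption at step 20, then resume and run to step 30.
path_b = os.path.join(workdir, "b.json")
train(path_b, total_steps=20)
resumed = train(path_b, total_steps=30)
```

In this sketch the resumed run ends with the same weight as the uninterrupted one, because the checkpoint captures everything the loop depends on (the weight and the step counter). A real training job would also need to save optimizer state, random number generator state, and the position in the data stream to get the same guarantee.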

Selecting the Right Checkpoint Strategy: Choosing the appropriate checkpoint strategy directly impacts the efficiency and performance of ML algorithms. Considerations include:

- a. Frequency: Deciding on how often to save checkpoint files based on computational resources and the need for regular backups.

- b. Storage Format: Selecting an appropriate format for saving checkpoints, such as HDF5 or TensorFlow’s SavedModel, that suits the requirements of the ML framework being used.

- c. Metadata Tracking: Including additional metadata (e.g., training parameters, evaluation metrics, hyperparameters) in the checkpoint files to track model evolution.

- d. Retention Policy: Establishing criteria for retaining and managing checkpoints to optimize storage usage.
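Two of these considerations, metadata tracking and retention, lend themselves to a short sketch. The example below writes each checkpoint together with its validation loss and hyperparameters, then prunes the directory so that only the most recent files and the best-scoring one remain. The helper names `save_with_metadata` and `prune`, the file naming scheme, and the "keep last two plus best" rule are assumptions chosen for illustration, not a standard policy.

```python
import json
import tempfile
from pathlib import Path


def save_with_metadata(ckpt_dir, step, params, val_loss, hparams):
    """Store weights together with the metadata needed to interpret them."""
    record = {"step": step, "params": params,
              "metadata": {"val_loss": val_loss, "hparams": hparams}}
    path = Path(ckpt_dir) / f"ckpt_{step:06d}.json"
    path.write_text(json.dumps(record))
    return path


def prune(ckpt_dir, keep_last=2):
    """Retention policy: keep the newest `keep_last` files plus the best one."""
    # Step numbers are zero-padded, so sorting by name sorts by step.
    paths = sorted(Path(ckpt_dir).glob("ckpt_*.json"))
    if not paths:
        return []
    losses = {p: json.loads(p.read_text())["metadata"]["val_loss"] for p in paths}
    best = min(paths, key=losses.get)
    keep = set(paths[-keep_last:]) | {best}
    for p in paths:
        if p not in keep:
            p.unlink()
    return sorted(p.name for p in keep)


ckpt_dir = tempfile.mkdtemp()
for step, loss in [(100, 0.9), (200, 0.4), (300, 0.6), (400, 0.5)]:
    save_with_metadata(ckpt_dir, step, {"w": 1.0}, loss, {"lr": 0.01})
kept = prune(ckpt_dir)
```

Here the checkpoint from step 100 is deleted, while step 200 survives because it has the lowest validation loss even though it is not among the two newest.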

Best Practices for Checkpoints: Here are some best practices to consider when working with checkpoints:

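- a. Version Control: Versioning checkpoints together with the code and configuration that produced them to ensure reproducibility and easy collaboration. Because checkpoints are large binary files, this usually calls for a tool built for large artifacts rather than a plain source repository.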

- b. Checkpoint Size: Balancing checkpoint file sizes to minimize storage requirements while enabling efficient retrieval.

- c. System Failure Handling: Implementing mechanisms to handle unexpected system failures during training or inference, such as checkpoint backups and error handling.

- d. Distributed Training: Coordinating checkpoints across workers in distributed training, for example by having one worker write the checkpoint and every worker restore from it, so that all workers resume from the same model state.
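For the failure-handling point, one common precaution is to make sure a crash in the middle of a save cannot corrupt the last good checkpoint. The sketch below writes to a temporary file first and only then renames it over the target. The function name `atomic_save` and the JSON format are assumptions for this example; the rename via `os.replace` is atomic on POSIX systems when both paths are on the same filesystem.

```python
import json
import os
import tempfile


def atomic_save(state, path):
    """Write to a temporary file, then rename it over the target."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(state, f)
            f.flush()
            os.fsync(f.fileno())  # push the bytes to disk before renaming
        os.replace(tmp_path, path)  # swaps in the new file in one step
    except BaseException:
        # The save failed: discard the partial file, keep the old checkpoint.
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


ckpt = os.path.join(tempfile.mkdtemp(), "latest.json")
atomic_save({"step": 1, "w": 0.1}, ckpt)
try:
    atomic_save({"step": 2, "w": object()}, ckpt)  # not JSON-serializable
except TypeError:
    pass
with open(ckpt) as f:
    survivor = json.load(f)
```

The second save fails partway through, yet the file on disk still holds the state from the first save, which is the property that lets training restart from a valid checkpoint after a crash.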

Conclusion: Checkpoints serve as a powerful tool for ML algorithm development, allowing for efficient model training, evaluation, optimization, and debugging. By following best practices and incorporating checkpoints into your ML workflows, you can enhance the efficiency and effectiveness of your machine learning algorithms.

In the software context

In software development, a checkpoint is a specific moment or stage in the development process where progress is reviewed and evaluated. It serves as a “pause” point to assess the current state of the project, validate its completeness, and determine if it aligns with the defined goals and requirements.

The purpose of checkpoints is to ensure that the project is on track, identify any issues or risks, and make necessary adjustments or corrections. They give developers, project managers, and stakeholders a clear understanding of the project’s progress and its readiness to move forward.

Checkpoints can be defined at various development stages, depending on the specific project and its requirements. Some common checkpoints in software development include:

- Requirement Checkpoint: The initial stage where project requirements are defined and validated. It ensures that all necessary features and functionalities are identified and understood.

- Design Checkpoint: Evaluating the system architecture, database design, software interfaces, and other design elements to ensure they meet the project requirements and standards.

- Development Checkpoint: Assessing the progress made in coding and implementation. It ensures the functionality is being developed as intended and that coding standards are being followed.

- Testing Checkpoint: Reviewing the testing strategy, test cases, and test coverage to ensure sufficient testing is performed on the software. This checkpoint helps identify any issues or bugs that need to be addressed before deployment.

- Integration Checkpoint: Verifying the successful integration of different modules or components of the software to ensure they work together seamlessly.

- Deployment Checkpoint: Assessing the readiness and stability of the software for deployment to production environments, ensuring proper documentation and release procedures are followed.
