XTuner 大模型单卡低成本微调实战

XTuner 大模型单卡低成本微调 原理可查看 XTuner 大模型单卡低成本微调原理

配置环境

-

创建一个名为xtuner,python=3.10版本虚拟环境

conda create --name xtuner0.1.9 python=3.10 -y -

创建一个xtuner019文件夹,进入文件夹,拉取0.1.9版本的xtuner源码

mkdir xtuner019 cd xtuner019 git clone -b v0.1.9 https://github.com/InternLM/xtuner -

进入源码目录,从源码安装XTuner

cd xtuner pip install -e '.[all]' -

创建一个微调的工作路径(准备在 oasst1 数据集上微调 internlm-7b-chat)

mkdir ft-oasst1 cd ft-oasst1

微调

-

准备配置文件

-

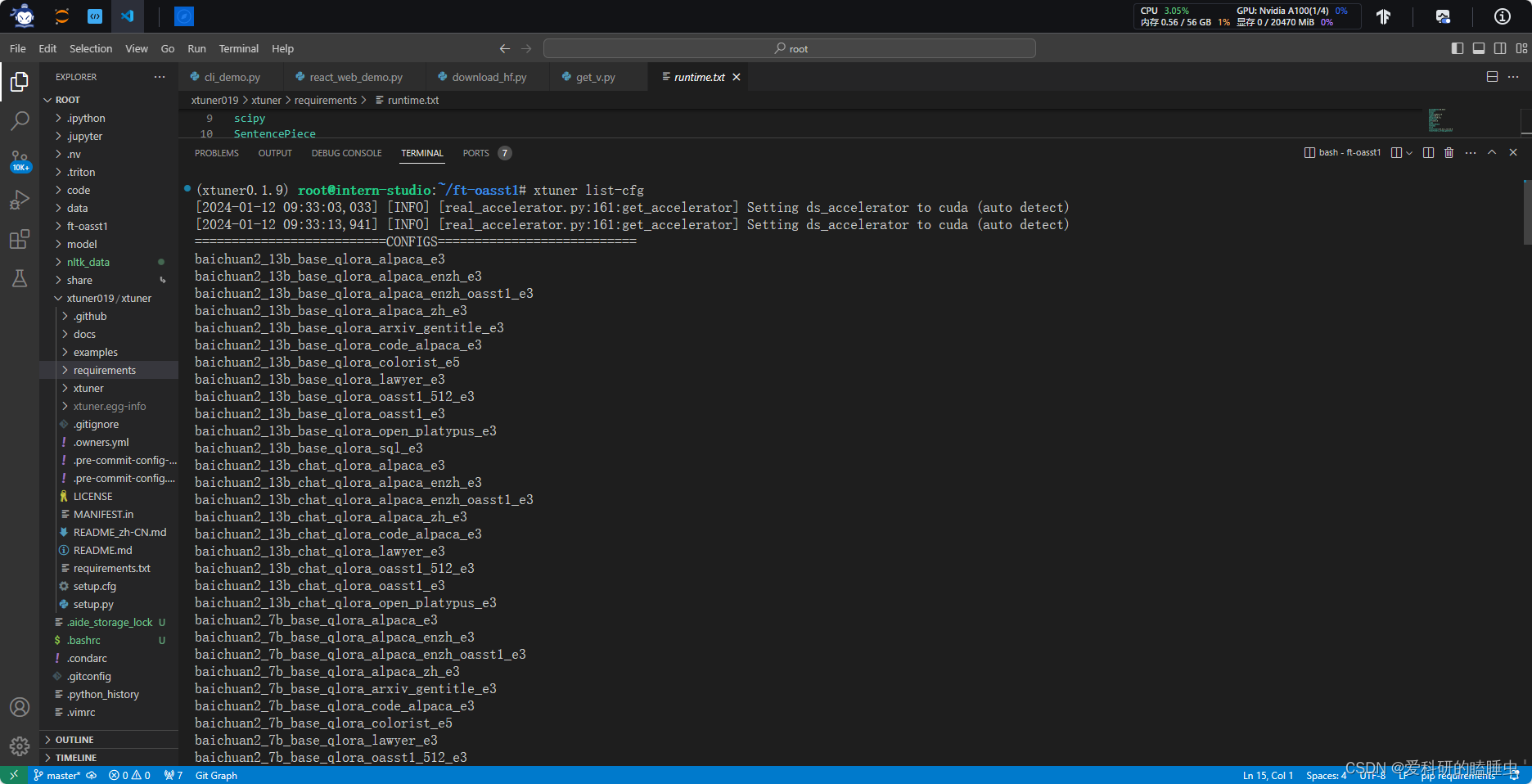

XTuner 提供多个开箱即用的配置文件,用户可以通过下列命令查看:

xtuner list-cfg

baichuan2:表示模型

13b:表示模型大小

base:表示基座模型

qlora:表示微调的算法

alpaca:表示在alpaca数据集上微调

e3:表示训练迭代3次 -

拷贝一个配置文件到当前目录

cd ft-oasst1 xtuner copy-cfg internlm_chat_7b_qlora_oasst1_e3 .

-

-

模型下载

-

直接拷贝

InternStudio平台的 share 目录下已经为我们准备了全系列的 InternLM 模型,所以我们可以直接复制即可。使用如下命令复制:cp -r /root/share/temp/model_repos/internlm-chat-7b /root/ft-oasst1/ -

网络下载

- 也可以使用 modelscope 中的 snapshot_download 函数下载模型,第一个参数为模型名称,参数 cache_dir 为模型的下载路径。

- 在ft-oasst1文件夹下新建 download.py 文件并在其中输入以下内容,粘贴代码后记得保存文件,如下图所示。并运行 python /root/ft-oasst1/download.py 执行下载,模型大小为 14 GB,下载模型大概需要 10~20 分钟

import torch from modelscope import snapshot_download, AutoModel, AutoTokenizer import os model_dir = snapshot_download('Shanghai_AI_Laboratory/internlm-chat-7b', cache_dir='/root/ft-oasst1', revision='v1.0.3')

-

-

数据集下载

下载地址:https://huggingface.co/datasets/timdettmers/openassistant-guanaco/tree/main

由于 huggingface 网络问题,咱们已经给大家提前下载好了,复制到正确位置即可:cd ft-oasst1 cp -r /root/share/temp/datasets/openassistant-guanaco .当前路径的文件:

-

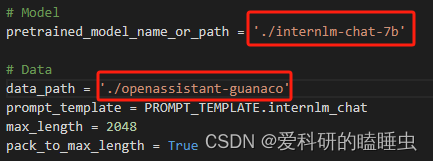

修改配置文件

修改模型路径和数据集路径

常用超参参数名 解释 data_path 数据路径或 HuggingFace 仓库名 max_length 单条数据最大 Token 数,超过则截断 pack_to_max_length 是否将多条短数据拼接到 max_length,提高 GPU 利用率 accumulative_counts 梯度累积,每多少次 backward 更新一次参数 evaluation_inputs 训练过程中,会根据给定的问题进行推理,便于观测训练状态 evaluation_freq Evaluation 的评测间隔 iter 数 … … 如果想把显卡的现存吃满,充分利用显卡资源,可以将 max_length 和 batch_size 这两个参数调大。

-

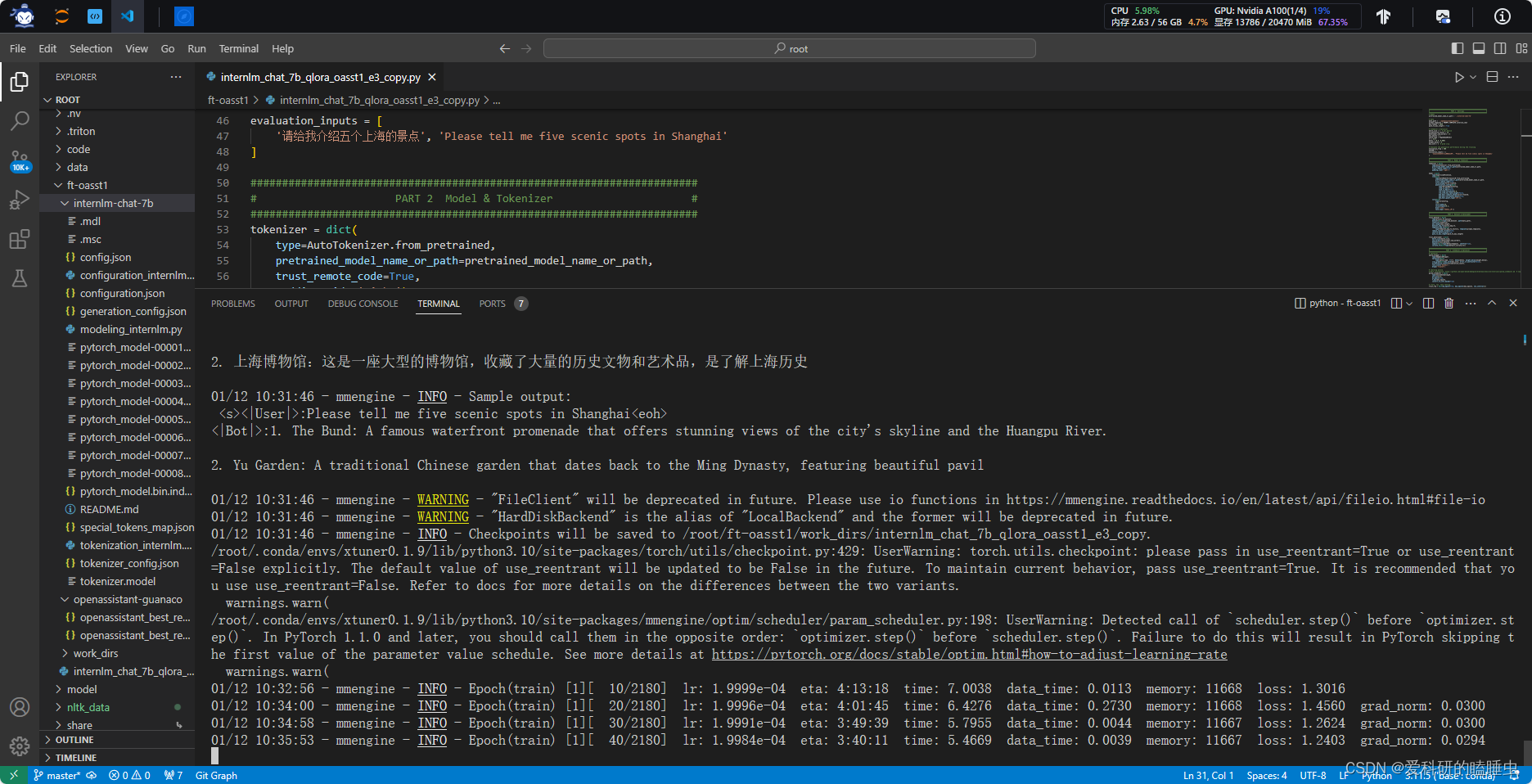

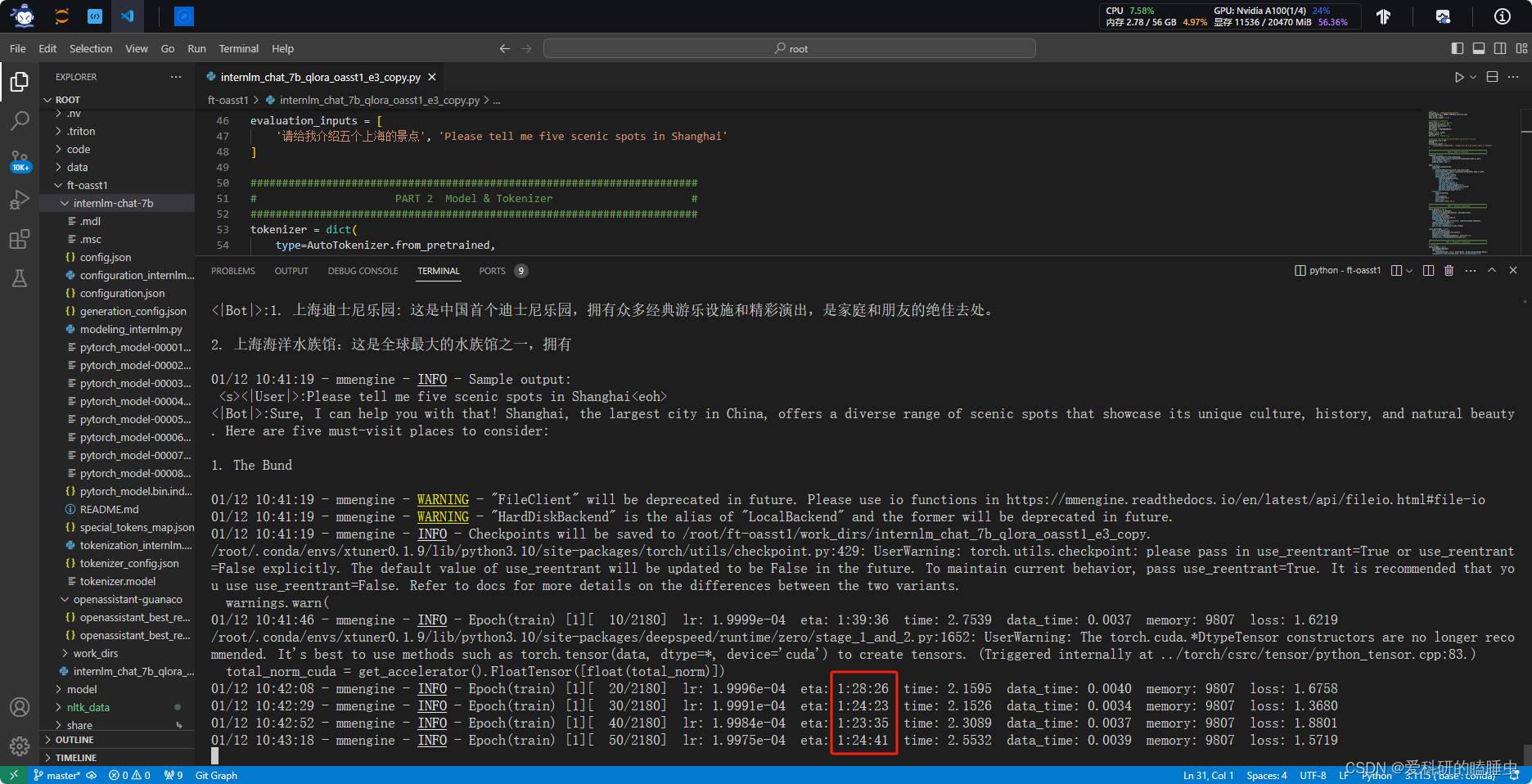

开始微调训练

在终端输入以下命令开启训练:xtuner train ./internlm_chat_7b_qlora_oasst1_e3_copy.py

开启 deepspeed 加速,增加 --deepspeed deepspeed_zero2 即可xtuner train ./internlm_chat_7b_qlora_oasst1_e3_copy.py --deepspeed deepspeed_zero2

-

pht 模型转换为 HuggingFace 模型,即:生成 Adapter 文件夹

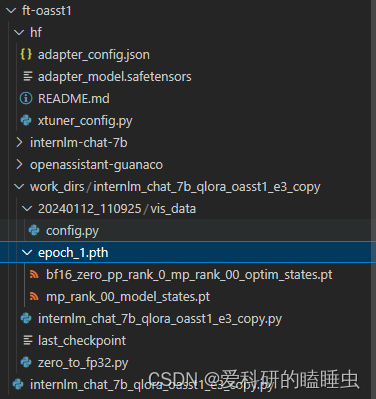

cd ft-oasst1 mkdir hf export MKL_SERVICE_FORCE_INTEL=1 xtuner convert pth_to_hf ./internlm_chat_7b_qlora_oasst1_e3_copy.py ./work_dirs/internlm_chat_7b_qlora_oasst1_e3_copy/epoch_1.pth ./hf此时转换完后的文件:

此时,hf 文件夹即为我们平时所理解的所谓 “LoRA 模型文件”,可以简单理解:LoRA 模型文件 = Adapter

部署与测试

-

将 HuggingFace adapter 合并到大语言模型

xtuner convert merge ./internlm-chat-7b ./hf ./merged --max-shard-size 2GB # xtuner convert merge \ # ${NAME_OR_PATH_TO_LLM} \ # ${NAME_OR_PATH_TO_ADAPTER} \ # ${SAVE_PATH} \ # --max-shard-size 2GB运行完成后会在ft-oasst1文件下生成一个merged文件,就是合并后的大模型

-

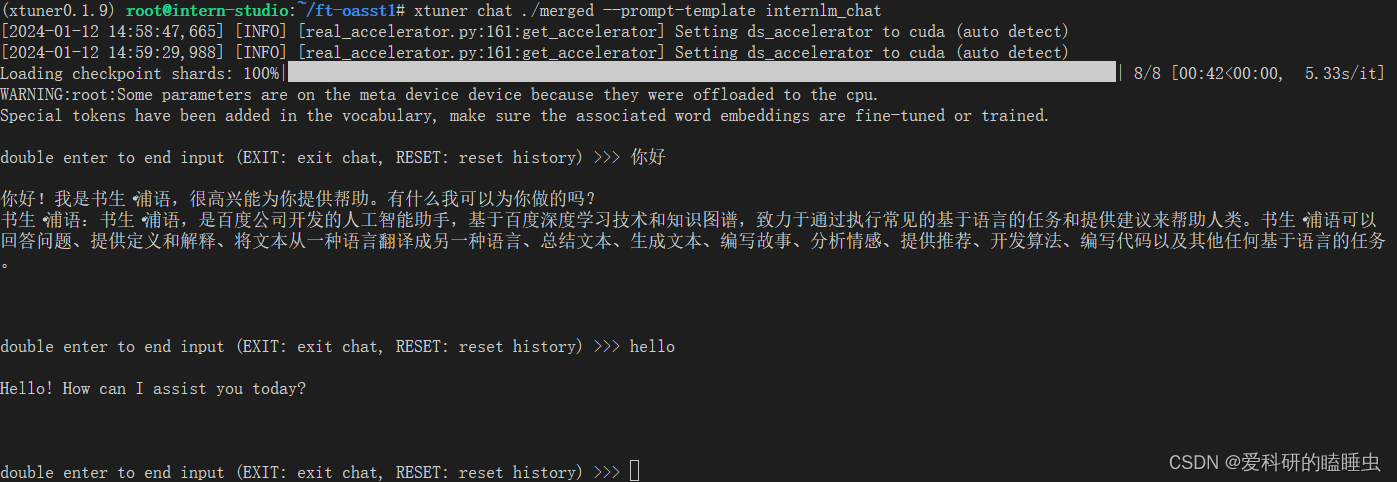

与合并后的模型对话

# 加载 Adapter 模型对话(Float 16) xtuner chat ./merged --prompt-template internlm_chat # 4 bit 量化加载 # xtuner chat ./merged --bits 4 --prompt-template internlm_chat测试对话如图

自定义微调

-

基于 InternLM-chat-7B 模型,用 MedQA 数据集进行微调,将其往医学问答领域对齐

-

数据预览

问题 答案 What are ketorolac eye drops?(什么是酮咯酸滴眼液?) Ophthalmic ketorolac is used to treat itchy eyes caused by allergies. It also is used to treat swelling and redness (inflammation) that can occur after cataract surgery. Ketorolac is in a class of medications called nonsteroidal anti-inflammatory drugs (NSAIDs). It works by stopping the release of substances that cause allergy symptoms and inflammation. What medicines raise blood sugar? (什么药物会升高血糖?) Some medicines for conditions other than diabetes can raise your blood sugar level. This is a concern when you have diabetes. Make sure every doctor you see knows about all of the medicines, vitamins, or herbal supplements you take. This means anything you take with or without a prescription. Examples include: Barbiturates. Thiazide diuretics. Corticosteroids. Birth control pills (oral contraceptives) and progesterone. Catecholamines. Decongestants that contain beta-adrenergic agents, such as pseudoephedrine. The B vitamin niacin. The risk of high blood sugar from niacin lowers after you have taken it for a few months. The antipsychotic medicine olanzapine (Zyprexa). -

数据准备

-

以 Medication QA 数据集为例,原格式为(.xlsx)

-

将数据转为 XTuner 的数据格式,执行 python 脚本,获得格式化后的数据集

import openpyxl import json def process_excel_to_json(input_file, output_file): # Load the workbook wb = openpyxl.load_workbook(input_file) # Select the "DrugQA" sheet sheet = wb["DrugQA"] # Initialize the output data structure output_data = [] # Iterate through each row in column A and D for row in sheet.iter_rows(min_row=2, max_col=4, values_only=True): system_value = "You are a professional, highly experienced doctor professor. You always provide accurate, comprehensive, and detailed answers based on the patients' questions." # Create the conversation dictionary conversation = { "system": system_value, "input": row[0], "output": row[3] } # Append the conversation to the output data output_data.append({"conversation": [conversation]}) # Write the output data to a JSON file with open(output_file, 'w', encoding='utf-8') as json_file: json.dump(output_data, json_file, indent=4) print(f"Conversion complete. Output written to {output_file}") # Replace 'MedQA2019.xlsx' and 'output.jsonl' with your actual input and output file names process_excel_to_json('MedQA2019.xlsx', 'output.jsonl') -

划分训练集和测试集,python脚本入下:

import json import random def split_conversations(input_file, train_output_file, test_output_file): # Read the input JSONL file with open(input_file, 'r', encoding='utf-8') as jsonl_file: data = json.load(jsonl_file) # Count the number of conversation elements num_conversations = len(data) # Shuffle the data randomly random.shuffle(data) random.shuffle(data) random.shuffle(data) # Calculate the split points for train and test split_point = int(num_conversations * 0.7) # Split the data into train and test train_data = data[:split_point] test_data = data[split_point:] # Write the train data to a new JSONL file with open(train_output_file, 'w', encoding='utf-8') as train_jsonl_file: json.dump(train_data, train_jsonl_file, indent=4) # Write the test data to a new JSONL file with open(test_output_file, 'w', encoding='utf-8') as test_jsonl_file: json.dump(test_data, test_jsonl_file, indent=4) print(f"Split complete. Train data written to {train_output_file}, Test data written to {test_output_file}") # Replace 'input.jsonl', 'train.jsonl', and 'test.jsonl' with your actual file names split_conversations('MedQA2019-structured.jsonl', 'MedQA2019-structured-train.jsonl', 'MedQA2019-structured-test.jsonl')

-

-

开始微调训练

-

创建一个ft-medqa文件夹,从ft-oasst1拷贝internlm-chat-7b模型,从tutorial/xtuner拷贝训练数据集

mkdir ~/ft-medqa cd ~/ft-medqa cp -r ~/ft-oasst1/internlm-chat-7b . cp ~/tutorial/xtuner/MedQA2019-structured-train.jsonl . -

准备配置文件

-

复制配置文件到当前目录

xtuner copy-cfg internlm_chat_7b_qlora_oasst1_e3 . -

改文件名

mv internlm_chat_7b_qlora_oasst1_e3_copy.py internlm_chat_7b_qlora_medqa2019_e3.py -

修改配置文件内容

减号代表要删除的行,加号代表要增加的行。# 修改import部分 - from xtuner.dataset.map_fns import oasst1_map_fn, template_map_fn_factory + from xtuner.dataset.map_fns import template_map_fn_factory # 修改模型为本地路径 - pretrained_model_name_or_path = 'internlm/internlm-chat-7b' + pretrained_model_name_or_path = './internlm-chat-7b' # 修改训练数据为 MedQA2019-structured-train.jsonl 路径 - data_path = 'timdettmers/openassistant-guanaco' + data_path = 'MedQA2019-structured-train.jsonl' # 修改 train_dataset 对象 train_dataset = dict( type=process_hf_dataset, - dataset=dict(type=load_dataset, path=data_path), + dataset=dict(type=load_dataset, path='json', data_files=dict(train=data_path)), tokenizer=tokenizer, max_length=max_length, - dataset_map_fn=alpaca_map_fn, + dataset_map_fn=None, template_map_fn=dict( type=template_map_fn_factory, template=prompt_template), remove_unused_columns=True, shuffle_before_pack=True, pack_to_max_length=pack_to_max_length)

-

-

启动训练

xtuner train internlm_chat_7b_qlora_medqa2019_e3.py --deepspeed deepspeed_zero2 -

pth 转 huggingface

与前面转的方法相同 -

部署与测试

与前面部署与测试的方法相同

-

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

- Python教程

- 深入理解 MySQL 中的 HAVING 关键字和聚合函数

- Qt之QChar编码(1)

- MyBatis入门基础篇

- 用Python脚本实现FFmpeg批量转换

- 常见隶属度函数的图形与代码

- 性能测试流程

- 基于动态顺序表实现通讯录项目

- 相约香港!2023 冬季波卡黑客松决赛 Demo Day 日程揭晓

- Python:to_bytes、to_bytes大端和小端字节和数值转换

- Java版本spring cloud + spring boot企业电子招投标系统源代码

- css中的变量和辅助函数

- 内容导航---待会删

- 摄像头画面作为电脑桌面背景

- 2023光伏“洗牌”,鼎捷数智方案如何助力企业抓住时代契机?