Kubernetes in Practice (15): Vertical Pod Autoscaler (VPA) Hands-On

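1 Introduction

VPA stands for Vertical Pod Autoscaler. It automatically sets the CPU and memory requests of containers based on their observed resource usage, so that the scheduler can place each Pod on a node that has enough resources for it.

It can scale down containers that over-request resources, and it can scale up containers that are under-provisioned, following their actual usage over time.

VPA primarily adjusts the Pod's resource requests. When a container also defines limits, VPA by default scales each limit proportionally so that the original limit-to-request ratio is preserved; set controlledValues: RequestsOnly in the container resource policy if the limits must stay unchanged.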

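Why use VPA:

- Pods request only what they need, which improves node utilization across the cluster;

- There is no need to run benchmark jobs to find suitable CPU and memory request values;

- VPA adjusts CPU and memory requests continuously without manual intervention, which reduces maintenance time.

VPA is not yet production-ready. Before using it, make sure you understand how resource adjustments affect your application.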

2 How VPA works

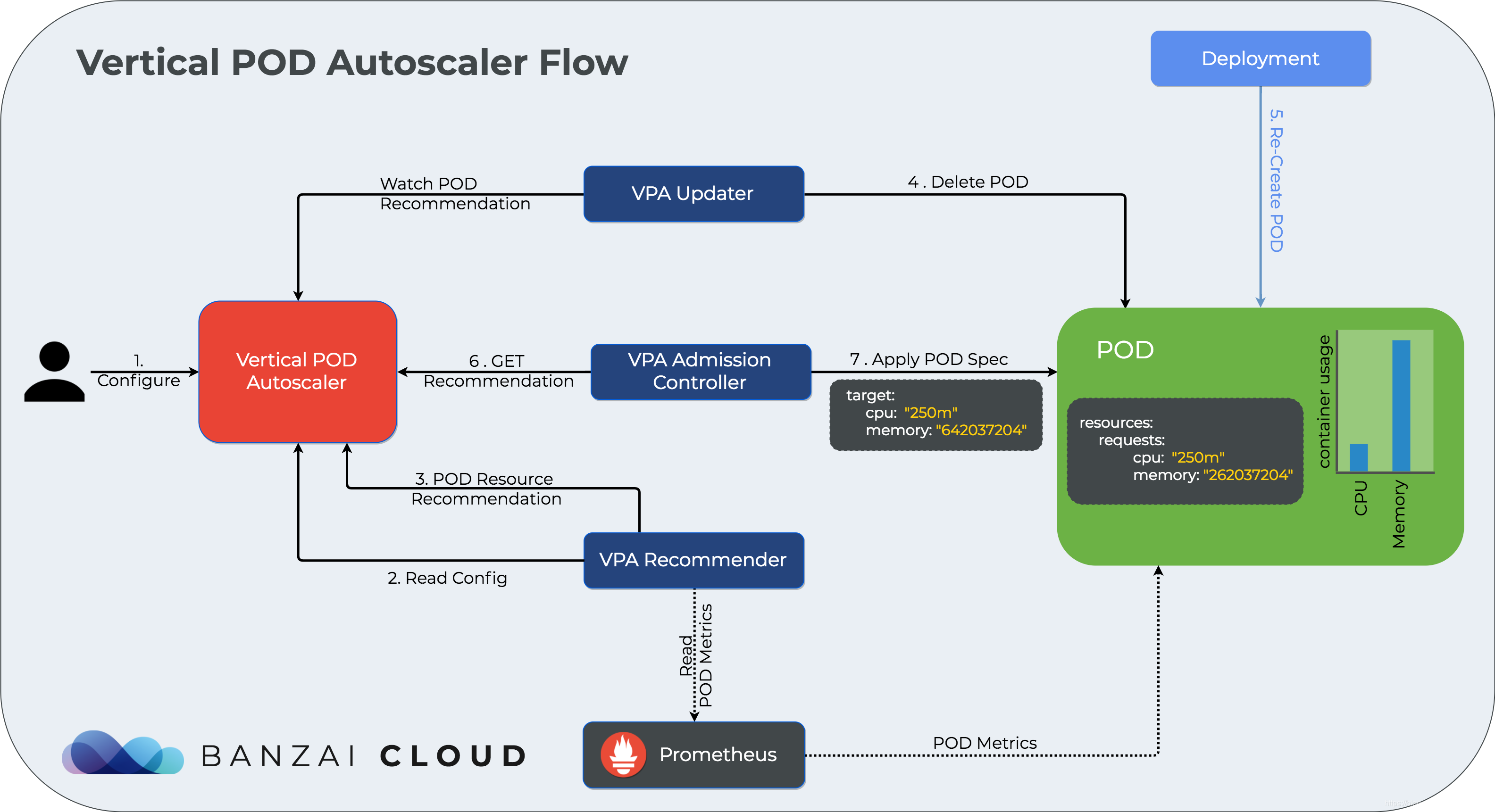

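2.1 Workflow

First, the Recommender estimates the upcoming resource usage from the application's current and historical usage. If the computed value differs from the current setting, it issues a resource adjustment recommendation.

Then the Updater acts on these recommendations as follows:

- 1) The Updater sees from the recommendation that an adjustment is needed and calls the API to evict the Pod.

- 2) The evicted Pod is recreated. During recreation, the VPA Admission Controller intercepts the request and sets the Pod's resource requests according to the recommendation.

- 3) The recreated Pod therefore comes up with the recommended resource requests.

As this flow shows, changing resource requests requires recreating the Pod. That is a disruptive operation, which is why VPA is not yet production-ready.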
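To make the workflow concrete, here is a minimal sketch of a VPA object that only asks the Recommender for advice. It assumes VPA is already installed in the cluster. The Deployment name nginx, the object name nginx-vpa, and the file name are placeholders chosen for illustration and do not come from this article. With updateMode set to "Off" the Updater evicts nothing, which is a low-risk way to inspect recommendations before allowing the disruptive recreate step.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Placeholder file name; "nginx" and "nginx-vpa" below are placeholders too.
manifest="vpa-nginx.yaml"

cat > "$manifest" <<'EOF'
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: nginx-vpa
  namespace: default
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx
  updatePolicy:
    # "Off":  the Recommender publishes recommendations, nothing is evicted.
    # "Auto": the Updater may evict Pods so that the admission controller
    #         can apply the recommended requests when they are recreated.
    updateMode: "Off"
EOF

echo "wrote $manifest"
```

Against a cluster with VPA installed, the manifest could then be applied with kubectl apply -f vpa-nginx.yaml and the recommendation inspected with kubectl describe vpa nginx-vpa.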

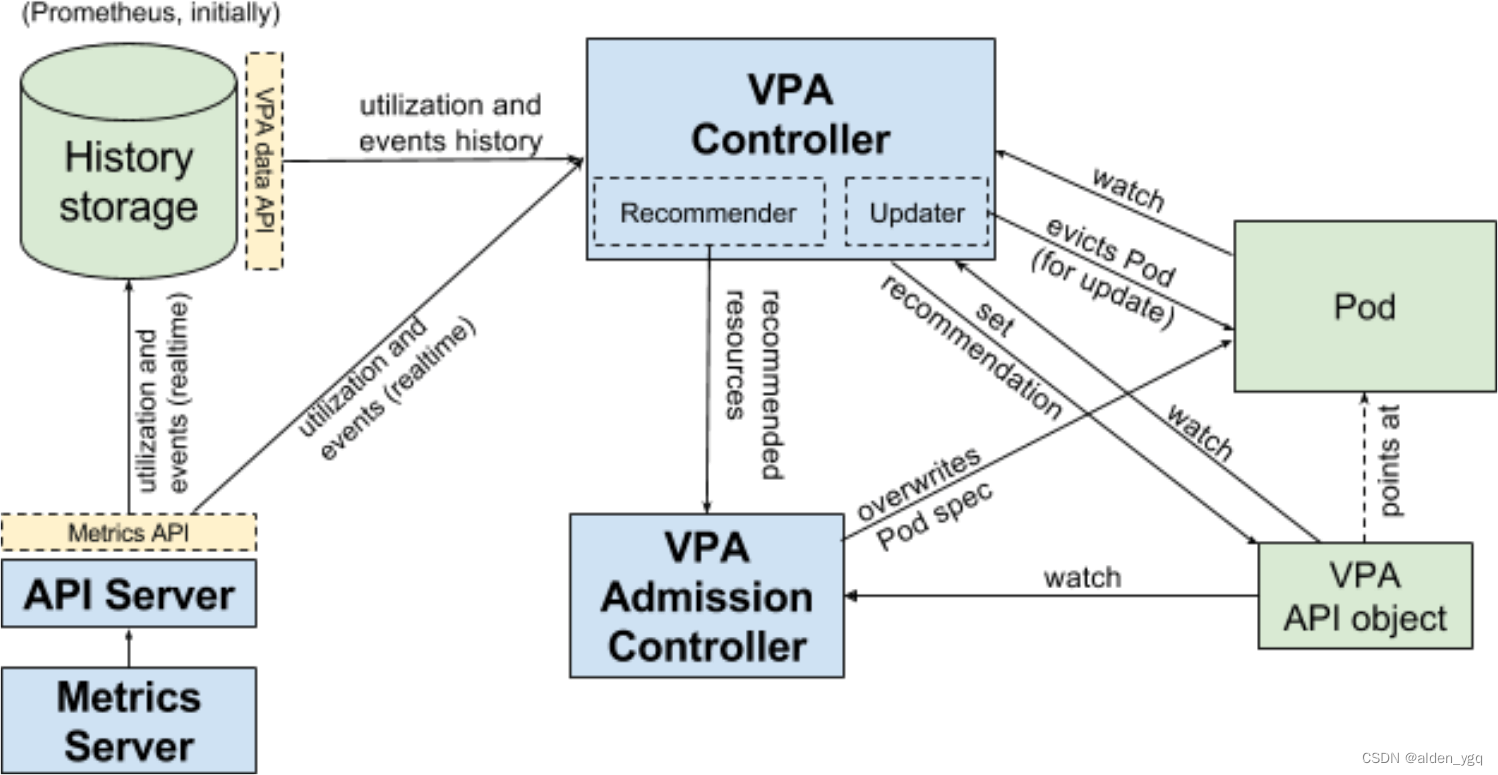

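2.2 VPA architecture

VPA consists of two main parts:

- 1) VPA Controller

  - Recommender: produces resource adjustment recommendations for Pods

  - Updater: compares the recommended values with the current ones and evicts the Pod when they differ

- 2) VPA Admission Controller

  - Rewrites the Pod's resource requests to the recommended values when the Pod is recreated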

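2.3 Recommender design

The recommendation model (MVP) assumes that memory and CPU utilization are independent random variables whose distribution equals the one observed over the past N days (N=8 is suggested so that weekly peaks are captured).

- For CPU, the objective is to keep the fraction of time in which container usage exceeds a high percentage (e.g. 95%) of the request below a threshold (e.g. 1% of the time).

  - In this model, CPU usage is defined as the average usage measured over a short interval. The shorter the interval, the better the recommendations for spiky, latency-sensitive workloads.

  - The minimum reasonable resolution is 1/min; 1/sec is recommended.

- For memory, the objective is to keep the probability that container usage exceeds the request within a given time window below a threshold (e.g. below 1% in 24 hours).

  - The window must be long (≥ 24 hours) so that evictions caused by OOM do not visibly affect (a) the availability of serving applications or (b) the progress of batch computations (a more advanced model could let users specify an SLO to control this).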
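The CPU objective can be illustrated with a small calculation. The following is only a sketch of the stated goal (usage above 95% of the request in at most 1% of the samples), not the Recommender's actual implementation, which is more involved. The function names and the sample data are made up for illustration.

```shell
#!/usr/bin/env bash
set -euo pipefail

# percentile P: reads one number per line on stdin and prints the
# nearest-rank P-th percentile (P is an integer between 1 and 100).
percentile() {
  sort -n | awk -v p="$1" '
    { v[NR] = $1 }
    END {
      if (NR == 0) exit 1
      rank = int((p * NR + 99) / 100)   # ceil(p * NR / 100)
      if (rank < 1) rank = 1
      print v[rank]
    }'
}

# cpu_request: reads CPU usage samples (millicores) on stdin and prints the
# smallest integer request R such that usage exceeds 0.95 * R in at most 1%
# of the samples, i.e. R = ceil(P99(usage) / 0.95).
cpu_request() {
  local p99
  p99=$(percentile 99)
  awk -v u="$p99" 'BEGIN { r = u / 0.95; c = int(r); if (c < r) c++; print c }'
}

# Hypothetical data: 100 samples of 1..100 millicores.
seq 1 100 | cpu_request   # prints 105
```

With these samples the 99th percentile is 99m, so the request becomes 105m: only the single 100m sample exceeds 95% of it (99.75m), which is exactly 1% of the samples.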

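2.4 Pros and cons of VPA

2.4.1 Advantages

- Pods request only what they need, so cluster nodes are used efficiently.

- Pods are scheduled onto nodes that have suitable resources available.

- There is no need to run benchmark jobs to find suitable CPU and memory request values.

- VPA adjusts CPU and memory requests continuously without manual intervention, which reduces maintenance time.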

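2.4.2 Disadvantages

- VPA is still immature. Updating the resource configuration of running Pods is an experimental VPA feature; it causes Pods to be recreated and restarted, possibly on a different node.

- VPA does not evict Pods that are not managed by a controller. At present VPA also cannot run alongside a Horizontal Pod Autoscaler (HPA) that scales on CPU or memory metrics, unless the HPA only uses custom or external metrics.

- VPA uses an admission webhook as its admission controller. If the cluster has other admission webhooks, make sure they do not conflict with VPA. The execution order of admission controllers is defined by an API server configuration flag.

- VPA reacts to most OOM events, but it is not guaranteed to work in every scenario.

- VPA performance has not yet been tested on large clusters.

- The requests that VPA sets may exceed what is actually available, for example node capacity, free resources, or resource quota, which leaves the Pod Pending and unschedulable.

  - Running the Cluster Autoscaler alongside VPA mitigates this to some extent.

- Multiple VPA objects matching the same Pod result in undefined behavior.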
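One way to reduce the risk of requests growing beyond what a node or quota can satisfy is to bound the recommendations with a resource policy. The sketch below assumes a Deployment named nginx (a placeholder) and uses arbitrary example bounds; suitable values depend on your nodes and quotas.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Placeholder names and bounds, chosen only for illustration.
cat > vpa-bounded.yaml <<'EOF'
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: nginx-vpa-bounded
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx
  updatePolicy:
    updateMode: "Auto"
  resourcePolicy:
    containerPolicies:
      - containerName: "*"          # apply to every container in the Pod
        controlledResources: ["cpu", "memory"]
        minAllowed:                 # never recommend less than this
          cpu: 100m
          memory: 128Mi
        maxAllowed:                 # never recommend more than this
          cpu: "1"
          memory: 1Gi
EOF

echo "wrote vpa-bounded.yaml"
```

Keeping maxAllowed below the allocatable capacity of the smallest node is a simple rule of thumb to keep recreated Pods schedulable.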

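2.4.3 Limitations

- VPA cannot be used together with HPA (Horizontal Pod Autoscaler) on the same CPU or memory metrics.

- Only Pods managed by a controller can be targeted, for example Pods that belong to a Deployment or StatefulSet.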

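2.5 In-Place Update of Pod Resources

VPA currently has to recreate a Pod in order to change its resources.requests, which is a significant limitation, since frequent Pod recreation can hurt service stability.

The community proposed the In-Place Update of Pod Resources feature back in 2019; see #1287 for the latest progress. According to that issue, at the time of writing the Alpha version could land in Kubernetes v1.26 at the earliest.

Once available, this feature will be a major improvement for VPA, because disruptively recreating Pods always carries some risk.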

3 Testing and verifying VPA

3.1 Deploy metrics-server

3.1.1 Download the manifest

$ wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.7/components.yaml

3.1.2 Modify components.yaml

$ cat components.yaml
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:aggregated-metrics-reader
  labels:
    rbac.authorization.k8s.io/aggregate-to-view: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
rules:
- apiGroups: ["metrics.k8s.io"]
  resources: ["pods", "nodes"]
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  name: v1beta1.metrics.k8s.io
spec:
  service:
    name: metrics-server
    namespace: kube-system
  group: metrics.k8s.io
  version: v1beta1
  insecureSkipTLSVerify: true
  groupPriorityMinimum: 100
  versionPriority: 100
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: metrics-server
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: metrics-server
      volumes:
      # mount in tmp so we can safely use from-scratch images and/or read-only containers
      - name: tmp-dir
        emptyDir: {}
      containers:
      - name: metrics-server
        image: registry.aliyuncs.com/google_containers/metrics-server:v0.3.7
        imagePullPolicy: IfNotPresent
        args:
          - --cert-dir=/tmp
          - --secure-port=4443
          - /metrics-server
          - --kubelet-insecure-tls
          - --kubelet-preferred-address-types=InternalIP
        ports:
        - name: main-port
          containerPort: 4443
          protocol: TCP
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - name: tmp-dir
          mountPath: /tmp
      nodeSelector:
        kubernetes.io/os: linux
---
apiVersion: v1
kind: Service
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    kubernetes.io/name: "Metrics-server"
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    k8s-app: metrics-server
  ports:
  - port: 443
    protocol: TCP
    targetPort: main-port
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system

- The image address was changed to a mirror registry: registry.aliyuncs.com/google_containers/metrics-server:v0.3.7

- The metrics-server startup arguments (args) were modified

3.1.3 Deploy

$ kubectl apply -f components.yaml

3.1.4 Verify

$ kubectl get po -n kube-system

NAME                                       READY   STATUS    RESTARTS   AGE
calico-kube-controllers-5d4b78db86-4wpbx   1/1     Running   0          24d
calico-kube-controllers-5d4b78db86-cdcx6   1/1     Running   0          23d
calico-kube-controllers-5d4b78db86-gmvg5   1/1     Running   0          24d
calico-kube-controllers-5d4b78db86-qfmzm   1/1     Running   0          24d
calico-kube-controllers-5d4b78db86-srrxj   1/1     Running   0          24d
calico-node-f5s6w                          1/1     Running   1          24d
calico-node-f6pmk                          1/1     Running   0          24d
calico-node-jk7zc                          1/1     Running   0          24d
calico-node-p2c7d                          1/1     Running   7          24d
calico-node-v8z5x                          1/1     Running   0          24d
coredns-59d64cd4d4-85h7g                   1/1     Running   0          24d
coredns-59d64cd4d4-tll9s                   1/1     Running   0          23d
coredns-59d64cd4d4-zr4hd                   1/1     Running   0          24d
etcd-ops-master-1                          1/1     Running   8          24d
etcd-ops-master-2                          1/1     Running   3          24d
etcd-ops-master-3                          1/1     Running   0          24d
kube-apiserver-ops-master-1                1/1     Running   9          24d
kube-apiserver-ops-master-2                1/1     Running   8          24d
kube-apiserver-ops-master-3                1/1     Running   0          24d
kube-controller-manager-ops-master-1       1/1     Running   9          24d
kube-controller-manager-ops-master-2       1/1     Running   3          24d
kube-controller-manager-ops-master-3       1/1     Running   1          24d
kube-proxy-cxjz8                           1/1     Running   0          24d
kube-proxy-dhjxj                           1/1     Running   8          24d
kube-proxy-rm64j                           1/1     Running   0          24d
kube-proxy-xg6bp                           1/1     Running   0          24d
kube-proxy-zcvzs                           1/1     Running   1          24d
kube-scheduler-ops-master-1                1/1     Running   9          24d
kube-scheduler-ops-master-2                1/1     Running   4          24d
kube-scheduler-ops-master-3                1/1     Running   1          24d
metrics-server-54cc454bdd-ds4zp            1/1     Running   0          34s

$ kubectl top nodes
W0110 18:58:33.909569 14156 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
NAME           CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
ops-master-1   82m          1%     1212Mi          8%
ops-master-2   98m          1%     2974Mi          19%
ops-master-3   106m         1%     2666Mi          17%
ops-worker-1   55m          0%     2014Mi          13%
ops-worker-2   59m          0%     2011Mi          13%

3.2 Deploy vertical-pod-autoscaler

VPA and Kubernetes version compatibility:

| VPA version | Kubernetes version |
|---|---|
| 0.12 | 1.25+ |
| 0.11 | 1.22 - 1.24 |
| 0.10 | 1.22+ |
| 0.9 | 1.16+ |

The cluster used here runs Kubernetes 1.23.6, so according to the compatibility table VPA 0.11 is the matching version.
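The compatibility table can be encoded as a small helper, for example to guard an install script. This is a sketch: the function name vpa_release_for is made up, and for Kubernetes 1.22 to 1.24, where both 0.10 and 0.11 qualify, it simply picks the newer 0.11 line.

```shell
#!/usr/bin/env bash
set -euo pipefail

# vpa_release_for VERSION: prints the VPA release line that the table above
# lists for a Kubernetes version such as "1.23.6" or "v1.25.0".
vpa_release_for() {
  local version="${1#v}"          # drop an optional leading "v"
  local major="${version%%.*}"    # text before the first dot
  local rest="${version#*.}"      # text after the first dot
  local minor="${rest%%.*}"       # minor version number

  if [ "$major" != "1" ]; then
    echo "unknown"; return 1
  fi
  if [ "$minor" -ge 25 ]; then
    echo "0.12"
  elif [ "$minor" -ge 22 ]; then
    echo "0.11"
  elif [ "$minor" -ge 16 ]; then
    echo "0.9"
  else
    echo "unsupported"; return 1
  fi
}

vpa_release_for 1.23.6   # prints 0.11
```

The result can be turned into a branch name for the clone step, for example vpa-release-$(vpa_release_for 1.23.6).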

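3.2.1 Clone the autoscaler project

$ git clone -b vpa-release-0.11 https://github.com/kubernetes/autoscaler.git

3.2.2 Replace the images

Switch the images from the gcr registry to a registry that is reachable from mainland China.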
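The per-file image replacements in this step can also be scripted with sed. The sketch below uses the registry prefixes named in this section and demonstrates the rewrite on a throwaway file; the helper name rewrite_registry is made up. In the cloned repository the three deployment manifests should sit under vertical-pod-autoscaler/deploy/, but verify the paths and the actual image lines on your branch before running it there.

```shell
#!/usr/bin/env bash
set -euo pipefail

# rewrite_registry FILE: replace the upstream registry prefix with the mirror
# prefix in place, keeping a FILE.bak copy. "|" is used as the sed delimiter
# because the prefixes contain "/".
rewrite_registry() {
  sed -i.bak 's|us\.gcr\.io/k8s-artifacts-prod/autoscaling/|scofield/|g' "$1"
}

# Demo on a temporary file that mimics one image line of a manifest.
workdir=$(mktemp -d)
sample="$workdir/recommender-deployment.yaml"
printf '        image: us.gcr.io/k8s-artifacts-prod/autoscaling/vpa-recommender:0.8.0\n' > "$sample"

rewrite_registry "$sample"
cat "$sample"   # the image line now points at scofield/vpa-recommender:0.8.0
```

Because the image name and tag after the prefix are left untouched, the same helper works for all three manifests.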

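In admission-controller-deployment.yaml, change us.gcr.io/k8s-artifacts-prod/autoscaling/vpa-admission-controller:0.8.0 to scofield/vpa-admission-controller:0.8.0

In recommender-deployment.yaml, change us.gcr.io/k8s-artifacts-prod/autoscaling/vpa-recommender:0.8.0 to scofield/vpa-recommender:0.8.0

In updater-deployment.yaml, change us.gcr.io/k8s-artifacts-prod/autoscaling/vpa-updater:0.8.0 to scofield/vpa-updater:0.8.0

Note that the 0.8.0 tags are reproduced as written here; the manifests on the vpa-release-0.11 branch may reference a different registry path and tag, so check the image: line in each file before editing.

3.2.3 Deploy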

$ cd autoscaler/vertical-pod-autoscaler
$ ./hack/vpa-up.sh
customresourcedefinition.apiextensions.k8s.io/verticalpodautoscalers.autoscaling.k8s.io created
customresourcedefinition.apiextensions.k8s.io/verticalpodautoscalercheckpoints.autoscaling.k8s.io created
clusterrole.rbac.authorization.k8s.io/system:metrics-reader created
clusterrole.rbac.authorization.k8s.io/system:vpa-actor created
clusterrole.rbac.authorization.k8s.io/system:vpa-checkpoint-actor created
clusterrole.rbac.authorization.k8s.io/system:evictioner created
clusterrolebinding.rbac.authorization.k8s.io/system:metrics-reader created
clusterrolebinding.rbac.authorization.k8s.io/system:vpa-actor created
clusterrolebinding.rbac.authorization.k8s.io/system:vpa-checkpoint-actor created
clusterrole.rbac.authorization.k8s.io/system:vpa-target-reader created
clusterrolebinding.rbac.authorization.k8s.io/system:vpa-target-reader-binding created
clusterrolebinding.rbac.authorization.k8s.io/system:vpa-evictionter-binding created
serviceaccount/vpa-admission-controller created
clusterrole.rbac.authorization.k8s.io/system:vpa-admission-controller created
clusterrolebinding.rbac.authorization.k8s.io/system:vpa-admission-controller created
clusterrole.rbac.authorization.k8s.io/system:vpa-status-reader created
clusterrolebinding.rbac.authorization.k8s.io/system:vpa-status-reader-binding created
serviceaccount/vpa-updater created
deployment.apps/vpa-updater created
serviceaccount/vpa-recommender created
deployment.apps/vpa-recommender created
Generating certs for the VPA Admission Controller in /tmp/vpa-certs.
Generating RSA private key, 2048 bit long modulus (2 primes)
............................................................................+++++
.+++++
e is 65537 (0x010001)
Generating RSA private key, 2048 bit long modulus (2 primes)
............+++++
...........................................................................+++++
e is 65537 (0x010001)
Signature ok
subject=CN = vpa-webhook.kube-system.svc
Getting CA Private Key
Uploading certs to the cluster.
secret/vpa-tls-certs created
Deleting /tmp/vpa-certs.
deployment.apps/vpa-admission-controller created

service/vpa-webhook created

If the following error appears at this point:

ERROR: Failed to create CA certificate for self-signing. If the error is "unknown option -addext", update your openssl version or deploy VPA from the vpa-release-0.8 branch

upgrade OpenSSL to resolve it. For the upgrade procedure, see the CSDN blog post "OpenSSL升级版本" (Upgrading OpenSSL).

After upgrading OpenSSL, run:

$ vertical-pod-autoscaler/pkg/admission-controller/gencerts.sh

3.2.4 Check the deployment result

As the output shows, metrics-server and the VPA components are all running normally.

$ kubectl get po -n kube-system | grep -E "metrics-server|vpa"

metrics-server-5b58f4df77-f7nks             1/1     Running   0          35d
vpa-admission-controller-7ff888c959-tvtmk   1/1     Running   0          104m
vpa-recommender-74f69c56cb-zmzwg            1/1     Running   0          104m
vpa-updater-79b88f9c55-m4xx5                1/1     Running   0          103m
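For scripted checks, the same verification can be expressed as a small filter over the kubectl output. This is a sketch: the function name all_running is made up, and it assumes the default column layout in which STATUS is the third column and the header row has been removed.

```shell
#!/usr/bin/env bash
set -euo pipefail

# all_running: reads `kubectl get po` rows (without the header) on stdin.
# Succeeds only if there is at least one row and every row has STATUS
# "Running"; names of other Pods are reported on stderr.
all_running() {
  awk '
    BEGIN { n = 0; bad = 0 }
    { n++ }
    $3 != "Running" { bad++; print "not running: " $1 > "/dev/stderr" }
    END { if (n == 0 || bad > 0) exit 1 }
  '
}

# Sample rows copied from the verification output in this section.
all_running <<'EOF' && echo "all pods are Running"
metrics-server-5b58f4df77-f7nks 1/1 Running 0 35d
vpa-admission-controller-7ff888c959-tvtmk 1/1 Running 0 104m
vpa-recommender-74f69c56cb-zmzwg 1/1 Running 0 104m
vpa-updater-79b88f9c55-m4xx5 1/1 Running 0 103m
EOF
```

Against a live cluster, the input would come from kubectl get po -n kube-system --no-headers piped through the same grep -E "metrics-server|vpa" filter.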