Open-Source Observability Platform SigNoz (Part 3): Server Host Monitoring

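Reprint notice: if you like this article and plan to repost it, please message the author for permission first. Thank you for your interest, and please repost responsibly.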

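Previous articles:

Open-Source Observability Platform SigNoz (Part 1): Introduction

Open-Source Observability Platform SigNoz (Part 2): Log Collection

The previous articles covered SigNoz installation, basic configuration, and log collection. This article shows how to add host monitoring to SigNoz.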

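1. Onboarding host monitoring

As with Docker log collection, the host that runs SigNoz collects its own host metrics by default. Any other host has to run an otel-collector client that collects the metrics and uploads them to SigNoz.

1.1 Modify the configuration file

1) Start from the otel-collector-config.yaml used for log collection in Part 2 (Log Collection) and add the host metrics configuration to it.

Add the three blocks marked by the comments below: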

[root@test102 otel]# cat otel-collector-config.yaml

receivers:
  filelog/containers:
    include: [ "/var/lib/docker/containers/*/*.log" ]
    start_at: end
    include_file_path: true
    include_file_name: false
    operators:
      - type: json_parser
        id: parser-docker
        output: extract_metadata_from_filepath
        timestamp:
          parse_from: attributes.time
          layout: '%Y-%m-%dT%H:%M:%S.%LZ'
      - type: regex_parser
        id: extract_metadata_from_filepath
        regex: '^.*containers/(?P<container_id>[^_]+)/.*log$'
        parse_from: attributes["log.file.path"]
        output: parse_body
      - type: move
        id: parse_body
        from: attributes.log
        to: body
        output: add_source
      - type: add
        id: add_source
        field: resource["source"]
        value: "docker"
      - type: remove
        id: time
        field: attributes.time
  filelog:
    include: [ "/var/log/nginx/*.log" ] # path of the nginx logs on this host
    start_at: beginning
    operators:
      - type: json_parser
        timestamp:
          parse_from: attributes.time
          layout: '%Y-%m-%d,%H:%M:%S %z'
      - type: move
        from: attributes.message
        to: body
      - type: remove
        field: attributes.time
  ##### Addition 1: start #####
  hostmetrics:
    collection_interval: 30s
    scrapers:
      cpu: {}
      load: {}
      memory: {}
      disk: {}
      filesystem: {}
      network: {}
  ##### Addition 1: end #####
processors:
  batch:
    send_batch_size: 10000
    send_batch_max_size: 11000
    timeout: 10s
  ##### Addition 2: start #####
  resourcedetection:
    # Using OTEL_RESOURCE_ATTRIBUTES envvar, env detector adds custom labels.
    detectors: [env, system] # include ec2 for AWS, gce for GCP and azure for Azure.
    timeout: 2s
  ##### Addition 2: end #####
exporters:
  otlp/log:
    endpoint: http://10.0.0.101:4317 # 4317 is the default if the port mapping was not changed when deploying SigNoz
    tls:
      insecure: true
service:
  pipelines:
    logs:
      receivers: [filelog/containers,filelog]
      processors: [batch]
      exporters: [ otlp/log ]
    ##### Addition 3: start #####
    metrics: # wires together the components defined above
      receivers: [hostmetrics]
      processors: [batch,resourcedetection]
      exporters: [otlp/log] # the exporter settings are simple, so otlp/log is reused here; for custom settings, add another otlp/xx exporter (names must be unique)
    ##### Addition 3: end #####

[root@test102 otel]#
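When merging blocks like these, it is easy to reference a receiver, processor, or exporter in `service.pipelines` that was never defined at the top level, and the collector refuses to start with such a configuration. The following Python sketch illustrates that consistency check on a plain dictionary that mirrors the structure above. The function name `find_undefined_components` and the trimmed-down `config` dictionary are illustrative assumptions, not part of SigNoz or the collector.

```python
def find_undefined_components(config):
    """Return (pipeline, section, name) for each pipeline reference that has no definition."""
    problems = []
    pipelines = config.get("service", {}).get("pipelines", {})
    for pipeline_name, pipeline in pipelines.items():
        for section in ("receivers", "processors", "exporters"):
            defined = config.get(section) or {}
            for name in pipeline.get(section, []):
                if name not in defined:
                    problems.append((pipeline_name, section, name))
    return problems


# Trimmed-down mirror of the merged configuration (component settings omitted).
config = {
    "receivers": {"filelog/containers": {}, "filelog": {}, "hostmetrics": {}},
    "processors": {"batch": {}, "resourcedetection": {}},
    "exporters": {"otlp/log": {}},
    "service": {
        "pipelines": {
            "logs": {
                "receivers": ["filelog/containers", "filelog"],
                "processors": ["batch"],
                "exporters": ["otlp/log"],
            },
            "metrics": {
                "receivers": ["hostmetrics"],
                "processors": ["batch", "resourcedetection"],
                "exporters": ["otlp/log"],
            },
        }
    },
}

# An empty list means every pipeline entry refers to a defined component.
print(find_undefined_components(config))
```

If the metrics pipeline pointed at `otlp/host` while only `otlp/log` was defined, the function would report that entry, which is the kind of mismatch to look for before restarting the collector.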

If you do not need log collection and only want host monitoring, this simpler configuration is enough:

[root@test102 otel]# cat otel-collector-config.yaml

receivers:
  hostmetrics:
    collection_interval: 30s
    scrapers:
      cpu: {}
      load: {}
      memory: {}
      disk: {}
      filesystem: {}
      network: {}
processors:
  batch:
    send_batch_size: 10000
    send_batch_max_size: 11000
    timeout: 10s
  resourcedetection:
    # Using OTEL_RESOURCE_ATTRIBUTES envvar, env detector adds custom labels.
    detectors: [env, system] # include ec2 for AWS, gce for GCP and azure for Azure.
    timeout: 2s
exporters:
  otlp/host: # can be shared with the log exporter if this file is merged with the log collection configuration
    endpoint: http://10.0.0.101:4317 # 4317 is the default if the port mapping was not changed when deploying SigNoz
    tls:
      insecure: true
service:
  pipelines:
    metrics: # wires together the components defined above
      receivers: [hostmetrics]
      processors: [batch,resourcedetection]
      exporters: [otlp/host]

[root@test102 otel]#

2) Check docker-compose.yaml and make sure it contains the following:

[root@test102 otel]# cat docker-compose.yaml

version: "3"
services:
  otel-collector:
    image: signoz/signoz-otel-collector:0.66.5
    command: ["--config=/etc/otel-collector-config.yaml"]
    user: root # required for reading docker container logs
    container_name: signoz-host-otel-collector
    volumes:
      - ./otel-collector-config.yaml:/etc/otel-collector-config.yaml
      - /var/lib/docker/containers:/var/lib/docker/containers:ro
      - /var/log/nginx:/var/log/nginx:ro
    ## these environment variables are mainly used for host monitoring
    environment:
      - OTEL_RESOURCE_ATTRIBUTES=host.name=test102,os.type=linux # replace test102 with this host's hostname
      - DOCKER_MULTI_NODE_CLUSTER=false
      - LOW_CARDINAL_EXCEPTION_GROUPING=false
    restart: on-failure

[root@test102 otel]#
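The `OTEL_RESOURCE_ATTRIBUTES` variable is a comma-separated list of `key=value` pairs, which the `env` detector turns into resource labels on every metric. As a rough illustration of that format (not the collector's actual implementation), the Python sketch below splits the value used above into a dictionary. The function name `parse_resource_attributes` is hypothetical, and percent-decoding of values is assumed from the OpenTelemetry convention for this variable.

```python
from urllib.parse import unquote


def parse_resource_attributes(raw):
    """Split a 'k1=v1,k2=v2' string into a dict, skipping malformed entries."""
    attributes = {}
    for pair in raw.split(","):
        pair = pair.strip()
        if not pair:
            continue
        key, separator, value = pair.partition("=")
        if not separator:
            continue  # no '=' in this entry, so it carries no value
        attributes[key.strip()] = unquote(value.strip())
    return attributes


print(parse_resource_attributes("host.name=test102,os.type=linux"))
```

The resulting `host.name` label is what the variable-based dashboard later uses to tell hosts apart, so each host needs its own value here.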

1.2 Restart otel-collector on the target host

Restart:

[root@test102 otel]# docker-compose -f docker-compose.yaml restart otel-collector

Restarting signoz-host-otel-collector ... done

[root@test102 otel]#

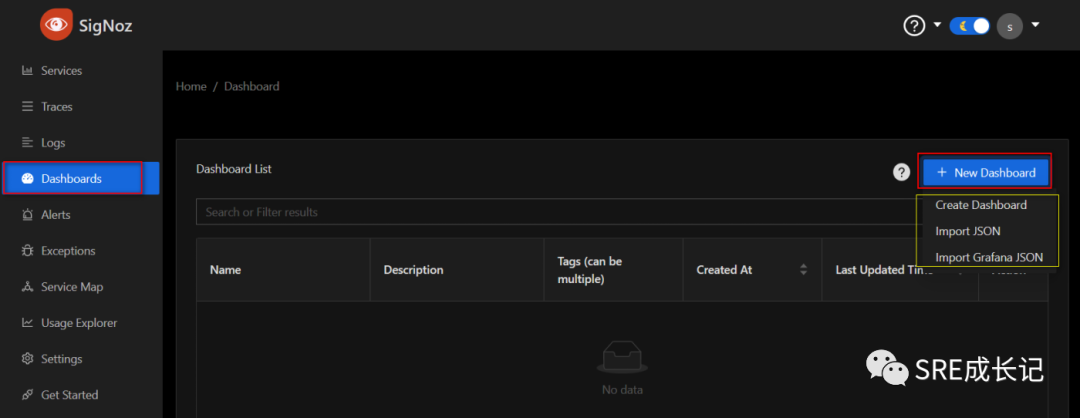

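1.3 Create or import a dashboard

Dashboard > New Dashboard:

As shown in the screenshot, there are three ways to create a dashboard:

Create Dashboard: manually create a new dashboard and add the metrics you care about;

Import JSON: import a dashboard JSON file that has already been prepared for SigNoz;

Import Grafana JSON: import a Grafana template file. During import, some of the data is adjusted automatically to fit SigNoz dashboards. This option is still experimental; based on a trial with version 0.16.2, support is not yet smooth, and substantial manual adjustment is needed.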

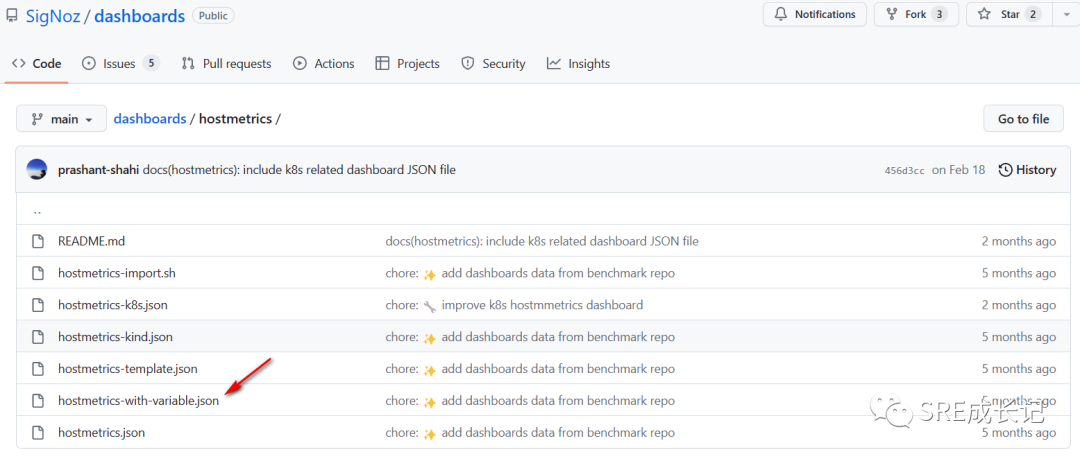

SigNoz currently provides several ready-to-use dashboards that can be imported directly: https://github.com/SigNoz/dashboards

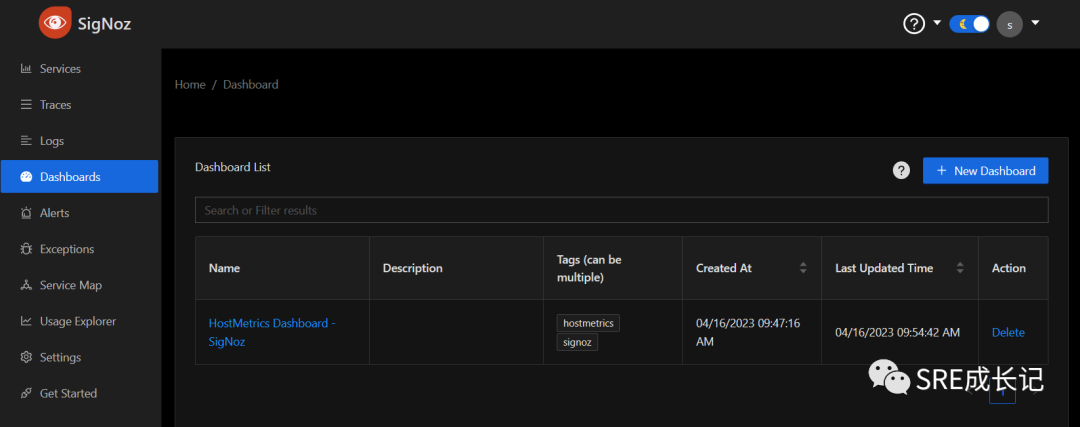

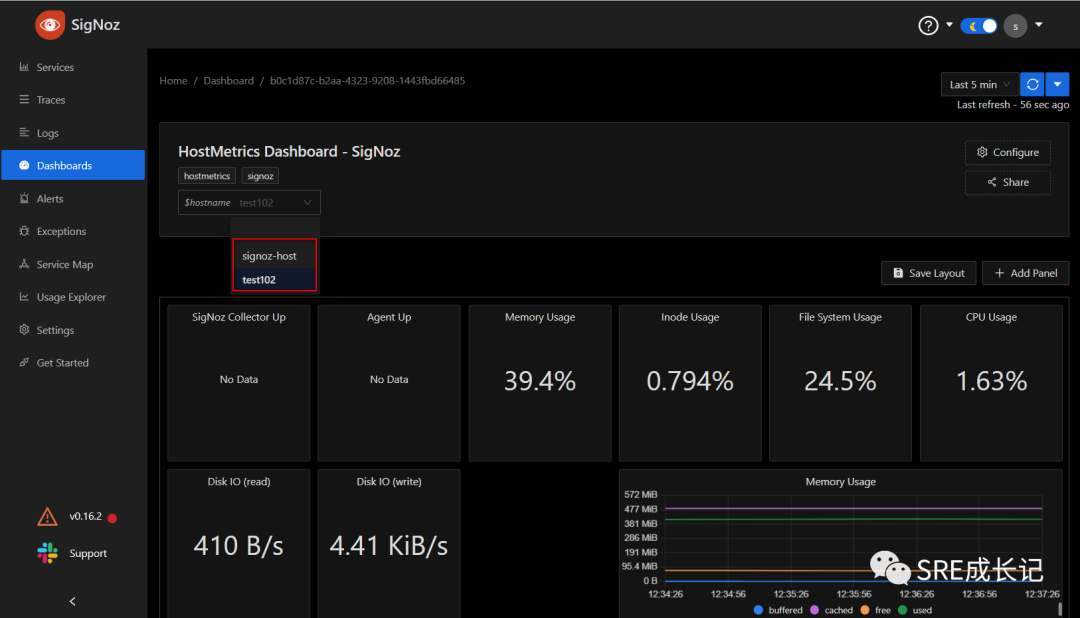

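After importing the template with a variable, hostmetrics-with-variable.json, the result looks like this:

With the host name as a variable, you can view the monitoring dashboards of multiple hosts.

1.4 Create a dedicated dashboard for a host

To create a dashboard used by one particular host only, that is, one without the hostname variable, follow these steps:

1) Download dashboards-main from https://github.com/SigNoz/dashboards to the target server;

2) Edit the script dashboards-main/hostmetrics/hostmetrics-import.sh and, in SIGNOZ_ENDPOINT="http://localhost:3301", change localhost to the real IP address or domain name of SigNoz;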

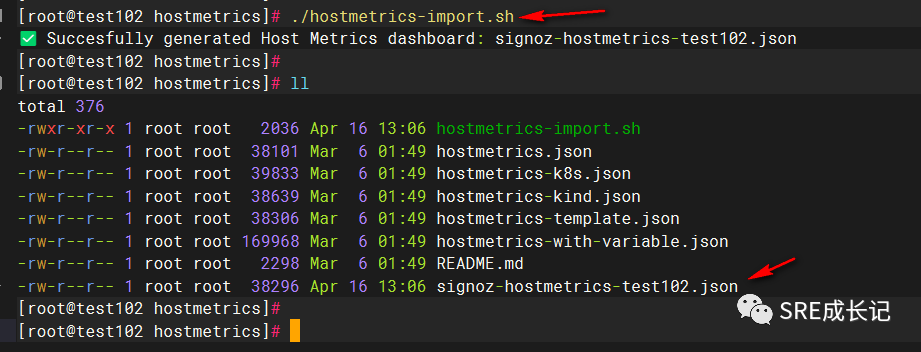

3) Run the script with sh hostmetrics-import.sh to generate the Host Metrics dashboard for this host:

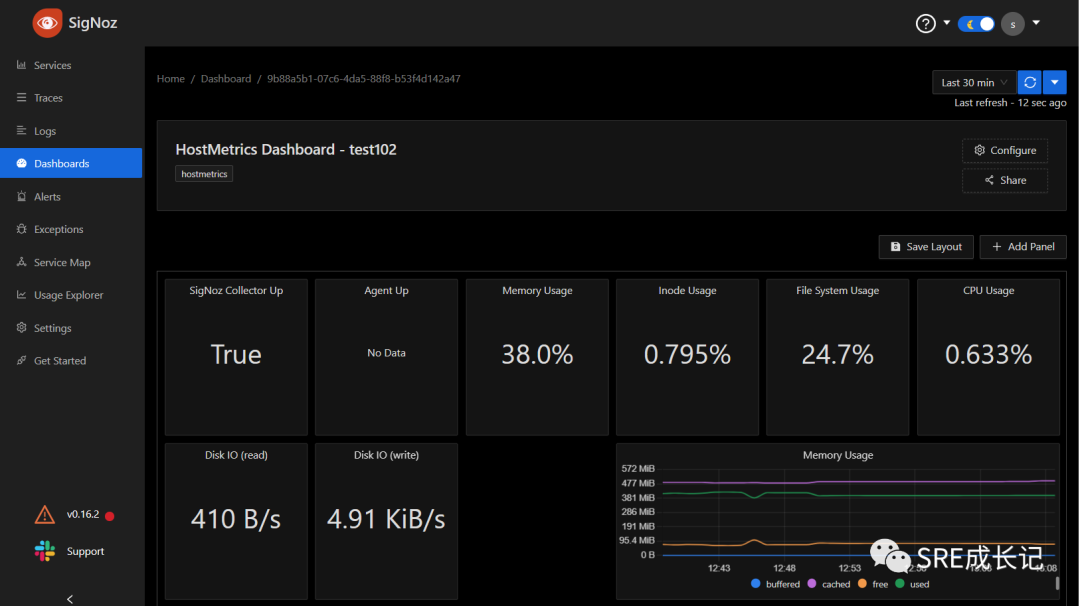

Then import the newly generated file signoz-hostmetrics-test102.json as a dashboard:
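When the same change has to be made on several hosts, the edit in step 2 can be scripted. The Python sketch below shows the substitution on a single line of text; the function name `point_script_at` is hypothetical, and 10.0.0.101 is assumed to be the SigNoz host because it is the address used in the exporter endpoint earlier. It assumes the script contains the line exactly as quoted in step 2.

```python
import re


def point_script_at(script_text, host):
    """Replace 'localhost' in the SIGNOZ_ENDPOINT assignment with the given host."""
    pattern = r'(SIGNOZ_ENDPOINT="http://)localhost(:3301")'
    # A function replacement avoids ambiguity when the host starts with digits.
    return re.sub(pattern, lambda m: m.group(1) + host + m.group(2), script_text)


sample = 'SIGNOZ_ENDPOINT="http://localhost:3301"\n'
print(point_script_at(sample, "10.0.0.101"), end="")
```

Lines that do not match the pattern are returned unchanged, so running it over the whole script text only touches the endpoint assignment.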

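1.5 View the monitoring metrics

Log in to ClickHouse to see the available metrics. The syntax is similar to MySQL. The complete login and query steps are:

[root@test101 clickhouse-setup]# docker exec -it clickhouse /bin/bash # enter the clickhouse container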

bash-5.1#

bash-5.1# clickhouse-client # log in to ClickHouse

ClickHouse client version 22.8.8.3 (official build).

Connecting to localhost:9000 as user default.

Connected to ClickHouse server version 22.8.8 revision 54460.

Warnings:

* Linux transparent hugepages are set to "always". Check /sys/kernel/mm/transparent_hugepage/enabled

* Linux threads max count is too low. Check /proc/sys/kernel/threads-max

* Available memory at server startup is too low (2GiB).

clickhouse :)

clickhouse :)

clickhouse :) select DISTINCT(JSONExtractString(labels,'__name__')) as metrics from signoz_metrics.time_series_v2 # list the metric names

## The metrics can also be exported to a file

clickhouse :) select DISTINCT(labels) from signoz_metrics.time_series_v2 INTO OUTFILE '/tmp/output.csv' # export the metric labels to a file
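The first query works because each row's `labels` column holds a JSON object whose `__name__` key is the metric name. The Python sketch below mimics that extraction on a few label strings, for example lines read back from the exported file. The sample rows and the function name `distinct_metric_names` are made up for illustration; real rows carry more labels.

```python
import json


def distinct_metric_names(label_rows):
    """Collect the unique '__name__' values from JSON label strings."""
    names = set()
    for row in label_rows:
        name = json.loads(row).get("__name__", "")
        if name:  # rows without a metric name are ignored in this sketch
            names.add(name)
    return sorted(names)


# Hypothetical label rows, shaped like the JSON stored in the labels column.
rows = [
    '{"__name__": "system_cpu_time", "host_name": "test102", "state": "idle"}',
    '{"__name__": "system_cpu_time", "host_name": "test102", "state": "user"}',
    '{"__name__": "system_memory_usage", "host_name": "test102", "state": "used"}',
]

print(distinct_metric_names(rows))
```

The names returned this way are the ones to type into the metric selector when building dashboard panels or alerts.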

2. Alert configuration

2.1 Configure an alert channel

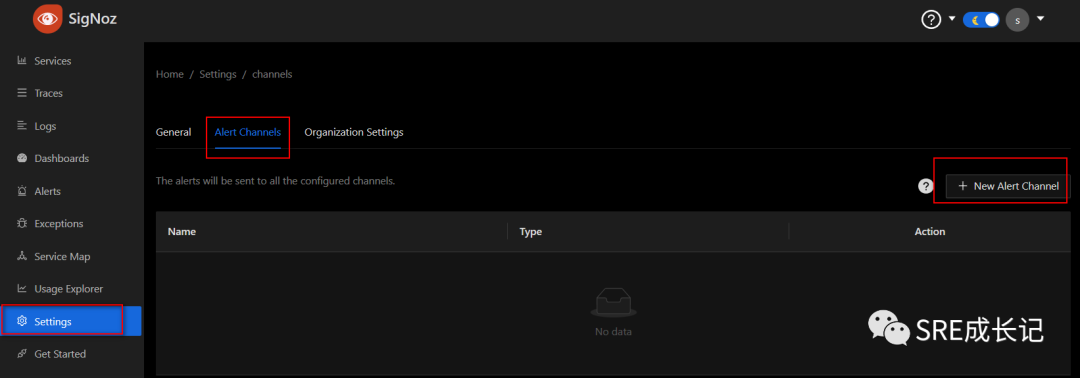

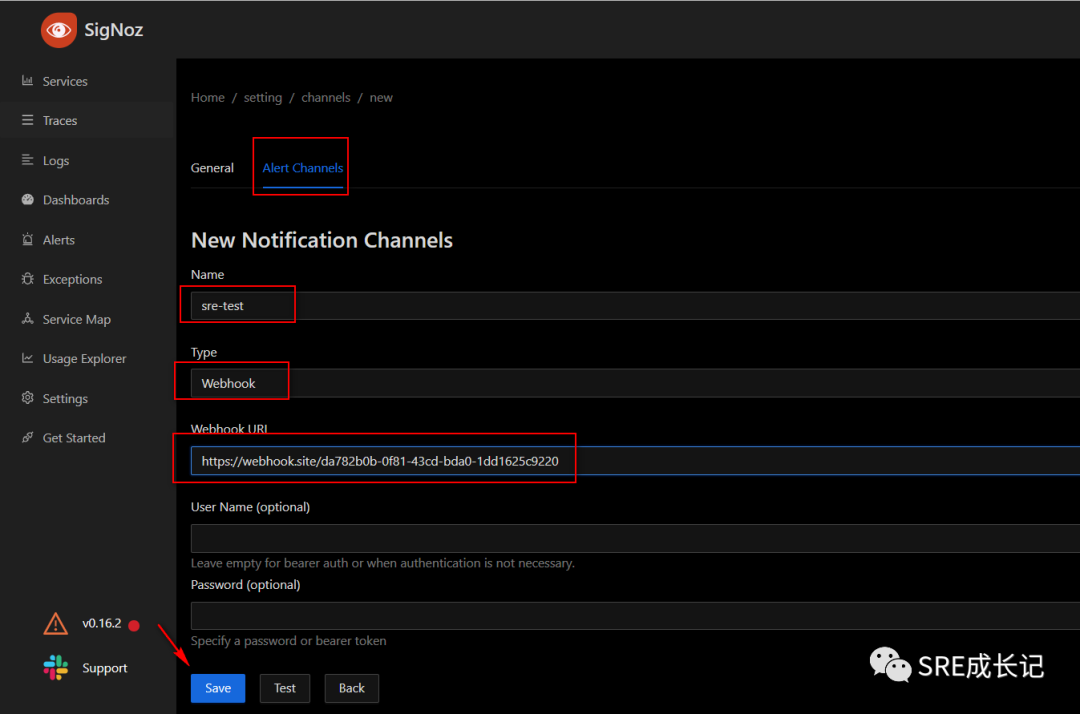

Settings > Alert Channels > New Alert Channel adds an alert channel:

Only three channel types are currently supported: Slack, Webhook, and PagerDuty.

A webhook is used for this experiment.

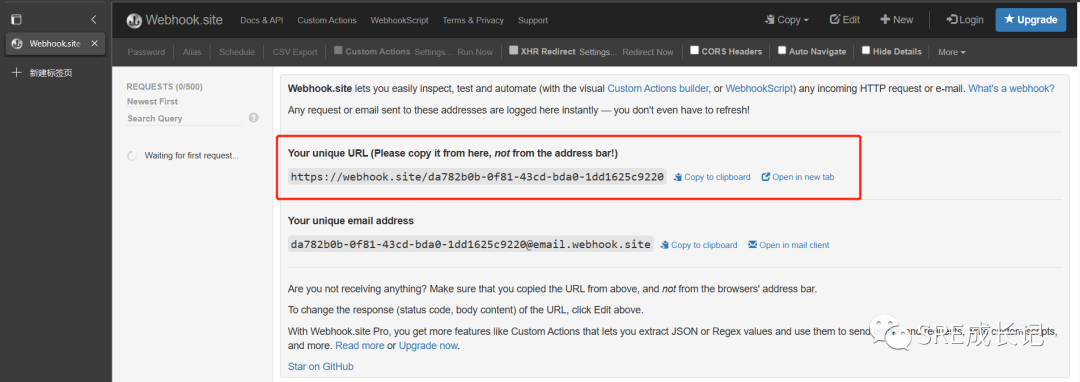

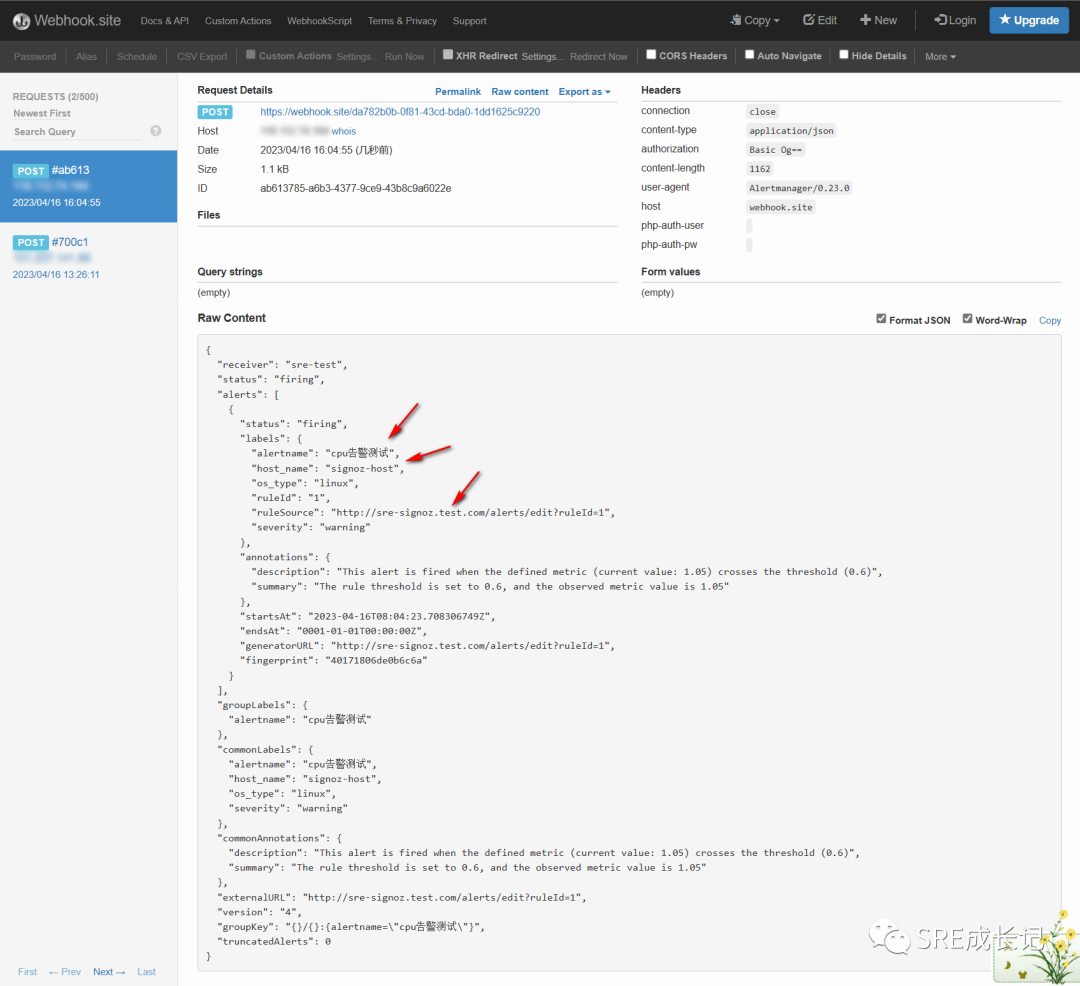

访问https://webhook.site,拿到一个url:https://webhook.site/da782b0b-0f81-43cd-bda0-1dd1625c9220

将此URL配置到告警渠道,填写好信息后,可以点“test”按钮测试连通性:

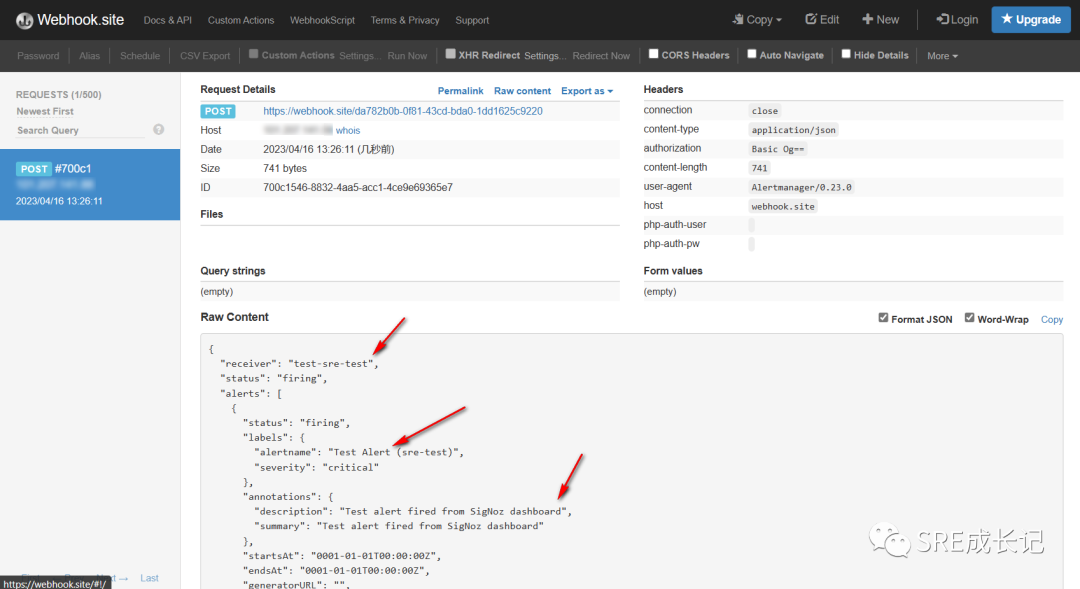

test测试成功后,https://webhook.site/界面能收到信息:

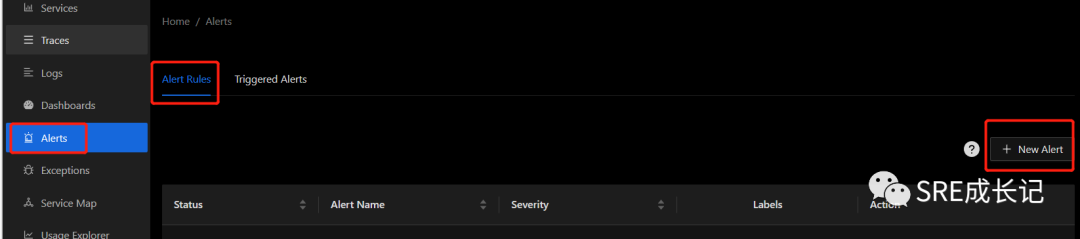

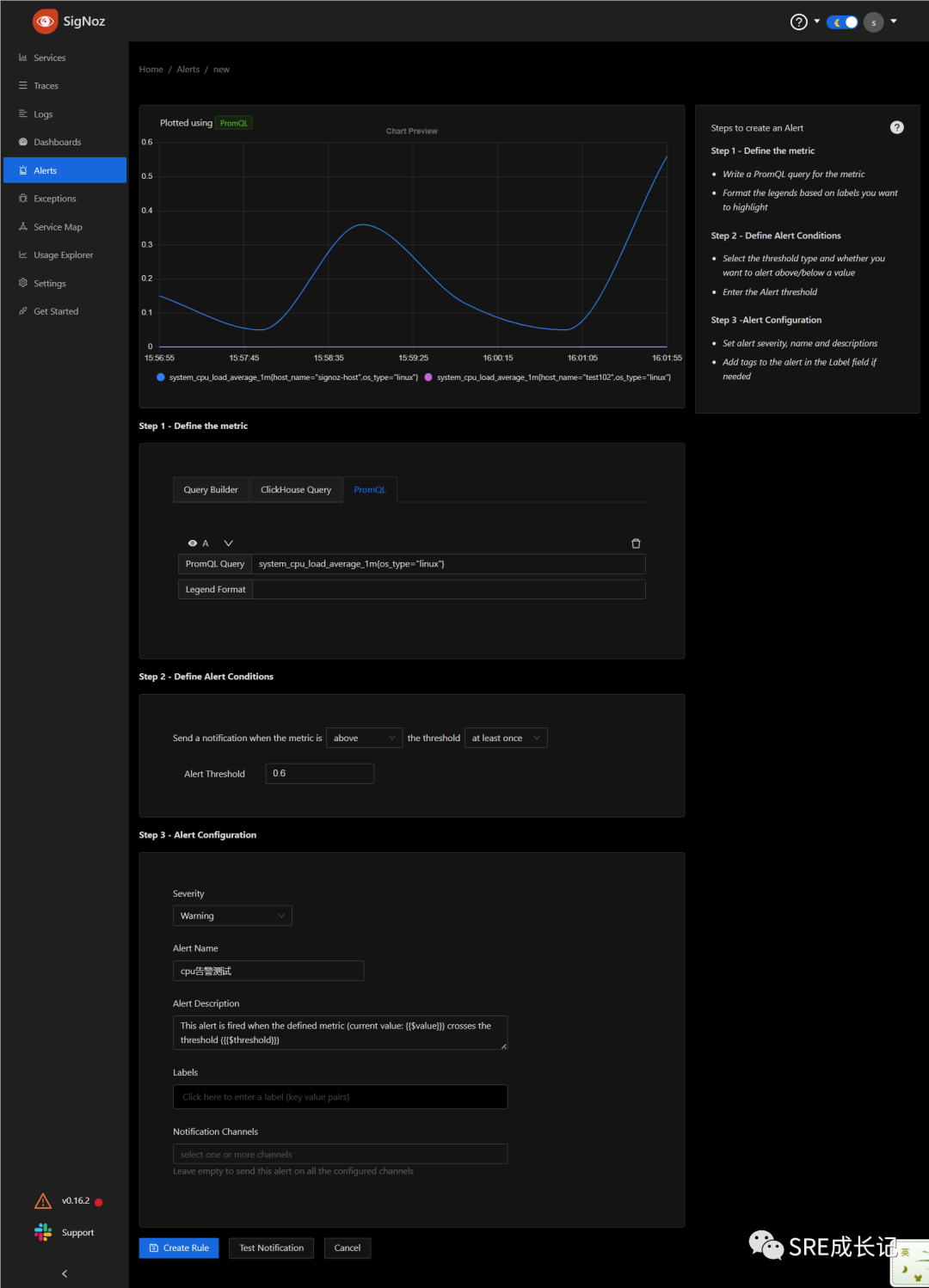
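If the SigNoz host cannot reach a public service such as webhook.site, a small local receiver can serve the same purpose for testing. The Python sketch below uses only the standard library, accepts any JSON POST, and keeps the parsed bodies in memory. The names `AlertHandler`, `start_receiver`, and `post_json` are hypothetical, and the `{"status": "firing"}` payload is a stand-in, since the exact body SigNoz sends is not shown in this article.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

received = []  # parsed JSON bodies, in arrival order


class AlertHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        try:
            received.append(json.loads(body))
            status = 200
        except ValueError:  # not valid JSON (or not valid UTF-8)
            status = 400
        self.send_response(status)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, format, *args):
        pass  # keep the console quiet


def start_receiver(port=0):
    """Serve in a background thread; port 0 lets the OS pick a free port."""
    server = HTTPServer(("127.0.0.1", port), AlertHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def post_json(url, payload):
    """Send a JSON POST, the way a webhook sender would, and return the status code."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.status


if __name__ == "__main__":
    server = start_receiver()
    url = f"http://127.0.0.1:{server.server_address[1]}/"
    print(post_json(url, {"status": "firing"}), received)
    server.shutdown()
```

The sketch binds to 127.0.0.1 so that it is safe to run as-is. To receive real notifications, it would have to listen on an address that the SigNoz host can reach, and that address would go into the Webhook URL field.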

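2.2 Create a test alert

Alerts can be created from metrics, logs, traces, or exceptions. Here a simple metric-based alert is created as a test:

Its settings are as follows: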

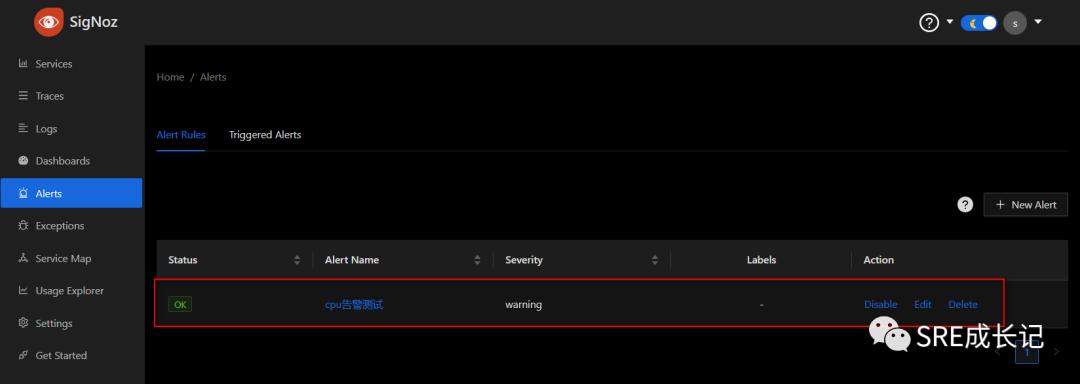

After creation:

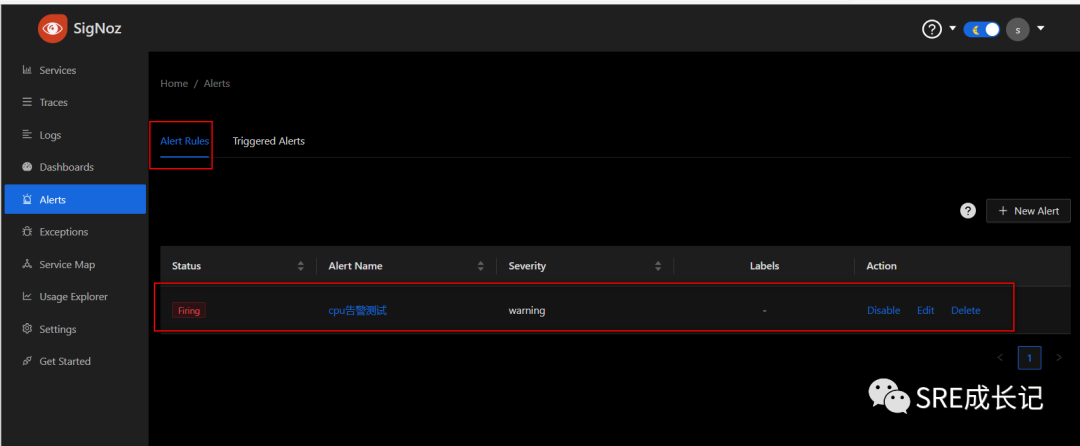

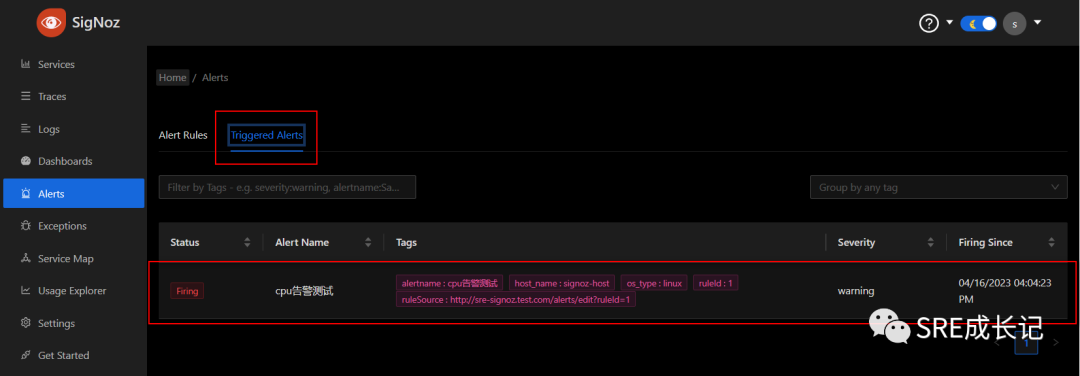

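After a while, once the alert threshold was reached, the alert started firing:

At the same time, https://webhook.site/ also received the alert:

When the value dropped back below the threshold, the alert in the SigNoz UI changed from Firing back to OK.

This completes the host monitoring onboarding and alert configuration.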
