RAG (Retrieval-Augmented Generation)

Published: January 13, 2024

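📑Preface

This article is an introduction to RAG (Retrieval-Augmented Generation). If you spot anything that could be improved, please point it out.

🎬About the author: Hi everyone, I'm 听风与他🥇

Blog homepage: 听风与他 on CSDN

🌄Quote of the day: Keep building up your skills, and we'll meet at the summit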

RAG

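1. RAG definition

- An LLM is a pre-trained model, which means it cannot update the knowledge stored in its weights in real time. For this reason, the industry has settled on approaches such as RAG (Retrieval-Augmented Generation), which attach an external knowledge base to extend the LLM's knowledge quickly.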

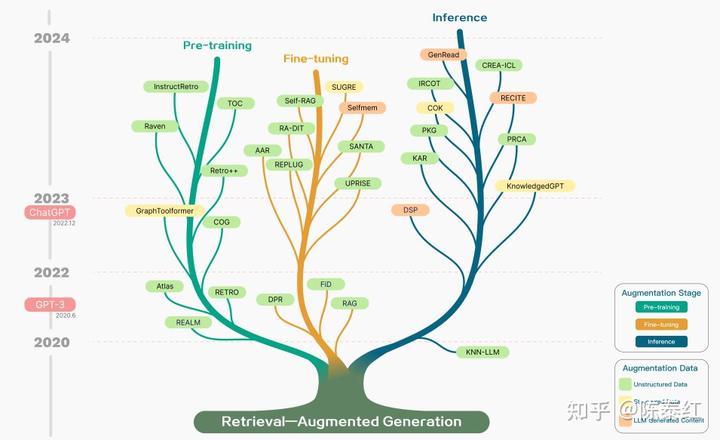

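- RAG augmentation can take place at three stages: pre-training, fine-tuning, and inference. The augmentation data can come from three sources: unstructured data, structured data, and LLM-generated content.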

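2. Evolution of RAG techniques

- By optimizing key components such as the retriever and the generator, RAG provides more efficient solutions for complex, knowledge-intensive tasks handled by large models.

- Retrieval stage: an encoder model is used to retrieve the documents relevant to the question.

- Generation stage: the system generates text conditioned on the retrieved context.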
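The two stages above can be sketched in plain Python. This is a minimal illustration, not how a production retriever works: simple token overlap stands in for an encoder model, and `generate` only assembles the prompt that a real system would send to an LLM. The function names and the sample documents are made up for this example.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase and split into word tokens (a stand-in for a real encoder)."""
    return re.findall(r"\w+", text.lower())


def retrieve(question, documents, k=2):
    """Retrieval stage: rank documents by how many question tokens they share."""
    q = Counter(tokenize(question))
    scored = []
    for doc in documents:
        d = Counter(tokenize(doc))
        overlap = sum((q & d).values())  # size of the multiset intersection
        scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def generate(question, context):
    """Generation stage: build the prompt a real system would pass to an LLM."""
    joined = "\n".join(context)
    return f"Context:\n{joined}\n\nQuestion: {question}\nAnswer:"


docs = [
    "Weaviate is a vector database.",
    "LangChain provides text splitters and retrievers.",
    "Bananas are yellow.",
]
question = "What is a vector database?"
context = retrieve(question, docs, k=1)
prompt = generate(question, context)
print(prompt)
```

Only the retrieved document reaches the prompt, which is the core idea: the generator is conditioned on a small, relevant slice of the knowledge base rather than on everything.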

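3. Advantages of RAG

RAG combines a retrieval system with a generative model. It can draw on up-to-date information, improves answer quality, and offers better interpretability and adaptability. Put simply, the model's knowledge can be refreshed by updating the retrieval store in real time, without retraining the model.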

Implementing RAG with LangChain

1. Basic environment setup

pip install langchain openai weaviate-client

2. Create a .env file (for example configuration.env) in the project root to store the configuration

OPENAI_API_KEY="put your OpenAI API key here"
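The article does not show how the key gets from this file into the process environment, where the OpenAI client looks for `OPENAI_API_KEY`. In practice the python-dotenv package's `load_dotenv` is commonly used for this. The sketch below is a standard-library stand-in, assuming simple `KEY="value"` lines; `load_env_file` is a hypothetical helper, not part of LangChain or OpenAI.

```python
import os


def load_env_file(path):
    """Read KEY=VALUE lines from a file into os.environ (hypothetical helper)."""
    loaded = {}
    with open(path, encoding="utf-8") as fh:
        for raw in fh:
            line = raw.strip()
            # Skip blank lines, comments, and anything that is not KEY=VALUE.
            if not line or line.startswith("#") or "=" not in line:
                continue
            key, _, value = line.partition("=")
            loaded[key.strip()] = value.strip().strip('"').strip("'")
    os.environ.update(loaded)
    return loaded


# Load the file only if it exists, so the script still starts without it.
if os.path.exists(".env"):
    load_env_file(".env")
```

Call this before creating any OpenAI-backed object, so the key is already in the environment when the client reads it.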

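3. Prepare a vector database to serve as the external knowledge source that holds all the additional information.

3.1 Loading the data

- This example uses 斗破苍穹.txt (referenced as ./a.txt in the code below) as the input document, loaded through LangChain's TextLoader.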

from langchain.document_loaders import TextLoader

loader = TextLoader('./a.txt', encoding='utf-8')  # assumes the text file is UTF-8 encoded

documents = loader.load()

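3.2 Chunking the data

- In its raw form the document is too long to fit into the model's context window, so it has to be split into smaller parts. LangChain ships with many built-in text splitters. Here a CharacterTextSplitter with a chunk_size of about 1024 and a chunk_overlap of 128 is used; the overlap preserves textual continuity between chunks.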

from langchain.text_splitter import CharacterTextSplitter

text_splitter = CharacterTextSplitter(chunk_size=1024, chunk_overlap=128)

chunks = text_splitter.split_documents(documents)
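To make the roles of chunk_size and chunk_overlap concrete, here is a simplified sketch of fixed-size chunking with overlap. It is not how CharacterTextSplitter works internally (that class splits on a separator and then merges the pieces); `split_with_overlap` is a hypothetical function that only illustrates the sliding-window idea.

```python
def split_with_overlap(text, chunk_size=1024, chunk_overlap=128):
    """Cut text into windows of chunk_size that share chunk_overlap characters."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


# A 2500-character sample made of repeating digits.
sample = "".join(str(i % 10) for i in range(2500))
chunks = split_with_overlap(sample)
print([len(c) for c in chunks])
```

Each chunk starts with the last 128 characters of the previous one, so a sentence that straddles a boundary still appears intact in at least one chunk.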

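3.3 Storing the chunks

- To enable semantic search across the text chunks, a vector embedding has to be generated for each chunk and stored alongside it. The embeddings can be generated with an OpenAI embedding model and stored in the Weaviate vector database. Calling .from_documents() populates the vector database with the chunks automatically.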

from langchain.embeddings import OpenAIEmbeddings

from langchain.vectorstores import Weaviate

import weaviate

from weaviate.embedded import EmbeddedOptions

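# Start an embedded Weaviate instance (no separate server required)
client = weaviate.Client(
    embedded_options=EmbeddedOptions()
)

# Embed every chunk with OpenAI and store it; by_text=False searches by vector
vectorstore = Weaviate.from_documents(
    client=client,
    documents=chunks,
    embedding=OpenAIEmbeddings(),
    by_text=False
)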
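What a vector store does with the chunks can be illustrated with a toy in-memory version. This is a sketch under strong assumptions: `embed` produces bag-of-words counts instead of a learned OpenAI embedding, and `ToyVectorStore` is a made-up class that has nothing to do with Weaviate's actual implementation. It only shows the pattern of storing each text next to its vector and ranking by cosine similarity.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: a sparse bag-of-words count vector (not a learned model)."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyVectorStore:
    """Keeps (text, vector) pairs in a list and searches them linearly."""

    def __init__(self):
        self._rows = []

    @classmethod
    def from_texts(cls, texts):
        store = cls()
        for text in texts:
            store._rows.append((text, embed(text)))
        return store

    def similarity_search(self, query, k=2):
        q = embed(query)
        ranked = sorted(self._rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = ToyVectorStore.from_texts([
    "chunk about vector databases and embeddings",
    "chunk about text splitters",
    "chunk about prompt templates",
])
hits = store.similarity_search("how are embeddings stored in vector databases", k=1)
print(hits)
```

A real vector database replaces the linear scan with an approximate nearest-neighbor index, which is what keeps search fast once there are many chunks.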

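RAG implementation

1. Data retrieval

- Once the data is stored in the vector database, the database can be exposed as a retriever component, which fetches relevant context based on the semantic similarity between the user's query and the embedded chunks.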

retriever = vectorstore.as_retriever()

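2. Prompt augmentation

- After retrieval, the relevant context can be used to augment the prompt. This step requires a prompt template, which makes the prompt easy to customize, as shown below.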

from langchain.prompts import ChatPromptTemplate

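template = """You are a question-answering assistant. Use the retrieved context below to answer the question. If you don't know the answer, just say that you don't know. Question: {question}, Context: {context}, Answer:
"""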

prompt = ChatPromptTemplate.from_template(template)
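The effect of the template can be shown without LangChain. The sketch below uses plain `str.format` to fill the two placeholders; `build_prompt` and the sample chunks are hypothetical, and ChatPromptTemplate additionally wraps the result in chat messages, which this example leaves out.

```python
TEMPLATE = (
    "You are a question-answering assistant. Use the retrieved context below "
    "to answer the question. If you do not know the answer, say so.\n"
    "Question: {question}\nContext: {context}\nAnswer:"
)


def build_prompt(question, chunks):
    """Join the retrieved chunks and substitute them into the template."""
    context = "\n\n".join(chunks)
    return TEMPLATE.format(question=question, context=context)


prompt_text = build_prompt(
    "Who wrote the report?",
    ["The report was written by Alice.", "It was published in May."],
)
print(prompt_text)
```

The model never sees the vector store directly. It only sees this filled-in string, so whatever the retriever returns is all the external knowledge the answer can rely on.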

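3. Answer generation

- Build a chain for the RAG pipeline that links the retriever, the prompt template, and the LLM together. Once the RAG chain is defined, it can be invoked.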

from langchain.chat_models import ChatOpenAI

from langchain.schema.runnable import RunnablePassthrough

from langchain.schema.output_parser import StrOutputParser

llm = ChatOpenAI(model_name="gpt-3.5-turbo", temperature=0)

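# The dict sends the query to the retriever and passes it through unchanged
rag_chain = (
    {"context": retriever, "question": RunnablePassthrough()}
    | prompt
    | llm
    | StrOutputParser()
)

query = "萧炎的表妹是谁?"  # "Who is Xiao Yan's cousin?"

res = rag_chain.invoke(query)

print(f'Answer: {res}')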
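The `|` syntax in the chain can look opaque, so here is a small sketch of the composition pattern it relies on. This is not LangChain's implementation: `Step` and `fan_out` are made-up names, the retriever returns a fixed list, and the "LLM" just upper-cases its input. The dict at the head of the real chain plays the role of `fan_out` here, running each branch on the same input.

```python
class Step:
    """A callable wrapper that supports `a | b` composition (illustrative only)."""

    def __init__(self, fn):
        self.fn = fn

    def invoke(self, value):
        return self.fn(value)

    def __or__(self, other):
        # Run this step first, then feed its output to the next step.
        return Step(lambda value: other.invoke(self.invoke(value)))


def fan_out(**branches):
    """Run every branch on the same input and collect the results in a dict."""
    return Step(lambda value: {name: step.invoke(value) for name, step in branches.items()})


fake_retriever = Step(lambda q: ["Paris is the capital of France."])
passthrough = Step(lambda q: q)
fill_prompt = Step(lambda d: f"Context: {' '.join(d['context'])}\nQuestion: {d['question']}")
fake_llm = Step(lambda p: p.upper())  # stand-in for a model call
parse = Step(lambda s: s.strip())

chain = fan_out(context=fake_retriever, question=passthrough) | fill_prompt | fake_llm | parse
result = chain.invoke("What is the capital of France?")
print(result)
```

The query enters once, is copied to both the retriever branch and the passthrough branch, and the resulting dict supplies exactly the two variables the prompt template expects.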

📑End of article

Source: https://blog.csdn.net/weixin_61494821/article/details/135576031
