【复现】AnimateDiff ControlNet Pipeline复现过程记录

发布时间:2023年12月20日

code:diffusers/examples/community at main · huggingface/diffusers · GitHub

似乎不用全下载,目前只用到了pipeline_animatediff_controlnet.py和anidi.py(readme中的AnimateDiff ControlNet Pipeline粘出来)

1.路径及文件问题

改为自己的绝对路径,custom_pipeline也要改,指向py文件

motion_id = "/data/diffusers-main/checkpoint/animatediff-motion-adapter-v1-5-2"

adapter = MotionAdapter.from_pretrained(motion_id)

controlnet = ControlNetModel.from_pretrained("/data/diffusers-main/checkpoint/control_v11p_sd15_openpose", torch_dtype=torch.float16)

vae = AutoencoderKL.from_pretrained("/data/diffusers-main/checkpoint/sd-vae-ft-mse", torch_dtype=torch.float16)

# 加载预训练的运动适配器、控制网络和自编码器模型

model_id = "/data/diffusers-main/checkpoint/Realistic_Vision_V5.1_noVAE"

pipe = DiffusionPipeline.from_pretrained(

model_id,

motion_adapter=adapter,

controlnet=controlnet,

vae=vae,

# custom_pipeline="pipeline_animatediff_controlnet",

custom_pipeline="/data/diffusers-main/examples/community/pipeline_animatediff_controlnet.py",

).to(device="cuda", dtype=torch.float16)

# 创建扩散管道并加载预训练的模型,包括运动适配器、控制网络和自编码器模型?pipeline_animatediff_controlnet.py文件中的主类名要改成pipeline_animatediff_controlnet

同时在anidi.py中添加

from pipeline_animatediff_controlnet import pipeline_animatediff_controlnet这样两个文件就连起来了。直接把custom_pipeline改成AnimateDiffControlNetPipeline应该也可以

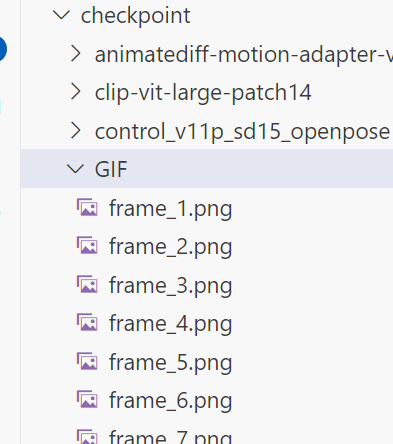

2.找不到图片

?这是要自己把16帧条件输入视频处理成图像吗?

拆分工具:在线GIF图片帧拆分工具 - UU在线工具 (uutool.cn)

?

?

?

?

?ok了

3.torch.cuda.OutOfMemoryError

Traceback (most recent call last):

File "/data/diffusers-main/examples/community/anidi.py", line 43, in <module>

result = pipe(

File "/data/diffusers-main/.conda/lib/python3.10/site-packages/torch/autograd/grad_mode.py", line 27, in decorate_context

return func(*args, **kwargs)

File "/root/.cache/huggingface/modules/diffusers_modules/local/pipeline_animatediff_controlnet.py", line 1079, in __call__

down_block_res_samples, mid_block_res_sample = self.controlnet(

File "/data/diffusers-main/.conda/lib/python3.10/site-packages/torch/nn/modules/module.py", line 1194, in _call_impl

return forward_call(*input, **kwargs)

File "/data/diffusers-main/.conda/lib/python3.10/site-packages/diffusers/models/controlnet.py", line 800, in forward

sample, res_samples = downsample_block(

File "/data/diffusers-main/.conda/lib/python3.10/site-packages/torch/nn/modules/module.py", line 1194, in _call_impl

return forward_call(*input, **kwargs)

File "/data/diffusers-main/.conda/lib/python3.10/site-packages/diffusers/models/unet_2d_blocks.py", line 1160, in forward

hidden_states = attn(

File "/data/diffusers-main/.conda/lib/python3.10/site-packages/torch/nn/modules/module.py", line 1194, in _call_impl

return forward_call(*input, **kwargs)

File "/data/diffusers-main/.conda/lib/python3.10/site-packages/diffusers/models/transformer_2d.py", line 392, in forward

hidden_states = block(

File "/data/diffusers-main/.conda/lib/python3.10/site-packages/torch/nn/modules/module.py", line 1194, in _call_impl

return forward_call(*input, **kwargs)

File "/data/diffusers-main/.conda/lib/python3.10/site-packages/diffusers/models/attention.py", line 288, in forward

attn_output = self.attn1(

File "/data/diffusers-main/.conda/lib/python3.10/site-packages/torch/nn/modules/module.py", line 1194, in _call_impl

return forward_call(*input, **kwargs)

File "/data/diffusers-main/.conda/lib/python3.10/site-packages/diffusers/models/attention_processor.py", line 522, in forward

return self.processor(

File "/data/diffusers-main/.conda/lib/python3.10/site-packages/diffusers/models/attention_processor.py", line 743, in __call__

attention_probs = attn.get_attention_scores(query, key, attention_mask)

File "/data/diffusers-main/.conda/lib/python3.10/site-packages/diffusers/models/attention_processor.py", line 590, in get_attention_scores

baddbmm_input = torch.empty(

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 18.00 GiB (GPU 0; 11.91 GiB total capacity; 5.43 GiB already allocated; 4.75 GiB free; 6.38 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF要求18G。。我单个GPU才12G,两个才够,尝试两个GPU一起用

?

文章来源:https://blog.csdn.net/weixin_57974242/article/details/135086956

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

最新文章

- Python教程

- 深入理解 MySQL 中的 HAVING 关键字和聚合函数

- Qt之QChar编码(1)

- MyBatis入门基础篇

- 用Python脚本实现FFmpeg批量转换

- 【CAN】Hardware Object的配置规则

- 我的创作纪念日

- 别再犹豫!一键下载安装Substance3D,在数字世界中创造引人注目的艺术品!

- 【无标题】

- TypeError: control character ‘delimiter‘ cannot be a newline (`\r` or `\n`)

- 社交直播类App开发的前景与需求深度解析

- 24秋招,小鹏汽车自动驾驶测试工程师一面

- Linux操作系统学习(二)、Linux的文件权限与目录配置

- 杰理-音箱-flash配置

- Linux常规操作指南