Kafka error: a fault occurred in a recent unsafe memory access operation in compiled Java code

Published: December 26, 2023

After a restart, the Kafka process exited unexpectedly.

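The Kafka server log showed a `java.lang.InternalError`: "a fault occurred in a recent unsafe memory access operation in compiled Java code". The stack trace shows it was thrown while the broker was loading and recovering log segments during startup, after which the broker shut down: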

tail -n 1000 server.log.2023-12-24-14

[2023-12-24 14:19:25,568] ERROR There was an error in one of the threads during logs loading: java.lang.InternalError: a fault occurred in a recent unsafe memory access operation in compiled Java code (kafka.log.LogManager)

[2023-12-24 14:19:25,573] ERROR [KafkaServer id=44] Fatal error during KafkaServer startup. Prepare to shutdown (kafka.server.KafkaServer)

java.lang.InternalError: a fault occurred in a recent unsafe memory access operation in compiled Java code

at org.apache.kafka.common.record.FileLogInputStream.nextBatch(FileLogInputStream.java:88)

at org.apache.kafka.common.record.FileLogInputStream.nextBatch(FileLogInputStream.java:41)

at org.apache.kafka.common.record.RecordBatchIterator.makeNext(RecordBatchIterator.java:35)

at org.apache.kafka.common.record.RecordBatchIterator.makeNext(RecordBatchIterator.java:24)

at org.apache.kafka.common.utils.AbstractIterator.maybeComputeNext(AbstractIterator.java:79)

at org.apache.kafka.common.utils.AbstractIterator.hasNext(AbstractIterator.java:45)

at scala.collection.convert.Wrappers$JIteratorWrapper.hasNext(Wrappers.scala:43)

at scala.collection.Iterator.foreach(Iterator.scala:943)

at scala.collection.Iterator.foreach$(Iterator.scala:943)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1431)

at scala.collection.IterableLike.foreach(IterableLike.scala:74)

at scala.collection.IterableLike.foreach$(IterableLike.scala:73)

at scala.collection.AbstractIterable.foreach(Iterable.scala:56)

at kafka.log.LogSegment.recover(LogSegment.scala:344)

at kafka.log.Log.recoverSegment(Log.scala:648)

at kafka.log.Log.recoverLog(Log.scala:787)

at kafka.log.Log.$anonfun$loadSegments$3(Log.scala:723)

at scala.runtime.java8.JFunction0$mcJ$sp.apply(JFunction0$mcJ$sp.java:23)

at kafka.log.Log.retryOnOffsetOverflow(Log.scala:2360)

at kafka.log.Log.loadSegments(Log.scala:723)

at kafka.log.Log.<init>(Log.scala:287)

at kafka.log.Log$.apply(Log.scala:2494)

at kafka.log.LogManager.loadLog(LogManager.scala:274)

at kafka.log.LogManager.$anonfun$loadLogs$12(LogManager.scala:353)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)
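To locate this failure again across rotated logs, a small search helps. A minimal sketch, assuming the broker logs follow the `server.log.YYYY-MM-DD-HH` naming seen above and sit in one directory; the function name `find_startup_faults` is hypothetical:

```shell
# Hypothetical helper: print every line (with file name and line number) in the
# rotated Kafka server logs that reports the InternalError or the fatal startup error.
find_startup_faults() {
  local log_dir="${1:-.}"   # directory holding server.log* files; defaults to the current one
  grep -H -n -E 'InternalError|Fatal error during KafkaServer startup' "$log_dir"/server.log* 2>/dev/null
}

# Usage: find_startup_faults /path/to/kafka/logs
```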

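The cause was that the log directories had run out of disk space. `df -h` shows several data disks at 92% to 100% usage, with /data/data7 and /data/data15 at 100%: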

[root@44 bak]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 284G 0 284G 0% /dev

/dev/mapper/rhel-root 542G 75G 468G 14% /

/dev/sdf1 1.7T 1.6T 92G 95% /data/data6

/dev/sds1 1.7T 33M 1.7T 1% /data/data19

/dev/sdd1 1.7T 57M 1.7T 1% /data/data4

/dev/sdb1 894G 33M 894G 1% /data/data2

/dev/sdr1 1.7T 33M 1.7T 1% /data/data18

/dev/sdq1 1.7T 1.6T 55G 97% /data/data17

/dev/sdk1 1.7T 68G 1.6T 5% /data/data11

/dev/sdl1 1.7T 23G 1.7T 2% /data/data12

/dev/sdx1 1.7T 33M 1.7T 1% /data/data24

/dev/sdm1 1.7T 4.1G 1.7T 1% /data/data13

/dev/sdt1 1.7T 33M 1.7T 1% /data/data20

/dev/sdy2 194M 149M 45M 78% /boot

/dev/sdp1 1.7T 43G 1.6T 3% /data/data16

/dev/sde1 1.7T 33M 1.7T 1% /data/data5

/dev/sdn1 1.7T 12G 1.7T 1% /data/data14

/dev/sdg1 1.7T 1.7T 15G 100% /data/data7

/dev/sdo1 1.7T 1.7T 150M 100% /data/data15

/dev/sdh1 1.7T 92G 1.6T 6% /data/data8

/dev/sdu1 1.7T 33M 1.7T 1% /data/data21

/dev/sdc1 894G 33M 894G 1% /data/data3

/dev/sda1 894G 33M 894G 1% /data/data1

/dev/sdw1 1.7T 301G 1.4T 18% /data/data23

/dev/sdv1 1.7T 33M 1.7T 1% /data/data22

/dev/sdi1 1.7T 111G 1.6T 7% /data/data9

/dev/sdj1 1.7T 1.6T 140G 92% /data/data10
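Scanning two dozen mounts by eye is error-prone. A minimal sketch that filters `df` output by usage; it assumes POSIX-format output from `df -P` (one line per filesystem, capacity in column 5, mount point in column 6, no spaces in mount points), and the 90% default threshold is an arbitrary choice:

```shell
# Hypothetical helper: read `df -P` output on stdin and print the mount point and
# usage of every filesystem at or above the threshold percentage (default 90).
full_filesystems() {
  awk -v t="${1:-90}" '
    NR > 1 {                       # skip the header line
      use = $5
      sub(/%/, "", use)            # "100%" -> "100"
      if (use + 0 >= t + 0) print $6, $5
    }'
}

# Usage: df -P | full_filesystems 90
```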

In the full directories, such as /data/data15 and /data/data7, find what is taking up the space:

cd /data/data15

du -lh --max-depth=1 . | sort -rh | head

One topic partition turned out to be using 996 GB.
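The same search can report the topic and partition directly. A sketch assuming GNU `du` and `sort` (for human-readable sizes) and Kafka's `<topic>-<partition>` directory naming; because topic names may themselves contain `-`, the name is split at the last one. `largest_partitions` is a hypothetical name:

```shell
# Hypothetical helper: show the largest partition directories under one Kafka data
# directory, with the directory name split into topic and partition number.
# Assumes GNU du/sort, whose -h options handle sizes such as 4.0K and 996G.
largest_partitions() {
  local data_dir="$1" count="${2:-10}"
  du -sh "$data_dir"/*/ 2>/dev/null | sort -rh | head -n "$count" |
    while read -r size path; do
      name=$(basename "$path")
      # Split at the last '-': topic names may themselves contain '-'.
      printf '%s\ttopic=%s\tpartition=%s\n' "$size" "${name%-*}" "${name##*-}"
    done
}

# Usage: largest_partitions /data/data15 5
```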

Inside that partition's directory there were log files more than three days old.

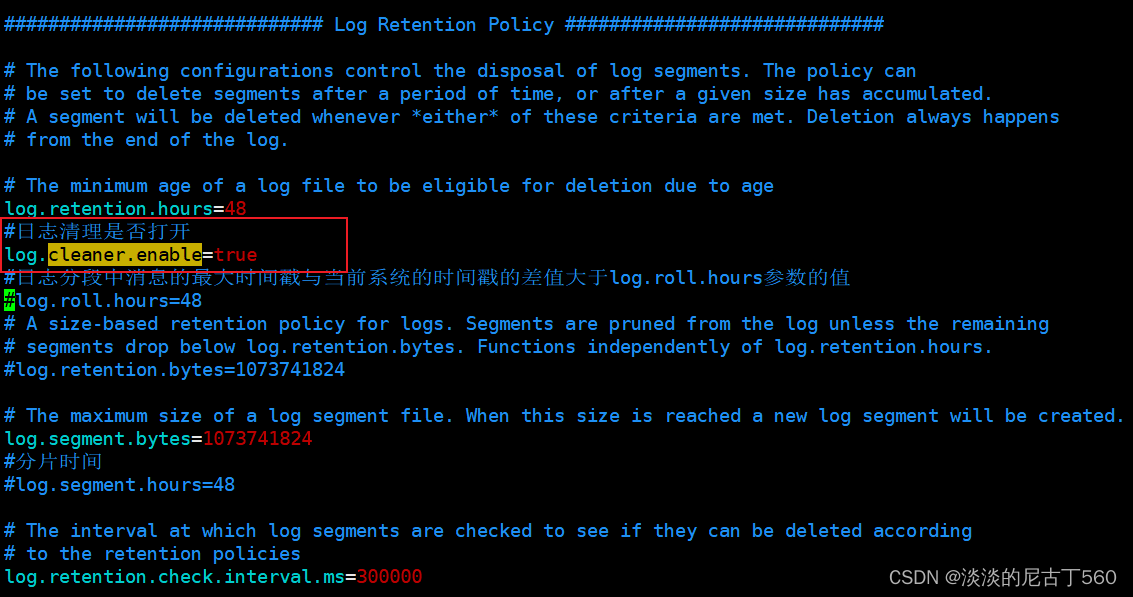
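Segment files older than the configured retention can be listed with a rough check on file modification time. It is only an approximation: Kafka's time-based retention is primarily based on the largest record timestamp in each segment, not on mtime. The function name and arguments are hypothetical:

```shell
# Hypothetical helper: list .log segment files in one partition directory whose
# modification time is older than the given number of hours (default 48).
old_segments() {
  local dir="$1" hours="${2:-48}"
  find "$dir" -maxdepth 1 -type f -name '*.log' -mmin +"$((hours * 60))" | sort
}

# Usage: old_segments /data/data15/<topic>-<partition> 48
```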

Yet `server.properties` sets the log retention time to 48 hours, which means the setting was not taking effect.
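When a retention setting seems to be ignored, it is worth confirming what the broker file actually contains. For reference, Kafka gives `log.retention.ms` precedence over `log.retention.minutes`, which in turn takes precedence over `log.retention.hours`, and a topic-level `retention.ms` overrides the broker default. A sketch (hypothetical function name) that prints only the uncommented retention and segment settings from a config file:

```shell
# Hypothetical helper: print the retention- and segment-related lines that are
# actually set (not commented out) in a broker config file such as server.properties.
# Settings missing from the output fall back to Kafka's defaults.
show_retention_settings() {
  grep -E '^[[:space:]]*log\.(retention\.(hours|minutes|ms|bytes|check\.interval\.ms)|segment\.bytes|roll\.(hours|ms))[[:space:]]*=' "$1"
}

# Usage: show_retention_settings config/server.properties
```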

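The reason turned out to be that the log file had not reached the 1 GB segment size (`log.segment.bytes`, 1 GB by default), so Kafka had not rolled it into a new segment, and Kafka does not clean up a log file that has not been rolled. As a result, `log.retention.hours` appeared not to delete anything.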

After adding the following configuration and restarting Kafka, the old logs were deleted automatically after a while.

Problem solved.

Source: https://blog.csdn.net/weixin_67333392/article/details/135201940

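This article was contributed by an internet user. The views expressed are the author's own and do not represent the position of this site. This site only provides information storage space, does not own the rights, and assumes no related legal liability. If the content infringes rights, violates laws or regulations, or is factually inaccurate, please contact 我的编程经验分享网 at chenni525@qq.com; it will be removed promptly once verified.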

