[kubernetes]控制平面ETCD

什么是ETCD

- CoreOS基于Raft开发的分布式key-value存储,可用于服务发现、共享配置以及一致性保障(如数据库选主、分布式锁等)

- etcd像是专门为集群环境的服务发现和注册而设计,它提供了数据TTL失效、数据改变监视、多值、目录监听、分布式锁原子操作等功能,可以方便的跟踪并管理集群节点的状态

特点:

- 键值对存储:将数据存储在分层组织的目录中,如同在标准文件系统中

监测变更:监测特定的键或目录以进行更改,并对值的更改做出反应 - 简单: curl可访问的用户的API(HTTP+JSON)

- 安全: 可选的SSL客户端证书认证

- 快速: 单实例每秒1000次写操作,2000+次读操作

- 可靠: 使用Raft算法保证一致性

主要功能

- key-value存储

- 监听机制

- key的过期及续约机制,用于监控和服务发现

- 基于监听机制的分布式异步系统

键值对存储

- 采用kv型数据存储,一般情况下比关系型数据库快

- 支持动态存储(内存)以及静态存储(磁盘)

- 分布式存储,可集成为多节点集群

- 存储方式,采用类似目录结构。(B+tree)

- 只有叶子节点才能真正存储数据,相当于文件

- 叶子节点的父节点一定是目录,目录不能存储数据

服务注册与发现

- 强一致性、高可用的服务存储目录

- 基于 Raft 算法的 etcd 天生就是这样一个强一致性、高可用的服务存储目录

- 一种注册服务和服务健康状况的机制

- 用户可以在 etcd 中注册服务,并且对注册的服务配置 key TTL,定时保持服务的心跳以达到监控健康状态的效果

消息发布与订阅

- 在分布式系统中,最适用的一种组件间通信方式就是消息发布与订阅

- 即构建一个配置共享中心,数据提供者在这个配置中心发布消息,而消息使用者则订阅他们

- 关心的主题,一旦主题有消息发布,就会实时通知订阅者

- 通过这种方式可以做到分布式系统配置的集中式管理与动态更新

- 应用中用到的一些配置信息放到etcd上进行集中管理

- 应用在启动的时候主动从etcd获取一次配置信息,同时,在etcd节点上注册一个Watcher并等待,以后每次配置有更新的时候,etcd都会实时通知订阅者,以此达到获取最新配置信息的目的

核心:TTL & CAS

- TTL(time to live)指的是给一个key设置一个有效期,到期后这个key就会被自动删掉,这在

很多分布式锁的实现上都会用到,可以保证锁的实时有效性 - Atomic Compare-and-Swap(CAS)指的是在对key进行赋值的时候,客户端需要提供一些条

件,当这些条件满足后,才能赋值成功。这些条件包括

? prevExist:key当前赋值前是否存在

? prevValue:key当前赋值前的值

? prevIndex:key当前赋值前的Index

- key的设置是有前提的,需要知道这个key当前的具体情况才可以对其设置

Raft 协议

概览

- Raft协议基于quorum机制,即大多数同意原则,任何的变更都需超过半数的成员确认

learner

- Raft 4.2.1引入的新角色

- 当出现一个etcd集群需要增加节点时,新节点与Leader的数据差异较大,需要较多数据同步才能跟上leader的最新的数据

- 此时Leader的网络带宽很可能被用尽,进而使得leader无法正常保持心跳

- 进而导致follower重新发起投票

- 进而可能引发etcd集群不可用

- Learner角色只接收数据而不参与投票,因此增加learner节点时,集群的quorum不变

etcd基于Raft的一致性

- 初始启动时,节点处于follower状态并被设定一个election timeout,如果在这一时间周期内没有收到

来自 leader 的 heartbeat,节点将发起选举:将自己切换为 candidate 之后,向集群中其它 follower

节点发送请求,询问其是否选举自己成为 leader - 当收到来自集群中过半数节点的接受投票后,节点即成为 leader,开始接收保存 client 的数据并向其它

的 follower 节点同步日志。如果没有达成一致,则candidate随机选择一个等待间隔(150ms ~

300ms)再次发起投票,得到集群中半数以上follower接受的candidate将成为leader - leader节点依靠定时向 follower 发送heartbeat来保持其地位

- 任何时候如果其它 follower 在 election timeout 期间都没有收到来自 leader 的 heartbeat,同样会将

自己的状态切换为 candidate 并发起选举。每成功选举一次,新 leader 的任期(Term)都会比之前

leader 的任期大1。

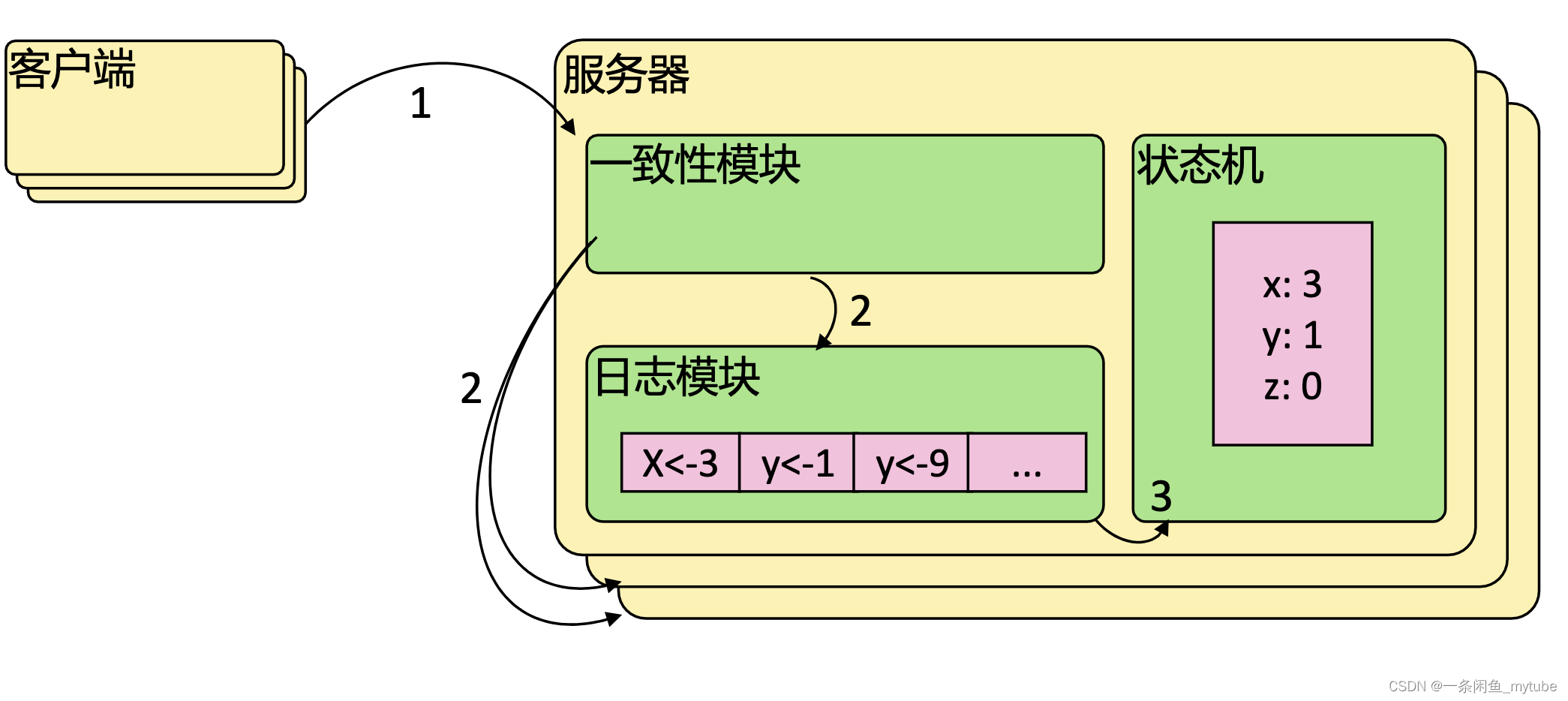

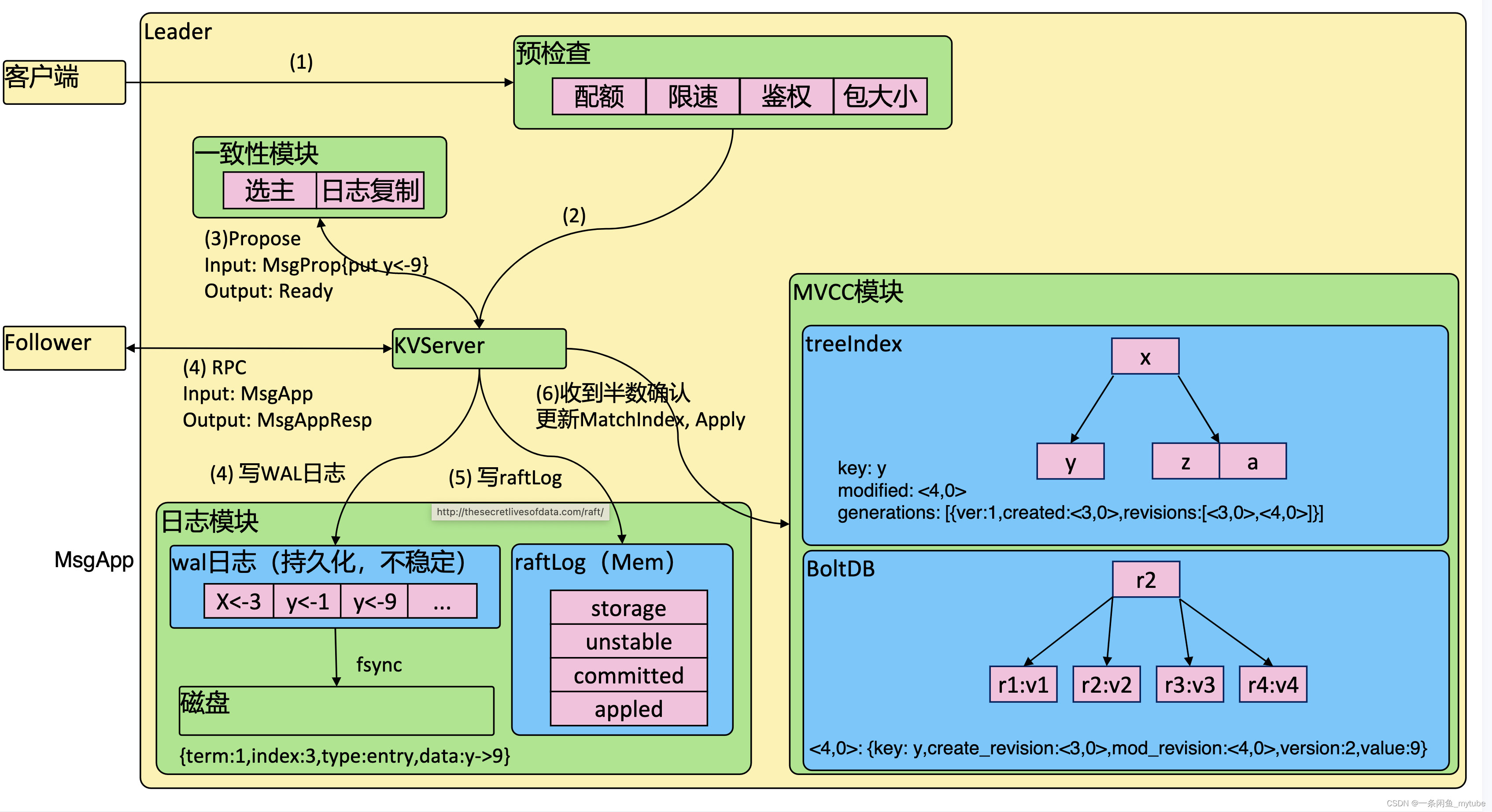

日志复制

- 当接Leader收到客户端的日志(事务请求)后先把该日志追加到本地的Log中,然后通过

heartbeat把该Entry同步给其他Follower,Follower接收到日志后记录日志然后向Leader发送

ACK,当Leader收到大多数(n/2+1)Follower的ACK信息后将该日志设置为已提交并追加到

本地磁盘中,通知客户端并在下个heartbeat中Leader将通知所有的Follower将该日志存储在自

己的本地磁盘中。

安全性

- 用于保证每个节点都执行相同序列的安全机制

- Safety就是用于保证选举出来的Leader一定包含先前 committed Log的机制

- 选举安全性(Election Safety):每个任期(Term)只能选举出一个Leade

- Leader完整性(Leader Completeness):指Leader日志的完整性,当Log在任期Term1被

Commit后,那么以后任期Term2、Term3…等的Leader必须包含该Log;Raft在选举阶段就使

用Term的判断用于保证完整性:当请求投票的该Candidate的Term较大或Term相同Index更大

则投票,否则拒绝该请求

失效处理

- 1 Leader失效:其他没有收到heartbeat的节点会发起新的选举,而当Leader恢复后由于步进

数小会自动成为follower(日志也会被新leader的日志覆盖) - 2 follower节点不可用:follower 节点不可用的情况相对容易解决。因为集群中的日志内容始

终是从 leader 节点同步的,只要这一节点再次加入集群时重新从 leader 节点处复制日志即可。 - 3 多个candidate:冲突后candidate将随机选择一个等待间隔(150ms ~ 300ms)再次发起

投票,得到集群中半数以上follower接受的candidate将成为leader

wal日志

- 数据结构LogEntry

- 字段type,只有两种,

一种是0表示Normal,1表示ConfChange(ConfChange表示 Etcd 本身的配置变更同步,比如有新的节点加入等) - term,每个term代表一个主节点的任期,每次主节点变更term就会变化

- index,这个序号是严格有序递增的,代表变更序号

- data,将raft request对象的pb结构整个保存下

- 一致性都通过同步wal日志来实现,每个节点将从主节点收到的data apply到本地的存储,Raft只关心日志的同步状态

- 如果本地存储实现的有bug,比如没有正确的将data apply到本地,也可能会导致数据不一致。

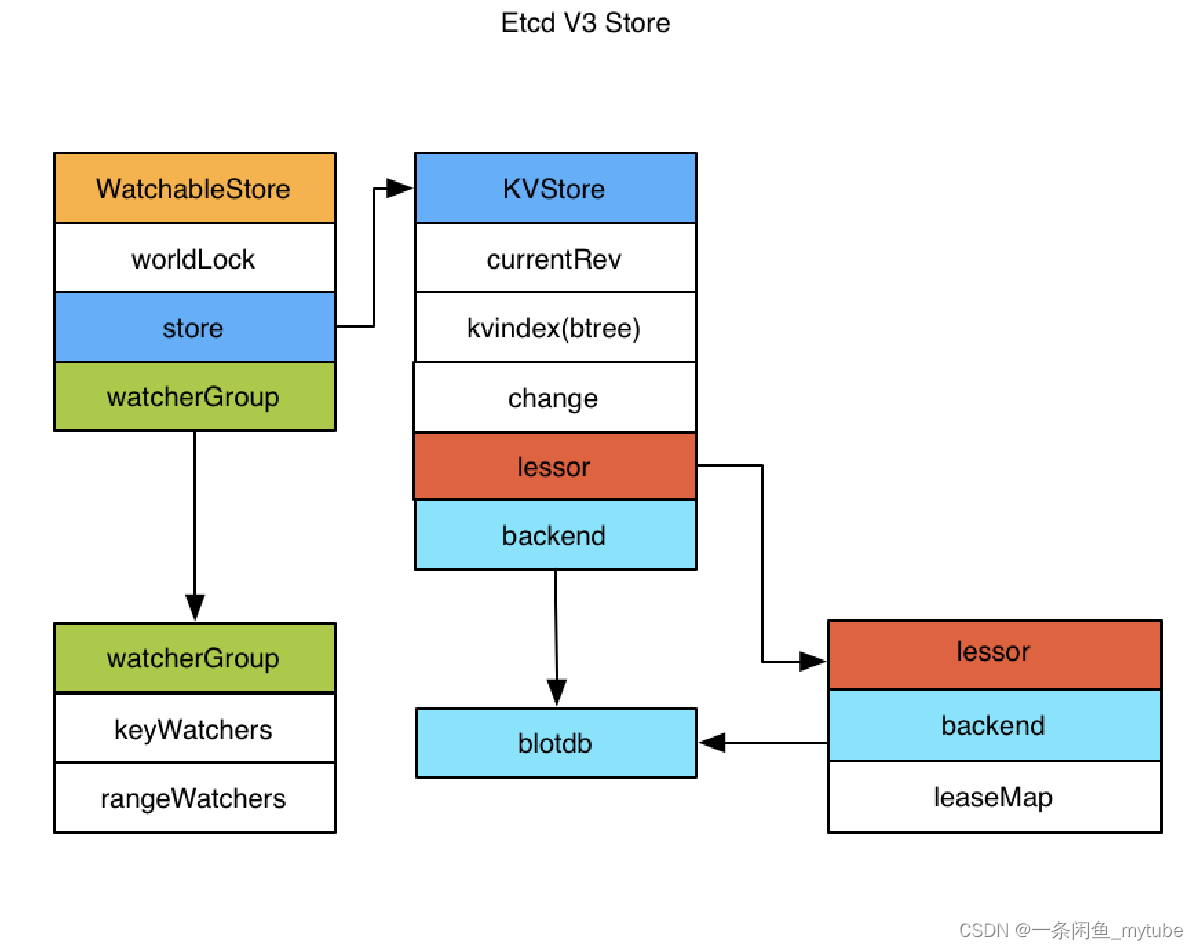

etcd v3 存储,Watch以及过期机制

-

存储机制

- 一部分是内存中的索引,kvindex,是基于Google开源的一个Golang的btree实现的

- 另外一部分是后端存储

- backend可以对接多种存储,当前使用的boltdb

- boltdb是一个单机的支持事务的kv存储,etcd 的事务是基于boltdb的事务实现的

- etcd 在boltdb中存储的key是reversion,value是 etcd 自己的key-value组合,也就是说 etcd 会在boltdb中把每个版都保存下,从而实现了多版本机制

- reversion主要由两部分组成,第一部分main rev,每次事务进行加一,第二部分sub rev,同一

个事务中的每次操作加一 - etcd 提供了命令和设置选项来控制compact,同时支持put操作的参数来精确控制某个key的历史版本数

- 内存kvindex保存的就是key和reversion之前的映射关系,用来加速查询

Watch机制

-

etcd v3 的watch机制支持watch某个固定的key,也支持watch一个范围

- watchGroup 包含两种watcher,一种是 key watchers,数据结构是每

个key对应一组watcher,另外一种是 range watchers, 数据结构是一个 IntervalTree,方便通

过区间查找到对应的watcher

- watchGroup 包含两种watcher,一种是 key watchers,数据结构是每

-

每个 WatchableStore 包含两种 watcherGroup,一种是synced,一种是unsynced,

前者表示该group的watcher数据都已经同步完毕,在等待新的变更,后者表示该group的

watcher数据同步落后于当前最新变更,还在追赶- 当 etcd 收到客户端的watch请求,如果请求携带了revision参数,则比较请求的revision和

store当前的revision,如果大于当前revision,则放入synced组中,否则放入unsynced组 - etcd 会启动一个后台的goroutine持续同步unsynced的watcher,然后将其迁移到synced组

- etcd v3 支持从任意版本开始watch,没有v2的1000条历史event表限制的问题(当然这是指没有compact的情况下)

- 当 etcd 收到客户端的watch请求,如果请求携带了revision参数,则比较请求的revision和

etcd 成员重要参数

成员相关参数

–name ‘default’

Human-readable name for this member.

–data-dir ‘${name}.etcd’

Path to the data directory.

–listen-peer-urls ‘http://localhost:2380’

List of URLs to listen on for peer traffic.

–listen-client-urls ‘http://localhost:2379’

List of URLs to listen on for client tra

etcd集群重要参数

–initial-advertise-peer-urls ‘http://localhost:2380’

List of this member’s peer URLs to advertise to the rest of the cluster.

–initial-cluster ‘default=http://localhost:2380’

Initial cluster configuration for bootstrapping.

–initial-cluster-state ‘new’

Initial cluster state (‘new’ or ‘existing’).

–initial-cluster-token ‘etcd-cluster’

Initial cluster token for the etcd cluster during bootstrap.

–advertise-client-urls ‘http://localhost:2379’

List of this member’s client URLs to advertise to the public

etcd安全相关参数

–cert-file ‘’

Path to the client server TLS cert file.

–key-file ‘’

Path to the client server TLS key file.

–client-crl-file ‘’

Path to the client certificate revocation list file.

–trusted-ca-file ‘’

Path to the client server TLS trusted CA cert file.

–peer-cert-file ‘’

Path to the peer server TLS cert file.

–peer-key-file ‘’

Path to the peer server TLS key file.

–peer-trusted-ca-file ‘’

Path to the peer server TLS trusted CA file

灾备

? 创建Snapshot

etcdctl --endpoints https://127.0.0.1:3379 --cert /tmp/etcd-certs/certs/127.0.0.1.pem –

key /tmp/etcd-certs/certs/127.0.0.1-key.pem --cacert /tmp/etcd-certs/certs/ca.pem

snapshot save snapshot.db

? 恢复数据

etcdctl snapshot restore snapshot.db

–name infra2

–data-dir=/tmp/etcd/infra2

–initial-cluster

infra0=http://127.0.0.1:3380,infra1=http://127.0.0.1:4380,infra2=http://127.0.0.1:5380

–initial-cluster-token etcd-cluster-1

–initial-advertise-peer-urls http://127.0.0.1:538

容量管理

- 单个对象不建议超过1.5M

- 默认容量2G

- 不建议超过8G

Alarm & Disarm Alarm

? 设置etcd存储大小

$ etcd --quota-backend-bytes=$((1610241024))

? 写爆磁盘

$ while [ 1 ]; do dd if=/dev/urandom bs=1024 count=1024 | ETCDCTL_API=3 etcdctl put key

|| break; done

? 查看endpoint状态

$ ETCDCTL_API=3 etcdctl --write-out=table endpoint status

? 查看alarm

$ ETCDCTL_API=3 etcdctl alarm list

? 清理碎片

$ ETCDCTL_API=3 etcdctl defrag

? 清理alarm

$ ETCDCTL_API=3 etcdctl alarm disarm

碎片整理

keep one hour of history

$ etcd --auto-compaction-retention=1

compact up to revision 3

$ etcdctl compact 3

$ etcdctl defrag

Finished defragmenting etcd member[127.0.0.1:2379]

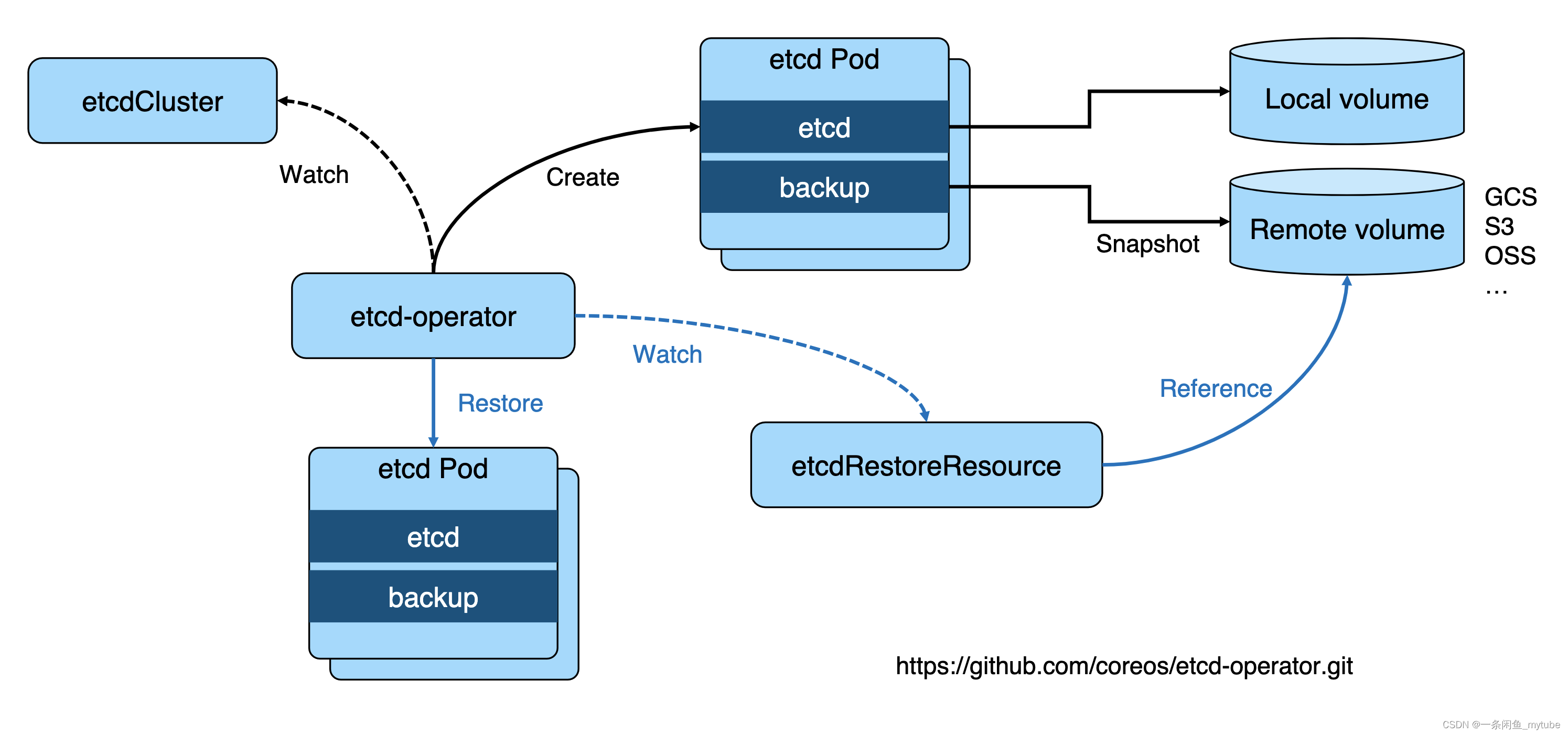

高可用etcd解决方案

etcd-operator: coreos开源的,基于kubernetes CRD完成etcd集群配置。Archived

https://github.com/coreos/etcd-operator

Etcd statefulset Helm chart: Bitnami(powered by vmware)

https://bitnami.com/stack/etcd/helm

https://github.com/bitnami/charts/blob/master/bitnami

Etcd Operato

基于 Bitnami 安装etcd高可用集群

? 安装helm

https://github.com/helm/helm/releases

? 通过helm安装etcd

helm repo add bitnami https://charts.bitnami.com/bitnami

helm install my-release bitnami/etcd

? 通过客户端与serve交互

kubectl run my-release-etcd-client --restart=‘Never’ --image

docker.io/bitnami/etcd:3.5.0-debian-10-r94 --env ROOT_PASSWORD=$(kubectl get

secret --namespace default my-release-etcd -o jsonpath=“{.data.etcd-root-password}” |

base64 --decode) --env ETCDCTL_ENDPOINTS=“my-release-

etcd.default.svc.cluster.local:2379” --namespace default --command – sleep infinity

Kubernetes如何使用etcd

-

etcd是kubernetes的后端存储

-

对于每一个kubernetes Object,都有对应的storage.go 负责对象的存储操作

- pkg/registry/core/pod/storage/storage.go

-

API server 启动脚本中指定etcd servers集群

spec:

containers:

- command:

- kube-apiserver

- --advertise-address=192.168.34.2

- --enable-bootstrap-token-auth=true

- --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt

- --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt

- --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key

- --etcd-servers=https://127.0.0.1:237

Kubernets对象在etcd中的存储路径

etcd在集群中所处的位置

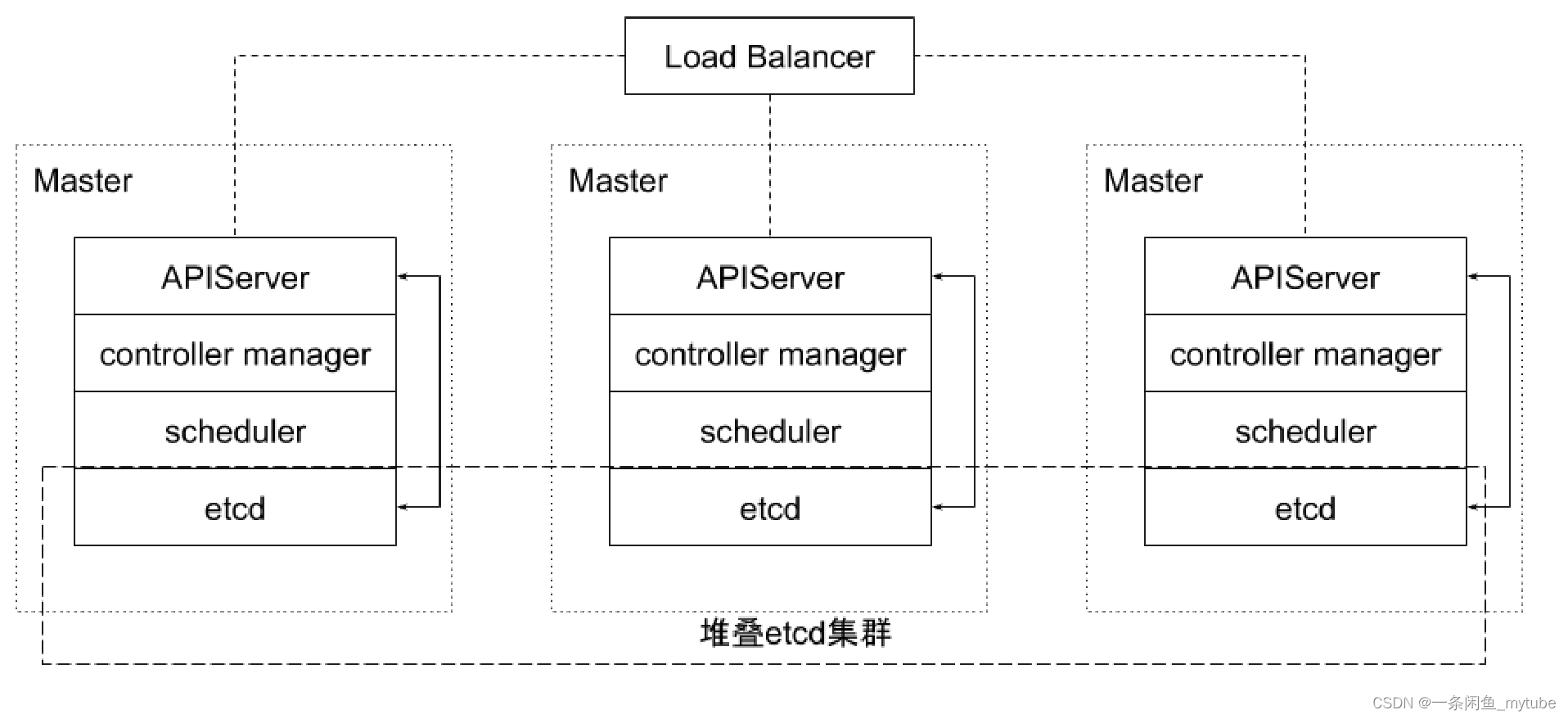

堆叠式etcd集群的高可用拓扑

- 这种拓扑将相同节点上的控制平面和etcd成员耦合在一起。优点在于建立起来非常容易,并且对副本的管理也更容易

- 堆叠式存在耦合失败的风险,如果一个节点发生故障,则etcd成员和控制平面实例都会丢失,并且集群冗余也会受到损害

- 可以通过添加更多控制平面节点来减轻这种风险。因此为实现集群高可用应该至少运行三个堆叠的Master节点

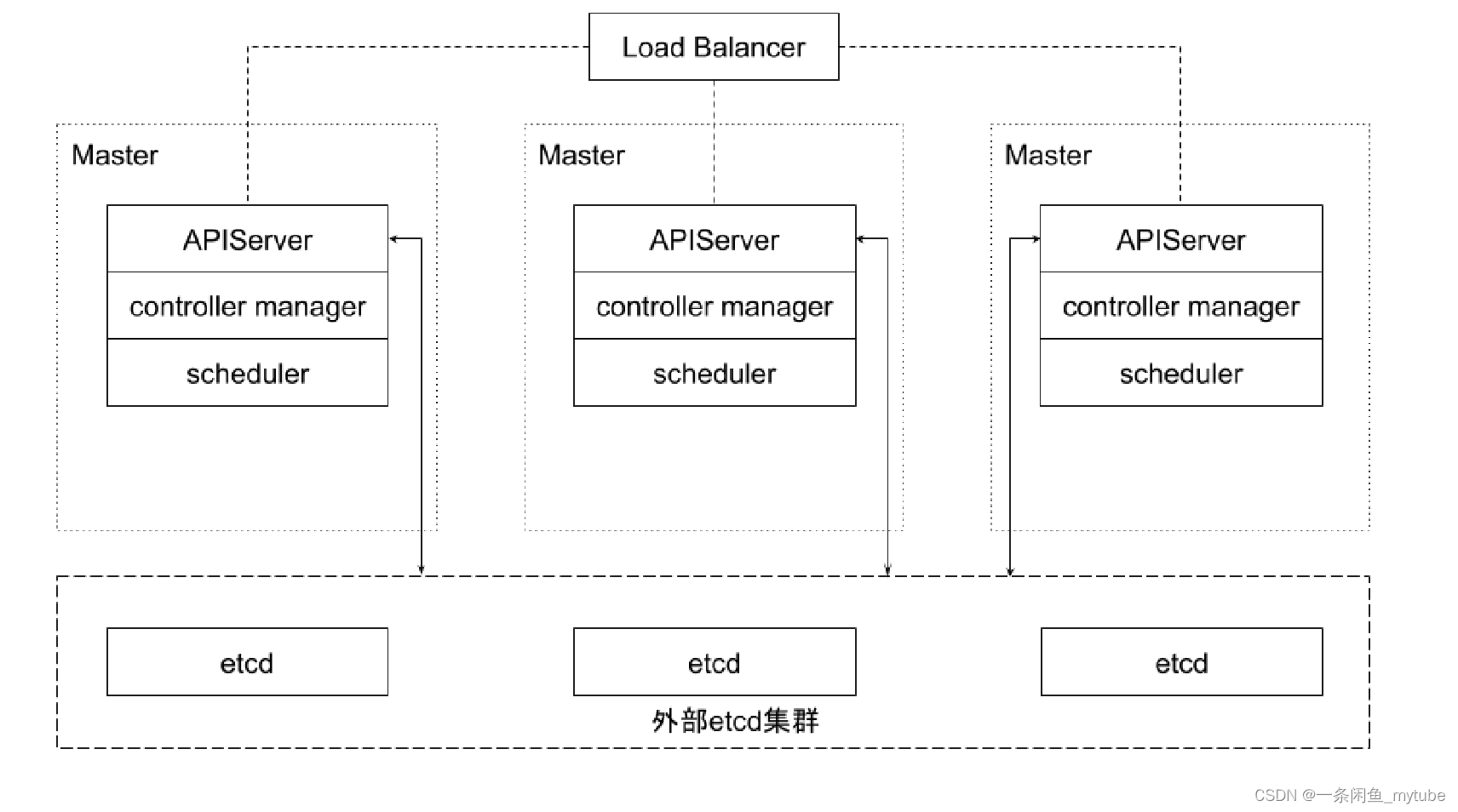

外部etcd集群的高可用拓扑

- 该拓扑将控制平面和etcd成员解耦。如果丢失一个Master节点,对etcd成员的影响较小,并

且不会像堆叠式拓扑那样对集群冗余产生太大影响。但是,此拓扑所需的主机数量是堆叠式拓

扑的两倍。具有此拓扑的群集至少需要三个主机用于控制平面节点,三个主机用于etcd集群

实践 - etcd集群高可用

- 保证高可用是首要目标

- 所有写操作都要经过leader

- apiserver的配置只连本地的etcd peer

- apiserver的配置指定所有etcd peers,但只有当前连接的etcd member异常,apiserver才会换目标

实践 - etcd集群高可用

? apiserver和etcd 部署在同一节点

? apiserver和etcd之间的通讯基于gRPC

? 针对每一个object,apiserver和etcd之间的Connection -> stream 共享

? http2的特性

? Stream quota

? 带来的问题?对于大规模集群,会造成链路阻塞

? 10000个pod,一次list操作需要返回的数据可能超过100M

实践 – etcd存储规划

本地 vs 远程

- Remote Storage

- 优势是假设永远可用

- 劣势是IO效率

- 最佳实践

- Local SSD

- 利用local volume分配空间

多少空间

- 与集群规模相关

安全性

-

peer和peer之间的通讯加密

- 是否有需求

- TLS的额外开销

- 运营复杂度增加

-

数据加密

- Kubernetes提供了针对secret的加密

-

事件分离

- 对于大规模集群,大量的事件会对etcd造成压力

- API server 启动脚本中指定etcd servers集群

减少网络延迟

- 数据中心内的RTT大概是数毫秒,国内的典型RTT约为50ms,两大洲之间的RTT可能慢至

400ms。因此建议etcd集群尽量同地域部署 - 当客户端到Leader的并发连接数量过多,可能会导致其他Follower节点发往Leader的请求因

为网络拥塞而被延迟处理

可以在节点上通过流量控制工具(Traffic Control)提高etcd成员之间发送数据的优先级来避免

减少磁盘I/O延迟

- 强烈建议使用SSD

- 典型的旋转磁盘写延迟约为10毫秒

- SSD(Solid State Drives,固态硬盘),延迟通常低于1毫秒

- 将etcd的数据存放在单独的磁盘内

- ionice命令对etcd服务设置更高的磁盘I/O优先级,尽可能避免其他进程的影响

$ ionice -c2 -n0 -p 'pgrep etcd'

保持合理的日志文件大小

- tcd以日志的形式保存数据,无论是数据创建还是修改,它都将操作追加到日志文件,因此日志

文件大小会随着数据修改次数而线性增长 - 当Kubernetes集群规模较大时,其对etcd集群中的数据更改也会很频繁,集群日记文件会迅速

增长 - etcd会以固定周期创建快照保存系统的当前状态,并移除旧日志文

件。另外当修改次数累积到一定的数量(默认是10000,通过参数“–snapshot-count”指

定),etcd也会创建快照文件 - 如果etcd的内存使用和磁盘使用过高,可以先分析是否数据写入频度过大导致快照频度过高,确

认后可通过调低快照触发的阈值来降低其对内存和磁盘的使用

设置合理的存储配额

- 存储空间的配额用于控制etcd数据空间的大小

- 合理的存储配额可保证集群操作的可靠性

- etcd的性能会因为存储空间的持续增长而严重下降

- 甚至有耗完集群磁盘空间导致不可预测集群行为的风险

自动压缩历史版本

- “–auto-compaction”,其值以小时为单位。也就是etcd会自动压缩该值设置的时间窗口之

前的历史版本

定期消除碎片化

- 压缩历史版本,相当于离散地抹去etcd存储空间某些数据,etcd存储空间中将会出现碎片

- 定期消除存储碎片,将释放碎片化的存储空间,重新调整整个存储空间

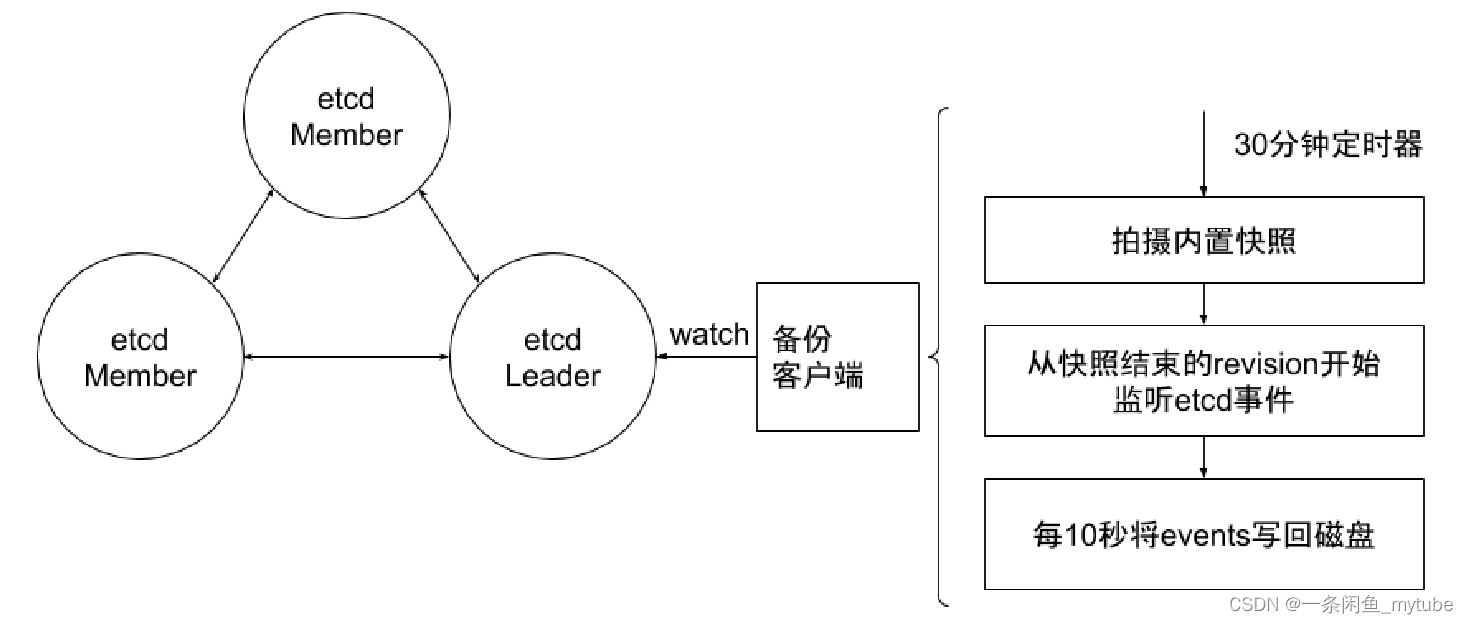

- 备份方案

- etcd备份:备份完整的集群信息,灾难恢复

- etcdctl snapshot save

- 备份Kubernetes event

- 频度

- 时间间隔太长

- 如果有外部资源配置,如负载均衡等,能否接受数据丢失导致的leak

- 时间间隔太短

- 做snapshot的时候,etcd会锁住当前数据

- 并发的写操作需要开辟新的空间进行增量写,导致磁盘空间增长

- 时间间隔太长

- 如何保证备份的时效性,同时防止磁盘爆掉

- Auto defrag

优化运行参数

- 通过调整心跳周期(Heatbeat Interval)和选举超时时间(Election

Timeout),来降低Leader选举的可能性- 心跳周期参数推荐设置为接近etcd多个成员之间平均数据往返周期的最大值,一般是平均RTT的0.55-1.5倍

- 选举超时时间最少设置为etcd成员之间RTT时间的10倍

etcd备份存储

- 两个子目录:wal和snap

- 所有数据的修改在提交前,都要先写入wal中

- snap是用于存放快照数据,为防止wal文件过多,etcd会定期(当wal中数据超过10000条记录时,由参数“–snapshot-count”设置)创建快照。当快照生成后,wal中数据就可以被删除了

- 数据遭到破坏或错误修改需要回滚到之前某个状态

- 一是从快照中恢复数据主体,但是未被拍入快照的数据会丢失

- 而是执行所有WAL中记录的修改操作,从最原始的数据恢复到数据损坏之前的状态,但恢复的时间较长

备份方案实践

- 备份程序每30分钟触发一次快照的拍摄。紧接着它从快照结

束的版本(Revision)开始,监听etcd集群的事件,并每10秒钟将事件保存到文件中,并将快照和事件文件

上传到网络存储设备中。 - 30分钟的快照周期对集群性能影响甚微。当大灾难来临时,也至多丢失10秒的数据。

至于数据修复,首先把数据从网络存储设备中下载下来,然后从快照中恢复大块数据,并在此基础上依次应

用存储的所有事件。这样就可以将集群数据恢复到灾难发生前

refer 云原生训练营

常联系,如果您看完了

- wx: tutengdihuang

- 群如下

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

- Python教程

- 深入理解 MySQL 中的 HAVING 关键字和聚合函数

- Qt之QChar编码(1)

- MyBatis入门基础篇

- 用Python脚本实现FFmpeg批量转换

- K8S集群部署解决工作节点couldn‘t get current server API group list问题

- 防火墙:保护网络安全的第一道防线

- 元素隐式具有 “any“ 类型,因为类型为 “string“ 的表达式不能用于索引类型 “typeof

- 企业必知的加速FTP传输解决方案

- Apache Flink 1.15正式发布

- Docker Compose 部署 jenkins

- 计算机组成原理第6章-(算术运算)【下】

- vue3 setup语法糖

- MySQL中的视图和触发器

- WPF入门到跪下 第九章 MVVM-跨模块交互