ElasticSearch Study Notes 9: Chinese pinyin analysis and search with spring-boot and Elasticsearch 7.16.1

Published: January 12, 2024

1. Download from the official Elasticsearch site: Elasticsearch 7.16.1 | Elastic

2. Installing the pinyin, IK, and traditional/simplified Chinese conversion plugins

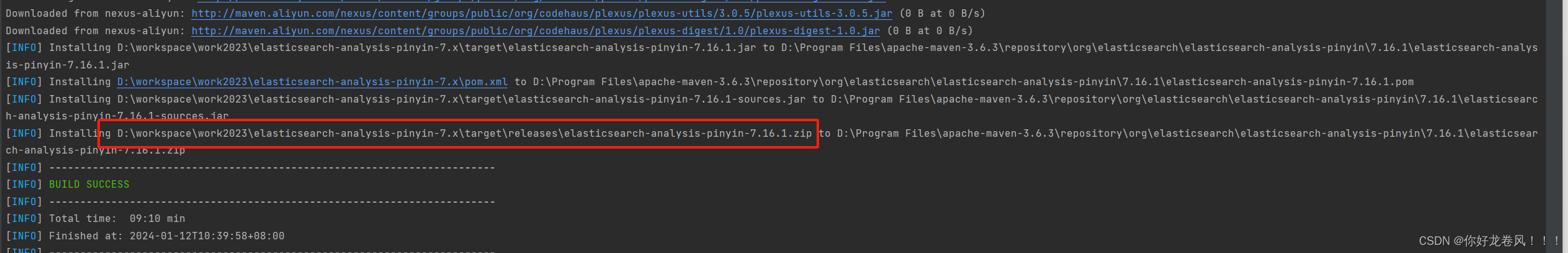

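Installation: download each plugin's source from GitHub, open the project in IDEA, and edit the project's pom.xml so that

<elasticsearch.version>7.16.1</elasticsearch.version>

matches your Elasticsearch version. Press Alt+F12 to open the terminal and run mvn install. Once the build succeeds, a zip package is generated in the corresponding directory, as shown: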

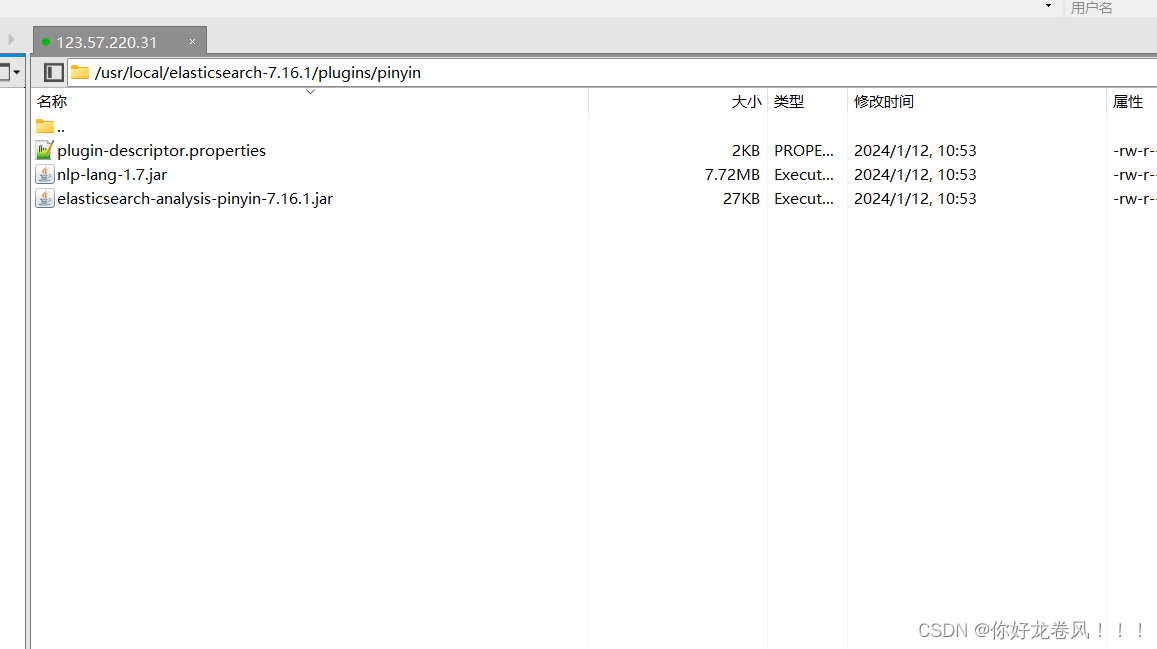

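Unzip each zip package into the plugins directory under the Elasticsearch installation directory.

On Linux, give ownership of the Elasticsearch files back to the elastic user:

chown -R elastic /usr/local/elasticsearch-7.16.1/

Otherwise Elasticsearch reports permission errors.

Then start Elasticsearch (elasticsearch.bat on Windows).

The plugins are now installed.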
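One way to confirm that the pinyin plugin loaded is to run sample text through Elasticsearch's `_analyze` API with the `pinyin` analyzer. The sketch below only builds the request and does not send it. The class name, the `http://localhost:9200` address, and the sample text are assumptions for illustration, and a secured cluster would also need credentials.

```java
import java.net.URI;
import java.net.http.HttpRequest;

public class PinyinAnalyzeCheck {

    // Escapes backslashes and double quotes so a value is safe inside a JSON string.
    // Minimal on purpose: control characters are not handled.
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    // Builds the JSON body for the _analyze API.
    static String analyzeBody(String analyzer, String text) {
        return "{\"analyzer\":\"" + escape(analyzer) + "\",\"text\":\"" + escape(text) + "\"}";
    }

    // Builds (but does not send) a POST request to <baseUrl>/_analyze.
    static HttpRequest analyzeRequest(String baseUrl, String analyzer, String text) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/_analyze"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(analyzeBody(analyzer, text)))
                .build();
    }

    public static void main(String[] args) {
        // Assumed local address and sample text.
        HttpRequest request = analyzeRequest("http://localhost:9200", "pinyin", "拼音搜索");
        System.out.println(request.method() + " " + request.uri());
        System.out.println(analyzeBody("pinyin", "拼音搜索"));
    }
}
```

Sending this request with `java.net.http.HttpClient` should return pinyin tokens when the plugin is installed, and an error response when the analyzer is unknown.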


3. Maven and YAML configuration

<!-- inside <properties> -->
<elasticsearch.version>7.16.1</elasticsearch.version>

<dependency>
    <groupId>org.elasticsearch</groupId>
    <artifactId>elasticsearch</artifactId>
    <version>${elasticsearch.version}</version>
</dependency>
<dependency>
    <groupId>org.elasticsearch.client</groupId>
    <artifactId>elasticsearch-rest-client</artifactId>
    <version>${elasticsearch.version}</version>
</dependency>
<dependency>
    <groupId>org.elasticsearch.client</groupId>
    <artifactId>transport</artifactId>
    <version>${elasticsearch.version}</version>
</dependency>
<dependency>
    <groupId>org.elasticsearch.client</groupId>
    <artifactId>elasticsearch-rest-high-level-client</artifactId>
    <version>${elasticsearch.version}</version>
</dependency>
<!-- Note: Spring Data Elasticsearch 3.1.x was built against Elasticsearch 6.x. The utility class in this article talks to Elasticsearch through RestHighLevelClient directly, so check version compatibility before relying on Spring Data repositories. -->
<dependency>
    <groupId>org.springframework.data</groupId>
    <artifactId>spring-data-elasticsearch</artifactId>
    <version>3.1.3.RELEASE</version>
</dependency>
<dependency>
    <groupId>org.elasticsearch.plugin</groupId>
    <artifactId>x-pack-sql-jdbc</artifactId>
    <version>${elasticsearch.version}</version>
</dependency>

# Elasticsearch 7 settings
# The configuration class in section 4 reads these keys under the jeecg.elasticsearch prefix,
# so nest this block under jeecg: if you use that class unchanged.
elasticsearch:
  schema: http
  host: 123.456
  port: 9200
  userName: es
  password: 3333
  indexes: index
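To make the role of these settings concrete, here is a minimal sketch of how schema, host, and port combine into the address the client connects to, and what a userName/password pair looks like as an HTTP Basic header. The class and method names are hypothetical, and `localhost` stands in for a real host. The utility class in section 4 gets the same effect through Apache HttpClient's `BasicCredentialsProvider` rather than by building the header itself.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

public class EsConnectionSketch {

    // Joins schema, host and port into the base URL the client connects to.
    static String baseUrl(String schema, String host, int port) {
        return schema + "://" + host + ":" + port;
    }

    // Encodes userName:password as an HTTP Basic Authorization header value.
    static String basicAuthHeader(String userName, String password) {
        String pair = userName + ":" + password;
        return "Basic " + Base64.getEncoder().encodeToString(pair.getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        // "localhost" is an assumed host; the credentials are the sample values from the YAML.
        System.out.println(baseUrl("http", "localhost", 9200));
        System.out.println(basicAuthHeader("es", "3333"));
    }
}
```

Basic authentication only encodes the credentials and does not encrypt them, so `https` is the safer schema outside a trusted network.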

4. Elasticsearch utility class

The main change from the earlier version is that, when creating an index, you can now choose the index's default analyzer type; pinyin is one of the options.

CreateIndexRequest request = new CreateIndexRequest(indexName);
request.settings(Settings.builder()
        //.put("analysis.analyzer.default.type", "ik_max_word")
        //.put("analysis.analyzer.default.type", "pinyin") // supports both pinyin and Chinese text
        .put("analysis.analyzer.default.type", indexType)
);
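For readers who create indices through the REST API instead, the flat key `analysis.analyzer.default.type` corresponds to a nested `settings` object in the create-index request body. The following is a minimal sketch of that mapping; the class and method names are hypothetical, and the analyzer name is assumed to be a plain identifier that needs no JSON escaping.

```java
public class DefaultAnalyzerSettings {

    // Returns a create-index body that sets the index's default analyzer type.
    // Falls back to ik_max_word when no type is given, mirroring the utility method.
    static String createIndexBody(String analyzerType) {
        String type = (analyzerType == null || analyzerType.trim().isEmpty())
                ? "ik_max_word"
                : analyzerType;
        return "{\"settings\":{\"analysis\":{\"analyzer\":{\"default\":{\"type\":\""
                + type + "\"}}}}}";
    }

    public static void main(String[] args) {
        System.out.println(createIndexBody("pinyin"));
        System.out.println(createIndexBody(null));
    }
}
```

A body like this would be sent with PUT to the index name. Because the default analyzer is fixed at index creation, switching between ik_max_word and pinyin later generally means creating a new index and reindexing.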

import com.alibaba.fastjson.JSON;
import io.micrometer.core.instrument.util.StringUtils;
import org.apache.http.HttpHost;
import org.apache.http.auth.AuthScope;
import org.apache.http.auth.UsernamePasswordCredentials;
import org.apache.http.client.CredentialsProvider;
import org.apache.http.impl.client.BasicCredentialsProvider;
import org.apache.lucene.search.TotalHits;
import org.elasticsearch.action.DocWriteResponse;
import org.elasticsearch.action.admin.indices.create.CreateIndexRequest;
import org.elasticsearch.action.admin.indices.create.CreateIndexResponse;
import org.elasticsearch.action.admin.indices.delete.DeleteIndexRequest;
import org.elasticsearch.action.admin.indices.get.GetIndexRequest;
import org.elasticsearch.action.delete.DeleteRequest;
import org.elasticsearch.action.delete.DeleteResponse;
import org.elasticsearch.action.index.IndexRequest;
import org.elasticsearch.action.index.IndexResponse;
import org.elasticsearch.action.search.SearchRequest;
import org.elasticsearch.action.search.SearchResponse;
import org.elasticsearch.action.support.master.AcknowledgedResponse;
import org.elasticsearch.action.update.UpdateRequest;
import org.elasticsearch.action.update.UpdateResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestClientBuilder;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.common.settings.Settings;
import org.elasticsearch.common.text.Text;
import org.elasticsearch.common.unit.TimeValue;
import org.elasticsearch.common.xcontent.XContentType;
import org.elasticsearch.index.query.QueryBuilder;
import org.elasticsearch.index.query.QueryBuilders;
import org.elasticsearch.index.query.QueryStringQueryBuilder;
import org.elasticsearch.search.SearchHit;
import org.elasticsearch.search.SearchHits;
import org.elasticsearch.search.builder.SearchSourceBuilder;
import org.elasticsearch.search.fetch.subphase.highlight.HighlightBuilder;
import org.elasticsearch.search.fetch.subphase.highlight.HighlightField;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/**
 * @author ylwang
 * @create 2021/7/27 9:16
 */
@Configuration
public class ElasticSearchClientConfig {

    /** Protocol (http or https) */
    @Value("${jeecg.elasticsearch.schema}")
    private String schema;

    /** User name */
    @Value("${jeecg.elasticsearch.userName}")
    private String userName;

    /** Password */
    @Value("${jeecg.elasticsearch.password}")
    private String password;

    /** Host address */
    @Value("${jeecg.elasticsearch.host}")
    private String host;

    /** Port */
    @Value("${jeecg.elasticsearch.port}")
    private String port;

    public final String AIOPENQAQINDEXNAME = "aiopenqaq"; // index name for the AI question library
    public final String AIYunLiao = "aiyunliao"; // index name for the AI corpus
    public final String KNOWLEDGE = "knowledge"; // index name for the knowledge base

    public static RestHighLevelClient restHighLevelClient;

    @Bean
    public RestHighLevelClient restHighLevelClient() {
        // Authenticate with user name and password
        final CredentialsProvider credentialsProvider = new BasicCredentialsProvider();
        credentialsProvider.setCredentials(AuthScope.ANY, new UsernamePasswordCredentials(userName, password));
        RestClientBuilder restClientBuilder = RestClient
                .builder(new HttpHost(host, Integer.parseInt(port), schema))
                .setHttpClientConfigCallback(httpClientBuilder -> {
                    httpClientBuilder.setDefaultCredentialsProvider(credentialsProvider);
                    return httpClientBuilder;
                })
                .setRequestConfigCallback(requestConfigBuilder -> {
                    return requestConfigBuilder;
                });
        // Build a single client; it carries the credentials configured above
        restHighLevelClient = new RestHighLevelClient(restClientBuilder);
        return restHighLevelClient;
    }

    /**
     * Checks whether an index exists.
     * @return whether the index exists:
     * <ul>
     * <li>true: it exists</li>
     * <li>false: it does not exist, or the request failed</li>
     * </ul>
     */
    public boolean existIndex(String index){
        GetIndexRequest request = new GetIndexRequest();
        request.indices(index);
        boolean exists;
        try {
            exists = restHighLevelClient().indices().exists(request, RequestOptions.DEFAULT);
        } catch (IOException e) {
            e.printStackTrace();
            return false;
        }
        return exists;
    }

    /**
     * Runs a paged query.
     * @param indexName index name
     * @param searchSourceBuilder the query to run
     * @param from offset of the first hit to return, like a in "limit a,b"; pass 0 to start from the first record
     * @param size maximum number of hits to return, like b in "limit a,b"
     * @return the {@link SearchResponse}, or null if the request failed; check response.status().getStatus() == 200 for success.
     * To get the total number of hits:
     * TotalHits totalHits = searchResponse.getHits().getTotalHits();
     */
    public SearchResponse search(String indexName, SearchSourceBuilder searchSourceBuilder, Integer from, Integer size){
        SearchRequest request = new SearchRequest(indexName);
        searchSourceBuilder.from(from);
        searchSourceBuilder.size(size);
        request.source(searchSourceBuilder);
        SearchResponse response = null;
        try {
            response = restHighLevelClient().search(request, RequestOptions.DEFAULT);
        } catch (IOException e) {
            e.printStackTrace();
        }
        return response;
    }

    /**
     * Looks up a document by its id within an index.
     * @param indexName index name
     * @param id document id
     * @return the document source as a JSON string, or null if nothing matched or the request failed
     */
    public String searchById(String indexName,String id){
        SearchRequest request = new SearchRequest(indexName);
        request.source(new SearchSourceBuilder().query(QueryBuilders.termQuery("_id",id)));
        SearchResponse response = null;
        try {
            response = restHighLevelClient().search(request, RequestOptions.DEFAULT);
            SearchHits hits = response.getHits();
            SearchHit[] hits1 = hits.getHits();
            // An id matches at most one document, so return the first hit if there is one
            if (hits1.length > 0) {
                return hits1[0].getSourceAsString();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
        return null;
    }

    /**
     * Creates an index.
     *
     * @param indexName name of the index to create, for example: testindex
     * @param indexType default analyzer type for the index: ik_max_word or pinyin
     * @return the create-index response. Use {@link CreateIndexResponse#isAcknowledged()} to check the result; true means the index was created
     */
    public CreateIndexResponse createIndex(String indexName,String indexType) {
        CreateIndexResponse response=null;
        // If the index already exists, report "not acknowledged" instead of creating it again
        if(existIndex(indexName)){
            response = new CreateIndexResponse(false, false, indexName);
            return response;
        }
        // Fall back to ik_max_word when no analyzer type is given
        if(StringUtils.isBlank(indexType)){
            indexType="ik_max_word";
        }
        CreateIndexRequest request = new CreateIndexRequest(indexName);
        request.settings(Settings.builder()
                //.put("analysis.analyzer.default.type", "ik_max_word")
                //.put("analysis.analyzer.default.type", "pinyin") // supports both pinyin and Chinese text
                .put("analysis.analyzer.default.type", indexType)
        );
        try {
            response = restHighLevelClient().indices().create(request, RequestOptions.DEFAULT);
        } catch (IOException e) {
            e.printStackTrace();
        }
        return response;
    }

    /**
     * Adds one document to Elasticsearch.
     * @param params the document to add, as a JSON string of key-value pairs. Supported value types include String, int, long, float, double, and boolean
     * @param indexName index name, similar to a database table: the table the record is added to
     * @param id id of the document to add; if null, Elasticsearch generates a unique id
     * @return the index response, or null if the request failed. If {@link IndexResponse#getId()} is non-null and non-empty, the document was added
     */
    public IndexResponse put(String params, String indexName, String id){
        // Build the request
        IndexRequest request = new IndexRequest(indexName);
        if(id != null){
            request.id(id);
        }
        request.timeout(TimeValue.timeValueSeconds(5));
        IndexResponse response = null;
        try {
            response = restHighLevelClient().index(request.source(params, XContentType.JSON).setRefreshPolicy("wait_for"), RequestOptions.DEFAULT);
        } catch (IOException e) {
            e.printStackTrace();
        }
        return response;
    }

    /**
     * Updates one document.
     * @param params the fields to update, as a JSON string of key-value pairs. Supported value types include String, int, long, float, double, and boolean
     * @param indexName index name, similar to a database table: the table that holds the record
     * @param id id of the document to update
     * @return the update response, or null if the request failed
     */
    public UpdateResponse update(String params, String indexName, String id){
        // Build the request
        UpdateRequest request = new UpdateRequest(indexName,id);
        request = request.doc(params, XContentType.JSON);
        request.setRefreshPolicy("wait_for");
        request.timeout(TimeValue.timeValueSeconds(5));
        UpdateResponse response = null;
        try {
            response = restHighLevelClient().update(request, RequestOptions.DEFAULT);
        } catch (IOException e) {
            e.printStackTrace();
        }
        return response;
    }

    /**
     * Deletes an index.
     * @param indexName index name
     * @return the response, or null if the request failed with an IOException
     */
    public AcknowledgedResponse deleteIndex(String indexName) {
        DeleteIndexRequest request = new DeleteIndexRequest(indexName);
        AcknowledgedResponse response = null;
        try {
            response = restHighLevelClient().indices().delete(request, RequestOptions.DEFAULT);
        } catch (IOException e) {
            e.printStackTrace();
        }
        // response stays null when the request failed, so guard before reading it
        if (response != null) {
            System.out.println(response.isAcknowledged());
        }
        return response;
    }

    /**
     * Deletes one document by its Elasticsearch id.
     * @param indexName index name
     * @param id id of the document to delete
     * @return true if the document was deleted, false otherwise
     */
    public boolean deleteById(String indexName, String id) {
        DeleteRequest request = new DeleteRequest(indexName, id);
        request.setRefreshPolicy("wait_for");
        DeleteResponse delete = null;
        try {
            delete = restHighLevelClient().delete(request, RequestOptions.DEFAULT);
        } catch (IOException e) {
            e.printStackTrace();
            // Deletion failed
            return false;
        }
        if(delete == null){
            // This case should not occur
            return false;
        }
        return DocWriteResponse.Result.DELETED.equals(delete.getResult());
    }

}
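The `search` method above takes `from` and `size` in the same sense as `limit a,b`. Callers that think in page numbers need to convert first. A minimal sketch of that conversion follows; the class and method names are hypothetical, and pages are assumed to be 1-based.

```java
public class PageWindow {

    // Converts a 1-based page number and a page size into the zero-based "from" offset.
    static int fromOffset(int page, int size) {
        if (page < 1 || size < 1) {
            throw new IllegalArgumentException("page and size must be >= 1");
        }
        return (page - 1) * size;
    }

    public static void main(String[] args) {
        System.out.println(fromOffset(1, 10)); // 0: the first page starts at the first record
        System.out.println(fromOffset(3, 10)); // 20: the third page skips two pages of 10
    }
}
```

Note that Elasticsearch by default rejects requests where `from + size` exceeds 10,000 (the `index.max_result_window` setting), so deep paging needs a different approach such as `search_after`.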

5. Creating the index and inserting data

@Resource

private ElasticSearchClientConfig es;

@Resource

private AiOpenqaQMapper aiOpenqaQMapper;

@Override

public int selectNums(String applicationId) {

return aiOpenqaQMapper.selectNums(applicationId);

}

@Override

public boolean saveAndEs(AiOpenqaQ aiOpenqaQ) {

// es.deleteIndex(es.AIOPENQAQINDEXNAME);

boolean save = this.save(aiOpenqaQ);

if(save){

if(!es.existIndex(es.AIOPENQAQINDEXNAME)){

es.createIndex(es.AIOPENQAQINDEXNAME,"pinyin");

}

es.put(JsonMapper.toJsonString(aiOpenqaQ), es.AIOPENQAQINDEXNAME,aiOpenqaQ.getId());

}

return true;

}

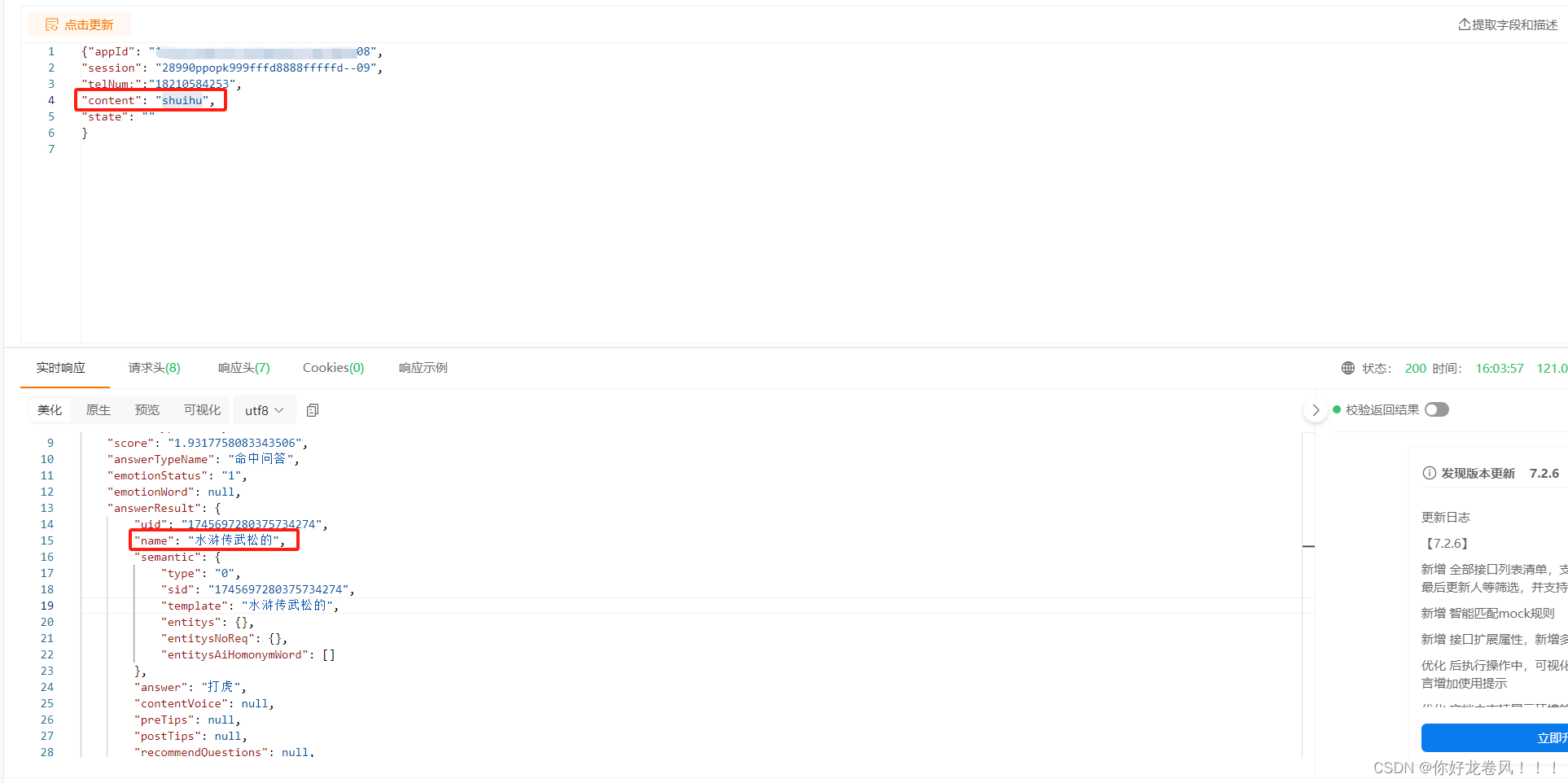

}六,验证

Homophone query

Direct pinyin query
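Both checks come down to running a match query against the pinyin-analyzed index, once with Chinese text that sounds the same as the stored text and once with plain pinyin. The sketch below builds such a request body for the `_search` endpoint. The class name, the field name `question`, and the query text `zhongguo` are assumptions for illustration, since the article does not show the document fields.

```java
public class PinyinSearchBody {

    // Escapes backslashes and double quotes so a value is safe inside a JSON string.
    // Minimal on purpose: control characters are not handled.
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    // Builds a paged match query on one field.
    static String matchQuery(String field, String text, int from, int size) {
        return "{\"from\":" + from + ",\"size\":" + size
                + ",\"query\":{\"match\":{\"" + escape(field) + "\":\"" + escape(text) + "\"}}}";
    }

    public static void main(String[] args) {
        // Hypothetical field name and pinyin input.
        System.out.println(matchQuery("question", "zhongguo", 0, 10));
    }
}
```

With the utility class from section 4, the equivalent would be to pass a `SearchSourceBuilder` holding `QueryBuilders.matchQuery(field, text)` to its `search` method along with the index name.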

Source: https://blog.csdn.net/zhaofuqiangmycomm/article/details/135554546
