[Audio/Video ffmpeg] Muxing and Stream Pushing

Published: December 30, 2023

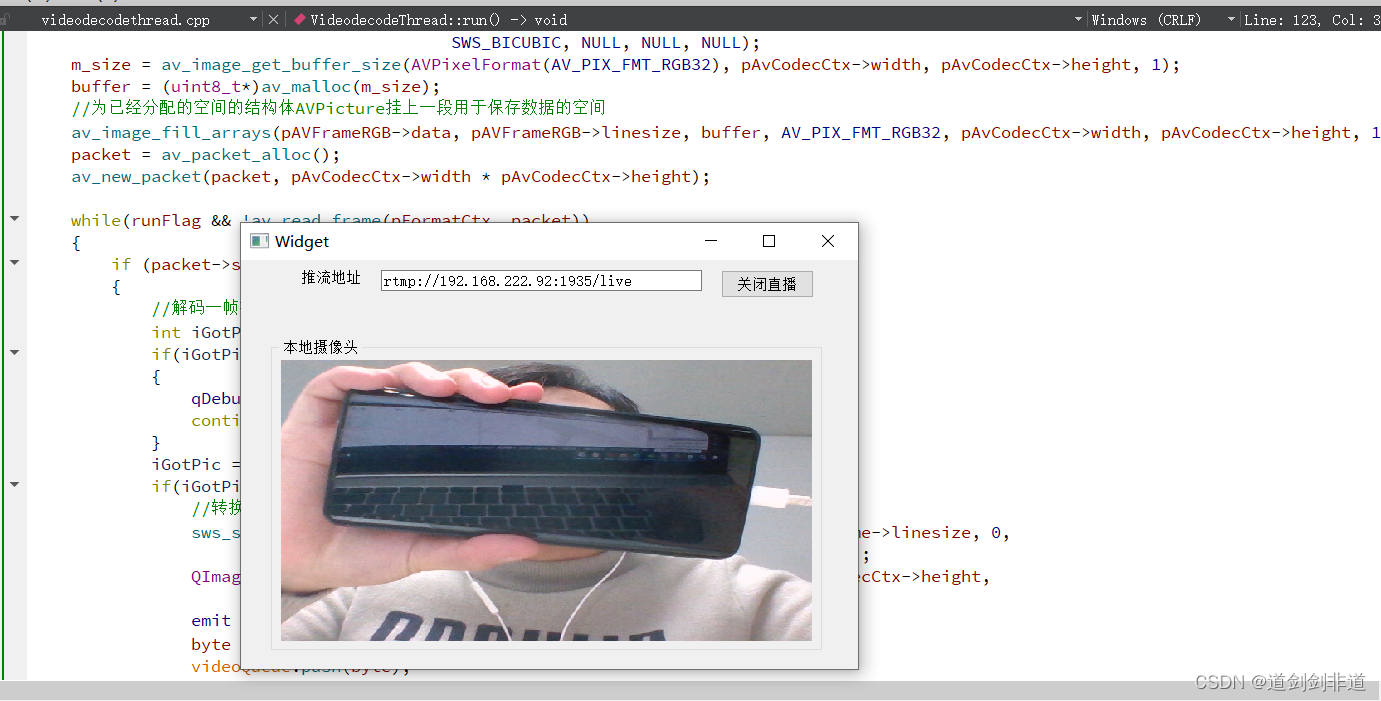

Camera capture demo

videodecodethread.cpp

#include "videodecodethread.h"

VideodecodeThread::VideodecodeThread(QObject *parent)

:QThread(parent)

{

avdevice_register_all();

avformat_network_init();

}

VideodecodeThread::~VideodecodeThread()

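{

// Stop the capture loop and wait for run() to return before freeing anything it uses

runFlag = false;

wait();

// Each of these calls accepts a null pointer, so no guards are needed

av_dict_free(&options);

av_packet_free(&packet);

av_frame_free(&pAvFrame);

av_frame_free(&pAVFrameRGB);

av_free(buffer);

sws_freeContext(pSwsCtx);

avcodec_free_context(&pAvCodecCtx);

avformat_close_input(&pFormatCtx);

avformat_network_deinit();

}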

void VideodecodeThread::run()

{

fmt = av_find_input_format("dshow");

av_dict_set(&options, "video_size", "640x480", 0); // documented form is WIDTHxHEIGHT

av_dict_set(&options, "framerate", "30", 0);

ret = avformat_open_input(&pFormatCtx, "video=ov9734_azurewave_camera", fmt, &options);

if (ret < 0)

{

qDebug() << "Couldn't open input stream." << ret;

return;

}

av_dict_free(&options); // entries not consumed by avformat_open_input() are left in the dictionary

ret = avformat_find_stream_info(pFormatCtx, nullptr);

if(ret < 0)

{

qDebug()<< "Couldn't find stream information.";

return;

}

videoIndex = av_find_best_stream(pFormatCtx, AVMEDIA_TYPE_VIDEO, -1, -1, &pAvCodec, 0);

if(videoIndex < 0)

{

qDebug()<< "Couldn't av_find_best_stream.";

return;

}

pAvCodec = avcodec_find_decoder(pFormatCtx->streams[videoIndex]->codecpar->codec_id);

if(!pAvCodec)

{

qDebug()<< "Couldn't avcodec_find_decoder.";

return;

}

qDebug()<<"pAVCodec->name:" << QString::fromStdString(pAvCodec->name);

if(pFormatCtx->streams[videoIndex]->avg_frame_rate.den != 0)

{

// av_q2d() converts the rational to double; num / den on its own is an integer division

double fps_ = av_q2d(pFormatCtx->streams[videoIndex]->avg_frame_rate);

qDebug() <<"fps:" << fps_;

}

int64_t video_length_sec_ = pFormatCtx->duration/AV_TIME_BASE;

qDebug() <<"video_length_sec_:" << video_length_sec_;

pAvCodecCtx = avcodec_alloc_context3(pAvCodec);

if(!pAvCodecCtx)

{

qDebug()<< "Couldn't avcodec_alloc_context3.";

return;

}

ret = avcodec_parameters_to_context(pAvCodecCtx, pFormatCtx->streams[videoIndex]->codecpar);

if(ret < 0)

{

qDebug()<< "Couldn't avcodec_parameters_to_context.";

return;

}

ret = avcodec_open2(pAvCodecCtx, pAvCodec, nullptr);

if(ret!=0)

{

qDebug("avcodec_open2 %d", ret);

return;

}

pAvFrame = av_frame_alloc();

pAVFrameRGB = av_frame_alloc();

pSwsCtx = sws_getContext(pAvCodecCtx->width, pAvCodecCtx->height, pAvCodecCtx->pix_fmt,

pAvCodecCtx->width, pAvCodecCtx->height, AV_PIX_FMT_RGB32,

SWS_BICUBIC, NULL, NULL, NULL);

m_size = av_image_get_buffer_size(AVPixelFormat(AV_PIX_FMT_RGB32), pAvCodecCtx->width, pAvCodecCtx->height, 1);

buffer = (uint8_t*)av_malloc(m_size);

// Attach the allocated buffer to pAVFrameRGB so it can hold the converted RGB32 pixels

av_image_fill_arrays(pAVFrameRGB->data, pAVFrameRGB->linesize, buffer, AV_PIX_FMT_RGB32, pAvCodecCtx->width, pAvCodecCtx->height, 1);

// av_read_frame() fills in the packet payload itself, so no av_new_packet() call is needed

packet = av_packet_alloc();

while(runFlag && !av_read_frame(pFormatCtx, packet))

{

if (packet->stream_index == videoIndex)

{

// Decode one video frame

int iGotPic = avcodec_send_packet(pAvCodecCtx, packet);

if(iGotPic != 0)

{

qDebug("VideoIndex avcodec_send_packet error :%d", iGotPic);

av_packet_unref(packet); // release the packet before skipping to the next one

continue;

}

iGotPic = avcodec_receive_frame(pAvCodecCtx, pAvFrame);

if(iGotPic == 0){

// Convert the pixel format to RGB32

sws_scale(pSwsCtx, (uint8_t const * const *)pAvFrame->data, pAvFrame->linesize, 0,

pAvFrame->height, pAVFrameRGB->data, pAVFrameRGB->linesize);

QImage desImage = QImage((uchar*)buffer, pAvCodecCtx->width,pAvCodecCtx->height,

QImage::Format_RGB32); //RGB32

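// Emit a deep copy: desImage only wraps buffer, which the next frame overwrites

emit sigSendQImage(desImage.copy()); // signal that a new image is available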

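// Copy the decoded pixels; pAvFrame->data is an array of plane pointers, not a byte string

int rawSize = av_image_get_buffer_size((AVPixelFormat)pAvFrame->format, pAvFrame->width, pAvFrame->height, 1);

byte.resize(rawSize);

av_image_copy_to_buffer((uint8_t*)byte.data(), rawSize, (uint8_t const * const *)pAvFrame->data, pAvFrame->linesize, (AVPixelFormat)pAvFrame->format, pAvFrame->width, pAvFrame->height, 1);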

videoQueue.push(byte);

videoCount++;

msleep(25);

}

}

av_packet_unref(packet);

}

}

void VideodecodeThread::setRunFlag(bool flag)

{

runFlag = flag;

}
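The capture loop in run() is stopped by setRunFlag() being called from another thread. Below is a minimal sketch of that stop-flag pattern in plain standard C++, assuming the flag is made atomic so the write from the controlling thread is guaranteed to be seen by the loop. The `Worker` class and its member names are hypothetical stand-ins, not part of the demo above; in the Qt version the equivalent would be calling setRunFlag(false) followed by QThread::wait().

```cpp
#include <atomic>
#include <chrono>
#include <thread>

// Hypothetical stand-in for the capture thread; not the author's class.
class Worker {
public:
    ~Worker() { stop(); }

    // Start the loop on its own thread. Call at most once per stop().
    void start() {
        running_.store(true);
        thread_ = std::thread([this] { loop(); });
    }

    // Safe to call from any other thread, and more than once.
    void stop() {
        running_.store(false);                  // ask the loop to exit
        if (thread_.joinable()) thread_.join(); // wait until it has exited
    }

    int iterations() const { return iterations_.load(); }

private:
    void loop() {
        while (running_.load()) {
            // A real loop would read and decode one packet here.
            iterations_.fetch_add(1);
            std::this_thread::sleep_for(std::chrono::milliseconds(1));
        }
    }

    std::atomic<bool> running_{false};
    std::atomic<int> iterations_{0};
    std::thread thread_;
};
```

Joining inside stop() means that once it returns, the loop no longer touches any shared resources, so they can be released safely.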

videodecodethread.h

#ifndef VIDEODECODETHREAD_H

#define VIDEODECODETHREAD_H

#include <QObject>

#include <QThread>

#include <QDebug>

#include <mutex>

#include <QImage>

#include "SharedVariables.h"

extern "C" {

#include "libavdevice/avdevice.h" // header required for opening input devices

#include "libavcodec/avcodec.h"

#include "libavformat/avformat.h"

#include "libavutil/avutil.h"

#include "libswscale/swscale.h"

#include "libavutil/imgutils.h"

#include "libavutil/pixfmt.h"

#include "libavutil/error.h"

#include "libswresample/swresample.h"

#include "libavfilter/avfilter.h"

}

class VideodecodeThread :public QThread

{

Q_OBJECT

public:

VideodecodeThread(QObject *parent = nullptr);

~VideodecodeThread();

void run() override;

void setRunFlag(bool flag);

signals:

void sigSendQImage(QImage);

private:

const AVInputFormat *fmt = nullptr;

AVFormatContext *pFormatCtx = nullptr;

AVDictionary *options = nullptr;

AVPacket *packet = nullptr;

AVFrame* pAvFrame = nullptr;

const AVCodec *pAvCodec = nullptr;

AVCodecContext *pAvCodecCtx = nullptr;

AVFrame* pAVFrameRGB = nullptr;

SwsContext* pSwsCtx = nullptr;

QByteArray byte;

int m_size = 0;

uint8_t* buffer = nullptr;

int ret = -1;

int videoIndex = -1;

bool runFlag = true;

int videoCount = 0;

};

#endif // VIDEODECODETHREAD_H

The camera and the microphone both open correctly.

Muxing still has problems, and I am continuing to work on it.
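The post does not say what the muxing problem is, so the following is not a diagnosis. One thing worth checking when muxing is timestamps: each stream's packets carry timestamps in that stream's own time base, and they usually have to be converted to the output stream's time base before being written. FFmpeg provides av_rescale_q() and av_packet_rescale_ts() for this. The sketch below only illustrates the arithmetic in plain C++; `Rational` and `rescaleTimestamp` are hypothetical names, and the function assumes non-negative timestamps and ignores overflow.

```cpp
#include <cstdint>

// Hypothetical stand-in for FFmpeg's AVRational (numerator / denominator).
struct Rational {
    int num;
    int den;
};

// Convert a timestamp from the `from` time base to the `to` time base,
// rounding to the nearest integer. Sketch only: assumes ts >= 0 and
// does not guard against 64-bit overflow.
std::int64_t rescaleTimestamp(std::int64_t ts, Rational from, Rational to) {
    const std::int64_t numerator = static_cast<std::int64_t>(from.num) * to.den;
    const std::int64_t denominator = static_cast<std::int64_t>(from.den) * to.num;
    return (ts * numerator + denominator / 2) / denominator;
}
```

For example, frame number 30 of a 30 fps capture (time base 1/30) corresponds to timestamp 1000 in a millisecond time base (1/1000).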

Source: https://blog.csdn.net/weixin_45397344/article/details/135263461
