Backing Up a CSDN Blog with Python

Published: January 21, 2024

Code source

Adapted from the backup script written by another blogger on CSDN.

What's new

Images are now saved to the local disk, and the image paths in the Markdown are rewritten to point at those local copies.
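The image step comes down to two passes over the Markdown: collect every `![alt](url)` target so it can be downloaded, then point each target at a local folder. Below is a minimal stdlib-only sketch. It assumes images use the plain `![alt](url)` form (no optional title) and that the last path segment, minus any query string, works as a local file name. `image_urls`, `local_name` and `rewrite_image_paths` are hypothetical names, not taken from the original script.

```python
import re

# Matches ![alt](url) and captures the "![alt]" part and the URL separately.
# Assumption: no optional "title" after the URL and no ')' inside the URL.
IMAGE_PATTERN = re.compile(r'(!\[[^\]]*\])\(([^)\s]+)\)')


def image_urls(markdown: str) -> list[str]:
    """Return every image URL referenced in the Markdown, in order."""
    return [m.group(2) for m in IMAGE_PATTERN.finditer(markdown)]


def local_name(url: str) -> str:
    """Last path segment of the URL, with any query string dropped."""
    return url.split('?', 1)[0].rsplit('/', 1)[-1]


def rewrite_image_paths(markdown: str, folder: str) -> str:
    """Point every image at folder/<local_name(url)>, keeping the alt text."""
    return IMAGE_PATTERN.sub(
        lambda m: f"{m.group(1)}({folder}/{local_name(m.group(2))})", markdown
    )


md = "Intro\n![a](https://example.com/x/abc.png?w=300) and ![b](https://example.com/y/def.jpg)\n"
print(image_urls(md))
print(rewrite_image_paths(md, "img"))
```

Using `re.sub` over the whole text rewrites every image on a line, not only the first one. Two images with the same file name in different remote folders would overwrite each other locally; prefixing the name with a hash of the URL is one way around that.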

Known issues

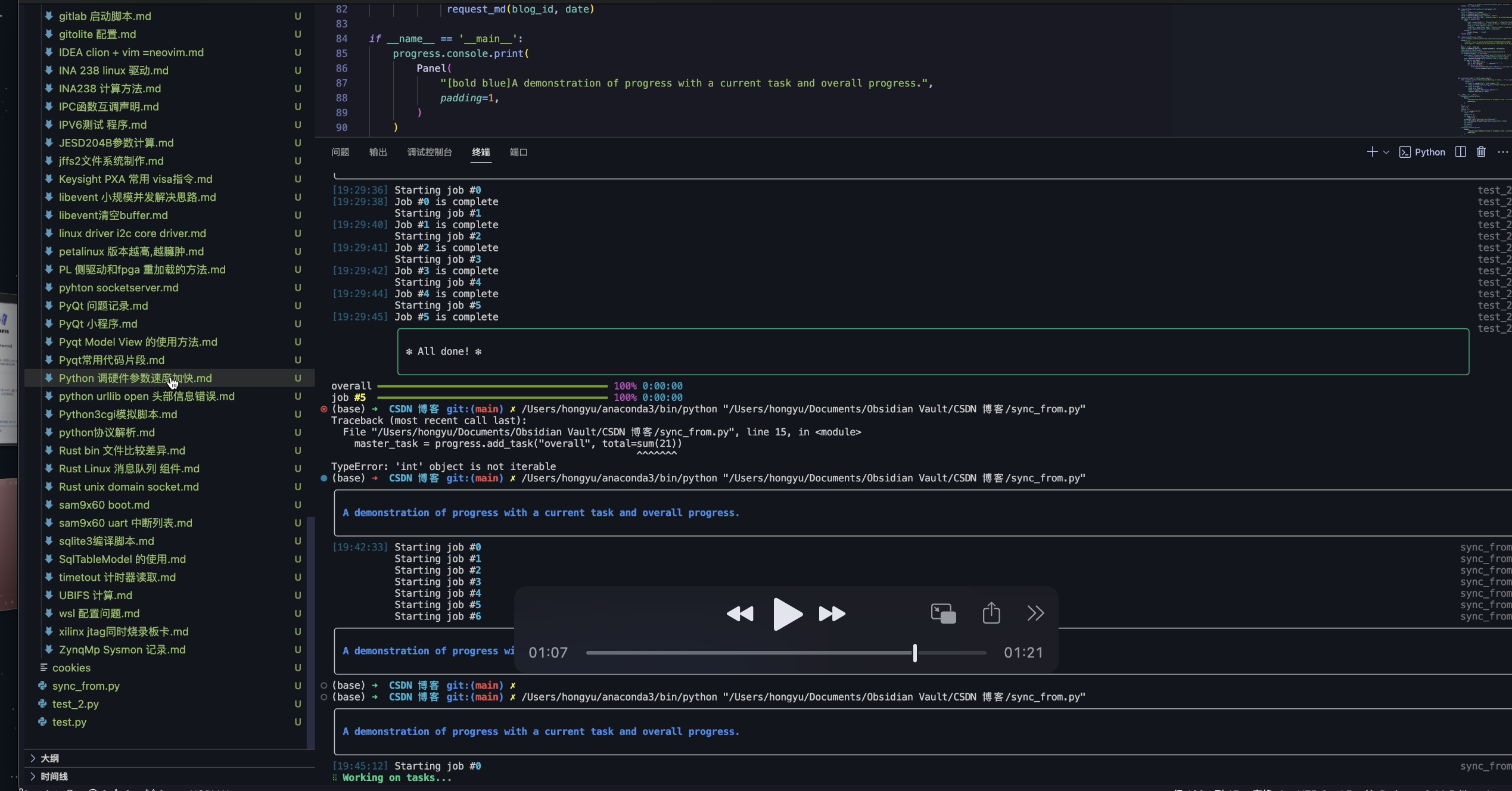

If a post is long enough that it has to be transferred in several chunks, saving fails when the content contains a ’' character.
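The post does not say why saving fails. One plausible cause, which is an assumption and not confirmed by the author: the script calls `open()` without an `encoding`, so Python falls back to the locale's default encoding (for example GBK on Chinese-locale Windows), which cannot encode every character that can appear in a post. Article titles can also contain characters such as `/` or `?` that are not allowed in file names. A minimal sketch of a defensive save, using the hypothetical helpers `safe_filename` and `save_markdown`:

```python
import re
import tempfile
from pathlib import Path

# Characters Windows forbids in file names ('/' is also the POSIX separator),
# plus control characters such as newlines and tabs.
_FORBIDDEN = re.compile(r'[\\/:*?"<>|\x00-\x1f]')


def safe_filename(title: str, max_len: int = 100) -> str:
    """Turn an article title into a file-name-safe stem (hypothetical helper)."""
    cleaned = _FORBIDDEN.sub("_", title).strip().rstrip(".")
    return cleaned[:max_len] or "untitled"


def save_markdown(folder: Path, title: str, text: str) -> Path:
    """Write text as UTF-8 regardless of the platform's default encoding."""
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / f"{safe_filename(title)}.md"
    path.write_text(text, encoding="utf-8")
    return path


# Usage: a title with forbidden characters and a body with non-GBK characters.
with tempfile.TemporaryDirectory() as tmp:
    saved = save_markdown(Path(tmp), "C/C++: what is a pointer?", "it\u2019s\xa0fine \U0001F600")
    print(saved.name)
```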

Note

You need the cookie from a logged-in session; without it, the server will not return the full article content.
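The cookie only has to travel as a request header. Here is a minimal stdlib sketch that builds, but does not send, a request to the `getArticle` endpoint used by the script. Reading the cookie from an environment variable named `CSDN_COOKIE` is an assumption of this example (it keeps the secret out of the source file), and `build_article_request` is a hypothetical name.

```python
import os
from urllib.parse import urlencode
from urllib.request import Request

# Endpoint taken from the script in this post.
API = "https://blog-console-api.csdn.net/v1/editor/getArticle"


def build_article_request(blog_id: str, cookie: str, user_agent: str) -> Request:
    """Prepare a GET request that carries the logged-in session cookie."""
    url = f"{API}?{urlencode({'id': blog_id})}"
    return Request(url, headers={"Cookie": cookie, "User-Agent": user_agent})


# Hypothetical variable name; copy its value from the browser's request headers.
cookie = os.environ.get("CSDN_COOKIE", "")
req = build_article_request("135734315", cookie, "Mozilla/5.0")
print(req.full_url)
```

Passing `req` to `urllib.request.urlopen` and decoding the body with `json.load` would perform the actual call. Per the note, without a valid cookie the response should not be expected to contain the article body.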

It works, if roughly: about 95% of the posts get backed up.

```python
import os
import re
import threading

import requests
from bs4 import BeautifulSoup
from rich.console import Console
from rich.panel import Panel
from rich.progress import Progress

progress = Progress(auto_refresh=False)
console = Console()

# Copy these from a logged-in browser session (DevTools > Network > request headers).
HEADERS = {
    "cookie": "",
    "User-Agent": "",
}

BLOG_DIR = "blogs"
IMG_DIR = os.path.join(BLOG_DIR, "img")

# ![alt](url): group 1 is "![alt]", group 2 is the URL.
IMAGE_PATTERN = re.compile(r'(!\[[^\]]*\])\(([^)\s]+)\)')


def image_file_name(url: str) -> str:
    # Last path segment without the query string; used for saving and for linking.
    return url.split('?', 1)[0].rsplit('/', 1)[-1]


def replace_image_path(markdown: str, new_path: str) -> str:
    # Rewrite every image on every line, not only the first match per line.
    return IMAGE_PATTERN.sub(
        lambda m: f"{m.group(1)}({new_path}/{image_file_name(m.group(2))})", markdown)


def request_blog_list(remote_url: str, page: int) -> list:
    blogs = []
    url = f'{remote_url}/{page}'
    reply = requests.get(url, headers=HEADERS, timeout=30)
    parse = BeautifulSoup(reply.content, "lxml")
    spans = parse.find_all('div', attrs={'class': 'article-item-box csdn-tracking-statistics'})
    for span in spans[:40]:
        href = None  # defined up front so the error message never raises NameError
        try:
            href = span.find('a', attrs={'target': '_blank'})['href']
            date = span.find('span', attrs={'class': 'date'}).get_text()
            blog_id = href.split("/")[-1]
            read_num = span.find('span', attrs={'class': 'read-num'}).get_text()
            blogs.append([blog_id, date, read_num])
        except (AttributeError, KeyError, TypeError):
            print(f'Wrong, {href}')
    return blogs


def request_md(blog_id, date):  # date is passed in but not used yet
    url = "https://blog-console-api.csdn.net/v1/editor/getArticle"
    # Needs a valid logged-in cookie in HEADERS; the ID goes in the query string.
    reply = requests.get(url, headers=HEADERS, params={"id": blog_id}, timeout=30)
    article = reply.json()['data']
    markdowncontent = article['markdowncontent']
    if markdowncontent is None:
        return
    os.makedirs(IMG_DIR, exist_ok=True)
    # Replace characters that are not allowed in file names.
    title = re.sub(r'[\\/:*?"<>|]', '_', article['title'])
    # Explicit UTF-8: the platform default (e.g. GBK on Chinese Windows)
    # cannot encode every character.
    with open(os.path.join(BLOG_DIR, f"{title}.md"), "w", encoding="utf-8") as f:
        f.write(replace_image_path(markdowncontent, "img"))
    # Download every image the Markdown references (not just the first <img>),
    # under the same file name that replace_image_path() linked to.
    for match in IMAGE_PATTERN.finditer(markdowncontent):
        src = match.group(2)
        if src.startswith(("http://", "https://")):
            with open(os.path.join(IMG_DIR, image_file_name(src)), "wb") as f:
                f.write(requests.get(src, timeout=30).content)


def main(start: int = 1, total_pages: int = 1):
    with console.status("[bold green]Working on tasks..."):
        blogs = []
        # Both ends are inclusive: pages start..total_pages.
        for page in range(start, total_pages + 1):
            blogs.extend(request_blog_list("https://blog.csdn.net/weixin_45647912/article/list", page))
        for blog in blogs:
            blog_id = blog[0]
            date = blog[1].split()[0].split("-")
            request_md(blog_id, date)


if __name__ == '__main__':
    progress.console.print(Panel(
        "[bold blue]A demonstration of progress with a current task and overall progress.",
        padding=1,
    ))
    last_page = 21
    for job_no, start in enumerate(range(1, last_page + 1, 3)):
        # Batches of three pages; stop is inclusive, so batches must not share a page.
        stop = min(start + 2, last_page)
        progress.log(f"Starting job #{job_no}")
        th = threading.Thread(target=main, args=(start, stop))
        th.start()
        th.join()  # joining immediately means the jobs run one after another
    progress.console.print(Panel(
        "[bold blue]A demonstration of progress with a current task and overall progress. [ done]",
        padding=1,
    ))
```
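Because `main(start, total_pages)` fetches pages `start` through `total_pages` inclusive, batches of three pages have to end at `start + 2`. Ending a batch at `start + 3` would fetch pages 4, 7, 10 and so on twice, saving their posts twice. Below is a hypothetical helper, not part of the original script, that produces non-overlapping inclusive batches:

```python
def page_batches(first: int, last: int, size: int) -> list[tuple[int, int]]:
    """Split pages first..last (inclusive) into non-overlapping inclusive ranges."""
    return [(s, min(s + size - 1, last)) for s in range(first, last + 1, size)]


# Each (start, stop) pair would be passed to main(start, stop).
for job_no, (start, stop) in enumerate(page_batches(1, 21, 3)):
    print(job_no, start, stop)
```

The last batch is clipped to `last`, so a page count that is not a multiple of the batch size is still covered exactly once.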

Source: https://blog.csdn.net/weixin_45647912/article/details/135734315

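This article was submitted by an internet user. The views expressed are the author's own and do not represent the position of this site. This site only provides information storage space, does not own the rights, and bears no related legal responsibility. If any content infringes rights, is illegal, or is factually incorrect, please send a complaint to 我的编程经验分享网 at chenni525@qq.com; once verified, it will be removed immediately.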