Using DataLoader

Published: January 6, 2024

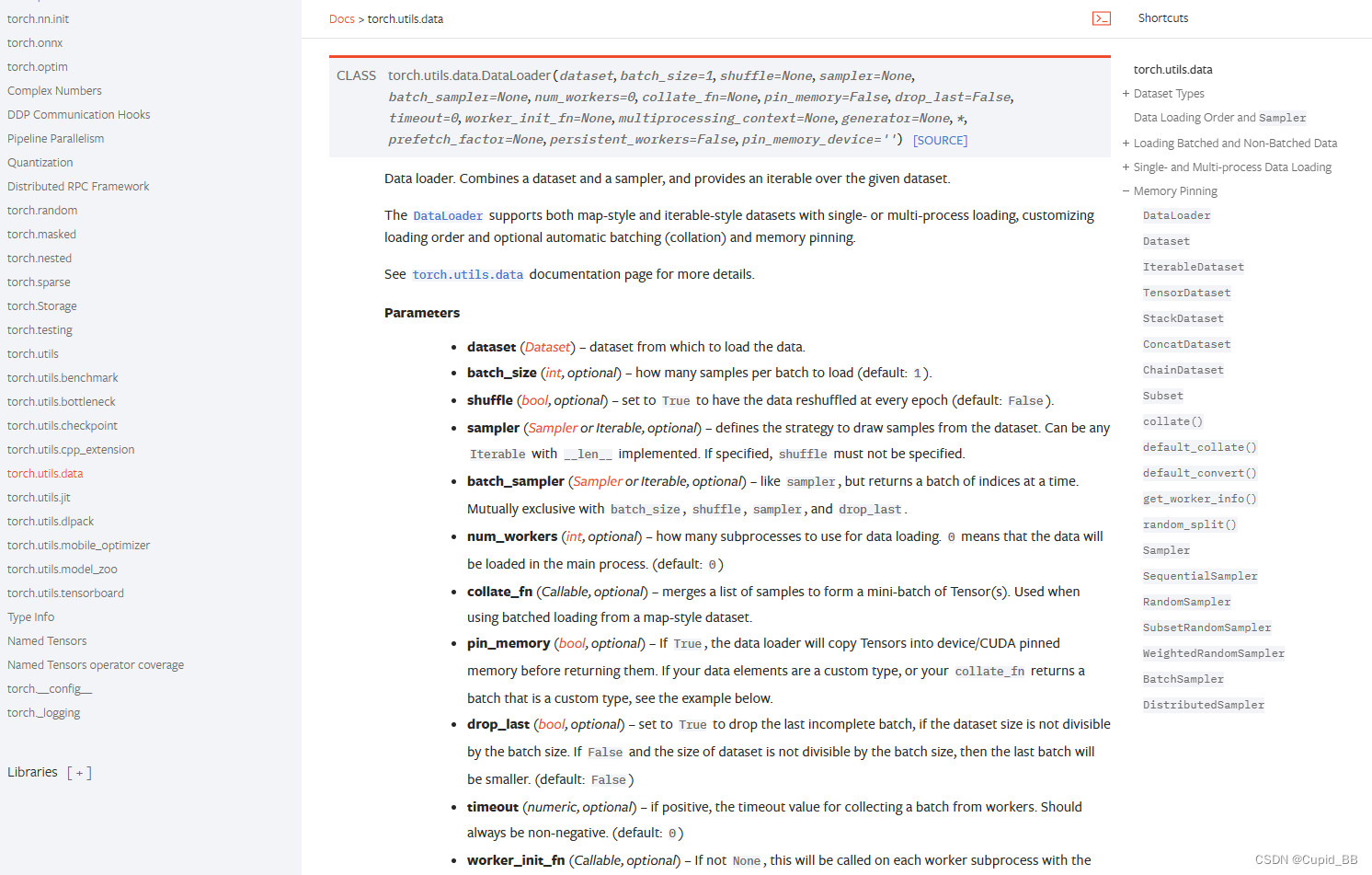

Look up DataLoader in the official PyTorch documentation

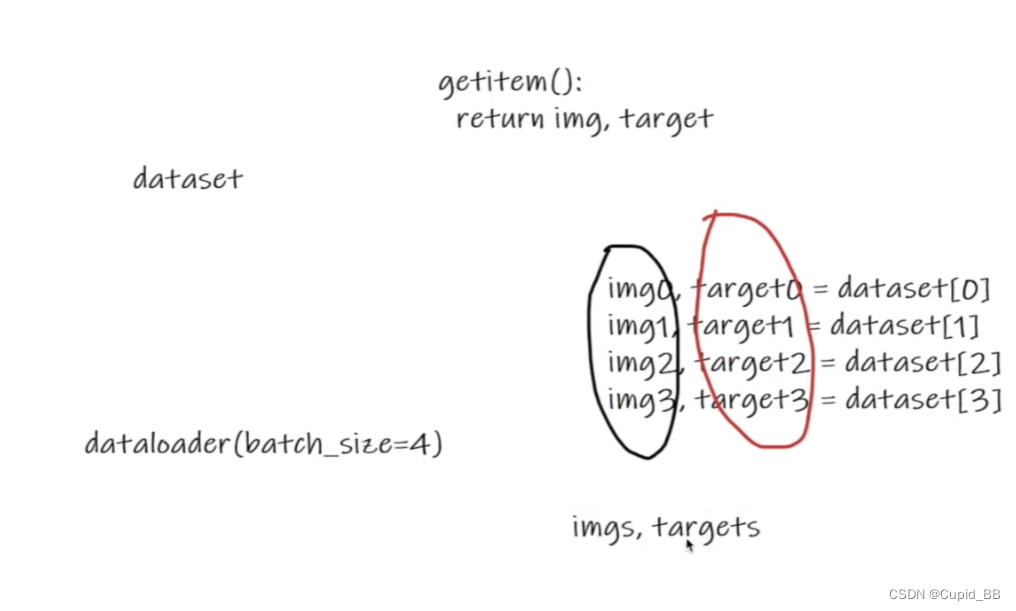

The meaning of batch_size

    import torchvision
    from torch.utils.data import DataLoader

    # Prepare the test dataset (raw string, so the backslashes in the Windows path are not treated as escapes)
    test_data = torchvision.datasets.CIFAR10(r'D:\Pytorch\pythonProject\Transform\dataset', train=False, transform=torchvision.transforms.ToTensor())

    test_loader = DataLoader(dataset=test_data, batch_size=4, shuffle=False, num_workers=0, drop_last=False)

    # The first image in the test dataset and its target

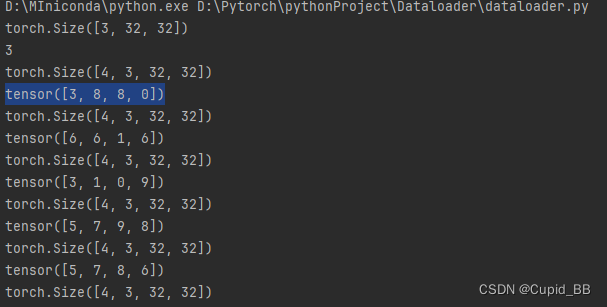

    img, target = test_data[0]
    print(img.shape)  # torch.Size([3, 32, 32])
    print(target)  # 3

    for data in test_loader:
        imgs, targets = data
        print(imgs.shape)  # torch.Size([4, 3, 32, 32]): 4 is batch_size, 3 is the number of channels, 32×32 is the image size
        print(targets)  # tensor([3, 8, 8, 0]): the targets of the 4 images
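To try the batching behaviour without downloading CIFAR10, here is a minimal sketch that assumes a small synthetic dataset is an acceptable stand-in. The names `images`, `labels`, and `fake_data` are hypothetical and do not appear in the original post; only `DataLoader` and its arguments come from the example above.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in for CIFAR10: 10 random "images" shaped [3, 32, 32]
# with integer targets 0..9 (assumption made purely for illustration).
images = torch.rand(10, 3, 32, 32)
labels = torch.arange(10)
fake_data = TensorDataset(images, labels)

# Same settings as the first example: batches of 4, no shuffling, keep the last partial batch.
loader = DataLoader(fake_data, batch_size=4, shuffle=False, drop_last=False)

batch_shapes = []
for imgs, targets in loader:
    # The leading dimension of imgs is the number of samples in this batch.
    batch_shapes.append(tuple(imgs.shape))
    print(imgs.shape, targets)
```

With 10 samples and `batch_size=4`, the loader yields two full batches and one final batch of 2, because `drop_last=False` keeps the remainder.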

    import torchvision
    from torch.utils.data import DataLoader
    from torch.utils.tensorboard import SummaryWriter

    # Prepare the test dataset (raw string, so the backslashes in the Windows path are not treated as escapes)
    test_data = torchvision.datasets.CIFAR10(r'D:\Pytorch\pythonProject\Transform\dataset', train=False, transform=torchvision.transforms.ToTensor())

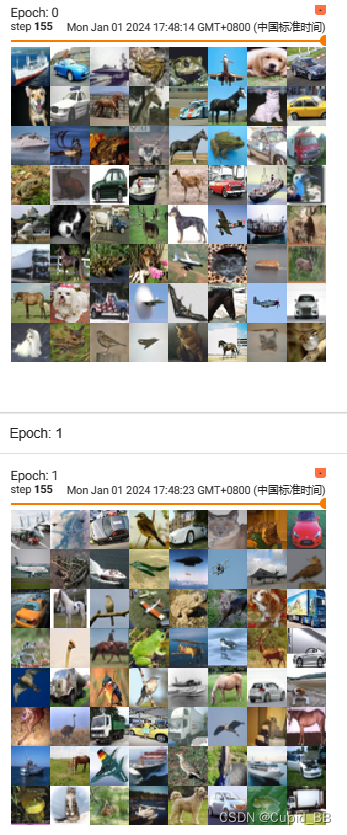

    test_loader = DataLoader(dataset=test_data, batch_size=64, shuffle=True, num_workers=0, drop_last=True)

    # The first image in the test dataset and its target
    img, target = test_data[0]
    print(img.shape)  # torch.Size([3, 32, 32])
    print(target)  # 3

    writer = SummaryWriter('dataloader')
    for epoch in range(2):
        step = 0
        for data in test_loader:
            imgs, targets = data
            # print(imgs.shape)  # torch.Size([64, 3, 32, 32]): 64 is batch_size, 3 is the number of channels, 32×32 is the image size
            # print(targets)  # a tensor holding the 64 targets of this batch
            # Log every batch of this epoch under one tag, one step per batch
            writer.add_images('Epoch: {}'.format(epoch), imgs, step)
            step += 1

    writer.close()

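With shuffle=True, the DataLoader reshuffles the dataset at the start of every epoch, so the batches logged for the two epochs contain different images.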
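The second loader also sets `drop_last=True`, which affects how many batches each epoch produces. The following is a rough arithmetic sketch, assuming the standard CIFAR-10 test split of 10,000 images and the `batch_size=64` used above; the variable names are illustrative only.

```python
import math

num_images = 10_000  # size of the CIFAR-10 test split
batch_size = 64      # value passed to DataLoader in the second example

# drop_last=True keeps only complete batches.
full_batches = num_images // batch_size
# Images that would form the final, smaller batch.
leftover = num_images % batch_size
# drop_last=False keeps that smaller batch as well.
all_batches = math.ceil(num_images / batch_size)

print(full_batches, leftover, all_batches)  # 156 16 157
```

So with `drop_last=True` each epoch logs 156 steps, and the 16 images left over are skipped. Because of shuffling, those are generally different images in each epoch.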

Source: https://blog.csdn.net/weixin_51788042/article/details/135326854

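This article was submitted by an internet user. The views expressed are the author's own and do not represent the position of this site. This site only provides information storage space, holds no ownership, and assumes no related legal liability. If any content is infringing, illegal, or factually inaccurate, please report it to the 我的编程经验分享网 mailbox at chenni525@qq.com; once verified, it will be removed immediately.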
