Weighted Accuracy (WA), Unweighted Average Recall (UAR), and Unweighted F1 (UF1)

Published: December 17, 2023


1. Weighted Accuracy (WA), Unweighted Average Recall (UAR), and Unweighted F1 (UF1)

```python
from sklearn.metrics import classification_report, accuracy_score, recall_score, f1_score
import torch
from torchmetrics import Accuracy, Recall, F1Score
import warnings

warnings.filterwarnings("ignore")  # silence warnings, e.g. sklearn's UndefinedMetricWarning for classes with no samples

pred_list = [2, 0, 4, 1, 4, 2, 4, 2, 2, 0, 4, 1, 4, 2, 2, 0, 4, 1, 4, 2, 4, 2]
target_list = [1, 1, 4, 1, 3, 1, 2, 1, 1, 1, 4, 1, 3, 1, 1, 1, 4, 1, 3, 1, 2, 1]
```

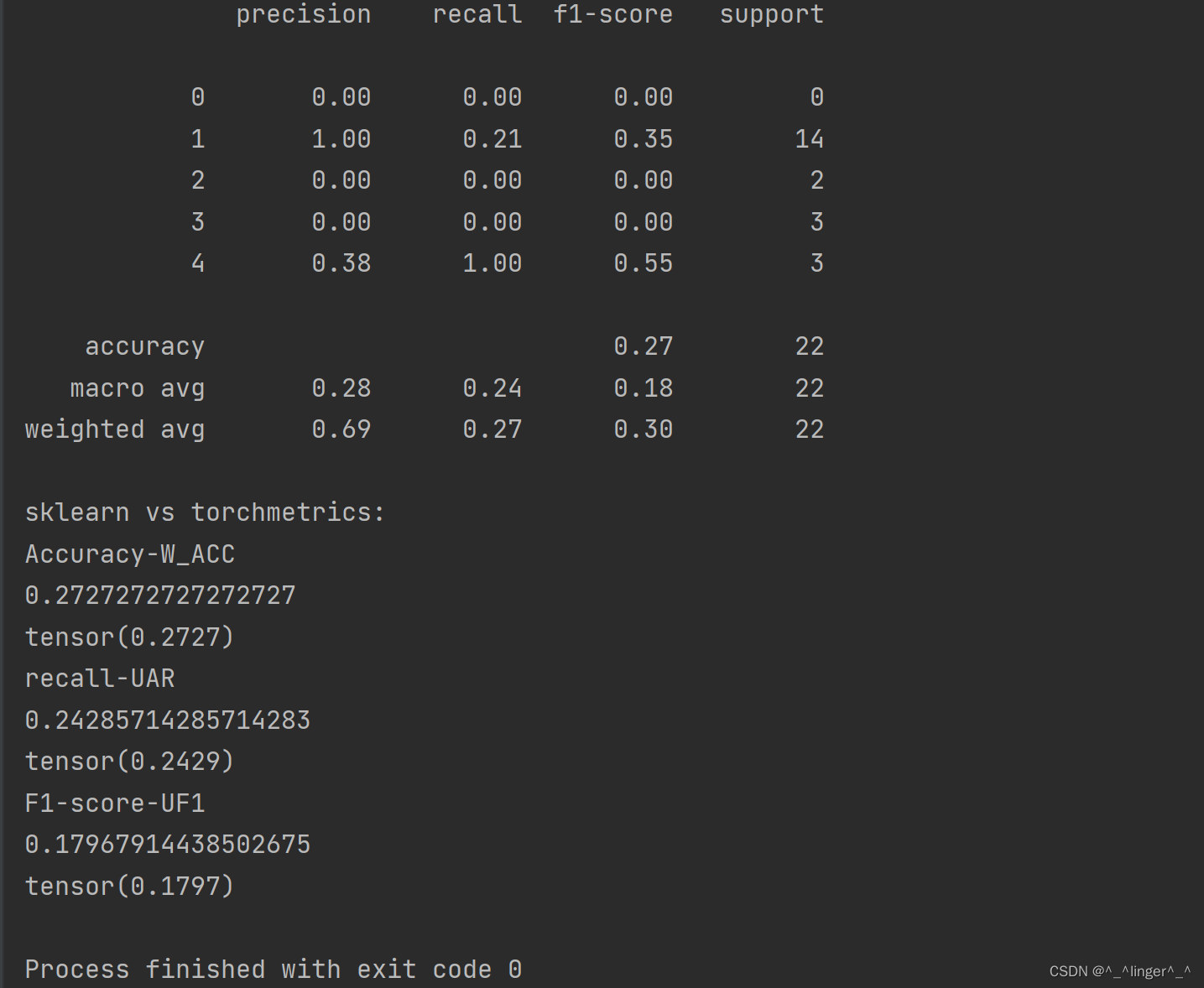

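```python
print(classification_report(target_list, pred_list))

# Accuracy / WA (weighted accuracy): this is simply the everyday accuracy that sklearn's accuracy_score reports.
# In multiclass problems, WA takes the number of samples in each class into account: the per-class accuracy
# (the fraction of class-c samples predicted correctly, i.e. the recall of class c) is multiplied by the
# class's share of all samples (its weight n_c / N), and the products are summed:
#     WA = sum_c (n_c / N) * (TP_c / n_c) = (sum_c TP_c) / N
# so WA reduces to "correct predictions / all samples". Because frequent classes get larger weights, on
# imbalanced data WA is dominated by the majority classes, which is why it is usually reported with UAR.
# (threshold only applies to binary/multilabel tasks in torchmetrics, so it is omitted for multiclass.)
test_acc_en = Accuracy(task="multiclass", num_classes=5, average="weighted")
```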
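The weighting described above can be checked with a minimal pure-Python sketch. It uses only the standard library, so it runs without sklearn or torchmetrics, and it reuses the `pred_list` and `target_list` values from the script. The helper `weighted_accuracy` is hypothetical and assumes that "per-class accuracy" means per-class recall. `Fraction` keeps the comparison exact.

```python
from collections import Counter
from fractions import Fraction


def weighted_accuracy(target, pred):
    """WA = sum over classes c of (n_c / N) * recall_c, i.e. the support-weighted mean recall."""
    n_total = len(target)
    support = Counter(target)                                  # n_c: true samples per class
    hits = Counter(t for t, p in zip(target, pred) if t == p)  # TP_c: correct predictions per class
    # Classes with no true samples would have weight 0, so iterating over support is enough.
    return sum(Fraction(n_c, n_total) * Fraction(hits[c], n_c) for c, n_c in support.items())


pred_list = [2, 0, 4, 1, 4, 2, 4, 2, 2, 0, 4, 1, 4, 2, 2, 0, 4, 1, 4, 2, 4, 2]
target_list = [1, 1, 4, 1, 3, 1, 2, 1, 1, 1, 4, 1, 3, 1, 1, 1, 4, 1, 3, 1, 2, 1]

wa = weighted_accuracy(target_list, pred_list)
plain_acc = Fraction(sum(t == p for t, p in zip(target_list, pred_list)), len(target_list))
print(wa, plain_acc)  # 3/11 3/11
```

Both values are 3/11 (6 correct out of 22). This shows that WA and plain accuracy are two names for the same number in single-label multiclass classification.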

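```python
# Recall: average="macro" averages the per-class recalls WITHOUT weighting (every class counts equally).
# UAR (unweighted average recall)
# A performance metric used mainly for multiclass problems: the mean of the per-class recalls, with no
# class weighting. For each class, recall = (samples of that class predicted correctly) / (all samples of that class).
# UAR gives every class the same importance regardless of how many samples it has. This makes it better
# suited to imbalanced datasets, because the majority classes cannot dominate the score.
test_rcl_en = Recall(task="multiclass", num_classes=5, average="macro")  # UAR
```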
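Here is a small pure-Python sketch of UAR as a plain mean of per-class recall. The helpers `uar` and `accuracy` are hypothetical. By default the label set is every class that appears in either list, and a class with no true samples gets recall 0. Both choices are assumptions meant to mirror the defaults of sklearn's `recall_score(average='macro')`. The toy data is invented: nine samples of class 0, one of class 1, and a classifier that always predicts 0. It shows why UAR resists class imbalance while WA does not.

```python
from collections import Counter
from fractions import Fraction


def uar(target, pred, labels=None):
    """Unweighted average recall: the plain (unweighted) mean of the per-class recalls.

    By default `labels` is every class seen in `target` or `pred`; a class with
    no true samples is given recall 0 (assumed to mirror sklearn's defaults).
    """
    if labels is None:
        labels = sorted(set(target) | set(pred))
    support = Counter(target)                                  # n_c per true class
    hits = Counter(t for t, p in zip(target, pred) if t == p)  # TP_c
    recalls = [Fraction(hits[c], support[c]) if support[c] else Fraction(0) for c in labels]
    return sum(recalls) / len(recalls)


def accuracy(target, pred):
    """Plain accuracy, which equals WA."""
    return Fraction(sum(t == p for t, p in zip(target, pred)), len(target))


# Hypothetical imbalanced toy data: a degenerate classifier that always predicts class 0.
toy_target = [0] * 9 + [1]
toy_pred = [0] * 10
print(accuracy(toy_target, toy_pred), uar(toy_target, toy_pred))  # 9/10 1/2

# The data from the script above.
pred_list = [2, 0, 4, 1, 4, 2, 4, 2, 2, 0, 4, 1, 4, 2, 2, 0, 4, 1, 4, 2, 4, 2]
target_list = [1, 1, 4, 1, 3, 1, 2, 1, 1, 1, 4, 1, 3, 1, 1, 1, 4, 1, 3, 1, 2, 1]
print(uar(target_list, pred_list))  # 17/70, about 0.243
```

In the toy case WA is 0.9 even though class 1 is never recognized. UAR drops to 0.5, the chance level for two classes.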

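```python
# F1 score
# The F1 score is the harmonic mean of precision (P) and recall (R): F1 = 2 * P * R / (P + R).
# In multiclass problems an F1 score is usually computed per class and then averaged into an overall F1.
# The average can be a plain arithmetic mean (Macro-F1, the common choice, i.e. UF1) or a mean weighted
# by each class's sample count (Weighted-F1).
test_f1_en = F1Score(task="multiclass", num_classes=5, average="macro")  # UF1
```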
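The two averaging schemes can be compared with a short pure-Python sketch. It uses the identity F1_c = 2·TP_c / (2·TP_c + FP_c + FN_c), which equals the harmonic mean of precision and recall whenever both are defined. Returning 0 when the denominator is 0 is an assumed convention. The function names `f1_per_class` and `macro_and_weighted_f1` are hypothetical.

```python
from collections import Counter
from fractions import Fraction


def f1_per_class(target, pred, labels):
    """F1_c = 2*TP_c / (2*TP_c + FP_c + FN_c); 0 if the denominator is 0 (assumed convention)."""
    pairs = list(zip(target, pred))
    tp = Counter(t for t, p in pairs if t == p)  # true class c, predicted c
    fp = Counter(p for t, p in pairs if t != p)  # predicted c, true class differs
    fn = Counter(t for t, p in pairs if t != p)  # true class c, predicted as another class
    scores = {}
    for c in labels:
        denom = 2 * tp[c] + fp[c] + fn[c]
        scores[c] = Fraction(2 * tp[c], denom) if denom else Fraction(0)
    return scores


def macro_and_weighted_f1(target, pred):
    labels = sorted(set(target) | set(pred))
    f1 = f1_per_class(target, pred, labels)
    support = Counter(target)
    macro = sum(f1.values()) / len(labels)                            # UF1: every class has weight 1
    weighted = sum(support[c] * f1[c] for c in labels) / len(target)  # class weight n_c / N
    return macro, weighted


pred_list = [2, 0, 4, 1, 4, 2, 4, 2, 2, 0, 4, 1, 4, 2, 2, 0, 4, 1, 4, 2, 4, 2]
target_list = [1, 1, 4, 1, 3, 1, 2, 1, 1, 1, 4, 1, 3, 1, 1, 1, 4, 1, 3, 1, 2, 1]

macro, weighted = macro_and_weighted_f1(target_list, pred_list)
print(macro, weighted)  # 168/935 615/2057
```

On this data the macro average (UF1) is about 0.180 and the weighted average is about 0.299. Macro-F1 counts the three classes with F1 = 0 (classes 0, 2, and 3) at full weight. Weighted-F1 gives class 0 no weight and classes 2 and 3 little, while class 1, which holds 14 of the 22 samples, dominates.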

```python
preds = torch.tensor(pred_list)
target = torch.tensor(target_list)

print("sklearn vs torchmetrics: ")

print("Accuracy-W_ACC")
print(accuracy_score(y_true=target_list, y_pred=pred_list))
print(test_acc_en(preds, target))

print("recall-UAR")
# average='micro' is also available; for single-label multiclass data it equals accuracy
print(recall_score(y_true=target_list, y_pred=pred_list, average='macro'))
print(test_rcl_en(preds, target))

print("F1-score-UF1")
print(f1_score(y_true=target_list, y_pred=pred_list, average='macro'))
print(test_f1_en(preds, target))
```
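The comment on `recall_score` mentions the micro mode. A confusion-matrix sketch makes the difference between micro and macro averaging visible. Micro averaging pools TP and FN over all classes before dividing. In single-label data every sample falls in exactly one row, so the pooled denominator is N and micro recall equals accuracy. Macro averaging divides row by row first and then averages, which gives UAR. The helpers `build_confusion_matrix` and `micro_and_macro_recall` are hypothetical, written in pure Python for illustration.

```python
from fractions import Fraction


def build_confusion_matrix(target, pred, labels):
    """cm[i][j] counts samples whose true class is labels[i] and predicted class is labels[j]."""
    index = {c: i for i, c in enumerate(labels)}
    cm = [[0] * len(labels) for _ in labels]
    for t, p in zip(target, pred):
        cm[index[t]][index[p]] += 1
    return cm


def micro_and_macro_recall(cm):
    """Micro: pool TP and FN over classes, then divide. Macro (UAR): divide per class, then average."""
    k = len(cm)
    tp = [cm[i][i] for i in range(k)]        # diagonal entries
    support = [sum(row) for row in cm]       # row sum = TP_c + FN_c
    micro = Fraction(sum(tp), sum(support))  # sum(support) == N for single-label data
    per_class = [Fraction(tp[i], support[i]) if support[i] else Fraction(0) for i in range(k)]
    macro = sum(per_class) / k
    return micro, macro


pred_list = [2, 0, 4, 1, 4, 2, 4, 2, 2, 0, 4, 1, 4, 2, 2, 0, 4, 1, 4, 2, 4, 2]
target_list = [1, 1, 4, 1, 3, 1, 2, 1, 1, 1, 4, 1, 3, 1, 1, 1, 4, 1, 3, 1, 2, 1]
labels = sorted(set(target_list) | set(pred_list))  # [0, 1, 2, 3, 4]

cm = build_confusion_matrix(target_list, pred_list, labels)
for label, row in zip(labels, cm):
    print(label, row)  # one row per true class
micro, macro = micro_and_macro_recall(cm)
print(micro, macro)  # 3/11 17/70
```

Micro recall comes out as 3/11, identical to plain accuracy (6 of 22 correct). Macro recall (UAR) is 17/70.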


Source: https://blog.csdn.net/GYY8023/article/details/135034714

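This article was contributed by an internet user. The views expressed are solely the author's and do not represent this site's position. This site only provides information storage space, does not own the rights, and assumes no related legal liability. If the content infringes rights, is illegal, or is factually inaccurate, please send a complaint to 我的编程经验分享网 at chenni525@qq.com; once verified, it will be removed immediately.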
