python_解析_requests

发布时间:2024年01月05日

目录

1.一个类型六个属性

import requests

url = 'https://www.baidu.com/'

response = requests.get(url=url)

# 一个类型和六个属性

# response类型

# print(type(response))

# 设置响应的编码格式

# response.encoding = 'utf-8'

# 以字符串的形式返回网页源码

# print(response.text)

# 返回一个url路径地址

# print(response.url)

# 返回二进制数据

# print(response.content)

#返回响应的状态码

# print(response.status_code)

# 返回响应头

print(response.headers)2.requests的get请求

import requests

url = 'https://www.baidu.com/s?'

headers = {

'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1823.82'

}

data = {

'wd':'北京'

}

# get方法的解释

# url 请求资源路径

# params 参数

# kwargs 字典

response = requests.get(url=url,params=data,headers=headers)

content = response.text

print(content)

# 总结

# (1)参数使用params传递

# (2)参数无需urlencode编码

# (3)不需要请求对象的定制

# (4)请求资源路径中的?可以加也可以不加3.requests的post请求

post请求总结:

(1)post请求 不需要编码

(2)post请求的参数是data

(3)不需要请求对象的定制

import requests

url ='https://fanyi.baidu.com/v2transapi?from=en&to=zh'

headers = {

'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1823.82',

'Cookie':'BIDUPSID=27C677FC03842E7B4593C0FC23E63309; PSTM=1633330321; BAIDUID=DA5564F1160BAB4A47C7DA6772F3B9EA:FG=1; APPGUIDE_10_6_7=1; REALTIME_TRANS_SWITCH=1; FANYI_WORD_SWITCH=1; HISTORY_SWITCH=1; SOUND_SPD_SWITCH=1; SOUND_PREFER_SWITCH=1; BDUSS=RmcnNONVB5clZXSWlObUQtcFcxbWk1ZmZ6SW5Cc2ZnOVNhUFBWRE9NWlJRb1ZsSVFBQUFBJCQAAAAAAQAAAAEAAADR~t4MAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAFG1XWVRtV1lME; BDUSS_BFESS=RmcnNONVB5clZXSWlObUQtcFcxbWk1ZmZ6SW5Cc2ZnOVNhUFBWRE9NWlJRb1ZsSVFBQUFBJCQAAAAAAQAAAAEAAADR~t4MAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAFG1XWVRtV1lME; H_PS_PSSID=39938_39997_40073; BDORZ=B490B5EBF6F3CD402E515D22BCDA1598; BAIDUID_BFESS=DA5564F1160BAB4A47C7DA6772F3B9EA:FG=1; Hm_lvt_64ecd82404c51e03dc91cb9e8c025574=1703917057,1704008168; ab_sr=1.0.1_YjczYjU3OTM2OTI1ZmE0YjdiMGUxNzliNzdmZjlmZWUwYTk0N2UyNGUxYWY3NTgzMzRjNzEyMGRkZDJiZjhiZjk4MzQyZTA5OTA0YWMzZmFjZWIxNGU3ZjcxNDE4MDUwMWZkNzVjMGEyNDVmNzg1NjI3N2U4NThmMTczMGFkMzRkMWQ3NDRjZTI0OThhZTJlNDcwNzY0NTJkMjEzNThlMDg5ZDc2NzM1M2ExNTA4ZjlkZDhkODRhMDc4NmJkOWVh; Hm_lpvt_64ecd82404c51e03dc91cb9e8c025574=1704008283'

}

data = {

'query':'eye'

}

# url 请求地址

# data 请求参数

# kwargs 字典

response = requests.post(url=url,data=data,headers=headers)

# response.encoding='utf-8'

content = response.text

import json

obj = json.loads(content,encoding='utf-8')

print(obj)4.requests的代理

import requests

url = 'https://www.baidu.com/s?'

headers = {

'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1823.82'

}

data = {

'wd':'ip'

}

response = requests.get(url=url,params=data,headers=headers)

content = response.text

with open('daili.html','w',encoding='utf-8') as fp:

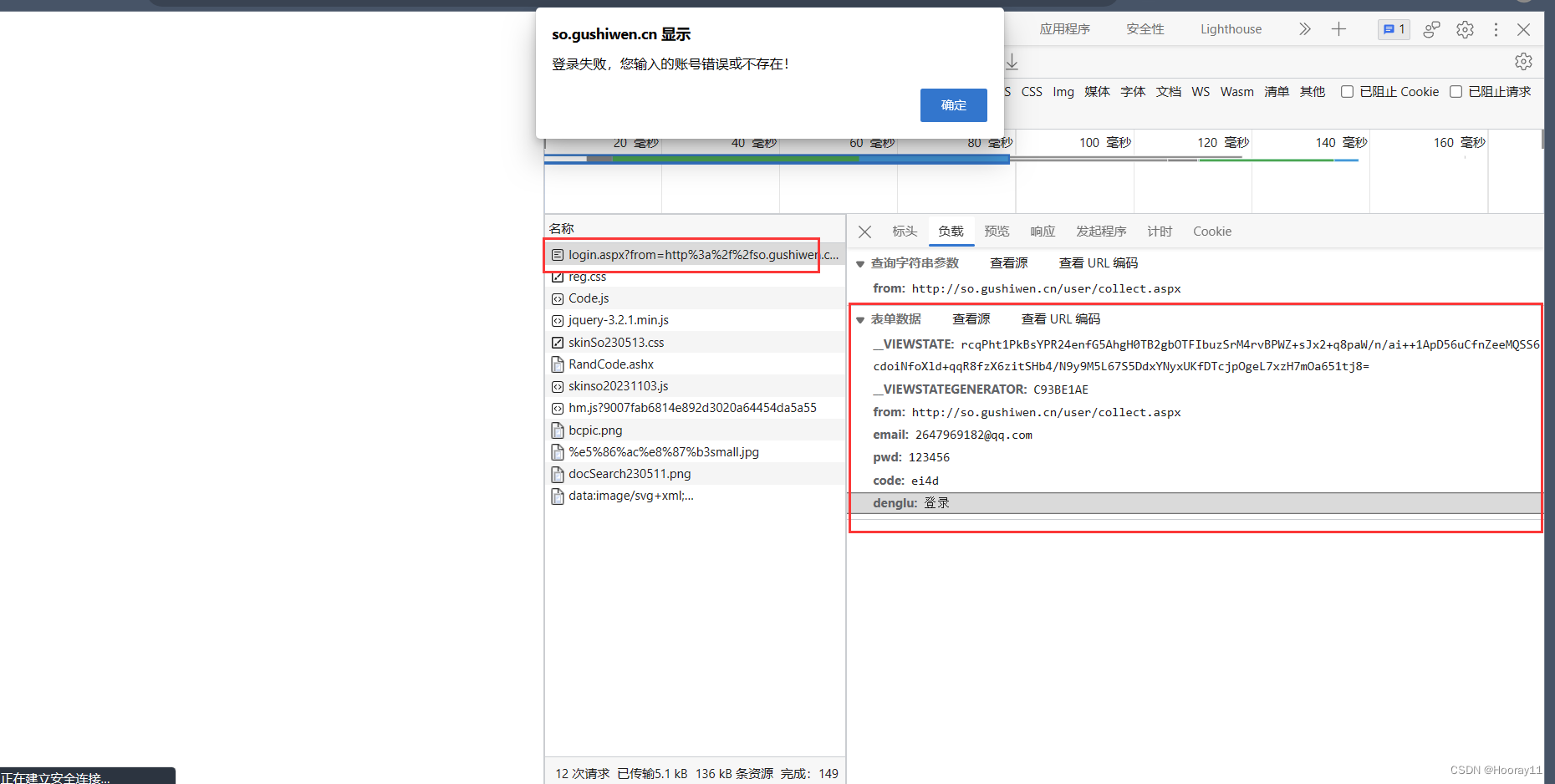

fp.write(content)5.requests_cookie登录_古诗文网

5.1网站

5.2分析思路

找登录接口——用错误的账号以及密码去找

# 通过寻找登录接口,发现登录所需要的参数很多 # __VIEWSTATE: rcqPht1PkBsYPR24enfG5AhgH0TB2gbOTFIbuzSrM4rvBPWZ+sJx2+q8paW/n/ai++1ApD56uCfnZeeMQSS6cdoiNfoXld+qqR8fzX6zitSHb4/N9y9M5L67S5DdxYNyxUKfDTcjpOgeL7xzH7mOa651tj8= # __VIEWSTATEGENERATOR: C93BE1AE # from: http://so.gushiwen.cn/user/collect.aspx # email: 2647969182@qq.com # pwd: 123456 # code: ei4d # denglu: 登录# __VIEWSTATE、__VIEWSTATEGENERATOR、code为可以变化的量 # 难点1:__VIEWSTATE、__VIEWSTATEGENERATOR 一般看不到的数据都在页面源码中 # 难点2:验证码

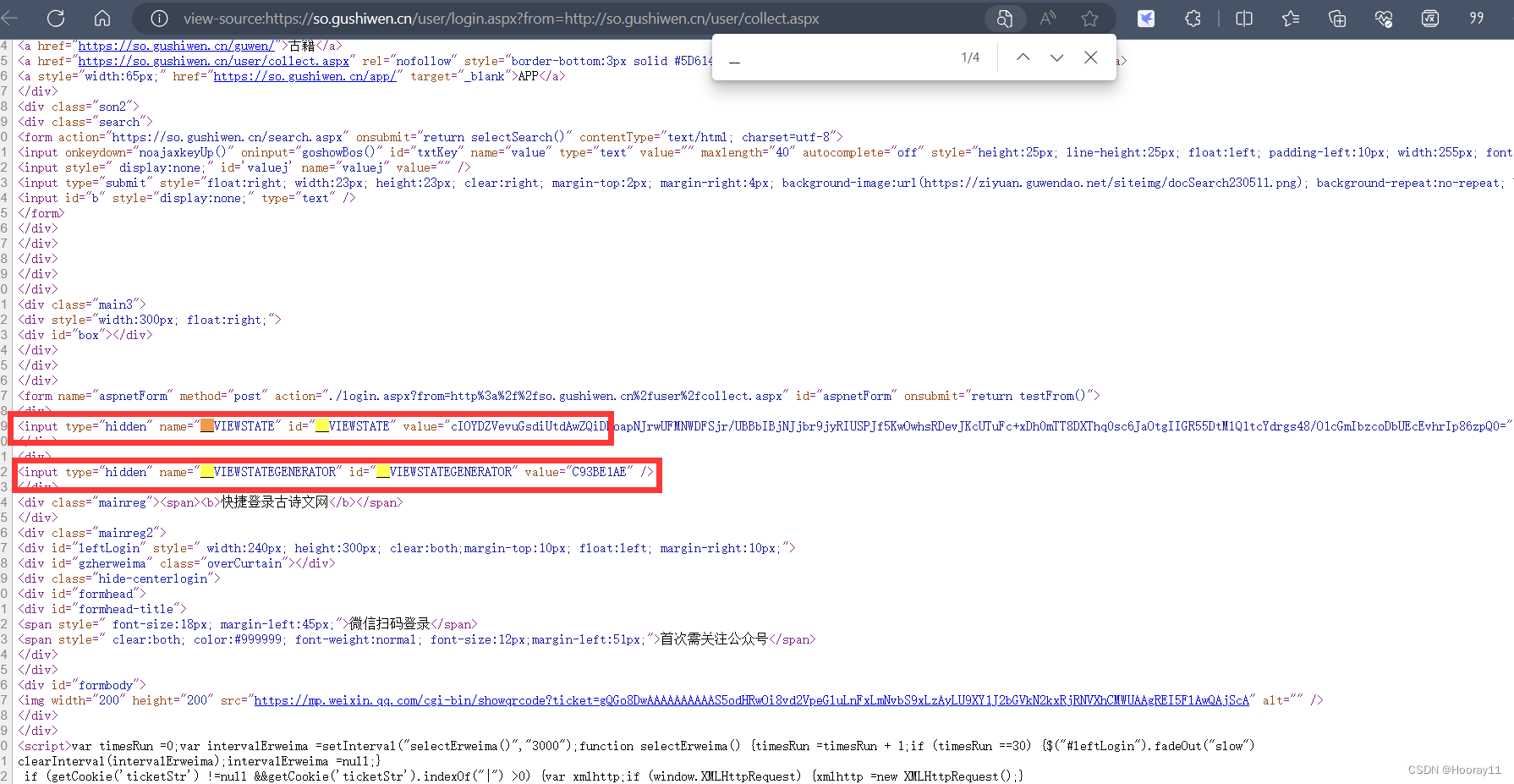

解决难点1:去查看页面的源码

由type='hidden'得知这两个数据为隐藏

我们观察到这两个数据在页面的源码中,所以我们需要获取页面的源码,然后进行解析就可以获取了

?

import requests

# 登陆页面的url地址

url = 'https://so.gushiwen.cn/user/login.aspx?from=http://so.gushiwen.cn/user/collect.aspx'

headers = {

'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1823.82'

}

# 获取网页的源码

response = requests.get(url=url,headers=headers)

content = response.text

# 解析页面源码 获取这两个变量__VIEWSTATE、__VIEWSTATE

from bs4 import BeautifulSoup

soup = BeautifulSoup(content,'lxml')

# 获取__VIEWSTATE

viewstate = soup.select('#__VIEWSTATE')[0].attrs.get('value')

# 获取__VIEWSTATEGENERATOR

viewstategenerator = soup.select('#__VIEWSTATEGENERATOR')[0].attrs.get('value')

print(viewstate)

print(viewstategenerator)

解决难点2:验证码

# 获取验证码

code = soup.select('#imgCode')[0].attrs.get('src')

code_url = 'https://so.gushiwen.cn'+code

# 获取验证码的图片并且下载到本地 然后观察验证码 然后在控制台输入这个验证码

# 就可以将这个值给code的参数 就可以实现登录

import urllib.request

urllib.request.urlretrieve(url=code_url,filename='code.jpg')

code_name = input('请输入你的验证码:')但是上面代码!!!!!!这个方法不行

所以需要用session ,session是什么没学哈哈哈哈哈哈

Python爬虫之Requests模块session进行登录状态保持_python 爬虫后如何长久保留登录状态-CSDN博客

# 获取验证码的图片并且下载到本地 然后观察验证码 然后在控制台输入这个验证码

# 就可以将这个值给code的参数 就可以实现登录

# 有坑——重新发送了一次请求导致两次的验证码不一样

# import urllib.request

# urllib.request.urlretrieve(url=code_url,filename='code.jpg')

# requests中一个方法 session()通过他的返回值使请求变成一个对象

session = requests.session()

# 验证码的url内容

response_code = session.get(code_url)

# 注意此时需要使用二进制的数据 因为我们要使用的是图片的下载

content_code = response_code.content

# wb模式就是讲二进制的数据写入到文件

with open('code.jpg','wb') as fp:

fp.write(content_code)5.3成功

import requests

# 登陆页面的url地址

url = 'https://so.gushiwen.cn/user/login.aspx?from=http://so.gushiwen.cn/user/collect.aspx'

headers = {

'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1823.82',

# 'Cookie':'login=flase; Hm_lvt_9007fab6814e892d3020a64454da5a55=1704010564; ASP.NET_SessionId=stupofxeferpwbzf32utbmzy; login=flase; gswZhanghao=2647969182%40qq.com; wsEmail=2647969182%40qq.com; ticketStr=205155250%7cgQFk8DwAAAAAAAAAAS5odHRwOi8vd2VpeGluLnFxLmNvbS9xLzAyRXlMeVFqbGVkN2kxRm1XVU5CMTQAAgRWLZFlAwQAjScA; codeyzgswso=463ca75a496e570a; Hm_lpvt_9007fab6814e892d3020a64454da5a55=1704013495'

}

# 获取网页的源码

response = requests.get(url=url,headers=headers)

content = response.text

# 解析页面源码 获取这两个变量__VIEWSTATE、__VIEWSTATE

from bs4 import BeautifulSoup

soup = BeautifulSoup(content,'lxml')

# 获取__VIEWSTATE

viewstate = soup.select('#__VIEWSTATE')[0].attrs.get('value')

print(viewstate)

# 获取__VIEWSTATEGENERATOR

viewstategenerator = soup.select('#__VIEWSTATEGENERATOR')[0].attrs.get('value')

print(viewstategenerator)

# 获取验证码

code = soup.select('#imgCode')[0].attrs.get('src')

code_url = 'https://so.gushiwen.cn'+code

# 获取验证码的图片并且下载到本地 然后观察验证码 然后在控制台输入这个验证码

# 就可以将这个值给code的参数 就可以实现登录

# 有坑——重新发送了一次请求导致两次的验证码不一样

# import urllib.request

# urllib.request.urlretrieve(url=code_url,filename='code.jpg')

# requests中一个方法 session()通过他的返回值使请求变成一个对象

session = requests.session()

# 验证码的url内容

response_code = session.get(code_url)

# 注意此时需要使用二进制的数据 因为我们要使用的是图片的下载

content_code = response_code.content

# wb模式就是讲二进制的数据写入到文件

with open('code.jpg','wb') as fp:

fp.write(content_code)

code_name = input('请输入你的验证码:')

#点击登录

url_post = 'https://so.gushiwen.cn/user/login.aspx?from=http%3a%2f%2fso.gushiwen.cn%2fuser%2fcollect.aspx'

data_post = {

'__VIEWSTATE': viewstate,

'__VIEWSTATEGENERATOR': viewstategenerator,

'from': 'http://so.gushiwen.cn/user/collect.aspx',

'email': '你的账户@qq.com',

'pwd': '你的密码',

'code': code_name,

'denglu': '登录'

}

response_post = session.post(url=url_post,headers=headers,data=data_post)

content_post = response_post.text

with open('gushiwen.html','w',encoding='utf-8') as fp:

fp.write(content_post)

文章来源:https://blog.csdn.net/Hooray11/article/details/135316491

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

最新文章

- Python教程

- 深入理解 MySQL 中的 HAVING 关键字和聚合函数

- Qt之QChar编码(1)

- MyBatis入门基础篇

- 用Python脚本实现FFmpeg批量转换

- Ebullient开发文档之OTA升级(从TF中升级简洁明了)

- 荣誉|持续创新 知行合一,唯迈医疗荣登“创新医疗器械杰出雇主”榜单

- 华为机试真题实战应用【赛题代码篇】-快递投放问题(附Java和C++代码实现)

- STM32与Freertos入门(七)信号量

- 连接oracle报:ora-28001:the password has expired

- GNU Tools使用笔记

- 成功的交易员是如何走向成熟的?

- 责任链模式

- Java项目:102SSM汽车租赁系统

- 深入了解Snowflake雪花算法:分布式唯一ID生成器