RandLA-Net导出onnx模型并使用onnxruntime推理

发布时间:2024年01月14日

首先下载RandLA-Net工程:https://github.com/tsunghan-wu/RandLA-Net-pytorch

导出onnx模型

import torch

from utils.config import ConfigSemanticKITTI as cfg

from network.RandLANet import Network

model = Network(cfg)

checkpoint = torch.load("./pretrain_model/checkpoint.tar")

model.load_state_dict(checkpoint['model_state_dict'])

input = {}

input['xyz'] = [torch.zeros([1, 45056, 3]), torch.zeros([1, 11264, 3]), torch.zeros([1, 2816, 3]), torch.zeros([1, 704, 3])]

input['neigh_idx'] = [torch.zeros([1, 45056, 16], dtype=torch.int64), torch.zeros([1, 11264, 16], dtype=torch.int64),

torch.zeros([1, 2816, 16], dtype=torch.int64), torch.zeros([1, 704, 16], dtype=torch.int64)]

input['sub_idx'] = [torch.zeros([1, 11264, 16], dtype=torch.int64), torch.zeros([1, 2816, 16], dtype=torch.int64),

torch.zeros([1, 704, 16], dtype=torch.int64), torch.zeros([1, 176, 16], dtype=torch.int64)]

input['interp_idx'] = [torch.zeros([1, 45056, 1], dtype=torch.int64), torch.zeros([1, 11264, 1], dtype=torch.int64),

torch.zeros([1, 2816, 1], dtype=torch.int64), torch.zeros([1, 704, 1], dtype=torch.int64)]

input['features'] = torch.zeros([1, 3, 45056])

input['labels'] = torch.zeros([1, 45056], dtype=torch.int64)

input['logits'] = torch.zeros([1, 19, 45056])

torch.onnx.export(model, input, "randla-net.onnx", opset_version=13)

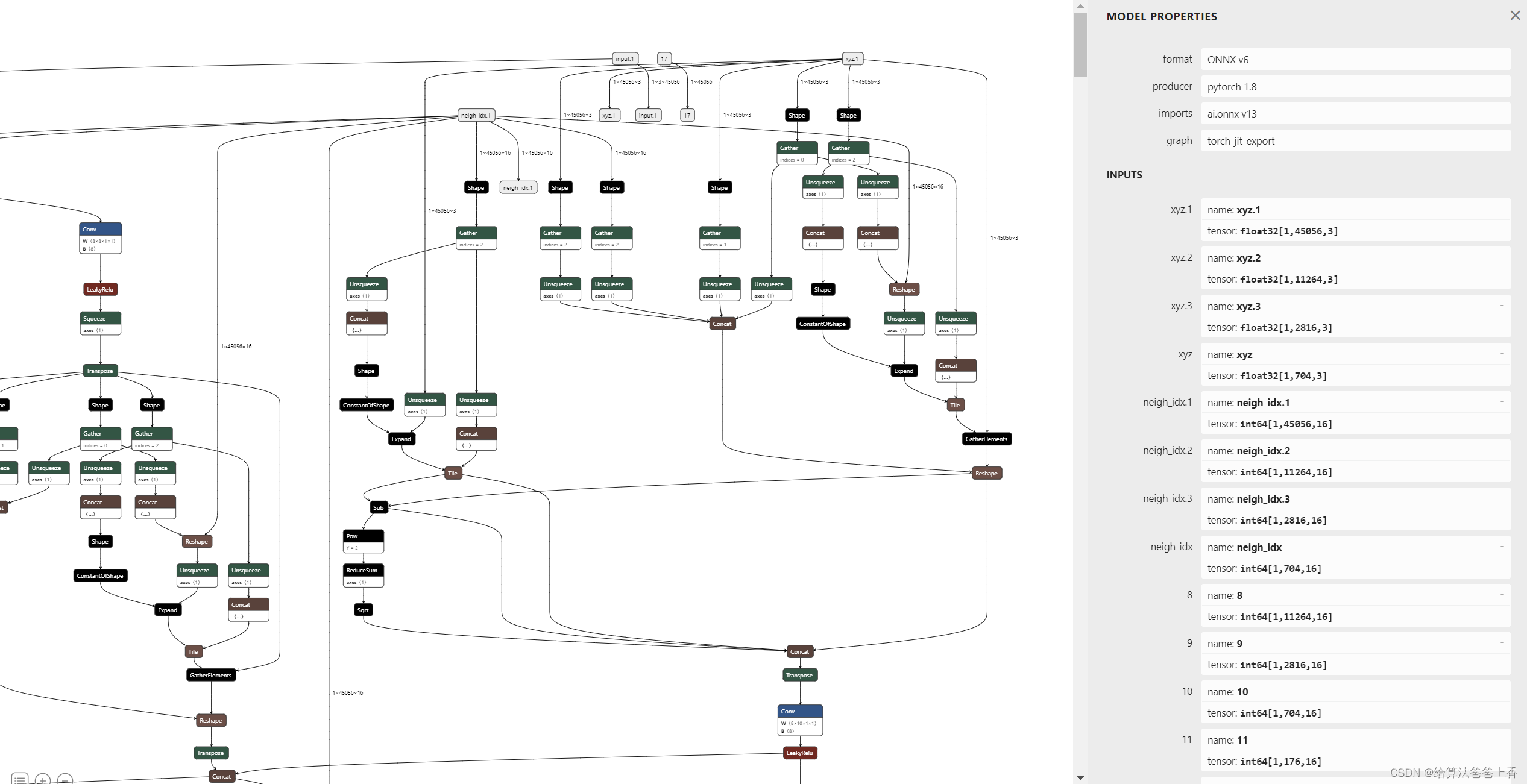

onnx模型结构如下:

onnxruntime推理

python代码

import pickle

import numpy as np

import torch

from network.RandLANet import Network

from utils.data_process import DataProcessing as DP

from utils.config import ConfigSemanticKITTI as cfg

np.random.seed(0)

k_n = 16

num_points = 4096 * 11

num_layers = 4

num_classes = 19

sub_sampling_ratio = [4, 4, 4, 4]

if __name__ == '__main__':

net = Network(cfg).to(torch.device("cpu"))

checkpoint = torch.load("pretrain_model/checkpoint.tar", map_location=torch.device('cpu'))

net.load_state_dict(checkpoint['model_state_dict'])

points = np.load('./data/08/velodyne/000000.npy')

possibility = np.zeros(points.shape[0]) * 1e-3 #[np.random.rand(points.shape[0]) * 1e-3]

min_possibility = [float(np.min(possibility[-1]))]

probs = [np.zeros(shape=[points.shape[0], num_classes], dtype=np.float32)]

test_probs = probs

test_smooth = 0.98

import onnxruntime

onnx_session = onnxruntime.InferenceSession("randla-net.onnx", providers=['CPUExecutionProvider'])

input_name = []

for node in onnx_session.get_inputs():

input_name.append(node.name)

output_name = []

for node in onnx_session.get_outputs():

output_name.append(node.name)

net.eval()

with torch.no_grad():

with open('./data/08/KDTree/000000.pkl', 'rb') as f:

tree = pickle.load(f)

pc = np.array(tree.data, copy=False)

labels = np.zeros(np.shape(pc)[0])

while np.min(min_possibility) <= 0.5:

cloud_ind = int(np.argmin(min_possibility))

pick_idx = np.argmin(possibility)

center_point = pc[pick_idx, :].reshape(1, -1)

selected_idx = tree.query(center_point, num_points)[1][0]

selected_pc = pc[selected_idx]

selected_labels = labels[selected_idx]

dists = np.sum(np.square((selected_pc - pc[pick_idx])), axis=1)

delta = np.square(1 - dists / np.max(dists))

possibility[selected_idx] += delta # possibility[193] += delta[1], possibility[20283] += delta[45055]

min_possibility[cloud_ind] = np.min(possibility)

batch_pc = np.expand_dims(selected_pc, 0)

batch_label = np.expand_dims(selected_labels, 0)

batch_pc_idx = np.expand_dims(selected_idx, 0)

batch_cloud_idx = np.expand_dims(np.array([cloud_ind], dtype=np.int32), 0)

features = batch_pc

input_points, input_neighbors, input_pools, input_up_samples = [], [], [], []

for i in range(num_layers):

neighbour_idx = DP.knn_search(batch_pc, batch_pc, k_n)

sub_points = batch_pc[:, :batch_pc.shape[1] // sub_sampling_ratio[i], :]

pool_i = neighbour_idx[:, :batch_pc.shape[1] // sub_sampling_ratio[i], :]

up_i = DP.knn_search(sub_points, batch_pc, 1)

input_points.append(batch_pc)

input_neighbors.append(neighbour_idx)

input_pools.append(pool_i)

input_up_samples.append(up_i)

batch_pc = sub_points

flat_inputs = input_points + input_neighbors + input_pools + input_up_samples

flat_inputs += [features, batch_label, batch_pc_idx, batch_cloud_idx]

batch_data, inputs = {}, {}

batch_data['xyz'] = []

for tmp in flat_inputs[:num_layers]:

batch_data['xyz'].append(torch.from_numpy(tmp).float())

inputs['xyz.1'] = flat_inputs[:num_layers][0].astype(np.float32)

inputs['xyz.2'] = flat_inputs[:num_layers][1].astype(np.float32)

inputs['xyz.3'] = flat_inputs[:num_layers][2].astype(np.float32)

inputs['xyz'] = flat_inputs[:num_layers][3].astype(np.float32)

batch_data['neigh_idx'] = []

for tmp in flat_inputs[num_layers: 2 * num_layers]:

batch_data['neigh_idx'].append(torch.from_numpy(tmp).long())

inputs['neigh_idx.1'] = flat_inputs[num_layers: 2 * num_layers][0].astype(np.int64)

inputs['neigh_idx.2'] = flat_inputs[num_layers: 2 * num_layers][1].astype(np.int64)

inputs['neigh_idx.3'] = flat_inputs[num_layers: 2 * num_layers][2].astype(np.int64)

inputs['neigh_idx'] = flat_inputs[num_layers: 2 * num_layers][3].astype(np.int64)

batch_data['sub_idx'] = []

for tmp in flat_inputs[2 * num_layers:3 * num_layers]:

batch_data['sub_idx'].append(torch.from_numpy(tmp).long())

inputs['8'] = flat_inputs[2 * num_layers:3 * num_layers][0].astype(np.int64)

inputs['9'] = flat_inputs[2 * num_layers:3 * num_layers][1].astype(np.int64)

inputs['10'] = flat_inputs[2 * num_layers:3 * num_layers][2].astype(np.int64)

inputs['11'] = flat_inputs[2 * num_layers:3 * num_layers][3].astype(np.int64)

batch_data['interp_idx'] = []

for tmp in flat_inputs[3 * num_layers:4 * num_layers]:

batch_data['interp_idx'].append(torch.from_numpy(tmp).long())

inputs['12'] = flat_inputs[3 * num_layers:4 * num_layers][0].astype(np.int64)

inputs['13'] = flat_inputs[3 * num_layers:4 * num_layers][1].astype(np.int64)

inputs['14'] = flat_inputs[3 * num_layers:4 * num_layers][2].astype(np.int64)

inputs['15'] = flat_inputs[3 * num_layers:4 * num_layers][3].astype(np.int64)

batch_data['features'] = torch.from_numpy(flat_inputs[4 * num_layers]).transpose(1, 2).float()

inputs['input.1'] = np.swapaxes(flat_inputs[4 * num_layers], 1, 2).astype(np.float32)

batch_data['labels'] = torch.from_numpy(flat_inputs[4 * num_layers + 1]).long()

inputs['17'] = flat_inputs[4 * num_layers + 1].astype(np.int64)

input_inds = flat_inputs[4 * num_layers + 2]

cloud_inds = flat_inputs[4 * num_layers + 3]

for key in batch_data:

if type(batch_data[key]) is list:

for i in range(num_layers):

batch_data[key][i] = batch_data[key][i]

else:

batch_data[key] = batch_data[key]

end_points = net(batch_data)

outputs = onnx_session.run(None, inputs)

end_points['logits'] = end_points['logits'].transpose(1, 2).cpu().numpy()

for j in range(end_points['logits'].shape[0]):

probs = end_points['logits'][j]

inds = input_inds[j]

c_i = cloud_inds[j][0]

test_probs[c_i][inds] = test_smooth * test_probs[c_i][inds] + (1 - test_smooth) * probs #19 (45056, 19)

for j in range(len(test_probs)):

pred = np.argmax(test_probs[j], 1).astype(np.uint32) + 1

output = np.concatenate((points, pred.reshape(-1, 1)), axis=1)

np.savetxt('./result/output.txt', output)

C++代码:

#include <iostream>

#include <fstream>

#include <vector>

#include <algorithm>

#include <pcl/io/pcd_io.h>

#include <pcl/point_types.h>

#include <pcl/search/kdtree.h>

#include <pcl/common/distances.h>

#include <onnxruntime_cxx_api.h>

#include "knn_.h"

const int k_n = 16;

const int num_classes = 19;

const int num_points = 4096 * 11;

const int num_layers = 4;

const float test_smooth = 0.98;

std::vector<std::vector<long>> knn_search(pcl::PointCloud<pcl::PointXYZ>::Ptr& support_pts, pcl::PointCloud<pcl::PointXYZ>::Ptr& query_pts, int k)

{

float* points = new float[support_pts->size() * 3];

for (size_t i = 0; i < support_pts->size(); i++)

{

points[3 * i + 0] = support_pts->points[i].x;

points[3 * i + 1] = support_pts->points[i].y;

points[3 * i + 2] = support_pts->points[i].z;

}

float* queries = new float[query_pts->size() * 3];

for (size_t i = 0; i < query_pts->size(); i++)

{

queries[3 * i + 0] = query_pts->points[i].x;

queries[3 * i + 1] = query_pts->points[i].y;

queries[3 * i + 2] = query_pts->points[i].z;

}

long* indices = new long[query_pts->size() * k];

cpp_knn_omp(points, support_pts->size(), 3, queries, query_pts->size(), k, indices);

std::vector<std::vector<long>> neighbour_idx(query_pts->size(), std::vector<long>(k));

for (size_t i = 0; i < query_pts->size(); i++)

{

for (size_t j = 0; j < k; j++)

{

neighbour_idx[i][j] = indices[k * i + j];

}

}

return neighbour_idx;

}

int main()

{

Ort::Env env(ORT_LOGGING_LEVEL_WARNING, "randla-net");

Ort::SessionOptions session_options;

session_options.SetIntraOpNumThreads(1);

session_options.SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_EXTENDED);

const wchar_t* model_path = L"randla-net.onnx";

Ort::Session session(env, model_path, session_options);

Ort::AllocatorWithDefaultOptions allocator;

std::vector<const char*> input_node_names;

for (size_t i = 0; i < session.GetInputCount(); i++)

{

input_node_names.push_back(session.GetInputName(i, allocator));

}

std::vector<const char*> output_node_names;

for (size_t i = 0; i < session.GetOutputCount(); i++)

{

output_node_names.push_back(session.GetOutputName(i, allocator));

}

float x, y, z;

pcl::PointCloud<pcl::PointXYZ>::Ptr points(new pcl::PointCloud<pcl::PointXYZ>);

std::ifstream infile_points("000000.txt");

while (infile_points >> x >> y >> z)

{

points->push_back(pcl::PointXYZ(x, y, z));

}

std::vector<float> possibility(points->size(), 0);

std::vector<float> min_possibility = { 0 };

std::vector<std::vector<float>> test_probs(points->size(), std::vector<float>(num_classes, 0));

pcl::PointCloud<pcl::PointXYZ>::Ptr pc(new pcl::PointCloud<pcl::PointXYZ>);

std::ifstream infile_pc("000000.pkl", std::ios::binary);

while (infile_pc >> x >> y >> z)

{

pc->push_back(pcl::PointXYZ(x, y, z));

}

std::vector<float> labels(pc->size(), 0);

while (*std::min_element(min_possibility.begin(), min_possibility.end()) < 0.5)

{

int cloud_ind = std::min_element(min_possibility.begin(), min_possibility.end()) - min_possibility.begin();

int pick_idx = std::min_element(possibility.begin(), possibility.end()) - possibility.begin();

pcl::PointXYZ center_point = pc->points[pick_idx];

pcl::search::KdTree<pcl::PointXYZ>::Ptr kdtree(new pcl::search::KdTree<pcl::PointXYZ>);

kdtree->setInputCloud(pc);

std::vector<int> selected_idx(num_points);

std::vector<float> distances(num_points);

kdtree->nearestKSearch(center_point, num_points, selected_idx, distances);

pcl::PointCloud<pcl::PointXYZ>::Ptr selected_pc(new pcl::PointCloud<pcl::PointXYZ>);

pcl::copyPointCloud(*pc, selected_idx, *selected_pc);

std::vector<float> selected_labels(num_points);

for (size_t i = 0; i < num_points; i++)

{

selected_labels[i] = labels[selected_idx[i]];

}

std::vector<float> dists(num_points);

for (size_t i = 0; i < num_points; i++)

{

dists[i] = pcl::squaredEuclideanDistance(selected_pc->points[i], pc->points[pick_idx]);

}

float max_dists = *std::max_element(dists.begin(), dists.end());

std::vector<float> delta(num_points);

for (size_t i = 0; i < num_points; i++)

{

delta[i] = pow(1 - dists[i] / max_dists, 2);

possibility[selected_idx[i]] += delta[i];

}

min_possibility[cloud_ind] = *std::min_element(possibility.begin(), possibility.end());

pcl::PointCloud<pcl::PointXYZ>::Ptr features(new pcl::PointCloud<pcl::PointXYZ>);

pcl::copyPointCloud(*selected_pc, *features);

std::vector<pcl::PointCloud<pcl::PointXYZ>::Ptr> input_points;

std::vector<std::vector<std::vector<long>>> input_neighbors, input_pools, input_up_samples;

for (size_t i = 0; i < num_layers; i++)

{

std::vector<std::vector<long>> neighbour_idx = knn_search(selected_pc, selected_pc, k_n);

pcl::PointCloud<pcl::PointXYZ>::Ptr sub_points(new pcl::PointCloud<pcl::PointXYZ>);

std::vector<int> index(selected_pc->size() / 4);

std::iota(index.begin(), index.end(), 0);

pcl::copyPointCloud(*selected_pc, index, *sub_points);

std::vector<std::vector<long>> pool_i(selected_pc->size() / 4);

std::copy(neighbour_idx.begin(), neighbour_idx.begin() + selected_pc->size() / 4, pool_i.begin());

std::vector<std::vector<long>> up_i = knn_search(sub_points, selected_pc, 1);

input_points.push_back(selected_pc);

input_neighbors.push_back(neighbour_idx);

input_pools.push_back(pool_i);

input_up_samples.push_back(up_i);

selected_pc = sub_points;

}

const size_t xyz1_size = 1 * input_points[0]->size() * 3;

std::vector<float> xyz1_values(xyz1_size);

for (size_t i = 0; i < input_points[0]->size(); i++)

{

xyz1_values[3 * i + 0] = input_points[0]->points[i].x;

xyz1_values[3 * i + 1] = input_points[0]->points[i].y;

xyz1_values[3 * i + 2] = input_points[0]->points[i].z;

}

std::vector<int64_t> xyz1_dims = { 1, (int64_t)input_points[0]->size(), 3 };

auto xyz1_memory = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value xyz1_tensor = Ort::Value::CreateTensor<float>(xyz1_memory, xyz1_values.data(), xyz1_size, xyz1_dims.data(), xyz1_dims.size());

const size_t xyz2_size = 1 * input_points[1]->size() * 3;

std::vector<float> xyz2_values(xyz2_size);

for (size_t i = 0; i < input_points[1]->size(); i++)

{

xyz2_values[3 * i + 0] = input_points[1]->points[i].x;

xyz2_values[3 * i + 1] = input_points[1]->points[i].y;

xyz2_values[3 * i + 2] = input_points[1]->points[i].z;

}

std::vector<int64_t> xyz2_dims = { 1, (int64_t)input_points[1]->size(), 3 };

auto xyz2_memory = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value xyz2_tensor = Ort::Value::CreateTensor<float>(xyz2_memory, xyz2_values.data(), xyz2_size, xyz2_dims.data(), xyz2_dims.size());

const size_t xyz3_size = 1 * input_points[2]->size() * 3;

std::vector<float> xyz3_values(xyz3_size);

for (size_t i = 0; i < input_points[2]->size(); i++)

{

xyz3_values[3 * i + 0] = input_points[2]->points[i].x;

xyz3_values[3 * i + 1] = input_points[2]->points[i].y;

xyz3_values[3 * i + 2] = input_points[2]->points[i].z;

}

std::vector<int64_t> xyz3_dims = { 1, (int64_t)input_points[2]->size(), 3 };

auto xyz3_memory = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value xyz3_tensor = Ort::Value::CreateTensor<float>(xyz3_memory, xyz3_values.data(), xyz3_size, xyz3_dims.data(), xyz3_dims.size());

const size_t xyz_size = 1 * input_points[3]->size() * 3;

std::vector<float> xyz_values(xyz_size);

for (size_t i = 0; i < input_points[3]->size(); i++)

{

xyz_values[3 * i + 0] = input_points[3]->points[i].x;

xyz_values[3 * i + 1] = input_points[3]->points[i].y;

xyz_values[3 * i + 2] = input_points[3]->points[i].z;

}

std::vector<int64_t> xyz_dims = { 1, (int64_t)input_points[3]->size(), 3 };

auto xyz_memory = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value xyz_tensor = Ort::Value::CreateTensor<float>(xyz_memory, xyz_values.data(), xyz_size, xyz_dims.data(), xyz_dims.size());

const size_t neigh_idx1_size = 1 * input_neighbors[0].size() * 16;

std::vector<int64_t> neigh_idx1_values(neigh_idx1_size);

for (size_t i = 0; i < input_neighbors[0].size(); i++)

{

for (size_t j = 0; j < 16; j++)

{

neigh_idx1_values[16 * i + j] = input_neighbors[0][i][j];

}

}

std::vector<int64_t> neigh_idx1_dims = { 1, (int64_t)input_neighbors[0].size(), 16 };

auto neigh_idx1_memory = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value neigh_idx1_tensor = Ort::Value::CreateTensor<int64_t>(neigh_idx1_memory, neigh_idx1_values.data(), neigh_idx1_size, neigh_idx1_dims.data(), neigh_idx1_dims.size());

const size_t neigh_idx2_size = 1 * input_neighbors[1].size() * 16;

std::vector<int64_t> neigh_idx2_values(neigh_idx2_size);

for (size_t i = 0; i < input_neighbors[1].size(); i++)

{

for (size_t j = 0; j < 16; j++)

{

neigh_idx2_values[16 * i + j] = input_neighbors[1][i][j];

}

}

std::vector<int64_t> neigh_idx2_dims = { 1, (int64_t)input_neighbors[1].size(), 16 };

auto neigh_idx2_memory = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value neigh_idx2_tensor = Ort::Value::CreateTensor<int64_t>(neigh_idx2_memory, neigh_idx2_values.data(), neigh_idx2_size, neigh_idx2_dims.data(), neigh_idx2_dims.size());

const size_t neigh_idx3_size = 1 * input_neighbors[2].size() * 16;

std::vector<int64_t> neigh_idx3_values(neigh_idx3_size);

for (size_t i = 0; i < input_neighbors[2].size(); i++)

{

for (size_t j = 0; j < 16; j++)

{

neigh_idx3_values[16 * i + j] = input_neighbors[2][i][j];

}

}

std::vector<int64_t> neigh_idx3_dims = { 1, (int64_t)input_neighbors[2].size(), 16 };

auto neigh_idx3_memory = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value neigh_idx3_tensor = Ort::Value::CreateTensor<int64_t>(neigh_idx3_memory, neigh_idx3_values.data(), neigh_idx3_size, neigh_idx3_dims.data(), neigh_idx3_dims.size());

const size_t neigh_idx_size = 1 * input_neighbors[3].size() * 16;

std::vector<int64_t> neigh_idx_values(neigh_idx_size);

for (size_t i = 0; i < input_neighbors[3].size(); i++)

{

for (size_t j = 0; j < 16; j++)

{

neigh_idx_values[16 * i + j] = input_neighbors[3][i][j];

}

}

std::vector<int64_t> neigh_idx_dims = { 1, (int64_t)input_neighbors[3].size(), 16 };

auto neigh_idx_memory = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value neigh_idx_tensor = Ort::Value::CreateTensor<int64_t>(neigh_idx_memory, neigh_idx_values.data(), neigh_idx_size, neigh_idx_dims.data(), neigh_idx_dims.size());

const size_t sub_idx8_size = 1 * input_pools[0].size() * 16;

std::vector<int64_t> sub_idx8_values(sub_idx8_size);

for (size_t i = 0; i < input_pools[0].size(); i++)

{

for (size_t j = 0; j < 16; j++)

{

sub_idx8_values[16 * i + j] = input_pools[0][i][j];

}

}

std::vector<int64_t> sub_idx8_dims = { 1, (int64_t)input_pools[0].size(), 16 };

auto sub_idx8_memory = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value sub_idx8_tensor = Ort::Value::CreateTensor<int64_t>(sub_idx8_memory, sub_idx8_values.data(), sub_idx8_size, sub_idx8_dims.data(), sub_idx8_dims.size());

const size_t sub_idx9_size = 1 * input_pools[1].size() * 16;

std::vector<int64_t> sub_idx9_values(sub_idx9_size);

for (size_t i = 0; i < input_pools[1].size(); i++)

{

for (size_t j = 0; j < 16; j++)

{

sub_idx9_values[16 * i + j] = input_pools[1][i][j];

}

}

std::vector<int64_t> sub_idx9_dims = { 1, (int64_t)input_pools[1].size(), 16 };

auto sub_idx9_memory = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value sub_idx9_tensor = Ort::Value::CreateTensor<int64_t>(sub_idx9_memory, sub_idx9_values.data(), sub_idx9_size, sub_idx9_dims.data(), sub_idx9_dims.size());

const size_t sub_idx10_size = 1 * input_pools[2].size() * 16;

std::vector<int64_t> sub_idx10_values(sub_idx10_size);

for (size_t i = 0; i < input_pools[2].size(); i++)

{

for (size_t j = 0; j < 16; j++)

{

sub_idx10_values[16 * i + j] = input_pools[2][i][j];

}

}

std::vector<int64_t> sub_idx10_dims = { 1, (int64_t)input_pools[2].size(), 16 };

auto sub_idx10_memory = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value sub_idx10_tensor = Ort::Value::CreateTensor<int64_t>(sub_idx10_memory, sub_idx10_values.data(), sub_idx10_size, sub_idx10_dims.data(), sub_idx10_dims.size());

const size_t sub_idx11_size = 1 * input_pools[3].size() * 16;

std::vector<int64_t> sub_idx11_values(sub_idx11_size);

for (size_t i = 0; i < input_pools[3].size(); i++)

{

for (size_t j = 0; j < 16; j++)

{

sub_idx11_values[16 * i + j] = input_pools[3][i][j];

}

}

std::vector<int64_t> sub_idx11_dims = { 1, (int64_t)input_pools[3].size(), 16 };

auto sub_idx11_memory = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value sub_idx11_tensor = Ort::Value::CreateTensor<int64_t>(sub_idx11_memory, sub_idx11_values.data(), sub_idx11_size, sub_idx11_dims.data(), sub_idx11_dims.size());

const size_t interp_idx12_size = 1 * input_up_samples[0].size() * 1;

std::vector<int64_t> interp_idx12_values(interp_idx12_size);

for (size_t i = 0; i < input_up_samples[0].size(); i++)

{

interp_idx12_values[i] = input_up_samples[0][i][0];

}

std::vector<int64_t> interp_idx12_dims = { 1, (int64_t)input_up_samples[0].size(), 1 };

auto interp_idx12_memory = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value interp_idx12_tensor = Ort::Value::CreateTensor<int64_t>(interp_idx12_memory, interp_idx12_values.data(), interp_idx12_size, interp_idx12_dims.data(), interp_idx12_dims.size());

const size_t interp_idx13_size = 1 * input_up_samples[1].size() * 1;

std::vector<int64_t> interp_idx13_values(interp_idx13_size);

for (size_t i = 0; i < input_up_samples[1].size(); i++)

{

interp_idx13_values[i] = input_up_samples[1][i][0];

}

std::vector<int64_t> interp_idx13_dims = { 1, (int64_t)input_up_samples[1].size(), 1 };

auto interp_idx13_memory = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value interp_idx13_tensor = Ort::Value::CreateTensor<int64_t>(interp_idx13_memory, interp_idx13_values.data(), interp_idx13_size, interp_idx13_dims.data(), interp_idx13_dims.size());

const size_t interp_idx14_size = 1 * input_up_samples[2].size() * 1;

std::vector<int64_t> interp_idx14_values(interp_idx14_size);

for (size_t i = 0; i < input_up_samples[2].size(); i++)

{

interp_idx14_values[i] = input_up_samples[2][i][0];

}

std::vector<int64_t> interp_idx14_dims = { 1, (int64_t)input_up_samples[2].size(), 1 };

auto interp_idx14_memory = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value interp_idx14_tensor = Ort::Value::CreateTensor<int64_t>(interp_idx14_memory, interp_idx14_values.data(), interp_idx14_size, interp_idx14_dims.data(), interp_idx14_dims.size());

const size_t interp_idx15_size = 1 * input_up_samples[3].size() * 1;

std::vector<int64_t> interp_idx15_values(interp_idx15_size);

for (size_t i = 0; i < input_up_samples[3].size(); i++)

{

interp_idx15_values[i] = input_up_samples[3][i][0];

}

std::vector<int64_t> interp_idx15_dims = { 1, (int64_t)input_up_samples[3].size(), 1 };

auto interp_idx15_memory = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value interp_idx15_tensor = Ort::Value::CreateTensor<int64_t>(interp_idx15_memory, interp_idx15_values.data(), interp_idx15_size, interp_idx15_dims.data(), interp_idx15_dims.size());

const size_t features_size = 1 * 3 * features->size();

std::vector<float> features_values(features_size);

for (size_t i = 0; i < features->size(); i++)

{

features_values[features->size() * 0 + i] = features->points[i].x;

features_values[features->size() * 1 + i] = features->points[i].y;

features_values[features->size() * 2 + i] = features->points[i].z;

}

std::vector<int64_t> features_dims = { 1, 3, (int64_t)features->size() };

auto features_memory = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value features_tensor = Ort::Value::CreateTensor<float>(features_memory, features_values.data(), features_size, features_dims.data(), features_dims.size());

const size_t labels_size = 1 * selected_labels.size();

std::vector<int64_t> labels_values(labels_size);

for (size_t i = 0; i < selected_labels.size(); i++)

{

labels_values[i] = selected_labels[i];

}

std::vector<int64_t> labels_dims = { 1, (int64_t)selected_labels.size() };

auto labels_memory = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value labels_tensor = Ort::Value::CreateTensor<int64_t>(labels_memory, labels_values.data(), labels_size, labels_dims.data(), labels_dims.size());

std::vector<Ort::Value> inputs;

inputs.push_back(std::move(xyz1_tensor));

inputs.push_back(std::move(xyz2_tensor));

inputs.push_back(std::move(xyz3_tensor));

inputs.push_back(std::move(xyz_tensor));

inputs.push_back(std::move(neigh_idx1_tensor));

inputs.push_back(std::move(neigh_idx2_tensor));

inputs.push_back(std::move(neigh_idx3_tensor));

inputs.push_back(std::move(neigh_idx_tensor));

inputs.push_back(std::move(sub_idx8_tensor));

inputs.push_back(std::move(sub_idx9_tensor));

inputs.push_back(std::move(sub_idx10_tensor));

inputs.push_back(std::move(sub_idx11_tensor));

inputs.push_back(std::move(interp_idx12_tensor));

inputs.push_back(std::move(interp_idx13_tensor));

inputs.push_back(std::move(interp_idx14_tensor));

inputs.push_back(std::move(interp_idx15_tensor));

inputs.push_back(std::move(features_tensor));

inputs.push_back(std::move(labels_tensor));

std::vector<Ort::Value> outputs = session.Run(Ort::RunOptions{ nullptr }, input_node_names.data(), inputs.data(), input_node_names.size(), output_node_names.data(), output_node_names.size());

const float* output = outputs[18].GetTensorData<float>();

std::vector<int64_t> output_dims = outputs[18].GetTensorTypeAndShapeInfo().GetShape(); //1*19*45056

size_t count = outputs[18].GetTensorTypeAndShapeInfo().GetElementCount();

std::vector<float> pred(output, output + count);

std::vector<std::vector<float>> probs(output_dims[2], std::vector<float>(output_dims[1])); //45056*19

for (size_t i = 0; i < output_dims[2]; i++)

{

for (size_t j = 0; j < output_dims[1]; j++)

{

probs[i][j] = pred[j * output_dims[2] + i];

}

}

std::vector<int> inds = selected_idx;

int c_i = cloud_ind;

for (size_t i = 0; i < inds.size(); i++)

{

for (size_t j = 0; j < num_classes; j++)

{

test_probs[inds[i]][j] = test_smooth * test_probs[inds[i]][j] + (1 - test_smooth) * probs[i][j];

}

}

}

std::vector<int> pred(test_probs.size());

std::fstream output("output.txt", 'w');

for (size_t i = 0; i < test_probs.size(); i++)

{

pred[i] = max_element(test_probs[i].begin(), test_probs[i].end()) - test_probs[i].begin() + 1;

output << points->points[i].x << " " << points->points[i].y << " " << points->points[i].z << " " << pred[i] << std::endl;

}

return 0;

}

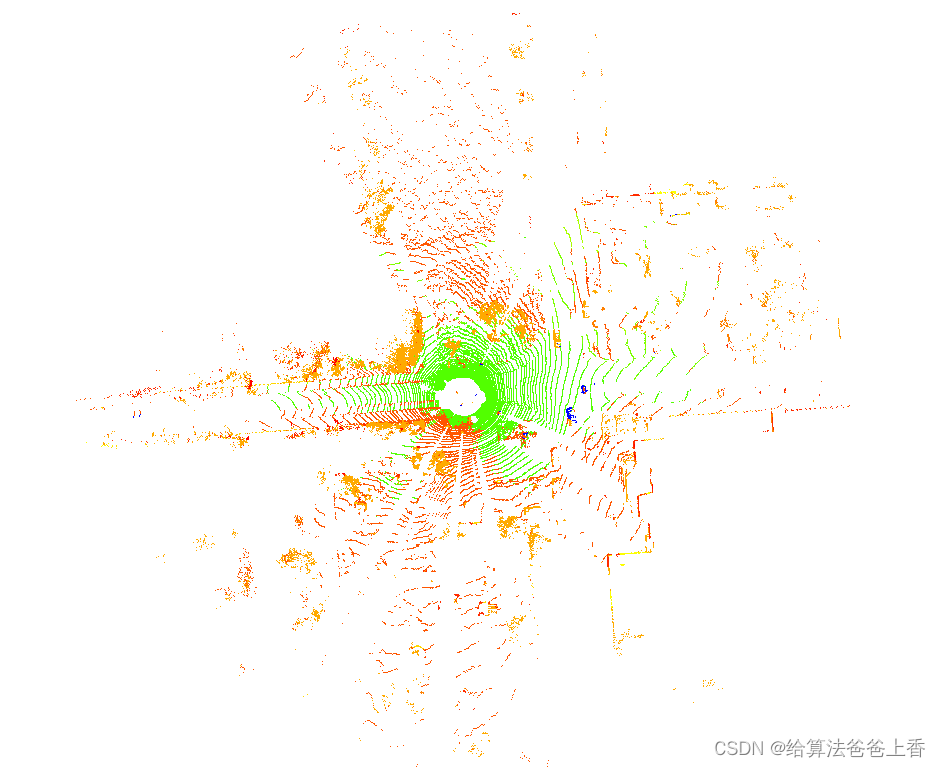

预测结果:

文章来源:https://blog.csdn.net/taifyang/article/details/135573838

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!