How SD WebUI Extensions Work (Calling AnimateDiff & ControlNet via the API)

I. Preface

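This investigation started because AnimateDiff video-to-video (vid2vid) generation fails when it is invoked through the API. To find out why, I read the extensions' source code and the mechanism SD WebUI uses to run extensions.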

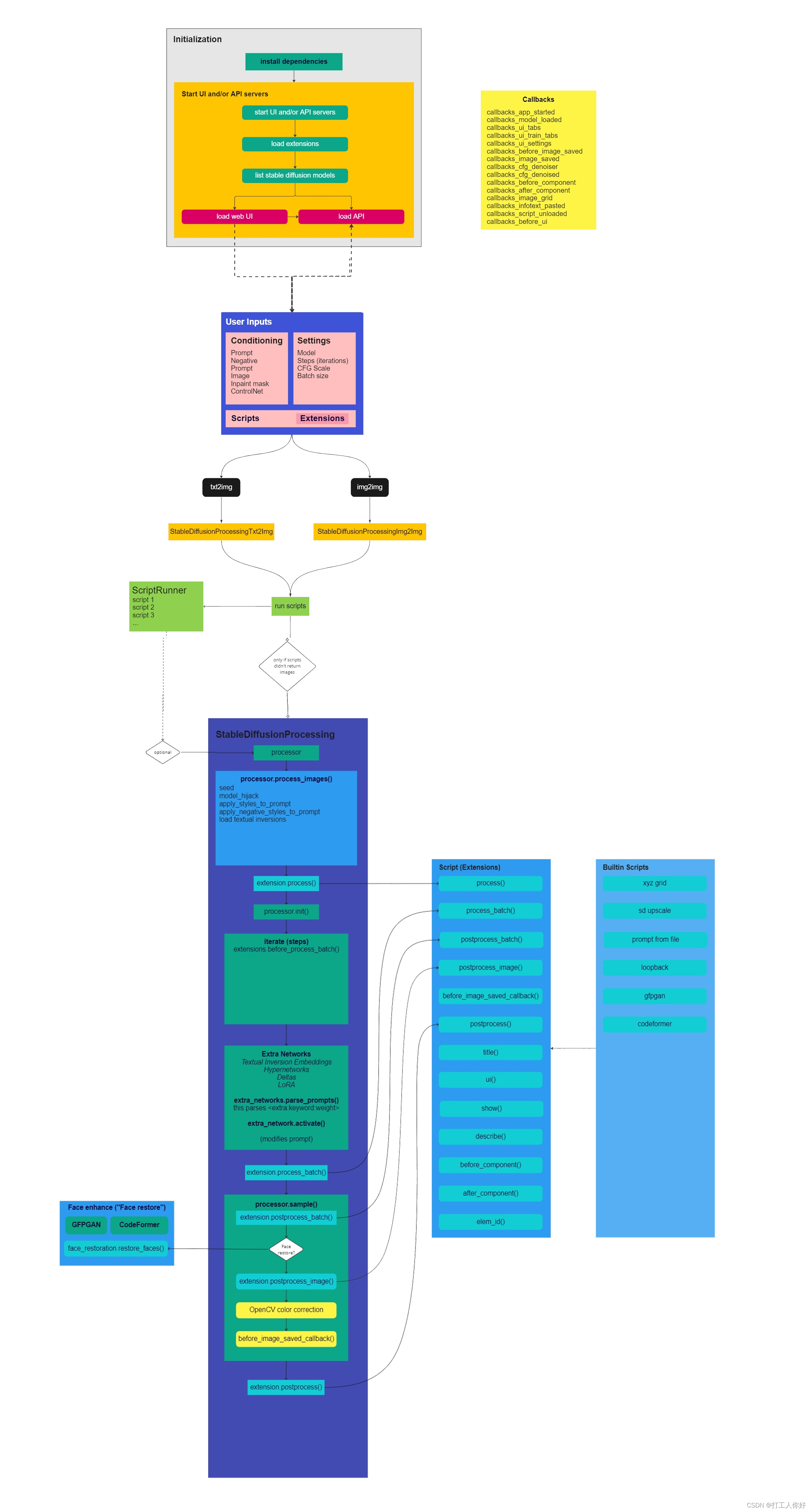

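The figure above shows the main flow, which involves a few key classes: StableDiffusionProcessing (txt2img or img2img), ScriptRunner, and Script. It gives a high-level view of how extensions are invoked from start to finish. The rest of this article explains how SD WebUI extensions work from two angles, implementing your own extension and having one extension call another, using concrete examples.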
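To make this division of labor concrete, here is a minimal, self-contained sketch of how a ScriptRunner-like object could forward hook calls to every always-on Script, handing each script only its own slice of p.script_args. All classes here are simplified stand-ins written for illustration, not the WebUI's real modules.scripts or modules.processing code; only three hook points are modeled, and "sampling" is a placeholder string.

```python
from dataclasses import dataclass, field


class Script:
    """Hypothetical stand-in for modules.scripts.Script."""
    args_from = 0
    args_to = 0

    def before_process(self, p, *args): pass
    def process(self, p, *args): pass
    def postprocess(self, p, processed, *args): pass


@dataclass
class Processing:
    """Stand-in for StableDiffusionProcessing: only the fields used here."""
    prompt: str
    script_args: list = field(default_factory=list)
    log: list = field(default_factory=list)


class ScriptRunner:
    """Simplified runner: each hook is forwarded to every always-on script
    together with that script's own slice of p.script_args."""

    def __init__(self, scripts):
        self.alwayson_scripts = scripts

    def _call(self, hook, p, *extra):
        for s in self.alwayson_scripts:
            args = p.script_args[s.args_from:s.args_to]
            getattr(s, hook)(p, *extra, *args)

    def before_process(self, p): self._call("before_process", p)
    def process(self, p): self._call("process", p)
    def postprocess(self, p, processed): self._call("postprocess", p, processed)


def process_images(p, runner):
    """Toy pipeline showing where the hooks fire relative to sampling."""
    runner.before_process(p)
    runner.process(p)
    processed = f"image for: {p.prompt}"  # real sampling would happen here
    runner.postprocess(p, processed)
    return processed


class PromptSuffix(Script):
    """Example script whose two UI values are (enabled, suffix)."""
    def process(self, p, enabled, suffix):
        if enabled:
            p.prompt += suffix
            p.log.append("process")


s = PromptSuffix()
s.args_from, s.args_to = 0, 2
p = Processing(prompt="a cat", script_args=[True, ", masterpiece"])
result = process_images(p, ScriptRunner([s]))
print(result)
```

The point of the slice is that a script never needs to know about anyone else's arguments: it declares its parameters positionally in its own hook signature.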

II. Implementing Your Own Extension

The official wiki has a short page on how to develop extensions: Developing extensions.

That page describes the purpose of several special files:

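- install.py: executed automatically to install the extension's dependencies

- scripts directory: holds the extension's scripts, which are written the same way as ordinary custom scripts

- javascript directory: the .js files in it are loaded into the web page

- preload.py: loaded before the WebUI parses the command line, so it can add extension-specific command-line arguments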
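As a rough illustration of these conventions, the hypothetical helper below classifies the files of an extension directory by the role the list above assigns them. It is not the WebUI's loader; the function names and the returned dictionary layout are assumptions made only for this sketch.

```python
import tempfile
from pathlib import Path


def _files(directory, suffix):
    """Sorted names of files with the given suffix, or [] if the directory is absent."""
    if not directory.is_dir():
        return []
    return sorted(f.name for f in directory.iterdir() if f.suffix == suffix)


def classify_extension(ext_dir):
    """Hypothetical helper: report which special files an extension provides."""
    root = Path(ext_dir)
    return {
        "install": (root / "install.py").is_file(),        # run to install dependencies
        "preload": (root / "preload.py").is_file(),        # run before argv is parsed
        "scripts": _files(root / "scripts", ".py"),        # Script subclasses
        "javascript": _files(root / "javascript", ".js"),  # injected into the page
    }


with tempfile.TemporaryDirectory() as tmp:
    ext = Path(tmp, "my-extension")
    (ext / "scripts").mkdir(parents=True)
    (ext / "install.py").write_text("")
    (ext / "scripts" / "my_script.py").write_text("")
    layout = classify_extension(ext)

print(layout)
```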

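The most important step in implementing an SD extension is subclassing the "class Script" base class in "modules\scripts.py", shown below. As the figure in the preface shows, an extension is invoked mainly at six points during generation. The Script base class declares the ways a custom extension can influence image generation. Beyond that, it also declares attributes that describe the extension itself, such as the starting position of its arguments, its name, and its UI.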

    class Script:
        name = None
        """
        script's internal name derived from title
        """

        def title(self):
            """
            this function should return the title of the script. This is what will be displayed in the dropdown menu.
            """
            raise NotImplementedError()

        def ui(self, is_img2img):
            """
            this function should create gradio UI elements. See https://gradio.app/docs/#components
            The return value should be an array of all components that are used in processing.
            Values of those returned components will be passed to run() and process() functions.
            """
            pass

        def before_process(self, p, *args):
            """
            This function is called very early during processing begins for AlwaysVisible scripts.
            You can modify the processing object (p) here, inject hooks, etc.
            args contains all values returned by components from ui()
            """
            pass

        def process(self, p, *args):
            """
            This function is called before processing begins for AlwaysVisible scripts.
            You can modify the processing object (p) here, inject hooks, etc.
            args contains all values returned by components from ui()
            """
            pass

        # ... remaining attributes and methods omitted; see the source for the full list
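Putting the pieces of the base class together, a minimal always-on extension needs little more than title(), show(), ui(), and one processing hook. The sketch below is hypothetical: it defines tiny stand-ins for modules.scripts.Script and AlwaysVisible so it runs outside the WebUI, and ui() returns plain default values where a real extension would return Gradio components. In a real extension the class would subclass scripts.Script and live in the extension's scripts directory.

```python
from types import SimpleNamespace

AlwaysVisible = object()  # stand-in for modules.scripts.AlwaysVisible


class Script:
    """Stand-in for modules.scripts.Script (only the methods used here)."""
    def title(self):
        raise NotImplementedError()

    def show(self, is_img2img):
        return True

    def ui(self, is_img2img):
        pass

    def process(self, p, *args):
        pass


class CfgClamp(Script):
    """Hypothetical extension: caps cfg_scale when enabled."""

    def title(self):
        return "CFG Clamp"

    def show(self, is_img2img):
        # AlwaysVisible scripts run on every generation instead of being
        # picked from the Script dropdown
        return AlwaysVisible

    def ui(self, is_img2img):
        # A real extension would build gradio components here, e.g. a
        # checkbox and a slider, and return them; defaults stand in for them.
        return [False, 7.0]

    def process(self, p, enabled, limit):
        # Values arrive in the same order ui() returned its components
        if enabled and p.cfg_scale > limit:
            p.cfg_scale = limit


p = SimpleNamespace(cfg_scale=12.0)
CfgClamp().process(p, True, 7.0)
print(p.cfg_scale)
```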

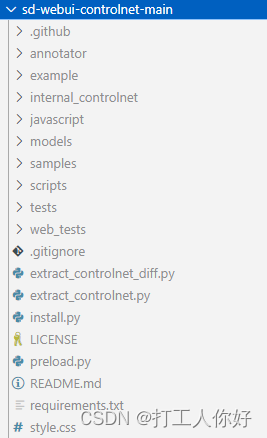

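Let's take ControlNet as an example and see how this hugely popular extension is implemented. Its directory structure is shown in the figure; it contains the install.py, preload.py, and scripts directory described by the "rules" above.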

1. install.py

Checks whether the dependencies listed in requirements.txt are installed, and calls launch.run_pip to install any that are missing.
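The same idea can be sketched with the standard library alone: read the requirement lines, check whether each distribution is installed with importlib.metadata, and install the missing ones by running pip with the current interpreter, which is roughly what launch.run_pip does. The function names are hypothetical, version specifiers and pip options are deliberately ignored, and this is not ControlNet's actual install.py.

```python
import re
import subprocess
import sys
from importlib import metadata


def missing_requirements(lines):
    """Return requirement lines whose distribution is not installed.
    Only the package name is checked; version specifiers are ignored."""
    missing = []
    for line in lines:
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        name = re.split(r"[<>=!~\[;\s]", line, maxsplit=1)[0]
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(line)
    return missing


def pip_install(requirement):
    """Install one requirement with the interpreter running this script."""
    subprocess.check_call([sys.executable, "-m", "pip", "install", requirement])


def install_missing(requirements_path="requirements.txt"):
    with open(requirements_path, encoding="utf-8") as f:
        for requirement in missing_requirements(f):
            pip_install(requirement)
```

Calling install_missing() from the extension's install.py would then install only what is absent, so repeated WebUI launches stay fast.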

2. preload.py

Accepts the following five command-line arguments:

- "--controlnet-dir"

- "--controlnet-annotator-models-path"

- "--no-half-controlnet"

- "--controlnet-preprocessor-cache-size"

- "--controlnet-loglevel"
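A WebUI extension's preload.py exposes a preload(parser) function that receives the WebUI's argparse.ArgumentParser before the command line is parsed. The sketch below shows how the five flags above could be registered; the help texts, types, defaults, and log-level choices are assumptions for illustration, not copied from ControlNet's preload.py.

```python
import argparse


def preload(parser: argparse.ArgumentParser) -> None:
    """Sketch of a preload.py hook registering the five ControlNet flags.
    Types and defaults are assumptions, not the extension's real values."""
    parser.add_argument("--controlnet-dir", type=str, default=None,
                        help="extra directory to search for ControlNet models")
    parser.add_argument("--controlnet-annotator-models-path", type=str, default=None,
                        help="directory holding preprocessor (annotator) models")
    parser.add_argument("--no-half-controlnet", action="store_true",
                        help="do not convert ControlNet weights to fp16")
    parser.add_argument("--controlnet-preprocessor-cache-size", type=int, default=16,
                        help="number of preprocessor results to cache")
    parser.add_argument("--controlnet-loglevel", default="INFO",
                        choices=["DEBUG", "INFO", "WARNING", "ERROR", "CRITICAL"],
                        help="log level for the extension")


parser = argparse.ArgumentParser()
preload(parser)  # the WebUI calls this before it parses sys.argv
args = parser.parse_args(["--no-half-controlnet", "--controlnet-loglevel", "DEBUG"])
print(args.no_half_controlnet, args.controlnet_loglevel)
```

argparse turns the dashes into underscores, so the rest of the extension reads these values as attributes such as no_half_controlnet.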

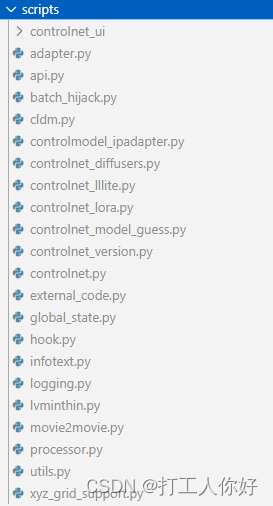

3. The scripts directory

Holds the extension's scripts, plus some modifications to existing scripts.

A few examples:

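- api.py: registers routes and provides ControlNet's HTTP API

- batch_hijack.py: handles the logic for batch generation

- external_code.py: the interface used when ControlNet is called from outside, for example by other extensions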

4. ControlNet's UI implementation

The UI is defined as follows:

    # ui() is the UI implementation: every control in the panel is defined here
    def ui(self, is_img2img):
        """this function should create gradio UI elements. See https://gradio.app/docs/#components
        The return value should be an array of all components that are used in processing.
        Values of those returned components will be passed to run() and process() functions.
        """
        infotext = Infotext()
        controls = ()
        max_models = shared.opts.data.get("control_net_unit_count", 3)
        elem_id_tabname = ("img2img" if is_img2img else "txt2img") + "_controlnet"

        with gr.Group(elem_id=elem_id_tabname):
            with gr.Accordion(f"ControlNet {controlnet_version.version_flag}", open=False, elem_id="controlnet"):
                if max_models > 1:
                    with gr.Tabs(elem_id=f"{elem_id_tabname}_tabs"):
                        for i in range(max_models):
                            with gr.Tab(f"ControlNet Unit {i}",
                                        elem_classes=['cnet-unit-tab']):
                                group, state = self.uigroup(f"ControlNet-{i}", is_img2img, elem_id_tabname)
                                infotext.register_unit(i, group)
                                controls += (state,)
                else:
                    with gr.Column():
                        group, state = self.uigroup("ControlNet", is_img2img, elem_id_tabname)
                        infotext.register_unit(0, group)
                        controls += (state,)

        if shared.opts.data.get("control_net_sync_field_args", True):
            self.infotext_fields = infotext.infotext_fields
            self.paste_field_names = infotext.paste_field_names

        return controls

In "extensions\sd-webui-controlnet-main\scripts\api.py" there is an on_app_started callback: when the application starts, the controlnet_api function is called to register ControlNet's API routes.

5. ControlNet's functional implementation

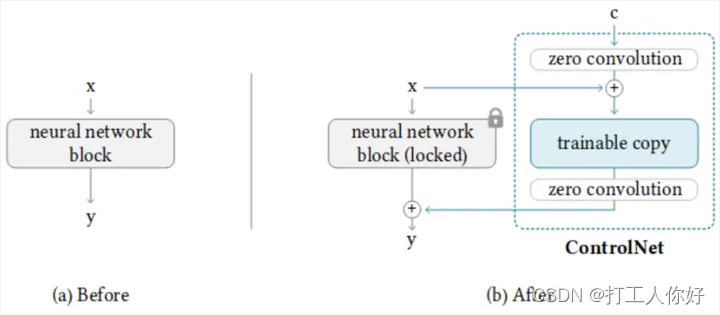

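The figure illustrates the principle of ControlNet: it adds a bypass branch alongside the UNet. So let's look at how the code implements this.

The key code is as follows:

UnetHook is a newly defined structure; it modifies the original network through latest_network.hook.

Inside latest_network.hook, the hook method first saves the original model's forward method (model._original_forward = model.forward) and then reassigns it to ControlNet's own implementation, forward_webui.__get__(model, UNetModel). From then on, the actual forward pass runs through ControlNet's own forward method.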

Code 1:

    self.latest_network = UnetHook(lowvram=is_low_vram)
    self.latest_network.hook(model=unet, sd_ldm=sd_ldm, control_params=forward_params, process=p)

Code 2:

    model._original_forward = model.forward
    outer.original_forward = model.forward
    model.forward = forward_webui.__get__(model, UNetModel)
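The trick in code 2 is plain Python method binding: a function's __get__(instance, owner) returns a bound method, so assigning it to model.forward makes every later model.forward(x) call run the replacement with self set to the UNet (types.MethodType(forward_webui, model) would be equivalent). The toy below demonstrates the save, replace, and restore steps on a stand-in UNetModel whose forward just doubles its input. The constant "control" term only imitates the extra branch; real ControlNet adds its outputs to the UNet's skip connections and middle block rather than to the final output.

```python
class UNetModel:
    """Toy stand-in for the real UNet: forward just doubles its input."""
    def forward(self, x):
        return 2 * x


def forward_webui(self, x):
    # `self` is the UNet instance thanks to the __get__ binding in hook()
    base = self._original_forward(x)  # run the untouched network
    control = 10                      # pretend residual from the bypass branch
    return base + control


def hook(model):
    model._original_forward = model.forward                    # keep the bound original
    model.forward = forward_webui.__get__(model, UNetModel)    # bind as a method


def restore(model):
    model.forward = model._original_forward
    del model._original_forward


unet = UNetModel()
hook(unet)
print(unet.forward(1))  # 2 * 1 + 10
restore(unet)
print(unet.forward(1))  # back to 2 * 1
```

Because the replacement is stored on the instance, other UNetModel objects are unaffected, and saving the original first is what makes it possible to undo the hook when ControlNet is disabled.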

III. Calling Extensions in API Mode

In the JSON request body, put each extension's parameters under "alwayson_scripts" as shown below. The parameters must be wrapped in "args".

    "alwayson_scripts": {
        "AnimateDiff": {
            "args": [
                {
                    "model": "mm_sd_v15_v2.ckpt",
                    "format": ["GIF"],
                    "enable": true,
                    "video_length": 94,
                    "fps": 29,
                    "loop_number": 0, ......
                }
            ]
        },
        "ControlNet": {
            "args": [
                {
                    "enabled": true,
                    "module": "canny",
                    "model": "control_v11p_sd15_canny [d14c016b]", ......
                },
                {
                    "enabled": true,
                    "module": "depth_midas",
                    "model": "control_v11f1p_sd15_depth [cfd03158]", ......
                }
            ]
        }
    }
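When scripting such requests, building the body in Python makes it harder to forget the "args" wrapper. The sketch below assembles an img2img body with the parameters shown above and can post it with the standard library. The prompt and init image are placeholders, fields elided with "......" above are left out rather than guessed, and build_payload and post are hypothetical helper names.

```python
import json
import urllib.request


def build_payload(init_image_b64):
    """Hypothetical img2img body with AnimateDiff and one ControlNet unit.
    Each extension's parameters sit inside an "args" list."""
    return {
        "prompt": "a placeholder prompt",
        "init_images": [init_image_b64],
        "alwayson_scripts": {
            "AnimateDiff": {"args": [{
                "model": "mm_sd_v15_v2.ckpt",
                "format": ["GIF"],
                "enable": True,
                "video_length": 94,
                "fps": 29,
                "loop_number": 0,
            }]},
            "ControlNet": {"args": [{
                "enabled": True,
                "module": "canny",
                "model": "control_v11p_sd15_canny [d14c016b]",
            }]},
        },
    }


def post(url, payload):
    """POST the payload as JSON and return the decoded JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

Assuming a local WebUI started with --api on the default port, a call would look like post("http://127.0.0.1:7860/sdapi/v1/img2img", build_payload(frame_b64)), where frame_b64 is a base64-encoded image.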

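- In the def init_script_args method of "modules\api\api.py", the extension parameters under alwayson_scripts are initialized and distributed to the individual extensions. This relies on two attributes, args_to and args_from.

- These two attributes are set in create_script_ui in "modules\scripts_postprocessing.py", shown below (for txt2img/img2img scripts such as ControlNet, ScriptRunner.create_script_ui in "modules\scripts.py" sets them the same way). Even in API mode, ui.create_ui() is still executed, because the default values of the parameters are needed.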

    def create_script_ui(self, script, inputs):
        script.args_from = len(inputs)
        script.args_to = len(inputs)

        script.controls = wrap_call(script.ui, script.filename, "ui")

        for control in script.controls.values():
            control.custom_script_source = os.path.basename(script.filename)

        inputs += list(script.controls.values())
        script.args_to = len(inputs)
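This bookkeeping can be reproduced in a few lines: every script appends its controls to one flat inputs list and records where its slice starts and ends, and the API later overwrites only that slice with the request's "args", leaving UI defaults elsewhere. The functions below are simplified stand-ins for create_script_ui and init_script_args, scripts are SimpleNamespace objects, and default values stand in for Gradio components. The ten leading inputs are hypothetical, chosen so that ControlNet's slice happens to start at index 11.

```python
from types import SimpleNamespace


def create_script_ui(script, inputs):
    """Record where this script's controls start and end in the flat list."""
    script.args_from = len(inputs)
    inputs += list(script.controls.values())  # extends the shared list in place
    script.args_to = len(inputs)


def init_script_args(defaults, scripts, request_scripts):
    """Start from UI defaults, then copy each script's request "args" into
    its own slice (never more values than the slice can hold)."""
    script_args = list(defaults)
    sent_by_name = {k.lower(): v for k, v in request_scripts.items()}
    for script in scripts:
        sent = sent_by_name.get(script.name, {}).get("args", [])
        span = script.args_to - script.args_from
        for i in range(min(span, len(sent))):
            script_args[script.args_from + i] = sent[i]
    return script_args


inputs = [None] * 10  # hypothetical: controls of scripts registered earlier
animatediff = SimpleNamespace(name="animatediff", controls={"params": "ad-default"})
controlnet = SimpleNamespace(
    name="controlnet", controls={f"unit{i}": f"cn-default-{i}" for i in range(3)})
for s in (animatediff, controlnet):
    create_script_ui(s, inputs)

script_args = init_script_args(
    inputs, [animatediff, controlnet], {"ControlNet": {"args": [{"module": "canny"}]}})
print(animatediff.args_from, controlnet.args_from, controlnet.args_to)
print(script_args[10:14])
```

Only the first ControlNet unit is replaced by the request; the other two keep their defaults, which is why a request can send fewer units than the UI defines.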

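IV. One Extension Calling Another (AnimateDiff Calling ControlNet)

In UI mode everything works. In API mode, txt2img works, but video-to-video fails with an error saying that ControlNet cannot find the input images.

The cause can be traced in the extensions' source code. Only the fix is given below. It avoids changing the open-source extensions' original logic as far as possible: ControlNet takes control by injecting batch_images (every frame of the video) into StableDiffusionProcessing. Three places are modified: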

Modification 1:

    assert global_input_frames != '', 'No input images found for ControlNet module'
    unit.batch_images = global_input_frames
    unit.input_mode = InputMode.BATCH
    if p.is_api:
        # debug output: check what type the API passed in for this unit
        print(type(p.script_args[11 + idx]))
        print(type(unit))
        p.script_args[11 + idx]['input_mode'] = InputMode.BATCH
        p.script_args[11 + idx]['batch_images'] = global_input_frames

Modification 2:

    else:
        unit.batch_images = shared.listfiles(unit.batch_images)
        if p.is_api:
            p.script_args[11 + idx]['batch_images'] = shared.listfiles(p.script_args[11 + idx]['batch_images'])

Modification 3:

    self.latest_model_hash = p.sd_model.sd_model_hash
    for idx, unit in enumerate(self.enabled_units):
        if p.is_api:
            unit.input_mode = p.script_args[11 + idx]['input_mode']
            unit.batch_images = p.script_args[11 + idx]['batch_images']
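In all three modifications, p.script_args[11 + idx] addresses ControlNet unit idx, so 11 is presumably ControlNet's args_from in the author's installation; given the args_from/args_to mechanism described in section III, that number would shift whenever other extensions are added or removed. Because modification 3 runs inside ControlNet's own Script (it uses self.enabled_units), self.args_from should hold the correct offset there. The hypothetical helper below sketches modification 3 with the offset looked up instead of hard-coded. p, the units, and the script are SimpleNamespace stand-ins, and the string "batch" stands in for InputMode.BATCH. Like the original, it assumes the enabled units occupy the first slots of ControlNet's argument range.

```python
from types import SimpleNamespace


def sync_units_from_api(p, controlnet_script, enabled_units):
    """Copy input_mode/batch_images from the API dicts in p.script_args
    onto the unit objects, using the script's own args_from offset."""
    if not getattr(p, "is_api", False):
        return
    base = controlnet_script.args_from
    for idx, unit in enumerate(enabled_units):
        sent = p.script_args[base + idx]
        if isinstance(sent, dict):
            for key in ("input_mode", "batch_images"):
                if key in sent:
                    setattr(unit, key, sent[key])


cn = SimpleNamespace(args_from=11)
p = SimpleNamespace(
    is_api=True,
    script_args=[None] * 11 + [{"input_mode": "batch", "batch_images": ["f0.png", "f1.png"]}],
)
unit = SimpleNamespace(input_mode="simple", batch_images="")
sync_units_from_api(p, cn, [unit])
print(unit.input_mode, unit.batch_images)
```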
