Feitianshi - k8s Knowledge Points 2 - Installation

Published: December 20, 2023


Release package reference

https://github.com/kubernetes/kubernetes/releases?page=5

Pre-installation preparation

Disable swap

Disable SELinux

Disable iptables/firewalld; tune kernel parameters and resource limits

# cat sysctl.conf

net.ipv4.ip_forward=1

vm.max_map_count=262144

kernel.pid_max=4194303

fs.file-max=1000000

net.ipv4.tcp_max_tw_buckets=6000

net.netfilter.nf_conntrack_max=2097152

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

vm.swappiness=0

# cat limits.conf

* soft core unlimited

* hard core unlimited

* soft nproc 1000000

* hard nproc 1000000

* soft nofile 1000000

* hard nofile 1000000

* soft memlock 32000

* hard memlock 32000

* soft msgqueue 8192000

* hard msgqueue 8192000

# Disable SELinux/firewalld

systemctl stop firewalld && systemctl disable firewalld

setenforce 0

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

# Disable swap

swapoff -a

cp /etc/{fstab,fstab.bak}

cat /etc/fstab.bak | grep -v swap > /etc/fstab
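The grep -v swap filter above removes every line that contains the word "swap", including comments. A slightly more conservative variant, shown here only as a sketch, comments out the entries whose filesystem type (third field) is swap and leaves everything else untouched. The function name comment_out_swap is hypothetical.

```sh
#!/bin/sh
# Read fstab content on stdin and write it to stdout, commenting out
# active entries whose third field (filesystem type) is "swap".
# Lines that are already comments are passed through unchanged.
comment_out_swap() {
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }'
}

# Example wiring (run as root):
#   cp /etc/fstab /etc/fstab.bak
#   comment_out_swap < /etc/fstab.bak > /etc/fstab
```

Commenting the line instead of deleting it keeps the original entry visible if swap ever needs to be restored.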

# Configure kernel parameters for iptables and bridged traffic

echo """

net.ipv4.ip_forward=1

vm.max_map_count=262144

kernel.pid_max=4194303

fs.file-max=1000000

net.ipv4.tcp_max_tw_buckets=6000

net.netfilter.nf_conntrack_max=2097152

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

vm.swappiness=0

""" > /etc/sysctl.conf

modprobe br_netfilter

sysctl -p

net.bridge.bridge-nf-call-iptables = 1 # Packets forwarded by a layer-2 bridge are also matched against the host's iptables FORWARD rules
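After sysctl -p it can be useful to confirm that the running kernel actually holds the requested values, since the net.bridge.* keys only exist once br_netfilter is loaded. The following is a minimal sketch; normalize_sysctl and check_sysctl are hypothetical helper names, and the check assumes single-valued keys like the ones listed above.

```sh
#!/bin/sh
# Normalize sysctl.conf-style input on stdin to "key=value" lines:
# drops comments and blank lines, removes spaces around "=".
normalize_sysctl() {
  sed -e 's/#.*//' \
      -e 's/^[[:space:]]*//' \
      -e 's/[[:space:]]*$//' \
      -e 's/[[:space:]]*=[[:space:]]*/=/' \
      -e '/^$/d'
}

# Print one line per key whose live value differs from the value
# requested in the file given as $1 (for example /etc/sysctl.conf).
check_sysctl() {
  normalize_sysctl < "$1" | while IFS='=' read -r key want; do
    have=$(sysctl -n "$key" 2>/dev/null) || have="(unavailable)"
    [ "$have" = "$want" ] || echo "MISMATCH $key: want=$want have=$have"
  done
}

# Example: check_sysctl /etc/sysctl.conf
```

No output from check_sysctl means every listed key matches; a key reported as "(unavailable)" usually points to a kernel module that has not been loaded.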

# Synchronize time

yum install -y ntpdate

ln -nfsv /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

Prepare the machines

3 masters

2 haproxy + keepalived servers

1 worker node

Harbor is prepared separately

k8s-master1 kubeadm-master1.example.local 192.168.1.209

k8s-master2 kubeadm-master2.example.local 192.168.1.210

k8s-master3 kubeadm-master3.example.local 192.168.1.212

ha1 ha1.example.local 192.168.1.213

ha2 ha2.example.local 192.168.1.214

node1 node1.example.local 192.168.1.215
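If these names are not served by DNS, each machine needs matching /etc/hosts entries. The original does not show this step, so the following is only a sketch under that assumption: to_hosts_lines (a hypothetical name) converts the "short-name FQDN IP" rows above into the "IP FQDN short-name" order that /etc/hosts expects.

```sh
#!/bin/sh
# Convert "short-name fqdn ip" rows on stdin into /etc/hosts order.
# Rows that do not have exactly three fields are skipped.
to_hosts_lines() {
  awk 'NF == 3 { print $3, $2, $1 }'
}

# Example (run as root, with the table saved in a file named machines.txt):
#   to_hosts_lines < machines.txt >> /etc/hosts
```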

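Install keepalived on the HA servers

The internal network interface on these hosts is eth1, so the configuration below binds to eth1.

yum install -y keepalived

Primary (MASTER) keepalived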

/etc/keepalived/keepalived.conf

vrrp_instance VI_1 {

state MASTER

interface eth1

garp_master_delay 10

smtp_alert

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.240 dev eth1 label eth1:1

}

}

Backup keepalived

/etc/keepalived/keepalived.conf

vrrp_instance VI_1 {

state BACKUP

interface eth1

garp_master_delay 10

smtp_alert

virtual_router_id 51

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.240 dev eth1 label eth1:1

}

}

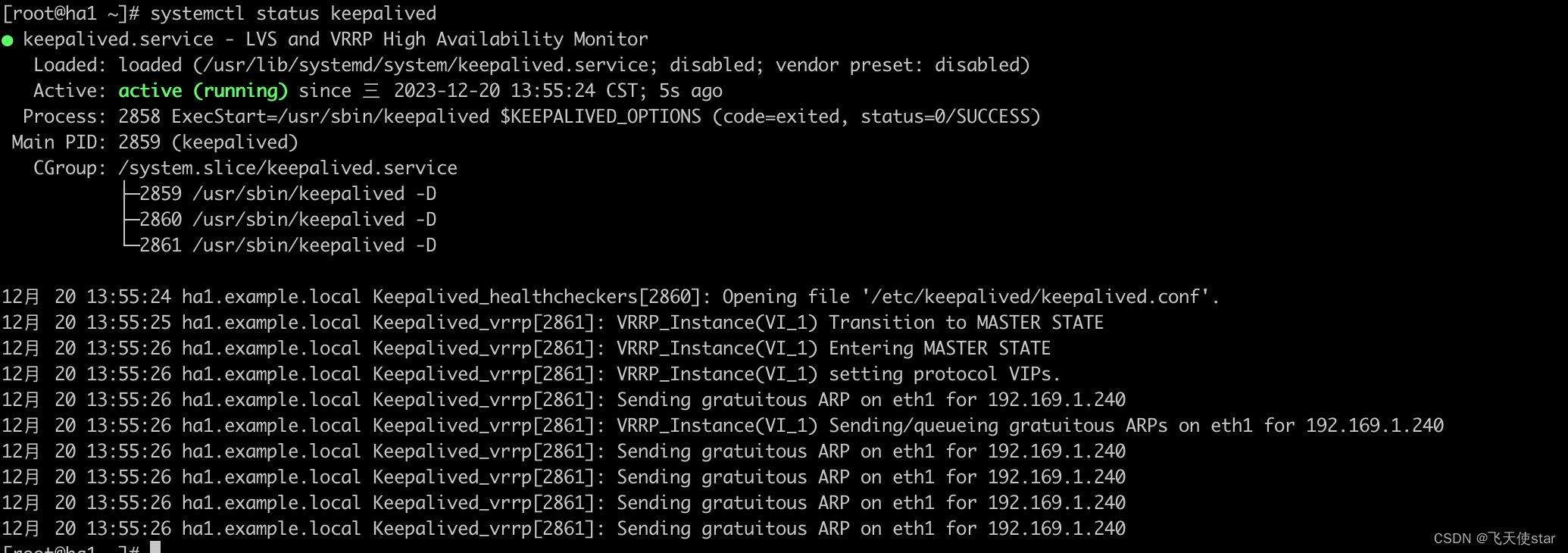

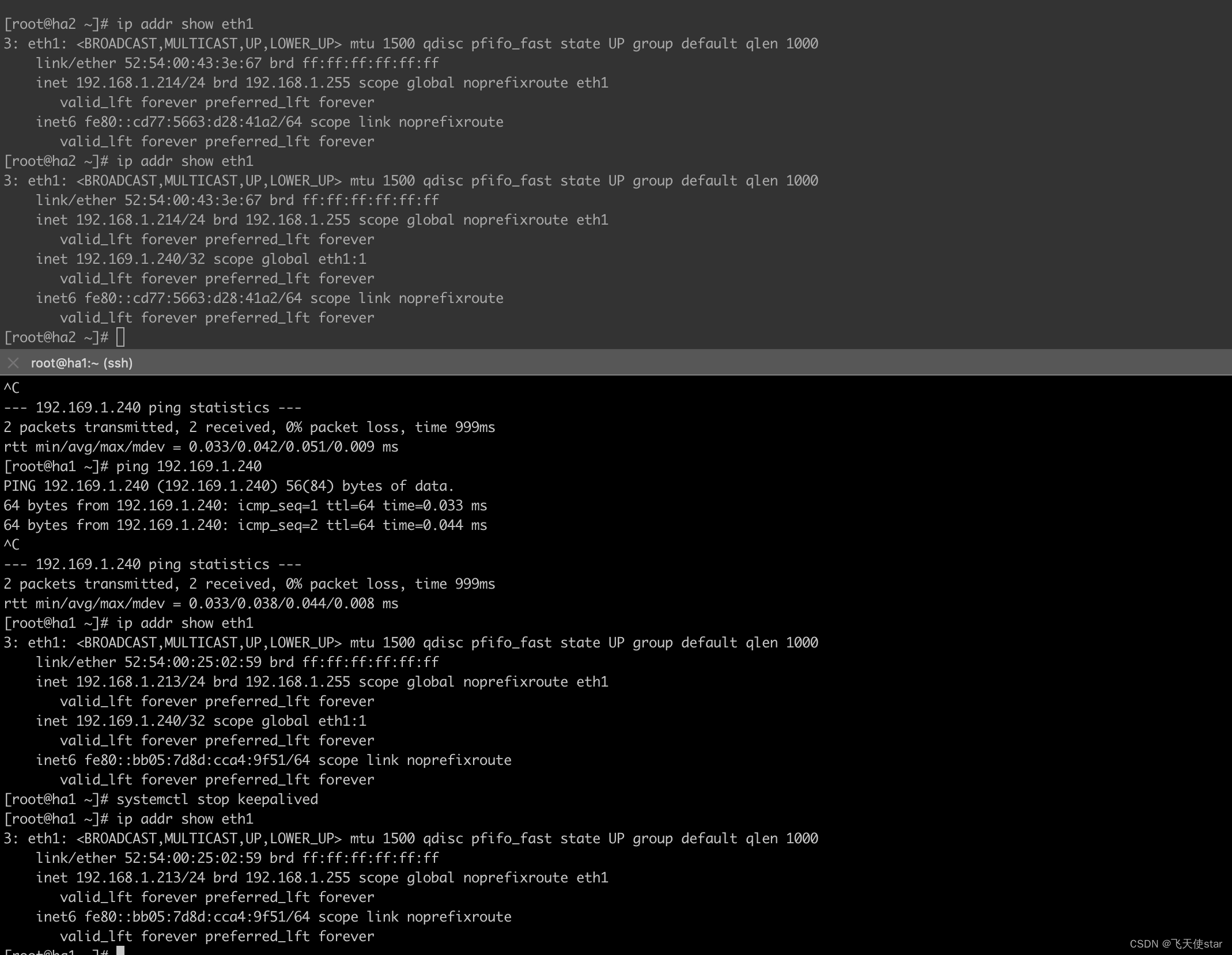

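To verify that Keepalived works across the two machines, follow these steps:

1. Make sure Keepalived is installed and configured on both servers.

2. Configure one machine as the primary (state MASTER) and the other as the backup (state BACKUP).

3. Start the Keepalived service and confirm it is running.

4. On the primary, check that the virtual IP address is bound, using ip addr show ethX (where ethX is the interface the virtual IP is bound to).

5. Disconnect the primary from the network (for example, unplug its network cable or disable its network interface).

6. On the backup, check that it has taken over the virtual IP address, again using ip addr show ethX.

7. Restore the primary's network connection.

8. Restart the Keepalived service and confirm the roles switch back correctly (the primary becomes MASTER again and the backup returns to BACKUP).

9. When testing is finished, restore the initial state and confirm that each node is working normally with its intended role and configuration.

These steps verify failover between the primary and backup nodes. Keepalived depends on a consistent network environment and configuration across both nodes.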
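The "is the virtual IP bound here?" check from the steps above can be scripted. This is a minimal sketch that assumes the VIP 192.168.1.240 and interface eth1 from the configuration earlier; vip_in_output is a hypothetical helper that inspects the output of ip -o -4 addr show.

```sh
#!/bin/sh
# Succeed if the address given as $1 appears as an IPv4 address in
# `ip -o -4 addr show` output supplied on stdin. Matching on
# "inet <addr>/" avoids partial matches such as 192.168.1.24 vs 192.168.1.240.
vip_in_output() {
  grep -qF "inet $1/"
}

# Example, run on either node:
#   if ip -o -4 addr show dev eth1 | vip_in_output 192.168.1.240; then
#     echo "this node holds the VIP"
#   else
#     echo "this node does not hold the VIP"
#   fi
```

Running the example on both nodes before and after disconnecting the primary shows which side owns the address at each stage.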

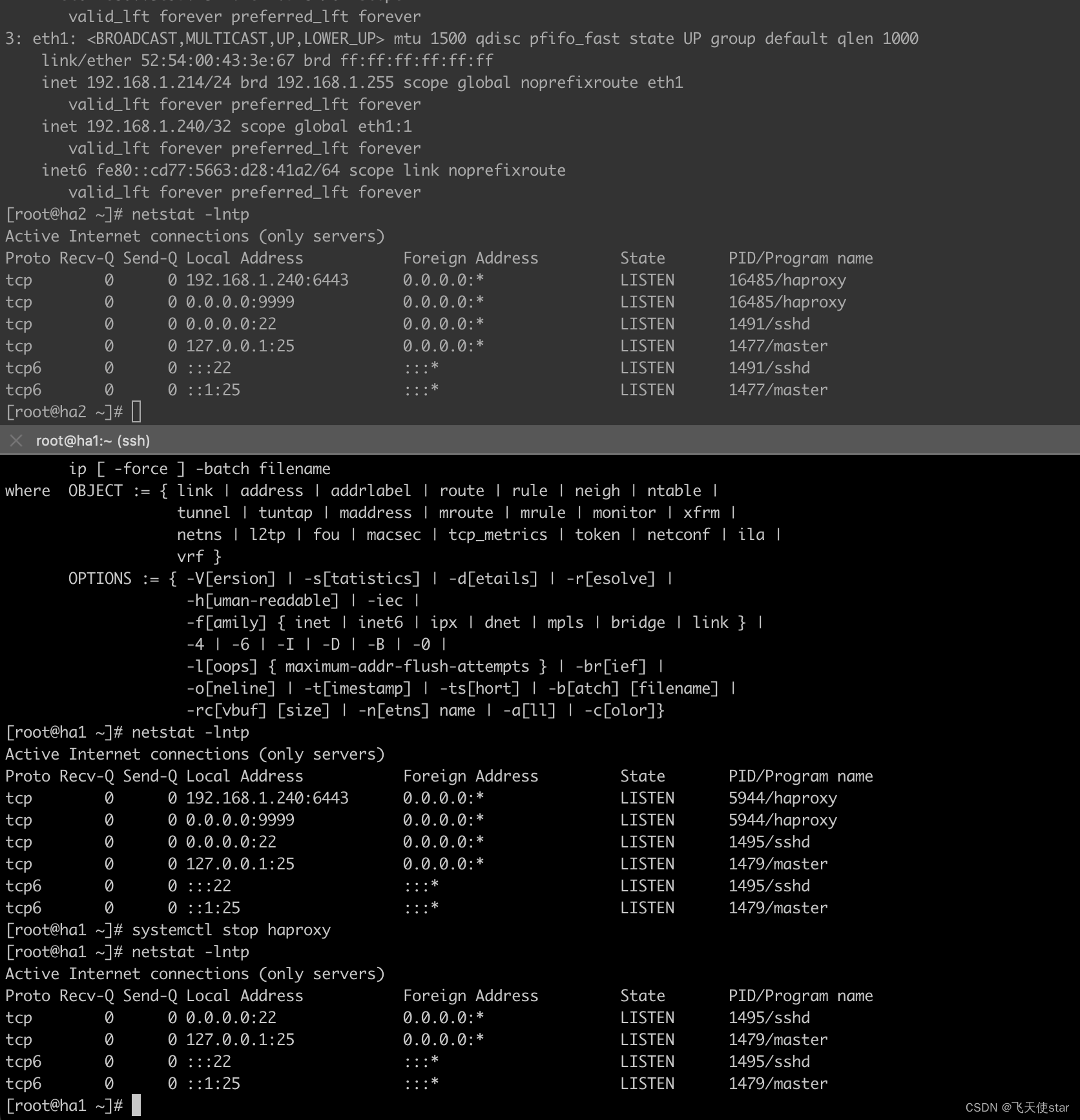

haproxy

yum install -y haproxy

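Use the same configuration on both servers, then write a script that checks whether the local machine currently holds the virtual IP. That way, if haproxy on the other server goes down, the local haproxy takes over automatically.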

cat /etc/haproxy/haproxy.cfg

listen stats

mode http

bind 0.0.0.0:9999

stats enable

log global

stats uri /haproxy-status

stats auth haadmin:faeefa123ef

listen k8s-6443

bind 192.168.1.240:6443

mode tcp

balance roundrobin

server 192.168.1.209 192.168.1.209:6443 check inter 2s fall 3 rise 5

server 192.168.1.210 192.168.1.210:6443 check inter 2s fall 3 rise 5

server 192.168.1.212 192.168.1.212:6443 check inter 2s fall 3 rise 5
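The check script mentioned above is not shown in the original. One possible shape, given only as a sketch, is below: haproxy_action is a hypothetical helper that decides whether the local haproxy should be started, based on whether this host holds the VIP and on the state reported by systemctl is-active.

```sh
#!/bin/sh
# $1: "yes" if this host currently holds the VIP, otherwise "no"
# $2: output of `systemctl is-active haproxy` (e.g. "active", "inactive")
# Prints "start" when haproxy should be started, otherwise "none".
haproxy_action() {
  if [ "$1" = "yes" ] && [ "$2" != "active" ]; then
    echo start
  else
    echo none
  fi
}

# Example wiring (run as root from cron or a systemd timer; eth1 and
# 192.168.1.240 are the values used earlier in this article):
#   if ip -o -4 addr show dev eth1 | grep -qF "inet 192.168.1.240/"; then
#     vip=yes
#   else
#     vip=no
#   fi
#   if [ "$(haproxy_action "$vip" "$(systemctl is-active haproxy)")" = start ]; then
#     systemctl start haproxy
#   fi
```

A commonly used alternative is to set net.ipv4.ip_nonlocal_bind=1 on both servers, which lets haproxy bind to 192.168.1.240:6443 even while the host does not hold the address, so the service can stay running on both machines.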

Harbor: omitted

Docker 19.03.15 installation: omitted

Install kubelet, kubeadm, and kubectl with yum on all nodes

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

setenforce 0

List the available versions

yum list kubeadm --showduplicates |grep 1.20

yum list kubelet --showduplicates |grep 1.20

yum list kubectl --showduplicates |grep 1.20

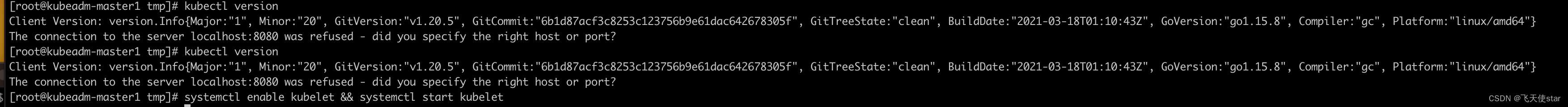

Install a specific version

yum install -y kubelet-1.20.5-0 kubeadm-1.20.5-0 kubectl-1.20.5-0

systemctl enable kubelet && systemctl start kubelet
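To keep the three packages on the same version across all nodes, the package list can be generated from a single version string. This is a small sketch; k8s_packages is a hypothetical helper, and the -0 release suffix is taken from the package names used in this article.

```sh
#!/bin/sh
# Print the three package names pinned to the version given as $1.
k8s_packages() {
  echo "kubelet-$1-0 kubeadm-$1-0 kubectl-$1-0"
}

# Example (run as root); the substitution is left unquoted on purpose
# so that yum receives three separate arguments:
#   yum install -y $(k8s_packages 1.20.5)
```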

To install the latest version instead:

yum install -y kubelet kubeadm kubectl

systemctl enable kubelet && systemctl start kubelet

Verify the installation

kubeadm version

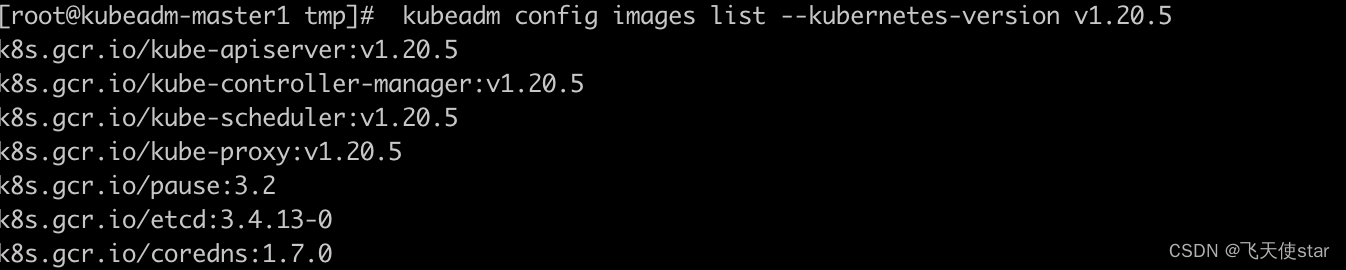

Prepare the images

kubeadm config images list --kubernetes-version v1.20.5

Download the images

#!/bin/bash

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.20.5

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.20.5

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.20.5

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.20.5

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0

After the download completes, check the result with docker images

[root@kubeadm-master1 tmp]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy v1.20.5 5384b1650507 2 years ago 118MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver v1.20.5 d7e24aeb3b10 2 years ago 122MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager v1.20.5 6f0c3da8c99e 2 years ago 116MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler v1.20.5 8d13f1db8bfb 2 years ago 47.3MB

registry.cn-hangzhou.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 3 years ago 253MB

registry.cn-hangzhou.aliyuncs.com/google_containers/coredns 1.7.0 bfe3a36ebd25 3 years ago 45.2MB

registry.cn-hangzhou.aliyuncs.com/google_containers/pause 3.2 80d28bedfe5d 3 years ago 683kB

[root@kubeadm-master1 tmp]# kubeadm config images list --kubernetes-version v1.20.5

k8s.gcr.io/kube-apiserver:v1.20.5

k8s.gcr.io/kube-controller-manager:v1.20.5

k8s.gcr.io/kube-scheduler:v1.20.5

k8s.gcr.io/kube-proxy:v1.20.5

k8s.gcr.io/pause:3.2

k8s.gcr.io/etcd:3.4.13-0

k8s.gcr.io/coredns:1.7.0
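The pull list does not have to be written by hand: it can be derived from the kubeadm config images list output by swapping the registry prefix. The sketch below assumes the Aliyun mirror used in this article and that the mirror stores every image directly under google_containers, which holds for the v1.20.5 list shown above; to_mirror is a hypothetical helper.

```sh
#!/bin/sh
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers

# Map an upstream image reference (e.g. k8s.gcr.io/pause:3.2) to the
# same image name and tag under $MIRROR.
to_mirror() {
  echo "$MIRROR/${1##*/}"
}

# Example wiring:
#   kubeadm config images list --kubernetes-version v1.20.5 |
#   while read -r img; do
#     docker pull "$(to_mirror "$img")"
#   done
```

kubeadm can also pull from a mirror directly with kubeadm config images pull --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers --kubernetes-version v1.20.5.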

For a test environment, a single-node install is sufficient

kubeadm init --apiserver-advertise-address=192.168.1.209 --apiserver-bind-port=6443 --kubernetes-version=v1.20.5 --pod-network-cidr=10.100.0.0/16 --service-cidr=10.200.0.0/16 --service-dns-domain=feitianshi.local --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --ignore-preflight-errors=swap

This command initializes a Kubernetes cluster on the host. Each parameter means the following:

kubeadm init: the command that initializes the Kubernetes cluster.

--apiserver-advertise-address=192.168.1.209: the IP address the API server advertises to other cluster members.

--apiserver-bind-port=6443: the port the API server listens on.

--kubernetes-version=v1.20.5: the Kubernetes version to install.

--pod-network-cidr=10.100.0.0/16: the CIDR range of the Pod network, from which Pod IP addresses are allocated.

--service-cidr=10.200.0.0/16: the CIDR range of the Service network, from which the virtual IP addresses of Kubernetes Services are allocated.

--service-dns-domain=feitianshi.local: the DNS domain suffix for Services. Inside the cluster, Service DNS names have the form <service-name>.<namespace>.svc.<service-dns-domain>.

--image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers: the container image registry to pull control-plane images from; here, the Aliyun mirror.

--ignore-preflight-errors=swap: the preflight checks whose errors should be ignored during initialization; here, the swap check.

The parameter values shown here are specific to this environment and should be adjusted to match yours.
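The Pod CIDR and Service CIDR should not overlap each other or the network the nodes live on. The following sketch checks that with plain shell arithmetic; the helper names are hypothetical, and 192.168.1.0/24 is an assumed node network based on the host addresses in this article.

```sh
#!/bin/sh
# Convert a dotted IPv4 address to an integer.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Print "first last" (as integers) for the CIDR block given as $1.
cidr_range() (
  base=$(ip_to_int "${1%/*}")
  bits=${1#*/}
  size=$(( 1 << (32 - bits) ))
  start=$(( base / size * size ))
  echo "$start $(( start + size - 1 ))"
)

# Succeed if the two CIDR blocks given as $1 and $2 share any address.
cidrs_overlap() (
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
)

# Example:
#   cidrs_overlap 10.100.0.0/16 10.200.0.0/16  && echo "pod/service overlap"
#   cidrs_overlap 10.100.0.0/16 192.168.1.0/24 && echo "pod/node overlap"
#   cidrs_overlap 10.200.0.0/16 192.168.1.0/24 && echo "service/node overlap"
```

With the values used in this article none of the three checks prints anything, which is the desired result.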

Allow Pods to be scheduled on the master node

kubectl taint nodes --all node-role.kubernetes.io/master-

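This command removes the taint named node-role.kubernetes.io/master from all nodes. A taint is a property set on a node that affects how Pods are scheduled onto it.

In kubectl taint nodes --all node-role.kubernetes.io/master-, the --all flag applies the operation to every node, and the trailing hyphen in node-role.kubernetes.io/master- means "remove this taint".

Removing the node-role.kubernetes.io/master taint allows ordinary Pods to run on master nodes. By default, the master nodes of a Kubernetes cluster carry this taint to keep ordinary Pods from being scheduled onto them.

Note that once the taint is removed, workload Pods on a master compete for CPU and memory with the control-plane components (such as kube-apiserver, kube-controller-manager, and kube-scheduler). Make sure you understand this impact before doing so, and only do it when it fits your needs.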

Deploy the network add-on on the single node

https://kubernetes.io/zh/docs/concepts/cluster-administration/addons/ # network add-ons supported by Kubernetes

https://quay.io/repository/coreos/flannel?tab=tags # flannel image download location

https://github.com/flannel-io/flannel # flannel GitHub project

kubectl apply -f flannel-0.14.0-rc1.yml

kubectl get pod -A

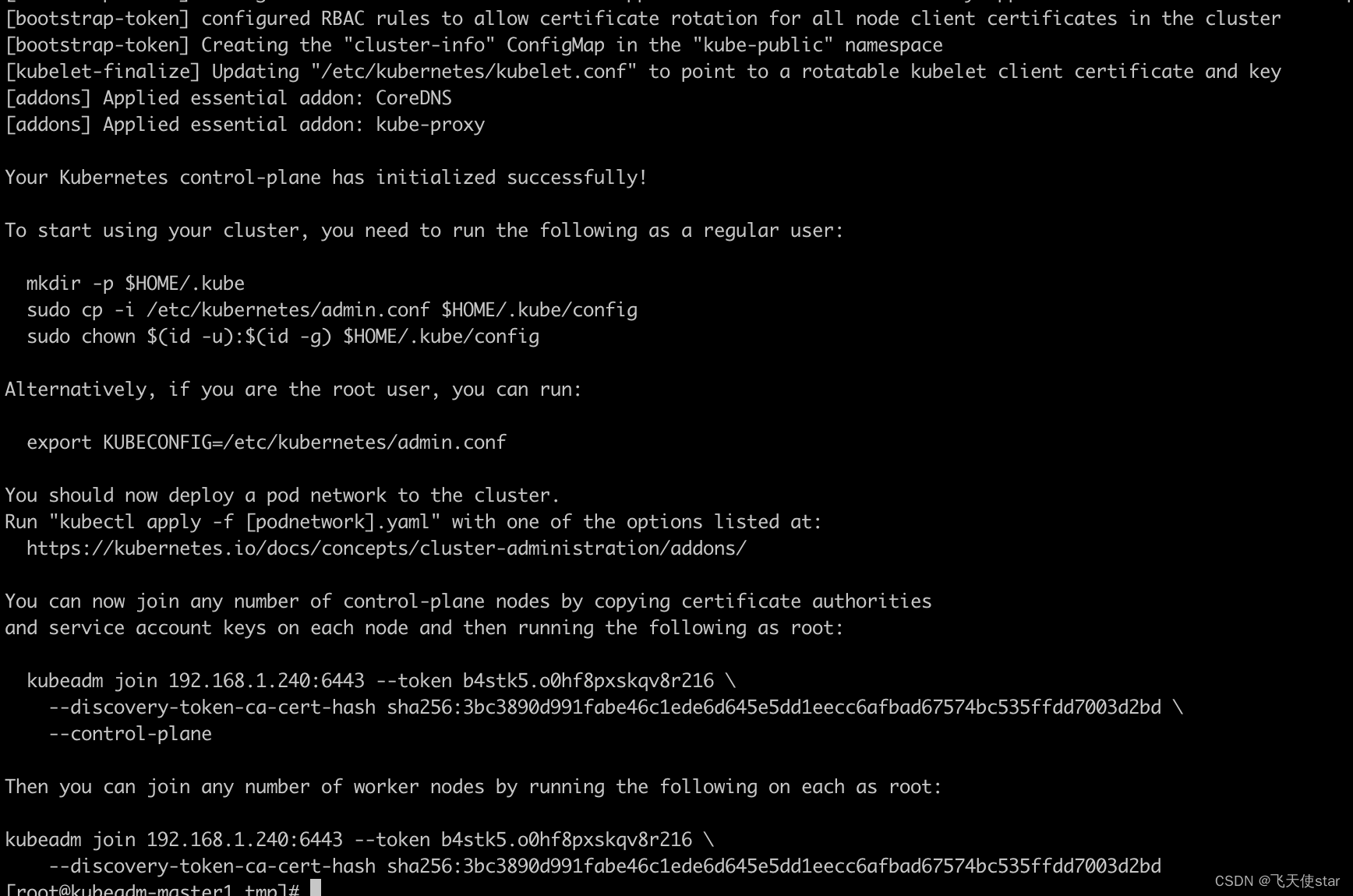

Production multi-master install, using the keepalived setup from earlier in this article with haproxy as the reverse proxy

kubeadm init --apiserver-advertise-address=192.168.1.209 --control-plane-endpoint=192.168.1.240:6443 --apiserver-bind-port=6443 --kubernetes-version=v1.20.5 --pod-network-cidr=10.100.0.0/16 --service-cidr=10.200.0.0/16 --service-dns-domain=feitianshi.local --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --ignore-preflight-errors=swap

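Initialization can also be driven by a config file

# kubeadm config print init-defaults # print the default init configuration

# kubeadm config print init-defaults > kubeadm-init.yaml # write the default configuration to a file

# cat kubeadm-init.yaml # contents of the init file after editing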

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.1.209
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master1.example.local
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 192.168.1.240:6443
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.20.5
networking:
  dnsDomain: jiege.local
  podSubnet: 10.100.0.0/16
  serviceSubnet: 10.200.0.0/16
scheduler: {}

kubeadm init --config kubeadm-init.yaml # initialize the k8s master from the config file
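Before running the real initialization it can help to confirm that the edited file carries the intended values, in particular that controlPlaneEndpoint points at the haproxy VIP. The sketch below uses a plain-text lookup rather than a YAML parser, so it only suits simple "key: value" lines with unique key names; config_value is a hypothetical helper.

```sh
#!/bin/sh
# Print the value of the first "key: value" line in file $1 whose key
# is $2 (given without the colon). Leading indentation is ignored.
config_value() {
  awk -v k="$2:" '$1 == k { print $2; exit }' "$1"
}

# Example:
#   [ "$(config_value kubeadm-init.yaml controlPlaneEndpoint)" = "192.168.1.240:6443" ] ||
#     echo "controlPlaneEndpoint does not point at the VIP"
```

kubeadm init also accepts a --dry-run flag, so kubeadm init --config kubeadm-init.yaml --dry-run can be used to preview what would be done without applying any changes.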

Configure the kubeconfig file and the network add-on

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Deploy the flannel network add-on:

https://github.com/coreos/flannel/

[root@kubeadm-master1 tmp]# kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

namespace/kube-flannel created

serviceaccount/flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

[root@kubeadm-master1 tmp]# kubectl get node

NAME STATUS ROLES AGE VERSION

kubeadm-master1.example.local NotReady control-plane,master 12m v1.20.5

[root@kubeadm-master1 tmp]# kubectl get node

NAME STATUS ROLES AGE VERSION

kubeadm-master1.example.local Ready control-plane,master 13m v1.20.5
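As the two listings show, the node stays NotReady until the network add-on is running. Instead of re-running kubectl get node by hand, the output can be filtered for nodes that are not yet Ready. This is a minimal sketch; not_ready_nodes is a hypothetical helper, and a status such as Ready,SchedulingDisabled is also reported because the comparison is exact.

```sh
#!/bin/sh
# Read `kubectl get node` output on stdin and print the names of nodes
# whose STATUS column is not exactly "Ready". The header row is skipped.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example: poll until every node is Ready.
#   while [ -n "$(kubectl get node | not_ready_nodes)" ]; do
#     sleep 5
#   done
```

kubectl also has a built-in equivalent: kubectl wait --for=condition=Ready nodes --all --timeout=300s.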

On the current master, generate the certificate key used to add new control-plane nodes:

[root@kubeadm-master1 tmp]# kubeadm init phase upload-certs --upload-certs

I1220 16:45:42.030027 694 version.go:254] remote version is much newer: v1.29.0; falling back to: stable-1.20

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

b034a5089df78a617b5bbcc674bff67aafaae081e33fcd0f3be0886c614df8e1

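Join the other masters to the cluster

Run the following on another master node that already has docker, kubeadm, and kubelet installed

The earlier kubeadm init on the first master printed the following: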

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 192.168.1.240:6443 --token b4stk5.o0hf8pxskqv8r216 \

--discovery-token-ca-cert-hash sha256:3bc3890d991fabe46c1ede6d645e5dd1eecc6afbad67574bc535ffdd7003d2bd \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.240:6443 --token b4stk5.o0hf8pxskqv8r216 \

--discovery-token-ca-cert-hash sha256:3bc3890d991fabe46c1ede6d645e5dd1eecc6afbad67574bc535ffdd7003d2bd

Join as a master

kubeadm join 192.168.1.240:6443 --token b4stk5.o0hf8pxskqv8r216 \

--discovery-token-ca-cert-hash sha256:3bc3890d991fabe46c1ede6d645e5dd1eecc6afbad67574bc535ffdd7003d2bd \

--control-plane --certificate-key b034a5089df78a617b5bbcc674bff67aafaae081e33fcd0f3be0886c614df8e1
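The control-plane join command is the worker join command plus --control-plane and the certificate key from the upload-certs step. Bootstrap tokens expire (24 hours with the ttl shown in the config file above) and the uploaded certificates are deleted after two hours, so on a later day both values usually have to be regenerated. The sketch below assembles the command from fresh values; control_plane_join_cmd is a hypothetical helper.

```sh
#!/bin/sh
# $1: worker join command, e.g. the output of
#     `kubeadm token create --print-join-command`
# $2: certificate key printed by
#     `kubeadm init phase upload-certs --upload-certs`
# Prints the corresponding control-plane join command.
control_plane_join_cmd() {
  printf '%s --control-plane --certificate-key %s\n' "$1" "$2"
}

# Example, run on an existing master:
#   join=$(kubeadm token create --print-join-command)
#   key=$(kubeadm init phase upload-certs --upload-certs | tail -n 1)
#   control_plane_join_cmd "$join" "$key"
```

The tail -n 1 in the example relies on the certificate key being the last line of the upload-certs output, as in the listing earlier in this article.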

Source: https://blog.csdn.net/startfefesfe/article/details/135102854

