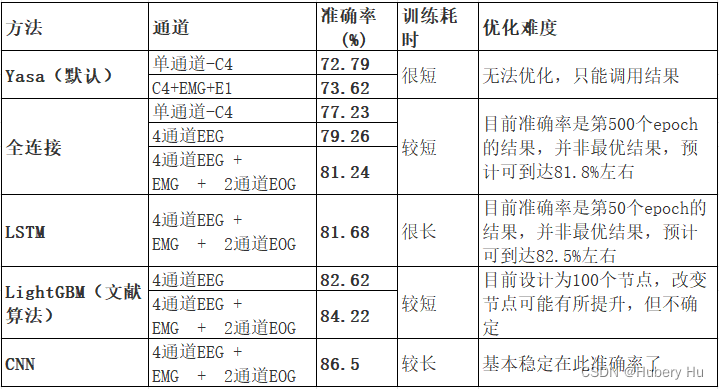

Replicating the YASA algorithm for sleep staging: five-class accuracy currently 86.5%

Reference links

For details, see: personal article

Reference code

The code that was used to train the classifiers can be found on GitHub at:

Link

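Validation dataset

PhysioNet-Haaglanden (Haaglanden Medisch Centrum sleep staging database)

150 recordings were used (recording No. 98 was excluded because the length of its label sequence did not match the recording).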
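Recording No. 98 was dropped because its label count did not match the signal. A minimal check for this, assuming one human label per complete 30-s epoch (the helper names below are hypothetical, not from the post), compares the expected number of epochs with the number of labels:

```python
def expected_epochs(n_samples: int, sfreq: float, epoch_sec: float = 30.0) -> int:
    """Number of complete epochs of length epoch_sec in a recording."""
    return int(n_samples // (sfreq * epoch_sec))


def labels_match(n_samples: int, sfreq: float, n_labels: int, epoch_sec: float = 30.0) -> bool:
    """True if the hypnogram has exactly one label per complete epoch (assumed convention)."""
    return expected_epochs(n_samples, sfreq, epoch_sec) == n_labels
```

With MNE, `n_samples` and `sfreq` would come from `raw.n_times` and `raw.info["sfreq"]`, and `n_labels` from `len(hypno)`; recordings that fail the check can be skipped before training.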

Features

Following YASA's feature definitions, 28 × 3 = 84 features were extracted per EEG channel, 23 × 3 = 69 per EOG channel, and 10 × 3 = 30 for the EMG channel.
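The × 3 factor presumably mirrors YASA, which adds two smoothed copies of every per-epoch feature: a 7.5-min centered rolling average and a 2-min past rolling average. The sketch below reproduces only the shape of that expansion; it is an assumption-laden simplification that uses plain means instead of YASA's triangular weighting and skips YASA's normalization, and `rolling_mean`/`expand_features` are hypothetical names.

```python
import numpy as np


def rolling_mean(x: np.ndarray, window: int, center: bool) -> np.ndarray:
    """Mean over a sliding window of epochs (rows); the window shrinks at the edges."""
    n = x.shape[0]
    out = np.empty(x.shape, dtype=float)
    for i in range(n):
        if center:
            lo, hi = max(0, i - window // 2), min(n, i + window // 2 + 1)
        else:  # past window: current epoch plus the (window - 1) epochs before it
            lo, hi = max(0, i - window + 1), i + 1
        out[i] = x[lo:hi].mean(axis=0)
    return out


def expand_features(x: np.ndarray) -> np.ndarray:
    """Raw features + centered 7.5-min mean + past 2-min mean, i.e. 3x the columns."""
    centered = rolling_mean(x, window=15, center=True)  # 15 epochs x 30 s = 7.5 min
    past = rolling_mean(x, window=4, center=False)      # 4 epochs x 30 s = 2 min
    return np.hstack([x, centered, past])
```

Applied to 28 base EEG features this gives 84 columns per EEG channel, and the channel totals used later follow directly: 4 × 84 = 336 for four EEG channels, and 336 + 2 × 69 + 30 = 504 once both EOG channels and the EMG channel are added.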

Results

(1) Pretrained model released by the paper's authors (applied to each individual recording)

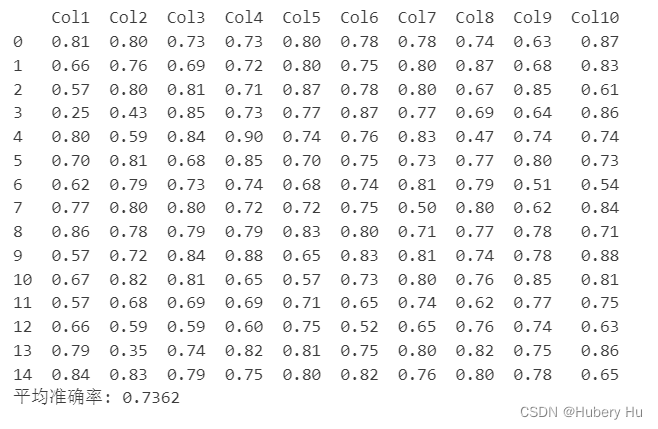

1. Three channels

Channels used: eeg_name="EEG C4-M1", eog_name="EOG E1-M2", emg_name="EMG chin"

Accuracy: 73.62%


```python
import mne
import numpy as np
import yasa


def calculate_accuracy(edf_file, txt_file):
    """Compare YASA's predicted hypnogram with the human scoring for one recording."""
    # Load the EDF recording
    raw = mne.io.read_raw_edf(edf_file, preload=True)
    # Load the human-scored hypnogram, where each value represents a 30-sec epoch
    hypno = np.loadtxt(txt_file, dtype=str)
    # Three-channel staging: EEG + EOG + EMG
    sls = yasa.SleepStaging(raw, eeg_name="EEG C4-M1", eog_name="EOG E1-M2", emg_name="EMG chin")
    y_pred = sls.predict()
    # Fraction of epochs where the prediction agrees with the human scoring
    accuracy = (hypno == y_pred).sum() / y_pred.size
    return accuracy
```
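`calculate_accuracy` returns one value per recording, and the post does not say how those values were combined into the reported figure. A minimal sketch of the two usual options, assuming you keep, for each recording, the number of correctly predicted epochs and the total epoch count (this bookkeeping and the name `aggregate_accuracy` are hypothetical, not part of the original code):

```python
import numpy as np


def aggregate_accuracy(per_recording):
    """per_recording: list of (n_correct, n_epochs) pairs, one per recording.

    Returns (mean of per-recording accuracies, pooled epoch-level accuracy).
    """
    correct = np.array([c for c, _ in per_recording], dtype=float)
    total = np.array([n for _, n in per_recording], dtype=float)
    mean_of_recordings = float(np.mean(correct / total))  # every recording weighs the same
    pooled = float(correct.sum() / total.sum())           # every epoch weighs the same
    return mean_of_recordings, pooled
```

For example, recordings scored 90/100 and 10/20 give a mean of 0.70 but a pooled accuracy of about 0.83, so the two choices can diverge when recording lengths differ.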

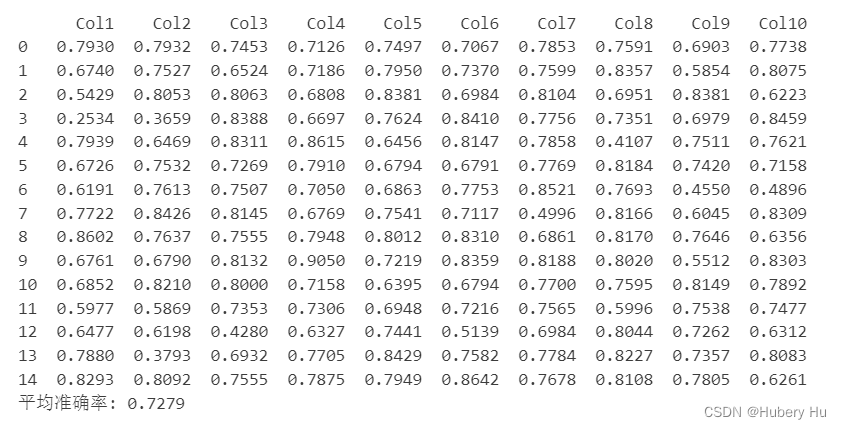

2. Single-channel EEG

Channel used: eeg_name="EEG C4-M1"

Accuracy: 72.79%

```python
import mne
import numpy as np
import yasa


def calculate_accuracy(edf_file, txt_file):
    """Compare YASA's single-channel EEG staging with the human scoring for one recording."""
    # Load the EDF recording
    raw = mne.io.read_raw_edf(edf_file, preload=True)
    # Load the human-scored hypnogram, where each value represents a 30-sec epoch
    hypno = np.loadtxt(txt_file, dtype=str)
    # Three-channel version: yasa.SleepStaging(raw, eeg_name="EEG C4-M1", eog_name="EOG E1-M2", emg_name="EMG chin")
    sls = yasa.SleepStaging(raw, eeg_name="EEG C4-M1")
    y_pred = sls.predict()
    # Fraction of epochs where the prediction agrees with the human scoring
    accuracy = (hypno == y_pred).sum() / y_pred.size
    return accuracy
```

(2) Fully connected network, single-channel EEG

Channel: EEG C4-M1

Accuracy: 77.23%

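```python
from sklearn.model_selection import train_test_split
from tensorflow.keras.layers import Dense
from tensorflow.keras.models import Sequential

# X: (n_epochs, 84) feature matrix for EEG C4-M1; y: one-hot stage labels, shape (n_epochs, 5)

# Split into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Build the model
model = Sequential()
model.add(Dense(128, input_shape=(84,), activation='relu'))
model.add(Dense(64, activation='relu'))
model.add(Dense(5, activation='softmax'))  # 5-class classification

# The model must be compiled before fit(); settings assumed, matching the LSTM section below
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Train the model
model.fit(X_train, y_train, epochs=500, batch_size=32, validation_data=(X_test, y_test))
```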
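The network ends in a 5-way softmax, and the LightGBM section later recovers integer labels with `np.argmax(y, axis=1)`, so `y` is presumably one-hot encoded. A minimal sketch of that encoding, assuming the hypnogram uses YASA's stage strings in the order below (`STAGES` and `one_hot_stages` are hypothetical names):

```python
import numpy as np

# Assumed label strings and class order; YASA's predict() uses these stage names
STAGES = ["W", "N1", "N2", "N3", "R"]


def one_hot_stages(hypno):
    """Map stage strings to integer classes, then to one-hot rows of length 5."""
    index = {stage: i for i, stage in enumerate(STAGES)}
    ints = np.array([index[s] for s in hypno])
    return np.eye(len(STAGES))[ints]
```

`np.argmax` on the result gives back the integer classes, which is what the LightGBM code relies on.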

(3) Fully connected network, 4-channel EEG

Channels: 'EEG F4-M1', 'EEG C4-M1', 'EEG O2-M1', 'EEG C3-M2'

Accuracy: 79.26%

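```python
from sklearn.model_selection import train_test_split
from tensorflow.keras.layers import Dense
from tensorflow.keras.models import Sequential

# X: (n_epochs, 336) = 4 EEG channels x 84 features; y: one-hot stage labels, shape (n_epochs, 5)

# Split into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Build the model
model = Sequential()
model.add(Dense(128, input_shape=(336,), activation='relu'))
model.add(Dense(64, activation='relu'))
model.add(Dense(5, activation='softmax'))  # 5-class classification

# The model must be compiled before fit(); settings assumed, matching the LSTM section below
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Train the model
model.fit(X_train, y_train, epochs=500, batch_size=32, validation_data=(X_test, y_test))
```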
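The 336-dimensional input is four 84-feature EEG blocks side by side. A minimal sketch of building such a matrix, assuming per-channel feature arrays with one row per epoch (the helper `stack_channel_features` is hypothetical); passing the channel list explicitly keeps the column order fixed across recordings:

```python
import numpy as np


def stack_channel_features(per_channel, channels):
    """per_channel: dict channel name -> (n_epochs, n_features) array.

    Concatenates the arrays column-wise in the order given by `channels`.
    """
    n_epochs = {per_channel[c].shape[0] for c in channels}
    if len(n_epochs) != 1:
        raise ValueError("all channels must have the same number of epochs")
    return np.hstack([per_channel[c] for c in channels])
```

The same function covers the 504-feature setups later on by appending 'EMG chin' (30 columns) and the two EOG channels (69 columns each) to the channel list.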

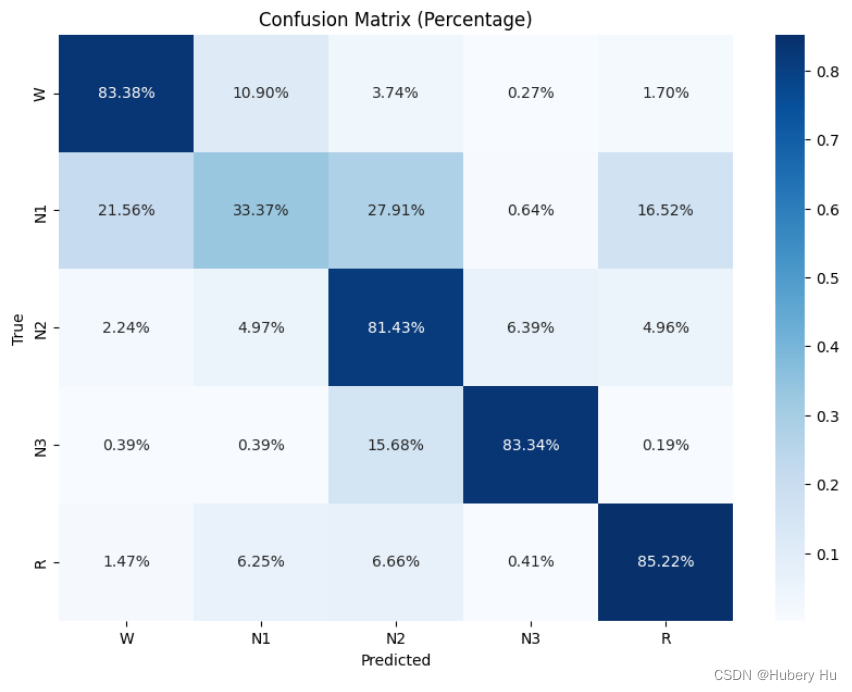

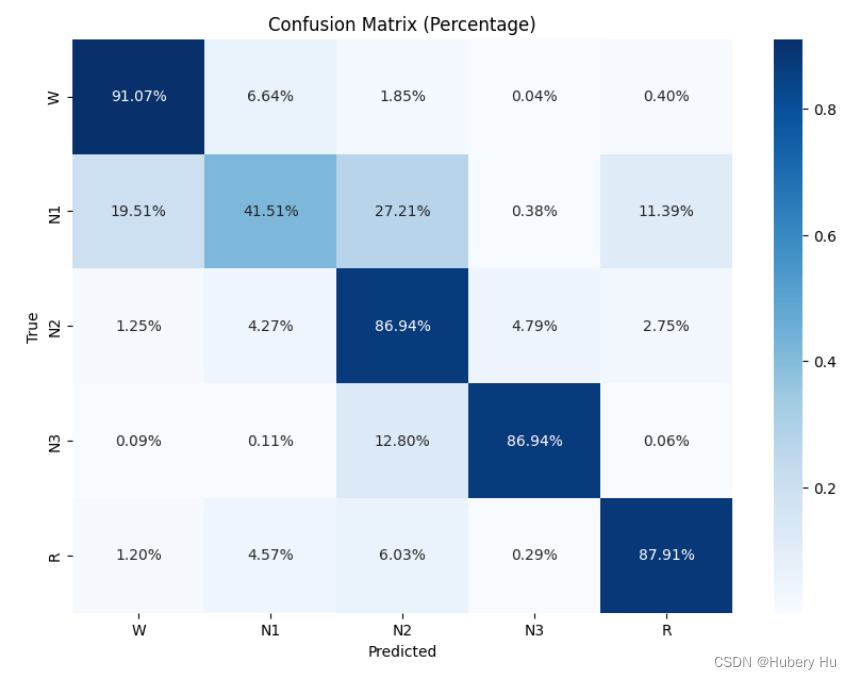

(4) LightGBM, 4-channel EEG

Channels: 'EEG F4-M1', 'EEG C4-M1', 'EEG O2-M1', 'EEG C3-M2'

Accuracy: 82.62%

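```python
import numpy as np
from lightgbm import LGBMClassifier
from sklearn.metrics import accuracy_score

# X_train, X_test, y_train, y_test come from the same train_test_split as above (y is one-hot)

# Create the LightGBM classifier
lgbm = LGBMClassifier(n_estimators=100, learning_rate=0.05)

# LightGBM expects integer class labels, so convert the one-hot labels to 1-D arrays
y_train_1d = np.argmax(y_train, axis=1)
y_test_1d = np.argmax(y_test, axis=1)

# Train on the 1-D labels
lgbm.fit(X_train, y_train_1d)

# Predict and evaluate
y_pred = lgbm.predict(X_test)
accuracy = accuracy_score(y_test_1d, y_pred)
print(f"Accuracy: {accuracy}")
```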

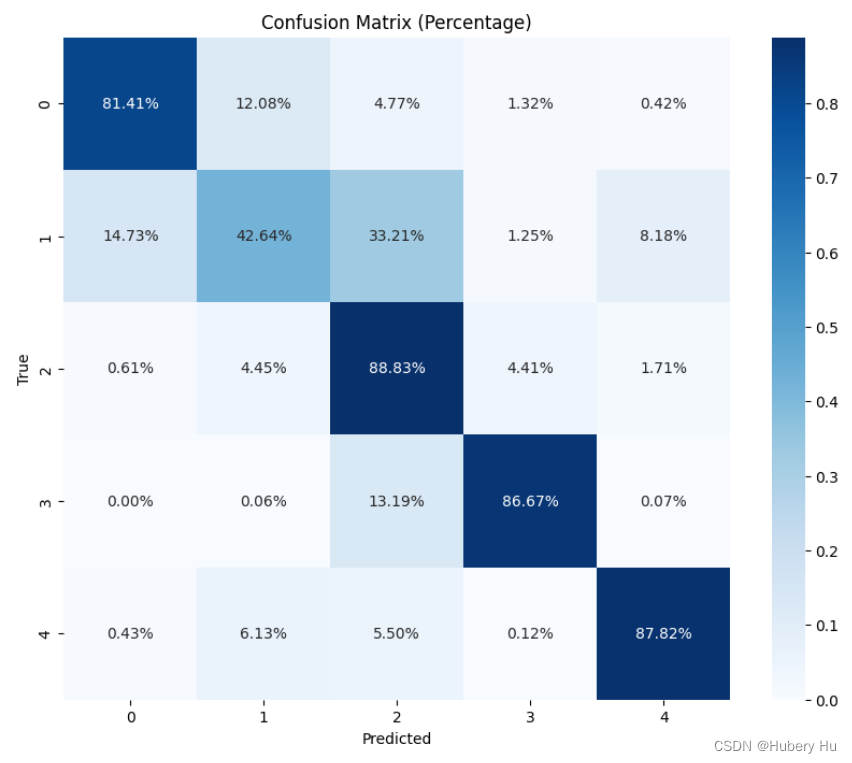

(5) Fully connected network, 4-channel EEG + EMG + 2-channel EOG

Channels: 'EEG F4-M1', 'EEG C4-M1', 'EEG O2-M1', 'EEG C3-M2', 'EMG chin', 'EOG E1-M2', 'EOG E2-M2'

Accuracy: 81.24%

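```python
from tensorflow.keras.layers import Dense
from tensorflow.keras.models import Sequential

# Build the model: input = 4 x 84 (EEG) + 30 (EMG) + 2 x 69 (EOG) = 504 features
model = Sequential()
model.add(Dense(128, input_shape=(504,), activation='relu'))
model.add(Dense(64, activation='relu'))
model.add(Dense(5, activation='softmax'))  # 5-class classification
```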

(6) LSTM, 4-channel EEG + EMG + 2-channel EOG

Channels: 'EEG F4-M1', 'EEG C4-M1', 'EEG O2-M1', 'EEG C3-M2', 'EMG chin', 'EOG E1-M2', 'EOG E2-M2'

Accuracy: 81.68%

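```python
from tensorflow.keras.layers import LSTM, Dense
from tensorflow.keras.models import Sequential


def build_lstm_model(input_shape, num_classes):
    # input_shape is (timesteps, features_per_step)
    model = Sequential()
    # Smaller variant that was also tried: model.add(LSTM(50, input_shape=input_shape))
    model.add(LSTM(100, input_shape=input_shape))
    model.add(Dense(num_classes, activation='softmax'))
    model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
    return model
```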
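`build_lstm_model` needs a 2-D `input_shape` of (timesteps, features), but the post does not say how the 504-dimensional feature vectors were arranged into sequences. One possibility, sketched here as an assumption, is to feed windows of consecutive epochs and predict the stage of the last epoch in each window (`make_epoch_sequences` is a hypothetical helper):

```python
import numpy as np


def make_epoch_sequences(X: np.ndarray, y: np.ndarray, seq_len: int):
    """Stack seq_len consecutive epochs per sample; the label is that of the last epoch.

    X: (n_epochs, n_features), y: (n_epochs, n_classes) one-hot.
    Returns X_seq: (n_epochs - seq_len + 1, seq_len, n_features) and matching y_seq.
    """
    n = X.shape[0] - seq_len + 1
    X_seq = np.stack([X[i:i + seq_len] for i in range(n)])
    y_seq = y[seq_len - 1:]
    return X_seq, y_seq
```

Windows should be built within each recording and only then concatenated, so that no sequence spans two nights; the model would then be created with `build_lstm_model((seq_len, 504), 5)`. The simplest alternative is a single timestep, `X[:, np.newaxis, :]` with `input_shape=(1, 504)`.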

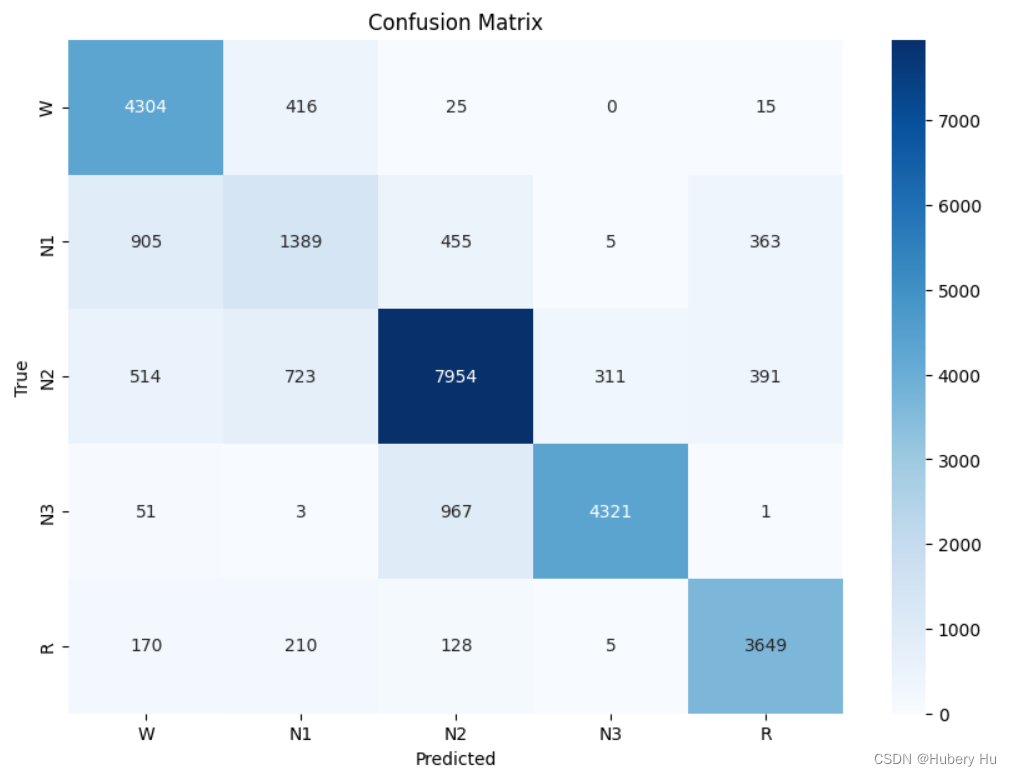

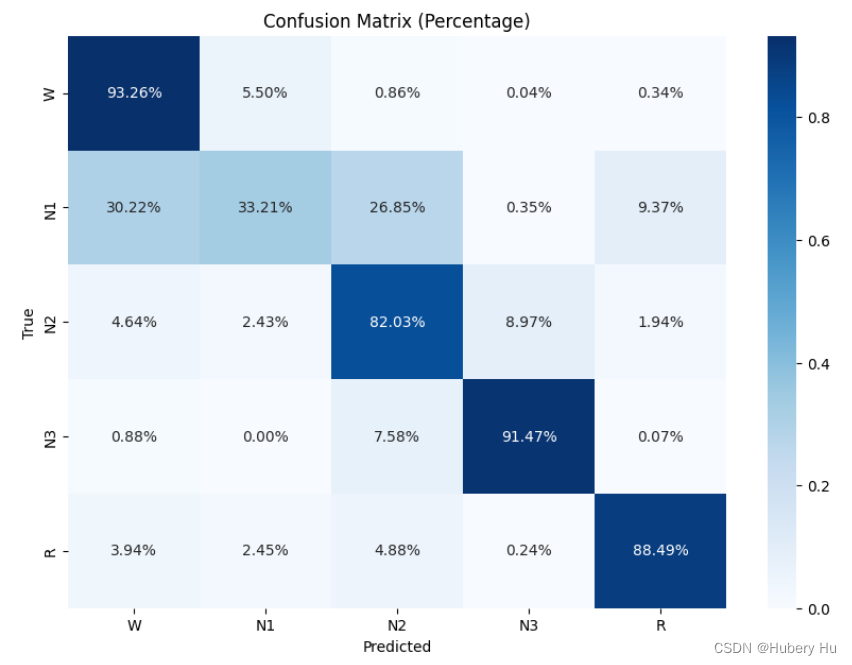

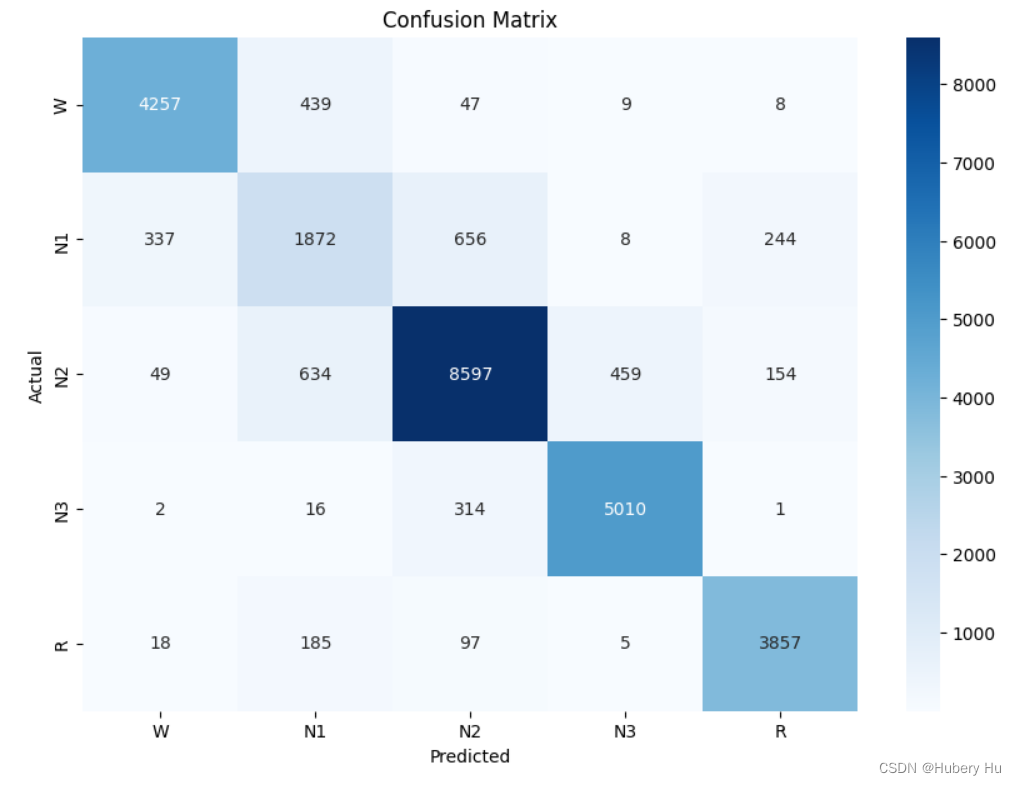

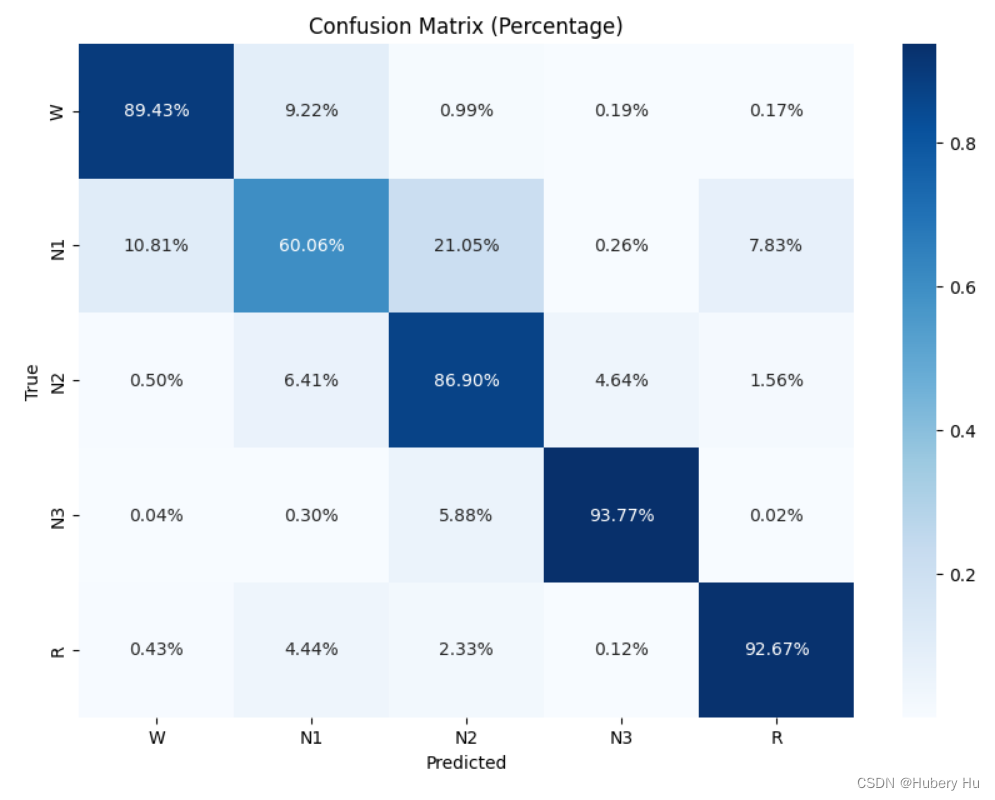

(7) LightGBM, 4-channel EEG + EMG + 2-channel EOG

Channels: 'EEG F4-M1', 'EEG C4-M1', 'EEG O2-M1', 'EEG C3-M2', 'EMG chin', 'EOG E1-M2', 'EOG E2-M2'

Accuracy: 84.22%

```python
from lightgbm import LGBMClassifier

# Create the LightGBM classifier (same settings as in (4))
lgbm = LGBMClassifier(n_estimators=100, learning_rate=0.05)
```

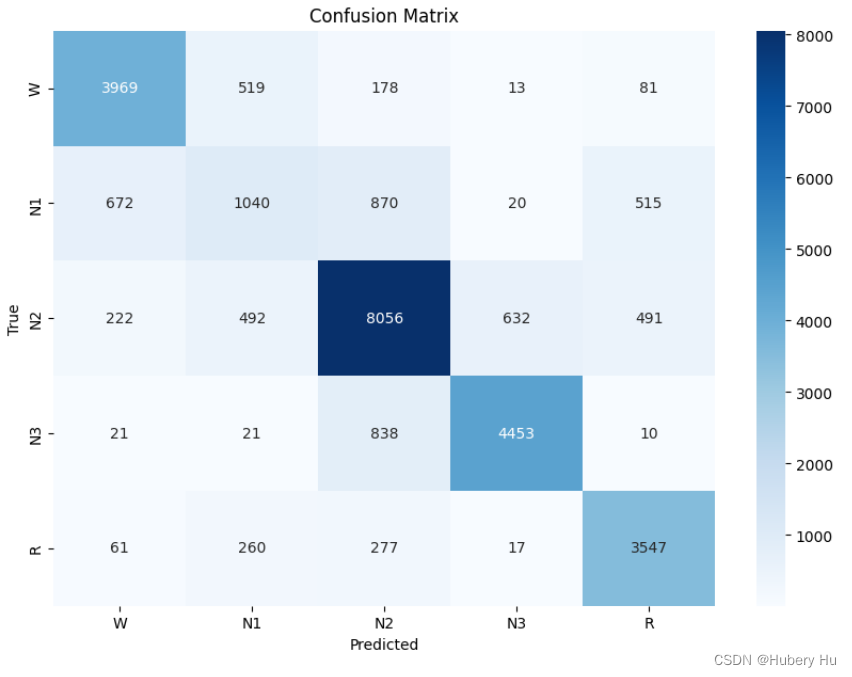

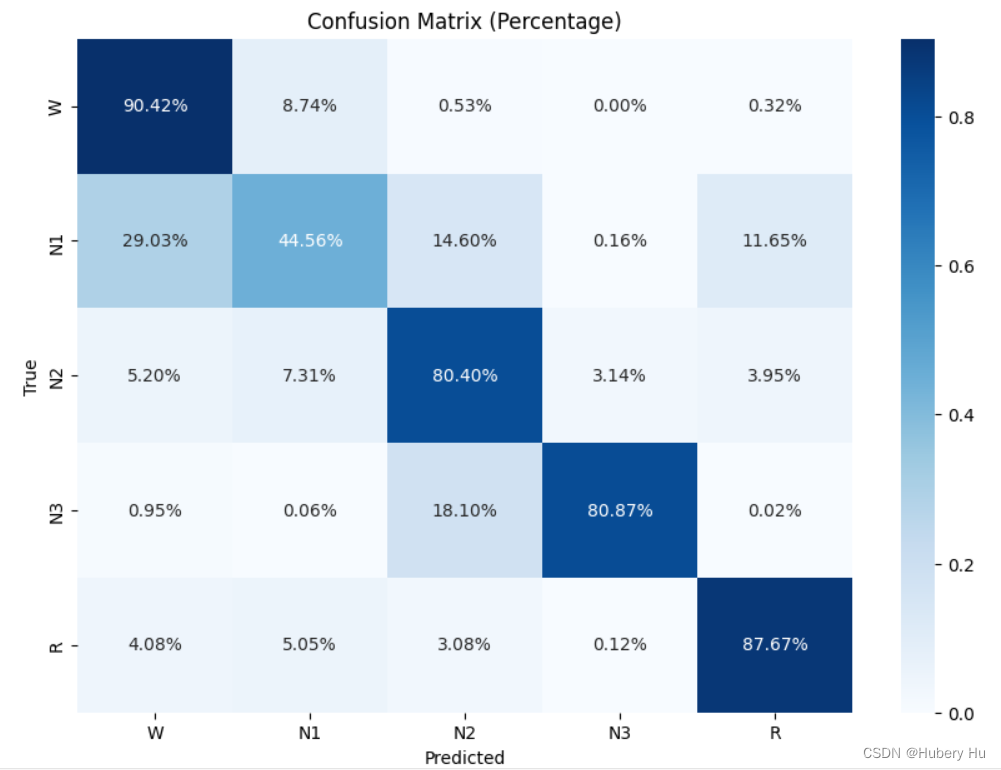

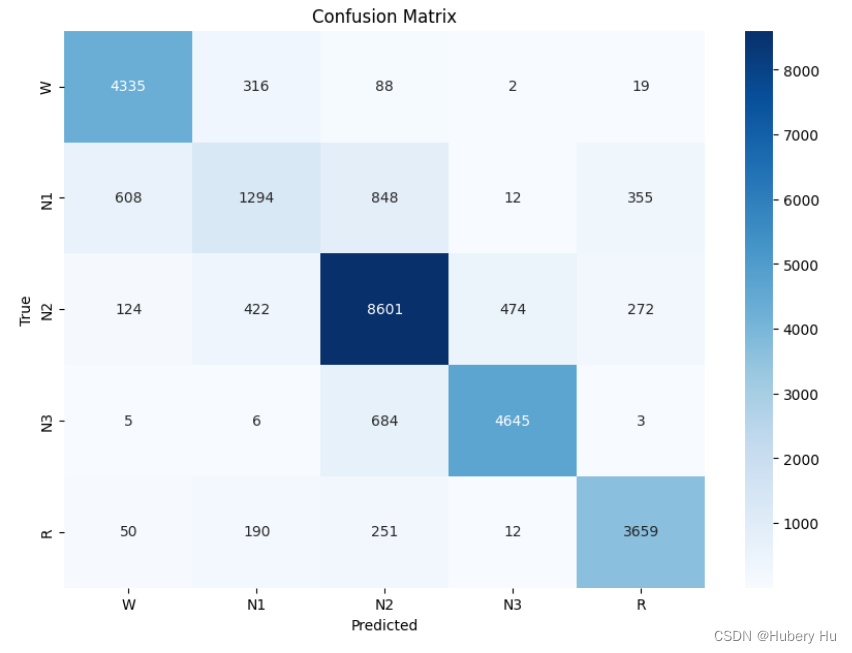

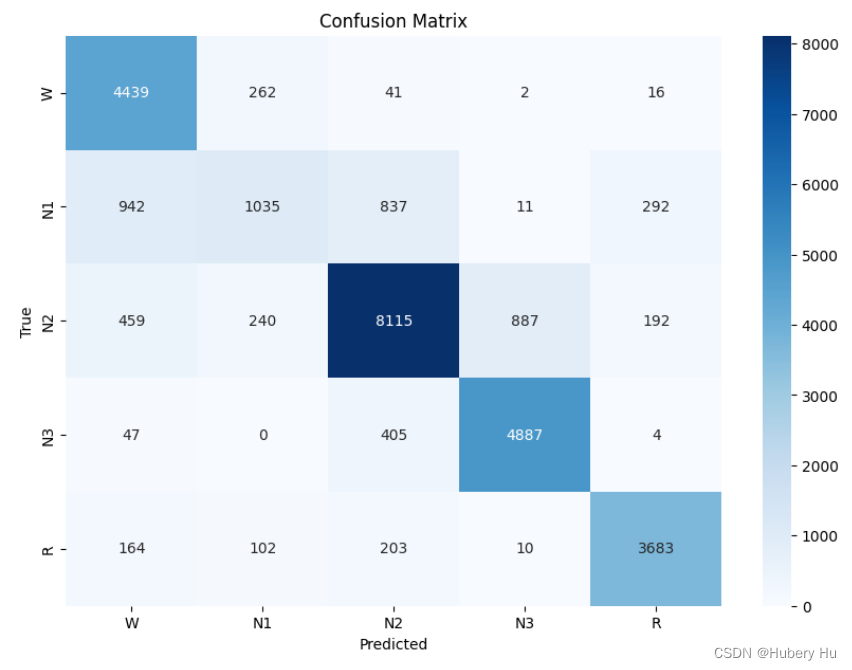

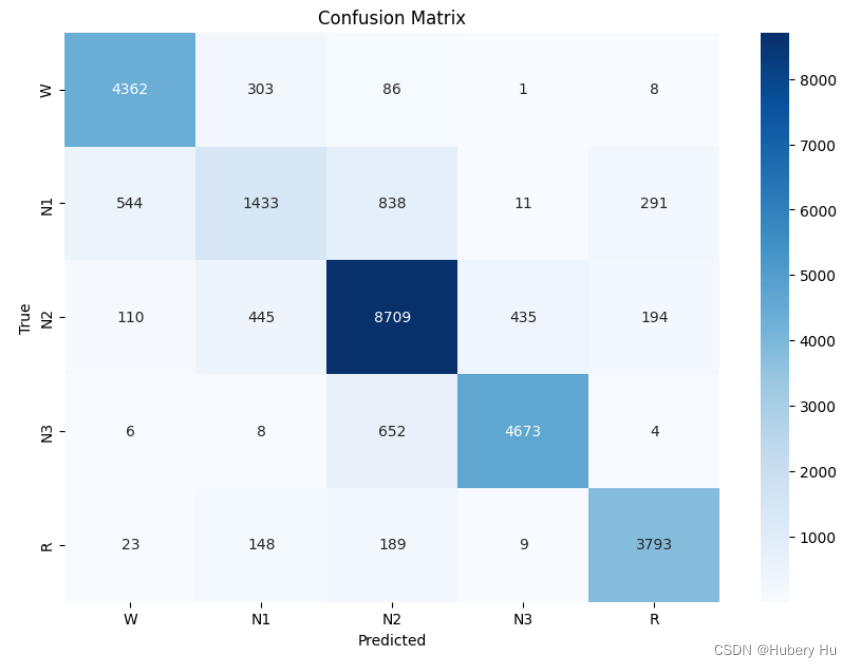

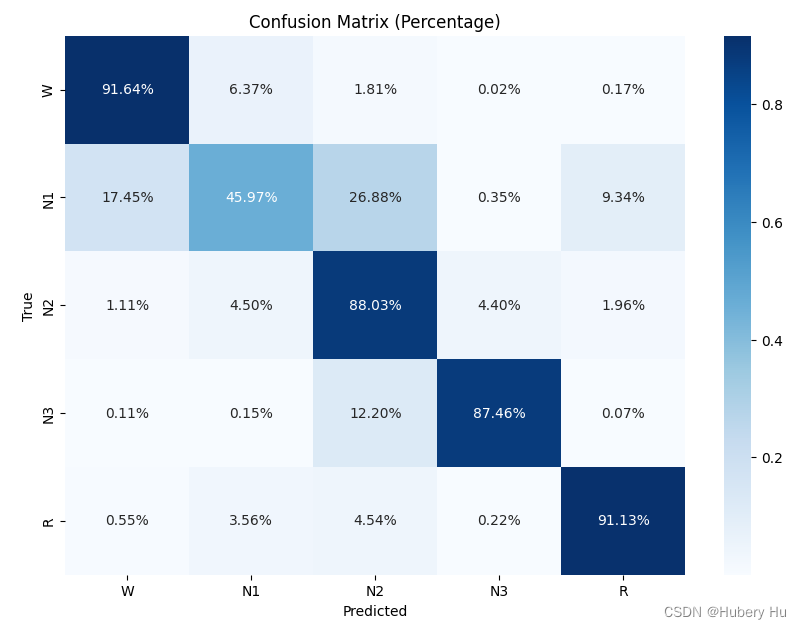

(8) CNN, 4-channel EEG + EMG + 2-channel EOG

Channels: 'EEG F4-M1', 'EEG C4-M1', 'EEG O2-M1', 'EEG C3-M2', 'EMG chin', 'EOG E1-M2', 'EOG E2-M2'

Accuracy: 86.50%

```python
import numpy as np
import tensorflow as tf

# The features are 1-D vectors, so add a channel axis for Conv1D: (n, 504) -> (n, 504, 1)
X_train = X_train[..., np.newaxis]
X_test = X_test[..., np.newaxis]

# Build the model
model = tf.keras.models.Sequential([
    tf.keras.layers.Conv1D(filters=64, kernel_size=3, activation='relu', input_shape=(504, 1)),
    tf.keras.layers.Conv1D(filters=64, kernel_size=3, activation='relu'),
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.MaxPooling1D(pool_size=2),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(100, activation='relu'),
    tf.keras.layers.Dense(5, activation='softmax'),  # 5-class classification
])
```
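It helps to track the tensor length through this network. Both `Conv1D` layers use Keras's default `'valid'` padding and stride 1, and `MaxPooling1D(pool_size=2)` uses stride 2 by default, so the shapes can be worked out by hand; the helper names below are illustrative only:

```python
def conv1d_valid(length: int, kernel_size: int) -> int:
    """Output length of a stride-1 Conv1D with 'valid' padding."""
    return length - kernel_size + 1


def pool1d_valid(length: int, pool_size: int) -> int:
    """Output length of MaxPooling1D with stride = pool_size and 'valid' padding."""
    return (length - pool_size) // pool_size + 1


def flattened_size(n_features: int = 504, filters: int = 64) -> int:
    length = conv1d_valid(n_features, 3)  # first Conv1D: 504 -> 502
    length = conv1d_valid(length, 3)      # second Conv1D: 502 -> 500
    length = pool1d_valid(length, 2)      # MaxPooling1D: 500 -> 250
    return length * filters               # Flatten: 250 positions x 64 filters
```

So `Flatten` emits 16,000 values, and the following `Dense(100)` alone holds 16,000 × 100 + 100 = 1,600,100 parameters, which makes it by far the largest layer in the model.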
