LVM - System

Published: December 22, 2023

# Common Linux filesystems: ext4, xfs, vfat (readable by both Linux and Windows)

mkfs.ext4 /dev/sdb1 # format as ext4

mkfs.xfs /dev/sdb2 # format as xfs

mkfs.vfat /dev/sdb1 # format as vfat

mkswap /dev/vdb # initialize as swap space
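The commands above follow one pattern: the filesystem type selects the tool. A minimal sketch of that mapping, written as a dry-run helper. The function name `format_cmd` is hypothetical, and it only prints the command instead of running it, since formatting destroys whatever is on the device.

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) the format command for a device.
# Usage: format_cmd FSTYPE DEVICE
format_cmd() {
    fstype=$1
    device=$2
    case "$fstype" in
        ext4|xfs|vfat) echo "mkfs.$fstype $device" ;;
        swap)          echo "mkswap $device" ;;
        *)             echo "unsupported filesystem type: $fstype" >&2; return 1 ;;
    esac
}

format_cmd ext4 /dev/sdb1
format_cmd swap /dev/vdb
```

Printing the command first makes it easy to double-check the device name before anything is overwritten.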

I. Concepts

1. Key terms

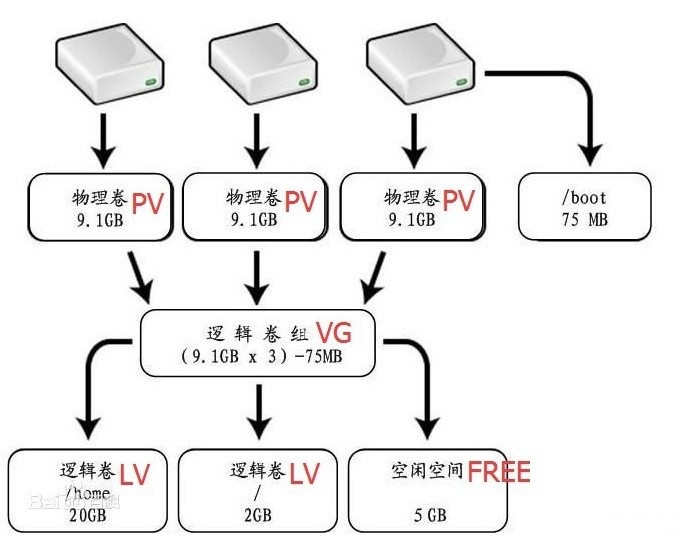

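- LVM: Logical Volume Manager

- LVM is short for Logical Volume Manager, the logical volume management layer on Linux

- Physical storage media

- The system's storage hardware, such as the disks /dev/hda, /dev/sda, and /dev/vda; this is the foundation of the storage stack

- Physical volume (PV)

- A physical volume is the lowest layer of LVM. It carries LVM's management metadata and can be a whole physical disk or a partition on one, for example an MBR or GPT partition, as well as a RAID device, a loopback file, and so on

- Volume group (VG)

- A volume group is a collection of one or more physical volumes and appears in the device tree as /dev/VG_NAME

- Logical volume (LV)

- A logical volume is built on top of a volume group and is made up of physical extents (PEs). It behaves like a virtual partition and appears as /dev/VG_NAME/LV_NAME

- Unallocated space in a volume group can be used to create new logical volumes, and an existing logical volume can be grown or shrunk later. A filesystem (mounted at /home or /usr, for example) is created on top of the logical volume

- Physical extent (PE)

- Each physical volume is divided into basic units called physical extents. The PE is the smallest unit of physical storage in a volume group; it defaults to 4 MiB and the size is configurable

- All PVs in a volume group share the same PE size: when a new PV joins a VG, it is divided using the PE size defined for that VG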
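Because space is handed out in whole extents, an LV's size is always a multiple of the PE size. The arithmetic can be sketched as below, assuming the default 4 MiB PE. `extents_for_gib` is an illustrative name, not an LVM command.

```shell
#!/bin/sh
# Illustrative arithmetic only: extents needed for a size given in GiB.
# $1 = size in GiB, $2 = PE size in MiB (defaults to 4, the LVM default).
extents_for_gib() {
    size_gib=$1
    pe_mib=${2:-4}
    # 1 GiB = 1024 MiB; round up so a partial extent counts as a whole one.
    echo $(( (size_gib * 1024 + pe_mib - 1) / pe_mib ))
}

extents_for_gib 5       # 5 GiB at 4 MiB per extent
extents_for_gib 10 4    # 10 GiB at 4 MiB per extent
```

The first result agrees with the `lvextend` output later in this article, which reports 5.00 GiB as 1280 extents.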

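2. How it works

- The idea behind LVM is simple: it wraps the underlying physical disks in an abstraction layer and presents them to the layers above as logical volumes. With traditional disk management, applications reach a filesystem that sits directly on a disk partition. With LVM, storage operations no longer target partitions; they target logical volumes, and LVM maps those onto the disks underneath. If a physical disk is added, for example, the services above do not notice, because all they ever see is the logical volume

- LVM's defining feature is dynamic disk management. A logical volume can be resized without losing the data already on it, and adding a new disk does not disturb the existing logical volumes. As a dynamic disk management mechanism, LVM makes storage administration far more flexible

3. Advantages

- Compared with traditional partitioning, LVM is more flexible: several disks can be treated as one large disk, and a single volume can span many disks

- When resizing a logical volume (LV), its position on disk does not matter, and there is no need to find contiguous free space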
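The layering described in this section (disks become PVs, PVs are pooled into a VG, LVs are carved out of the VG) maps onto three commands. Below is a dry-run sketch that only prints them. `lvm_plan` and its argument order are hypothetical, and the sample names are borrowed from the worked example in section II.

```shell
#!/bin/sh
# Hypothetical dry-run helper: print the commands that build the
# PV -> VG -> LV stack, without executing anything.
# Usage: lvm_plan VG_NAME LV_NAME SIZE DISK...
lvm_plan() {
    vg=$1
    lv=$2
    size=$3
    shift 3
    echo "pvcreate $*"                      # 1. initialise each disk as a PV
    echo "vgcreate $vg $*"                  # 2. pool the PVs into one VG
    echo "lvcreate -L $size -n $lv $vg"     # 3. carve an LV out of the VG
}

lvm_plan yaya Moon001 10G /dev/sdb /dev/sdc
```

Each command works only on the layer beneath it, which is why the order matters.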

II. Worked examples

1. Creating from scratch

- A fresh setup on a server

# Install the LVM tools

yum -y install lvm2

# List the block devices

[root@lvm ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

└─sda1 8:1 0 20G 0 part /

sdb 8:16 0 40G 0 disk

sdc 8:32 0 10G 0 disk

sr0 11:0 1 973M 0 rom

# Create the physical volumes (PVs)

[root@lvm ~]# pvcreate /dev/sdb /dev/sdc

Physical volume "/dev/sdb" successfully created.

Physical volume "/dev/sdc" successfully created.

# List the physical volumes; two PVs now exist (both are whole disks, not partitions)

[root@lvm ~]# pvs

PV VG Fmt Attr PSize PFree

/dev/sdb lvm2 --- 40.00g 40.00g

/dev/sdc lvm2 --- 10.00g 10.00g

## pvscan shows the same information

pvscan

# Create the volume group (VG)

[root@lvm ~]# vgcreate yaya /dev/sdb /dev/sdc

Volume group "yaya" successfully created

# Check the volume group: yaya totals 49.99 GiB, all of it free

[root@lvm ~]# vgs

VG #PV #LV #SN Attr VSize VFree

yaya 2 0 0 wz--n- 49.99g 49.99g

# Create logical volumes (LVs); several can be created in the same VG

# Syntax: lvcreate -L SIZE -n LV_NAME VG_NAME

[root@lvm ~]# lvcreate -L 10G -n Moon001 yaya

Logical volume "Moon001" created.

[root@lvm ~]# lvcreate -L 5G -n Moon002 yaya

Logical volume "Moon002" created.

[root@lvm ~]# lvcreate -L 5G -n Moon003 yaya

Logical volume "Moon003" created.

# List the LVs just created

[root@lvm ~]# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

Moon001 yaya -wi-a----- 10.00g

Moon002 yaya -wi-a----- 5.00g

Moon003 yaya -wi-a----- 5.00g

## lvscan shows the LVs as well

[root@lvm ~]# lvscan

ACTIVE '/dev/yaya/Moon001' [10.00 GiB] inherit

ACTIVE '/dev/yaya/Moon002' [5.00 GiB] inherit

ACTIVE '/dev/yaya/Moon003' [5.00 GiB] inherit

# Show detailed information about the VG

[root@lvm ~]# vgdisplay yaya

--- Volume group ---

VG Name yaya

System ID

Format lvm2

Metadata Areas 2

Metadata Sequence No 4

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 3

Open LV 0

Max PV 0

Cur PV 2

Act PV 2

VG Size 49.99 GiB

PE Size 4.00 MiB

Total PE 12798

Alloc PE / Size 5120 / 20.00 GiB

Free PE / Size 7678 / 29.99 GiB

VG UUID P52FYJ-i06q-rifK-W7Nf-HjCN-4gmb-ZVj7KC
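The extent counts in this `vgdisplay` output can be cross-checked by hand: free PE is total PE minus allocated PE, and multiplying by the PE size gives the free space. Below is a small sketch of that arithmetic. `free_pe_report` is a hypothetical name, and the inputs are the figures shown above.

```shell
#!/bin/sh
# Illustrative check of the vgdisplay figures: free extents and their size.
# $1 = total PE, $2 = allocated PE, $3 = PE size in MiB.
free_pe_report() {
    awk -v total="$1" -v alloc="$2" -v pe="$3" 'BEGIN {
        free = total - alloc
        printf "%d PE / %.2f GiB\n", free, free * pe / 1024
    }'
}

free_pe_report 12798 5120 4
```

The result should line up with the "Free PE / Size" row of the output.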

# Check the VG again

[root@lvm ~]# vgs

VG #PV #LV #SN Attr VSize VFree

yaya 2 3 0 wz--n- 49.99g 29.99g
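For scripting, the free space can be pulled out of the `vgs` table. The sketch below reads `vgs` output on standard input and assumes the default column layout shown above, where VFree is the seventh column. `vg_free` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: read `vgs` output on stdin and print the VFree
# column for the named volume group (assumes the default 7-column layout).
vg_free() {
    awk -v vg="$1" '$1 == vg { print $7 }'
}

# Sample input copied from the vgs output above; on a real host the
# equivalent would be: vgs | vg_free yaya
printf '%s\n' \
    'VG #PV #LV #SN Attr VSize VFree' \
    'yaya 2 3 0 wz--n- 49.99g 29.99g' | vg_free yaya
```

Matching on the first column keeps the header row and any other volume groups out of the result.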

# List the block devices again; three lvm entries have appeared

[root@lvm ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

└─sda1 8:1 0 20G 0 part /

sdb 8:16 0 40G 0 disk

├─yaya-Moon001 253:0 0 10G 0 lvm

├─yaya-Moon002 253:1 0 5G 0 lvm

└─yaya-Moon003 253:2 0 5G 0 lvm

sdc 8:32 0 10G 0 disk

sr0 11:0 1 973M 0 rom

# Show how the LVs in the volume group map to device-mapper nodes

[root@lvm ~]# ll /dev/yaya/

total 0

lrwxrwxrwx 1 root root 7 Mar 14 22:13 Moon001 -> ../dm-0

lrwxrwxrwx 1 root root 7 Mar 14 22:13 Moon002 -> ../dm-1

lrwxrwxrwx 1 root root 7 Mar 14 22:13 Moon003 -> ../dm-2
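Each LV is reachable under two names: /dev/VG_NAME/LV_NAME, as listed above, and /dev/mapper/VG_NAME-LV_NAME, which is the form `df` prints later in this article. Below is a sketch of how the second name is derived. `mapper_path` is an illustrative helper, and `my-vg` is a made-up VG name used only to show the hyphen rule.

```shell
#!/bin/sh
# Illustrative helper: derive the /dev/mapper path for a VG/LV pair.
# Device-mapper joins the two names with "-" and doubles any hyphen
# that is part of a name, so the separator stays unambiguous.
mapper_path() {
    vg=$(printf '%s' "$1" | sed 's/-/--/g')
    lv=$(printf '%s' "$2" | sed 's/-/--/g')
    echo "/dev/mapper/$vg-$lv"
}

mapper_path yaya Moon001
mapper_path my-vg data     # hypothetical VG name containing a hyphen
```

Either path can be used with `mount` or in /etc/fstab; both resolve to the same dm-N node.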

# Format the LVs; many filesystem types are possible, with ext4, xfs, and vfat being common choices

mkfs.ext4 /dev/yaya/Moon001

mkfs.xfs /dev/yaya/Moon002

mkfs.vfat /dev/yaya/Moon003

# Sample output from one of the format runs

[root@lvm ~]# mkfs.ext4 /dev/yaya/Moon001

mke2fs 1.42.9 (28-Dec-2013)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=0 blocks, Stripe width=0 blocks

655360 inodes, 2621440 blocks

131072 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=2151677952

80 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632

Allocating group tables: done

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

# Create the mount points and mount the LVs

mkdir /Moon-{a,b,c}

mount /dev/yaya/Moon001 /Moon-a

mount /dev/yaya/Moon002 /Moon-b

mount /dev/yaya/Moon003 /Moon-c

# Verify the mounts; the Type column shows each filesystem type

[root@lvm ~]# df -TH

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 943M 0 943M 0% /dev

tmpfs tmpfs 954M 0 954M 0% /dev/shm

tmpfs tmpfs 954M 10M 944M 2% /run

tmpfs tmpfs 954M 0 954M 0% /sys/fs/cgroup

/dev/sda1 xfs 22G 2.0G 20G 9% /

tmpfs tmpfs 191M 0 191M 0% /run/user/0

/dev/mapper/yaya-Moon001 ext4 11G 38M 9.9G 1% /Moon-a

/dev/mapper/yaya-Moon002 xfs 5.4G 34M 5.4G 1% /Moon-b

/dev/mapper/yaya-Moon003 vfat 5.4G 4.1k 5.4G 1% /Moon-c

# Add the entries to /etc/fstab so the LVs are mounted automatically at boot

vim /etc/fstab

UUID=85fd024b-5296-406d-a63b-50f19e292839 / xfs defaults 0 0

/dev/yaya/Moon001 /Moon-a ext4 defaults 0 0

/dev/yaya/Moon002 /Moon-b xfs defaults 0 0

/dev/yaya/Moon003 /Moon-c vfat defaults 0 0
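The three LVM entries share one shape: device, mount point, filesystem type, then `defaults 0 0`. Below is a minimal sketch that generates lines in that shape. `fstab_line` is a hypothetical helper, and it only prints text; it does not touch /etc/fstab.

```shell
#!/bin/sh
# Hypothetical helper: print an /etc/fstab entry in the same form as the
# lines above (options "defaults", dump 0, fsck pass 0).
# Usage: fstab_line DEVICE MOUNTPOINT FSTYPE
fstab_line() {
    printf '%s %s %s defaults 0 0\n' "$1" "$2" "$3"
}

fstab_line /dev/yaya/Moon001 /Moon-a ext4
fstab_line /dev/yaya/Moon002 /Moon-b xfs
fstab_line /dev/yaya/Moon003 /Moon-c vfat
```

After editing /etc/fstab, running `mount -a` is a common way to confirm that the entries mount cleanly before the next reboot.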

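2. Adding to an existing setup

- Adding a disk to a server that already has physical volumes and a volume group

# A new 20G disk, sdd, has been attached and needs to join the yaya VG created above (the LV sizes below differ from section 1, presumably because this output was captured after the LVs had been extended)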

[root@lvm ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

└─sda1 8:1 0 20G 0 part /

sdb 8:16 0 40G 0 disk

├─yaya-Moon001 253:0 0 12G 0 lvm

├─yaya-Moon002 253:1 0 7G 0 lvm

└─yaya-Moon003 253:2 0 7.5G 0 lvm

sdc 8:32 0 10G 0 disk

sdd 8:48 0 20G 0 disk

sr0 11:0 1 973M 0 rom

# Turn the disk into a physical volume

pvcreate /dev/sdd

[root@lvm ~]# pvcreate /dev/sdd

Physical volume "/dev/sdd" successfully created.

[root@lvm ~]# pvscan

PV /dev/sdb VG yaya lvm2 [<40.00 GiB / <13.50 GiB free]

PV /dev/sdc VG yaya lvm2 [<10.00 GiB / <10.00 GiB free]

PV /dev/sdd lvm2 [20.00 GiB]

Total: 3 [69.99 GiB] / in use: 2 [49.99 GiB] / in no VG: 1 [20.00 GiB]
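In this `pvscan` output, a PV that already belongs to a VG has the literal word `VG` as its third field, while the new disk does not. The sketch below uses that to list unassigned PVs. `unassigned_pvs` is a hypothetical helper, and it assumes the output layout shown above.

```shell
#!/bin/sh
# Hypothetical helper: read `pvscan` output on stdin and print the PVs
# that do not belong to any VG. Relies on the layout shown above, where
# an assigned PV has the word "VG" as its third field.
unassigned_pvs() {
    awk '$1 == "PV" && $3 != "VG" { print $2 }'
}

# Sample input copied from the pvscan output above; on a real host the
# equivalent would be: pvscan | unassigned_pvs
printf '%s\n' \
    'PV /dev/sdb VG yaya lvm2 [<40.00 GiB / <13.50 GiB free]' \
    'PV /dev/sdc VG yaya lvm2 [<10.00 GiB / <10.00 GiB free]' \
    'PV /dev/sdd lvm2 [20.00 GiB]' \
    'Total: 3 [69.99 GiB] / in use: 2 [49.99 GiB] / in no VG: 1 [20.00 GiB]' |
    unassigned_pvs
```

The devices it prints are the candidates for `vgextend`.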

# Add the new physical volume to the existing yaya volume group

vgextend yaya /dev/sdd

[root@lvm ~]# vgextend yaya /dev/sdd

Volume group "yaya" successfully extended

[root@lvm ~]# pvscan

PV /dev/sdb VG yaya lvm2 [<40.00 GiB / <13.50 GiB free]

PV /dev/sdc VG yaya lvm2 [<10.00 GiB / <10.00 GiB free]

PV /dev/sdd VG yaya lvm2 [<20.00 GiB / <20.00 GiB free]

Total: 3 [<69.99 GiB] / in use: 3 [<69.99 GiB] / in no VG: 0 [0 ]

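# Give all remaining free space in the VG to the LV you want to grow (on a default CentOS install the root LV is usually the device /dev/mapper/centos-root)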

lvextend -l +100%FREE /dev/yaya/Moon002

# For xfs, grow the filesystem to match the new LV size

xfs_growfs /dev/yaya/Moon002

# For ext4, grow the filesystem with resize2fs instead, e.g. resize2fs /dev/yaya/Moon001
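Extending the LV and growing the filesystem are separate steps, and the second one depends on the filesystem type. Below is a dry-run sketch of that choice. `grow_cmd` is hypothetical and prints the command without running it. It passes the mount point to `xfs_growfs`, which is the form given in that tool's man page; some xfsprogs versions also accept the device path of a mounted filesystem, as the article does.

```shell
#!/bin/sh
# Hypothetical helper: print the command that grows a filesystem after
# its LV has been extended. Usage: grow_cmd FSTYPE DEVICE MOUNTPOINT
grow_cmd() {
    case "$1" in
        xfs)  echo "xfs_growfs $3" ;;   # xfs: addressed by mount point
        ext4) echo "resize2fs $2" ;;    # ext4: addressed by device path
        *)    echo "no grow command known for: $1" >&2; return 1 ;;
    esac
}

grow_cmd xfs  /dev/yaya/Moon002 /Moon-b
grow_cmd ext4 /dev/yaya/Moon001 /Moon-a
```

Until the filesystem is grown, `df` keeps reporting the old size even though the LV is already larger.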

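3. Extending a logical volume

# Use lvextend to grow a logical volume

# Syntax: lvextend -L SIZE (units such as M or G, e.g. +5G) -r LV_PATH; -r also resizes the filesystem on the LV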

[root@lvm ~]# lvextend -L +2G -r /dev/yaya/Moon002

Size of logical volume yaya/Moon002 changed from 5.00 GiB (1280 extents) to 7.00 GiB (1792 extents).

Logical volume yaya/Moon002 successfully resized.

meta-data=/dev/mapper/yaya-Moon002 isize=512 agcount=4, agsize=327680 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0 spinodes=0

data = bsize=4096 blocks=1310720, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

# Confirm that the extra space is available

[root@lvm ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 900M 0 900M 0% /dev

tmpfs 910M 0 910M 0% /dev/shm

tmpfs 910M 9.5M 901M 2% /run

tmpfs 910M 0 910M 0% /sys/fs/cgroup

/dev/sda1 20G 1.8G 19G 9% /

tmpfs 182M 0 182M 0% /run/user/0

/dev/mapper/yaya-Moon001 12G 41M 12G 1% /Moon-a

/dev/mapper/yaya-Moon002 7.0G 33M 7.0G 1% /Moon-b

/dev/mapper/yaya-Moon003 5.0G 4.0K 5.0G 1% /Moon-c
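An extension succeeds only if the VG has enough free extents, so it can help to check the numbers first. Below is a sketch of that check. `can_extend` is a hypothetical helper, and the sample input (7678 free extents of 4 MiB) is taken from the `vgdisplay` output in section 1, not from the state of the VG at this point.

```shell
#!/bin/sh
# Illustrative pre-check: does the VG have enough free extents for an
# extension of N GiB? $1 = free PE, $2 = PE size in MiB, $3 = GiB to add.
can_extend() {
    needed=$(( $3 * 1024 / $2 ))
    if [ "$needed" -le "$1" ]; then
        echo "ok: need $needed of $1 free extents"
    else
        echo "insufficient: need $needed, only $1 free extents"
        return 1
    fi
}

can_extend 7678 4 2            # a +2G extension needs 512 extents
can_extend 7678 4 30 || true   # 30 GiB would need 7680 extents
```

The second call shows why a VG reporting 29.99g free cannot satisfy a request for a full 30G.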

Source: https://blog.csdn.net/moon_naxx/article/details/135139192

