Integrating ClickHouse into Spring Boot for Reads and Writes

Published: January 11, 2024

The previous article covered how to set up ClickHouse in Docker. This one records how to integrate ClickHouse into Spring Boot and read and write data with it.

1. Add the dependencies

```xml
<dependency>
    <groupId>com.baomidou</groupId>
    <artifactId>mybatis-plus-boot-starter</artifactId>
    <version>3.4.3.4</version>
</dependency>

<dependency>
    <groupId>mysql</groupId>
    <artifactId>mysql-connector-java</artifactId>
</dependency>

<!-- Upgraded Druid: ClickHouse is supported from 1.1.10 onward -->
<dependency>
    <groupId>com.alibaba</groupId>
    <artifactId>druid-spring-boot-starter</artifactId>
    <version>1.1.13</version>
</dependency>
```

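2. Configure the data source

```java
import com.alibaba.druid.pool.DruidDataSource;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

import javax.sql.DataSource;

@Configuration
public class DruidConfig {

    @Bean
    public DataSource dataSource() {
        DruidDataSource dataSource = new DruidDataSource();
        // ClickHouse HTTP interface (port 8123), database "test"
        dataSource.setUrl("jdbc:clickhouse://localhost:8123/test");
        // Pool sizing: 10 connections at startup, at most 100 active, at least 10 idle
        dataSource.setInitialSize(10);
        dataSource.setMaxActive(100);
        dataSource.setMinIdle(10);
        // -1: wait indefinitely for a free connection
        dataSource.setMaxWait(-1);
        // If the server requires credentials, set them with setUsername/setPassword
        return dataSource;
    }
}
```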

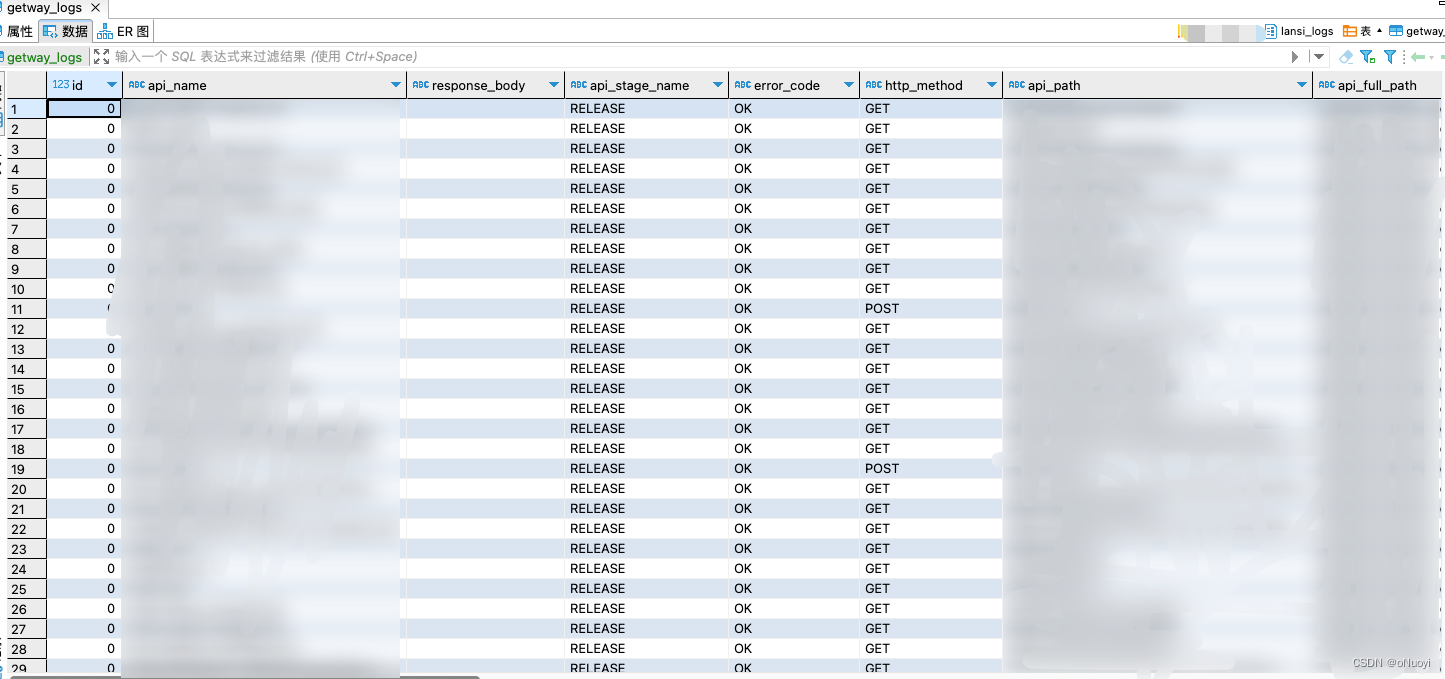
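The dependency list above does not show a ClickHouse JDBC driver explicitly, but opening a `jdbc:clickhouse:` URL still requires one on the classpath (directly or transitively). When no driver class is set, Druid tries to infer it from the URL prefix, so "no driver" errors at startup usually come down to which driver jar is actually present. The helper below is a hypothetical diagnostic sketch (`DriverCheck` is a made-up name). The two candidate class names are, as far as I know, those of the legacy `ru.yandex` driver and the newer `com.clickhouse` driver; check them against the driver version you really use.

```java
import java.util.Optional;

/**
 * Hypothetical diagnostic helper: reports which of the given JDBC driver
 * classes can be loaded from the current classpath.
 */
public final class DriverCheck {

    private DriverCheck() {
    }

    /** Returns the first class name that can be loaded, if any. */
    public static Optional<String> firstLoadable(String... classNames) {
        for (String name : classNames) {
            try {
                // initialize = false: only check presence, don't run static initializers
                Class.forName(name, false, DriverCheck.class.getClassLoader());
                return Optional.of(name);
            } catch (ClassNotFoundException e) {
                // Not on the classpath; try the next candidate
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        Optional<String> found = firstLoadable(
                "ru.yandex.clickhouse.ClickHouseDriver",  // legacy driver class (assumed name)
                "com.clickhouse.jdbc.ClickHouseDriver");  // newer driver class (assumed name)
        System.out.println(found.map(n -> "Found driver: " + n)
                .orElse("No ClickHouse JDBC driver on the classpath"));
    }
}
```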

3. Write the table entity class. It is written exactly as it would be for MySQL; I had already created the corresponding table with DBeaver.

```java
package cn.yufire.sync.sls.getway.logs.pojo;

import com.baomidou.mybatisplus.annotation.TableField;
import com.baomidou.mybatisplus.annotation.TableName;
import lombok.*;

import java.io.Serializable;

@Builder
@AllArgsConstructor
@NoArgsConstructor
@TableName(value = "getway_logs")
@Data
public class GetwayLogs implements Serializable {

    /** ID */
    @TableField(value = "id")
    private Long id;

    /** API name, as defined on the gateway */
    @TableField(value = "api_name")
    private String apiName;

    /** Response body */
    @TableField(value = "response_body")
    private String responseBody;

    /** Stage the call was made in: TEST, RELEASE, PRE */
    @TableField(value = "api_stage_name")
    private String apiStageName;

    /** Error code */
    @TableField(value = "error_code")
    private String errorCode;

    /** HTTP method */
    @TableField(value = "http_method")
    private String httpMethod;

    /** API request path */
    @TableField(value = "api_path")
    private String apiPath;

    /** Full API request URL */
    @TableField(value = "api_full_path")
    private String apiFullPath;

    /** Request time */
    @TableField(value = "request_time")
    private String requestTime;

    /** Request body */
    @TableField(value = "request_body")
    private String requestBody;

    /** Gateway request ID, generated by Alibaba Cloud */
    @TableField(value = "getway_request_id")
    private String getwayRequestId;

    /** Alibaba Cloud gateway app ID */
    @TableField(value = "getway_app_id")
    private String getwayAppId;

    /** Request protocol: HTTP, HTTPS, ... */
    @TableField(value = "request_protocol")
    private String requestProtocol;

    /** Random string (nonce) generated by the client for the call */
    @TableField(value = "client_nonce")
    private String clientNonce;

    /** Gateway app name */
    @TableField(value = "getway_app_name")
    private String getwayAppName;

    /** Gateway group ID */
    @TableField(value = "getway_group_id")
    private String getwayGroupId;

    /** Client IP */
    @TableField(value = "client_ip")
    private String clientIp;

    /** Domain bound to the gateway */
    @TableField(value = "getway_bind_domain")
    private String getwayBindDomain;

    /** Request body size */
    @TableField(value = "request_size")
    private Integer requestSize;

    /** Response body size */
    @TableField(value = "response_size")
    private Integer responseSize;

    /** HTTP status code returned by the backend application */
    @TableField(value = "app_response_code")
    private Integer appResponseCode;

    /**
     * Group name.
     * Unlike the other mappings, this column name is camelCase; it must match the actual table column.
     */
    @TableField(value = "apiGroupName")
    private String apiGroupName;
}
```

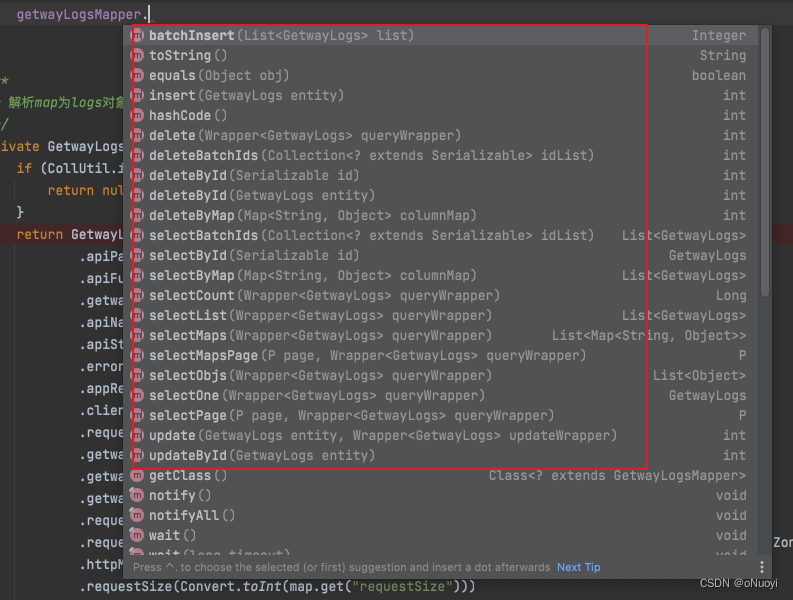
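The entity keeps `requestTime` as a `String`. Assuming the `request_time` column is a ClickHouse `DateTime` (the article does not show the table definition), the string needs to be in ClickHouse's default text format `YYYY-MM-DD hh:mm:ss`. A minimal sketch of producing it from a `LocalDateTime`; the class `ClickHouseTimes` is hypothetical and time-zone handling is deliberately left out.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

/**
 * Hypothetical helper, assuming request_time is a ClickHouse DateTime column
 * while GetwayLogs stores it as a String.
 */
public final class ClickHouseTimes {

    // ClickHouse's default text format for DateTime values: YYYY-MM-DD hh:mm:ss
    private static final DateTimeFormatter DATE_TIME =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    private ClickHouseTimes() {
    }

    /** Formats a timestamp for GetwayLogs.requestTime (no time-zone conversion). */
    public static String toDateTimeString(LocalDateTime time) {
        return time.format(DATE_TIME);
    }

    public static void main(String[] args) {
        // prints 2024-01-11 09:05:03
        System.out.println(toDateTimeString(LocalDateTime.of(2024, 1, 11, 9, 5, 3)));
    }
}
```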

4. Create a mapper for CRUD operations

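Since I only need batch inserts into ClickHouse, I wrote just one batch insert statement. MyBatis-Plus does ship a built-in batch method (`saveBatch` in `IService`), but it does not run a single SQL statement: it executes many single-row INSERT statements as a JDBC batch. So I hand-wrote a multi-row INSERT instead. There is a pitfall here: the batch insert into ClickHouse threw an error, while single-row inserts worked fine. Searching online turned up various small problems with batch inserts; the fix was to add `FORMAT` before `Values`, i.e. `insert into x(xx,xx) FORMAT Values (...)`, after which the error went away.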

```java
package cn.yufire.sync.sls.getway.logs.mapper;

import cn.yufire.sync.sls.getway.logs.pojo.GetwayLogs;
import com.baomidou.mybatisplus.core.mapper.BaseMapper;
import org.apache.ibatis.annotations.Insert;

import java.util.List;

public interface GetwayLogsMapper extends BaseMapper<GetwayLogs> {

    /**
     * Batch-inserts gateway logs with one multi-row INSERT statement.
     *
     * @param list the rows to insert
     * @return the affected row count, as reported by the driver
     */
    @Insert("<script>insert into getway_logs(" +
            "api_name," +
            "response_body," +
            "api_stage_name," +
            "error_code," +
            "http_method," +
            "api_path," +
            "api_full_path," +
            "request_time," +
            "request_body," +
            "getway_request_id," +
            "getway_app_id," +
            "request_protocol," +
            "client_nonce," +
            "getway_app_name," +
            "getway_group_id," +
            "client_ip," +
            "getway_bind_domain," +
            "request_size," +
            "response_size," +
            "app_response_code" +
            // FORMAT before Values avoids the batch-insert error described above
            ") FORMAT Values " +
            // One "(...)" row per list element, separated by commas
            " <foreach collection=\"list\" item=\"item\" index=\"index\" separator=\",\">\n" +
            " (" +
            "#{item.apiName}," +
            "#{item.responseBody}," +
            "#{item.apiStageName}," +
            "#{item.errorCode}," +
            "#{item.httpMethod}," +
            "#{item.apiPath}," +
            "#{item.apiFullPath}," +
            "#{item.requestTime}," +
            "#{item.requestBody}," +
            "#{item.getwayRequestId}," +
            "#{item.getwayAppId}," +
            "#{item.requestProtocol}," +
            "#{item.clientNonce}," +
            "#{item.getwayAppName}," +
            "#{item.getwayGroupId}," +
            "#{item.clientIp}," +
            "#{item.getwayBindDomain}," +
            "#{item.requestSize}," +
            "#{item.responseSize}," +
            "#{item.appResponseCode}" +
            ")\n" +
            " </foreach>" +
            "</script>")
    Integer batchInsert(List<GetwayLogs> list);
}
```
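To see what the `<foreach>` above actually sends, here is a hypothetical plain-Java sketch that builds the same statement shape: MyBatis turns every `#{...}` into a JDBC `?` placeholder and emits one `(...)` group per list element, joined by the `separator`. The class `BatchInsertSql` is made up for illustration and ignores the extra whitespace MyBatis leaves in the rendered SQL.

```java
import java.util.Collections;
import java.util.List;

/**
 * Hypothetical illustration of the SQL rendered from the <foreach> in
 * GetwayLogsMapper: one "?" per column, one "(...)" group per row.
 */
public final class BatchInsertSql {

    private BatchInsertSql() {
    }

    public static String build(String table, List<String> columns, int rows) {
        if (columns.isEmpty() || rows < 1) {
            throw new IllegalArgumentException("need at least one column and one row");
        }
        // One row of placeholders, e.g. "(?,?,?)"
        String row = "(" + String.join(",", Collections.nCopies(columns.size(), "?")) + ")";
        // Rows joined by commas, matching separator="," in the <foreach>
        String values = String.join(",", Collections.nCopies(rows, row));
        return "insert into " + table + "(" + String.join(",", columns) + ") FORMAT Values " + values;
    }

    public static void main(String[] args) {
        // prints: insert into getway_logs(api_name,http_method) FORMAT Values (?,?),(?,?)
        System.out.println(build("getway_logs", List.of("api_name", "http_method"), 2));
    }
}
```

The point of the sketch is that the whole list travels as a single statement with `columns × rows` parameters, which is what distinguishes it from the multi-statement JDBC batch used by `saveBatch`.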

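Call it from the business code:

```java
@Autowired
private GetwayLogsMapper getwayLogsMapper;

// Inside a business method: `logs` is the List<GetwayLogs> to save,
// and `log` is a logger, e.g. the one generated by Lombok's @Slf4j
log.info("Batch inserting into the database..., rows inserted: {}", getwayLogsMapper.batchInsert(logs));
```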
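`batchInsert` puts the whole list into one statement, so the statement grows with `logs`. If the list can get large, one option is to split it into fixed-size chunks and call `batchInsert` once per chunk. A minimal sketch; `Chunks` and the chunk size are assumptions to tune, not part of the original project.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical helper: splits a list into consecutive chunks of at most
 * chunkSize elements, e.g. to call batchInsert once per chunk.
 */
public final class Chunks {

    private Chunks() {
    }

    public static <T> List<List<T>> of(List<T> items, int chunkSize) {
        if (chunkSize < 1) {
            throw new IllegalArgumentException("chunkSize must be >= 1");
        }
        List<List<T>> chunks = new ArrayList<>();
        for (int start = 0; start < items.size(); start += chunkSize) {
            int end = Math.min(start + chunkSize, items.size());
            // Copy the subList view so later changes to items don't affect the chunk
            chunks.add(new ArrayList<>(items.subList(start, end)));
        }
        return chunks;
    }

    public static void main(String[] args) {
        // prints [[1, 2], [3, 4], [5]]
        System.out.println(of(List.of(1, 2, 3, 4, 5), 2));
    }
}
```

In the business code this would become a loop such as `for (List<GetwayLogs> chunk : Chunks.of(logs, 1000)) getwayLogsMapper.batchInsert(chunk);`, where 1000 is only an example size. A side effect is that an empty `logs` list produces no call at all, so no INSERT without rows is sent to the server.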

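As you can see, the CRUD code is exactly the same as it would be for MySQL; in other words, from the application's side you can simply use ClickHouse as if it were MySQL.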

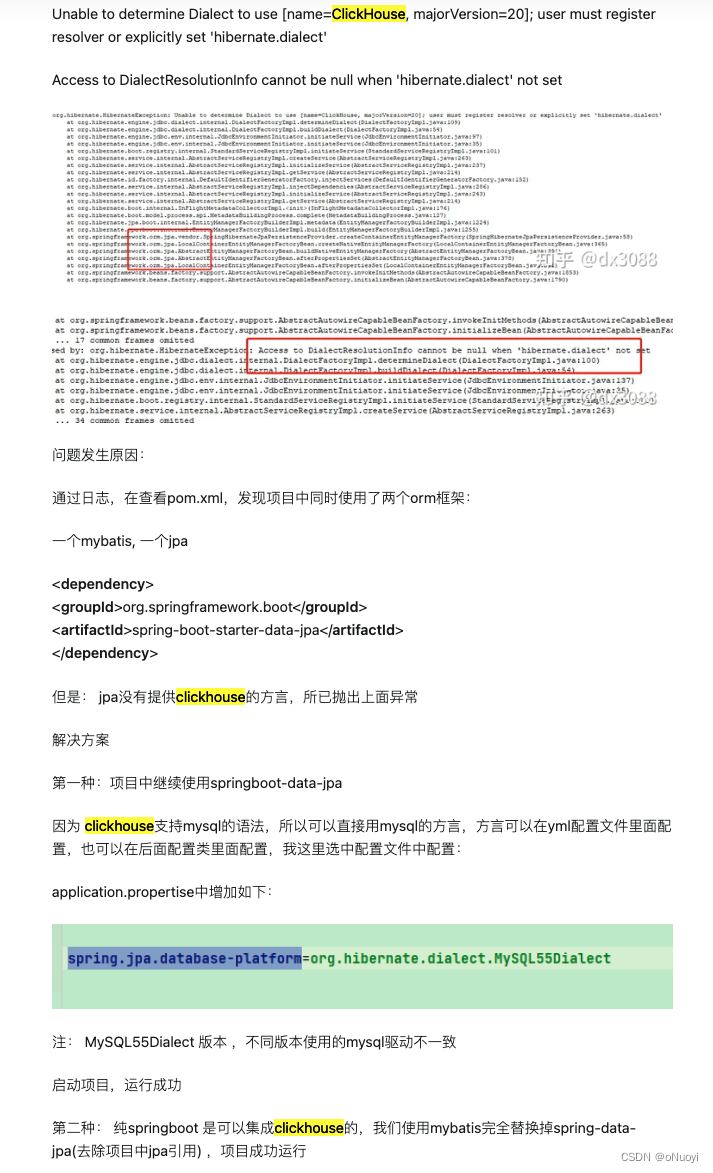

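I also ran into a few errors while integrating ClickHouse with Spring Boot:

ClickHouse exception, code: 1002

With both the ClickHouse dependency and the JPA dependency added, the application would not start: it kept reporting that no driver was available, and specifying a driver did not help either.

Integrating ClickHouse with Spring Boot also failed with: Unable to determine Dialect to use [name=ClickHouse, majorVersion=22]; user must register resolver or explicitly set 'hibernate.dialect'

After trying many suggestions found online, what finally worked was simply removing the ClickHouse dependency and the JPA dependency and treating ClickHouse as MySQL; after that everything ran normally.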

Source: https://blog.csdn.net/qq_41973632/article/details/135456196

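This article was contributed by an internet user; its views are the author's own and do not represent this site's position. This site only provides information storage space, does not own the rights, and bears no related legal responsibility. If the content infringes rights, violates laws, or is factually inaccurate, please report it to the 我的编程经验分享网 email chenni525@qq.com; once verified, it will be removed immediately.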
