使用docker-compose快速搭建ELK

发布时间:2024年01月12日

ubuntu系统

查看Elasticsearch版本

docker search Elasticsearch

拉取镜像(7.1版本)

docker pull docker.elastic.co/elasticsearch/elasticsearch:7.1.0

新建docker-compose.yml 文件

version: '2.2'

services:

cerebro:

image: lmenezes/cerebro:0.8.3

container_name: cerebro

ports:

- "9000:9000"

command:

- -Dhosts.0.host=http://elasticsearch:9200

kibana:

image: docker.elastic.co/kibana/kibana:7.1.0

container_name: kibana7

environment:

- I18N_LOCALE=zh-CN

- XPACK_GRAPH_ENABLED=true

- TIMELION_ENABLED=true

- XPACK_MONITORING_COLLECTION_ENABLED="true"

ports:

- "5601:5601"

elasticsearch:

image: docker.elastic.co/elasticsearch/elasticsearch:7.1.0

container_name: es7_01

environment:

- cluster.name=xttblog

- node.name=es7_01

- bootstrap.memory_lock=true

- "ES_JAVA_OPTS=-Xms512m -Xmx512m"

- discovery.seed_hosts=es7_01

- cluster.initial_master_nodes=es7_01,es7_02

ulimits:

memlock:

soft: -1

hard: -1

volumes:

- es7data1:/usr/share/elasticsearch/data

ports:

- 9200:9200

elasticsearch2:

image: docker.elastic.co/elasticsearch/elasticsearch:7.1.0

container_name: es7_02

environment:

- cluster.name=xttblog

- node.name=es7_02

- bootstrap.memory_lock=true

- "ES_JAVA_OPTS=-Xms512m -Xmx512m"

- discovery.seed_hosts=es7_01

- cluster.initial_master_nodes=es7_01,es7_02

ulimits:

memlock:

soft: -1

hard: -1

volumes:

- es7data2:/usr/share/elasticsearch/data

volumes:

es7data1:

driver: local

es7data2:

driver: local

运行docker中的compose文件(在上面yml文件的目录运行)

- docker-compose up -d : 在后台运行, 不打印日志

加大系统运行内存

- 如果报错, “max virtual memory areas vm.max_map_count [65530] is too low, increase to at least”那说明你设置的 max_map_count 小了

- 编辑 /etc/sysctl.conf

- 追加以下内容:vm.max_map_count=262144保存后

- 重新启动:sysctl -p

调整elasticsearch的jvm内存(额外操作,可以不加)

- [root@localhost /]# find / -name jvm.options

## JVM configuration

################################################################

## IMPORTANT: JVM heap size

################################################################

##

## You should always set the min and max JVM heap

## size to the same value. For example, to set

## the heap to 4 GB, set:

##

## -Xms4g

## -Xmx4g

##

## See https://www.elastic.co/guide/en/elasticsearch/reference/current/heap-size.html

## for more information

##

################################################################

# Xms represents the initial size of total heap space

# Xmx represents the maximum size of total heap space

-Xms1g #改成512m

-Xmx1g #改成512m

################################################################

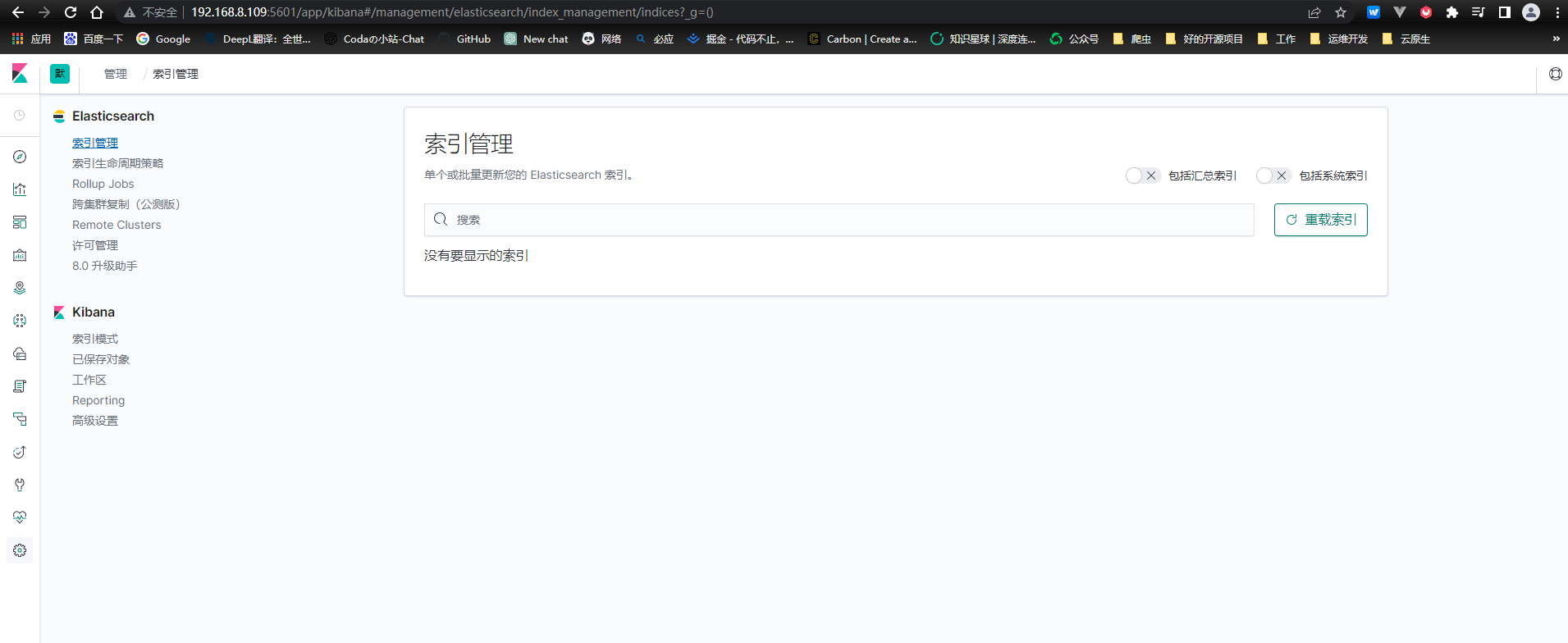

在浏览器去登录ES与kibana与cerebro

- 5601登录kibana

- 9200登录ES

- 9000登录cerebro

Linux安装logstash

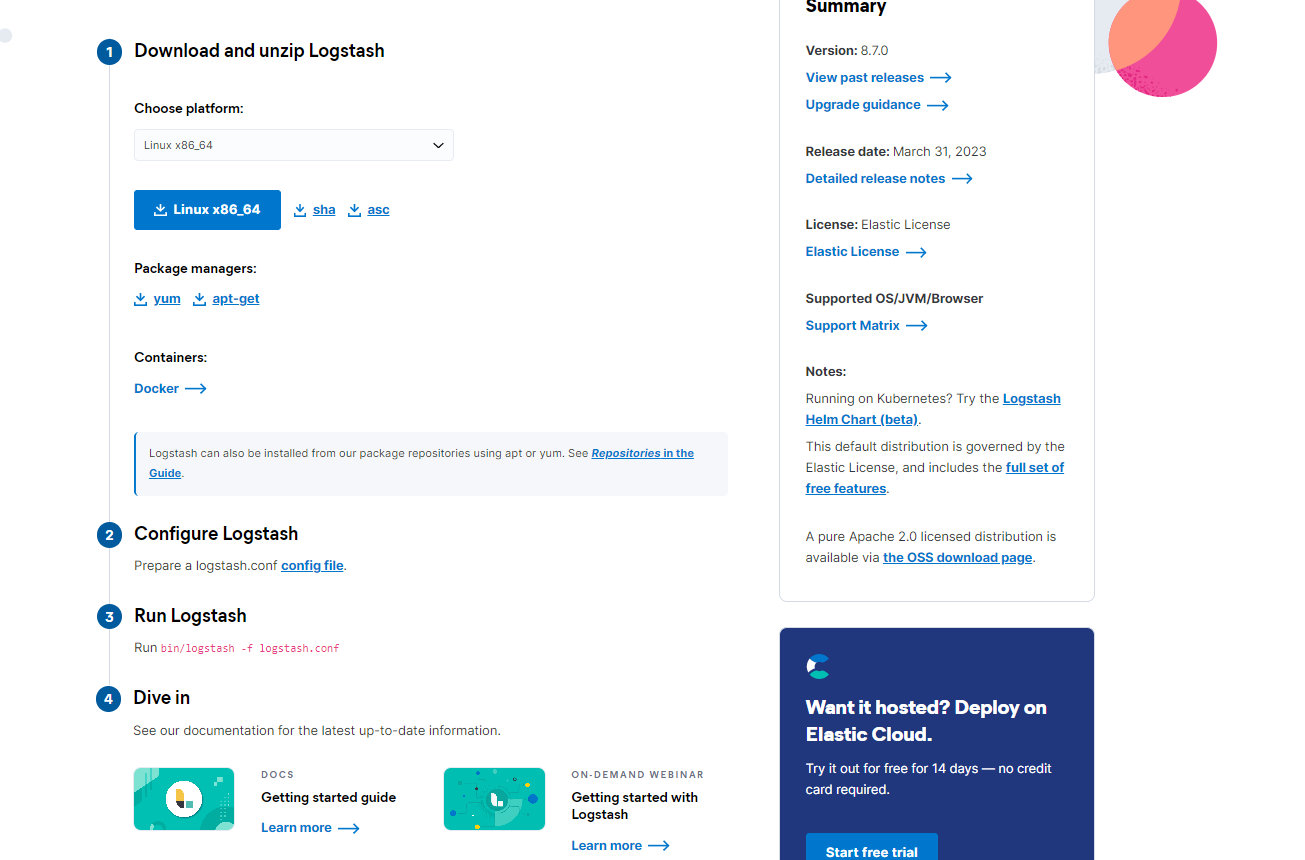

进入到elasticsearch官网下载和elasticsearch同版本的logstash

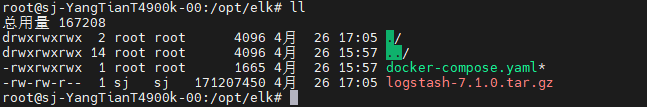

将logstash上传到服务器安装

解压logstash:

tar -zxvf logstash-7.1.0.tar.gz

顺便安装一个jdk1.8(安装好的可以跳过)

apt-get install openjdk-8-jdk

配置logstash的配置文件

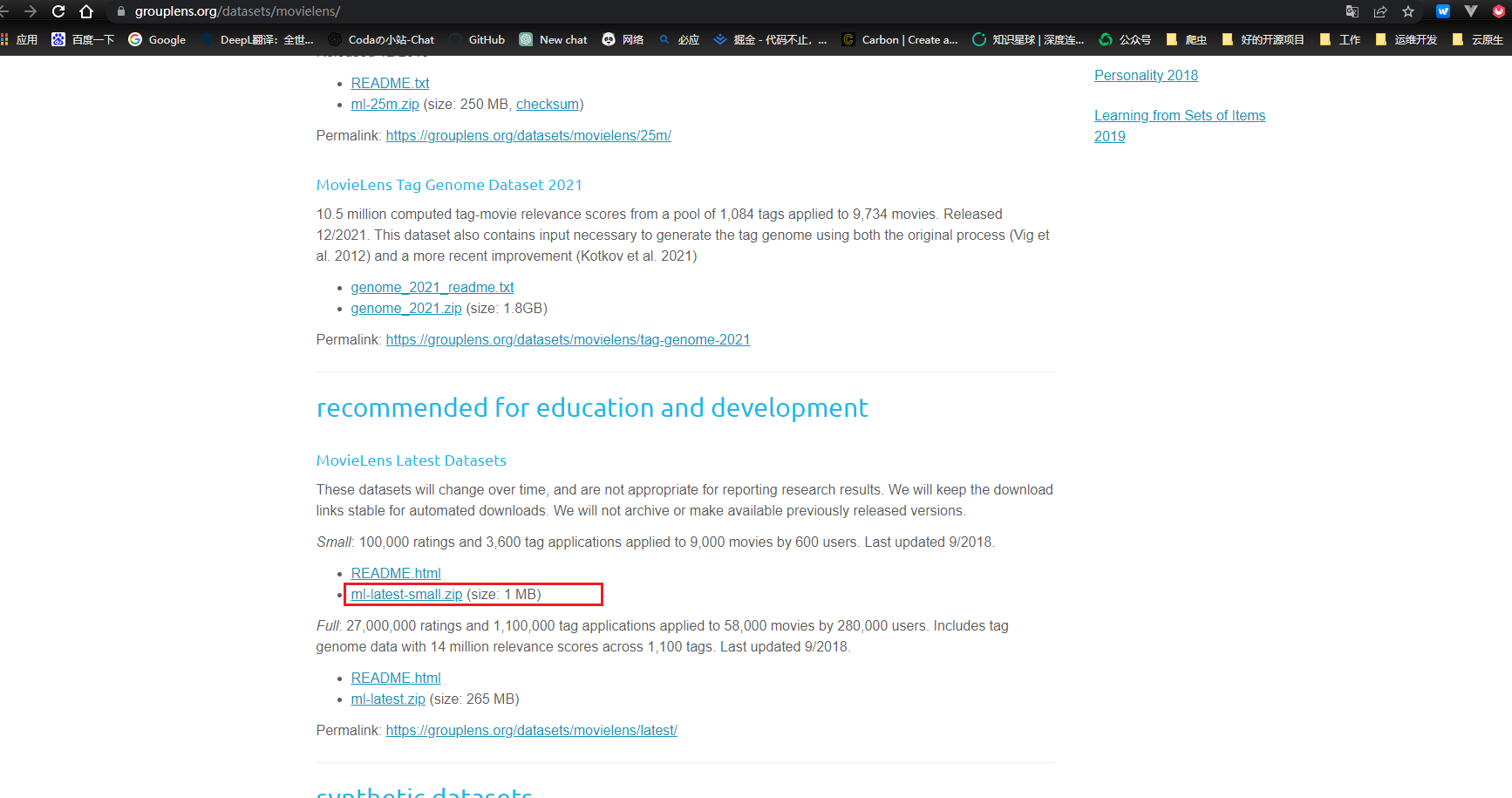

下载测试数据集

- 测试数据集, 就是在我们启动logstash的时候往ES中导入数据, 供我们测试

- 网站:https://grouplens.org/datasets/movielens/

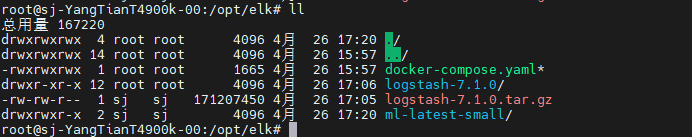

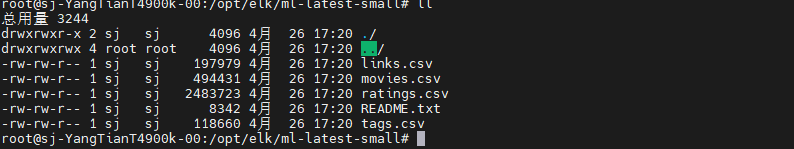

导入数据到elasticsearch

- 传入需要测试的数据集到

/opt/elk文件夹下:

- 数据集文件里面的movies.csv就是我们需要导入的数据

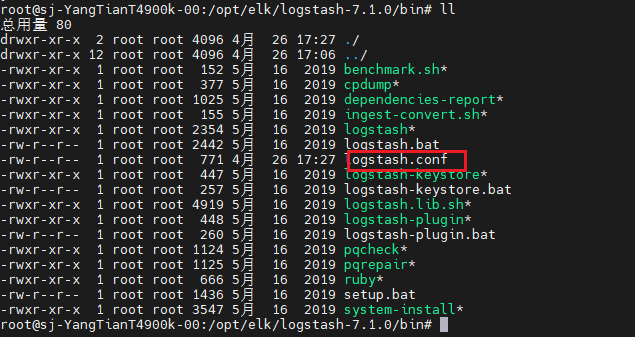

建立配置文件

在logstash的bin目录下新建配置文件

input {

file {

path => "/opt/elk/ml-latest-small/movies.csv"

start_position => "beginning"

sincedb_path => "/dev/null"

}

}

filter {

csv {

separator => ","

columns => ["id","content","genre"]

}

mutate {

split => { "genre" => "|"}

remove_field => ["path", "host", "@timestamp","message"]

}

mutate {

split => ["content", "("]

add_field => {"title" => "%{[content][0]}"}

add_field => {"year" => "%{[content][1]}"}

}

mutate {

convert => {

"year" => "integer"

}

strip => ["title"]

remove_field => ["path", "host", "@timestamp","message","content"]

}

}

output {

elasticsearch {

hosts => ["http://192.168.8.109:9200"]

index => "movies"

document_id => "%{id}"

}

stdout {}

}

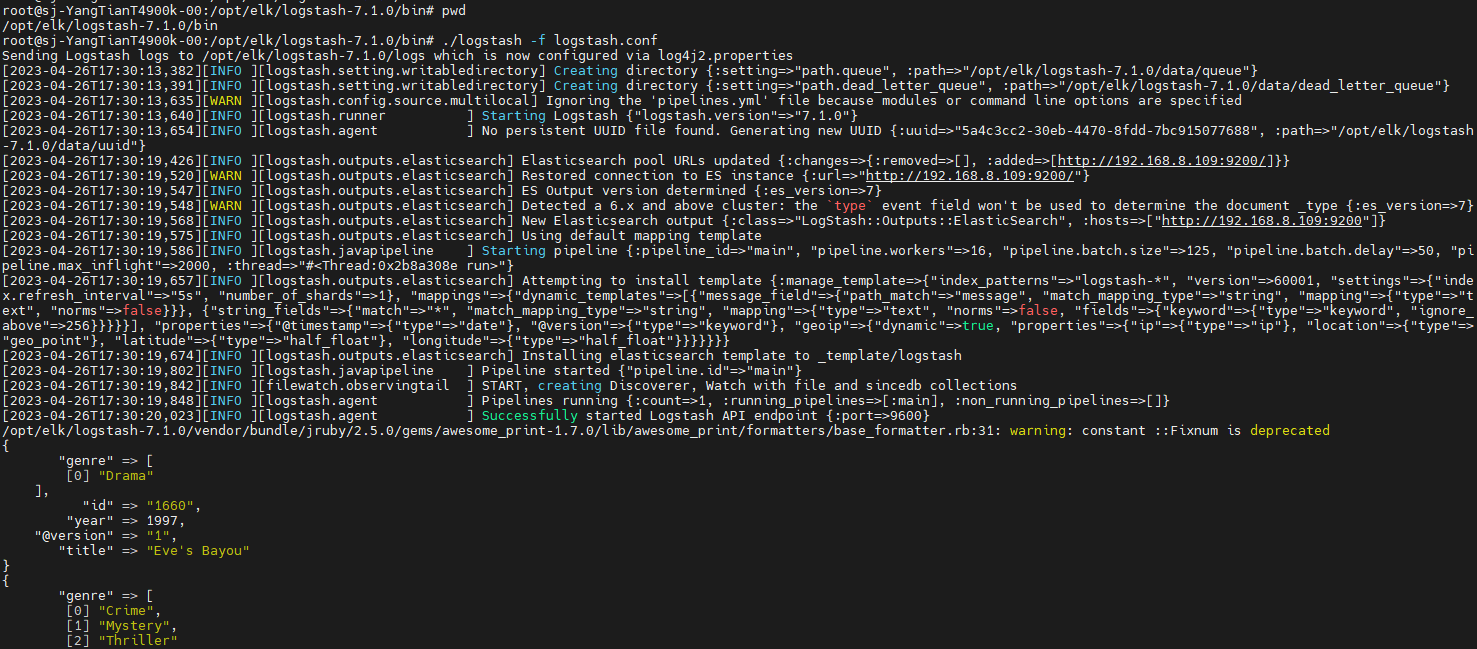

启动logstash

在logstash 的bin目录下启动

cd /opt/elk/logstash-7.1.0/bin && ./logstash -f logstash.conf

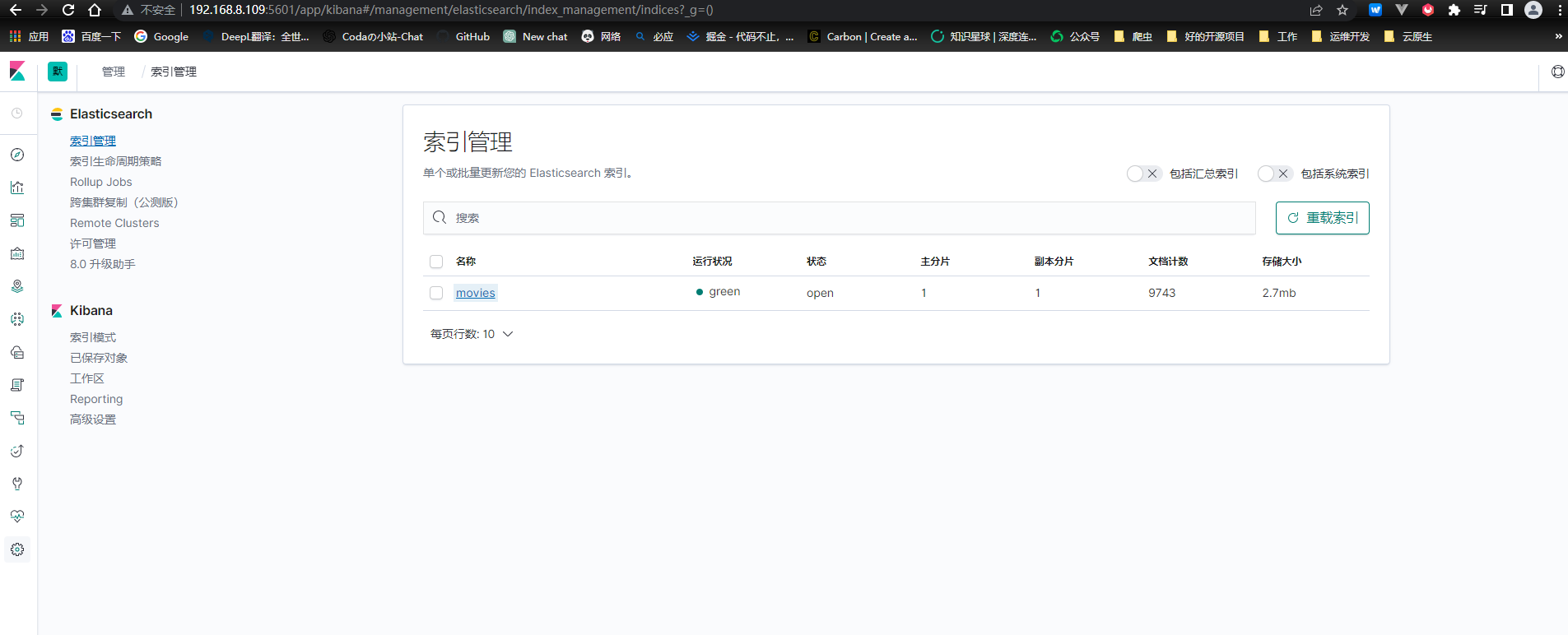

通过日志,我们可以看到数据被导入到elasticsearch中,我们同样可以在kibana中看到数据已经被导入elasticsearch。

微信公众号:海哥python

文章来源:https://blog.csdn.net/python_9k/article/details/135558116

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

最新文章

- Python教程

- 深入理解 MySQL 中的 HAVING 关键字和聚合函数

- Qt之QChar编码(1)

- MyBatis入门基础篇

- 用Python脚本实现FFmpeg批量转换

- 基于 MQTT 的开源桥接器:自由控制物联网设备 | 开源日报 No.151

- java自动化将用例和截图一起执行测试放入world中直接生成测试报告【搬代码】

- ARM汇编指令集-跳转与存储器访问指令

- 微服务架构所面临的技术问题

- java 如何正确使用多线程

- JL-03-Q6 校园气象站

- SoC芯片中的复位

- 宏基因组学Metagenome-磷循环Pcycle功能基因分析-从分析过程到代码及结果演示-超详细保姆级流程

- 手把手教你使用 PyTorch 搭建神经网络

- 网络通信(6)-ARP协议解析