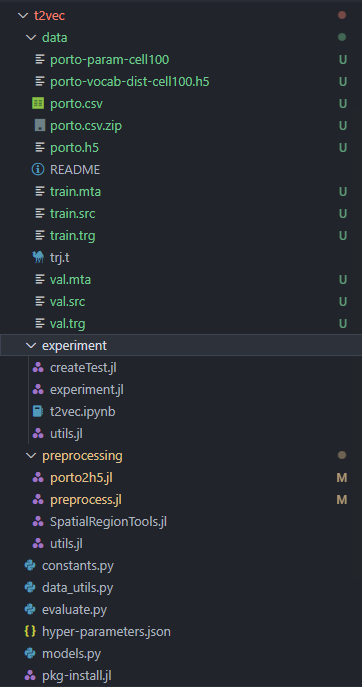

t2vec code

数据预处理执行过程

(base) zzq@server1:~/project/t2vec/preprocessing$ julia porto2h5.jl

Processing 1710660 trips…

100000

200000

300000

400000

500000

600000

700000

800000

900000

1000000

1100000

1200000

1300000

1400000

1500000

1600000

1700000

Incompleted trip: 0.

Saved 1704759 trips.

(base) zzq@server1:~/project/t2vec/preprocessing$ julia preprocess.jl

Please provide the correct hdf5 file /home/xiucheng/Github/t2vec/data/porto.h5

(base) zzq@server1:~/project/t2vec/preprocessing$ julia preprocess.jl

Building spatial region with:

cityname=porto,

minlon=-8.735152,

minlat=40.953673,

maxlon=-8.156309,

maxlat=41.307945,

xstep=100.0,

ystep=100.0,

minfreq=100

Creating paramter file /home/zzq/project/t2vec/data/porto-param-cell100

Processed 100000 trips

Processed 200000 trips

Processed 300000 trips

Processed 400000 trips

Processed 500000 trips

Processed 600000 trips

Processed 700000 trips

Processed 800000 trips

Processed 900000 trips

Processed 1000000 trips

Processed 1100000 trips

Processed 1200000 trips

Processed 1300000 trips

Processed 1400000 trips

Processed 1500000 trips

Processed 1600000 trips

Processed 1700000 trips

Cell count at max_num_hotcells:40000 is 7

Vocabulary size 18866 with cell size 100.0 (meters)

Creating training and validation datasets…

Scaned 200000 trips…

Scaned 300000 trips…

Scaned 400000 trips…

Scaned 500000 trips…

Scaned 600000 trips…

Scaned 700000 trips…

Scaned 900000 trips…

Scaned 1000000 trips…

Saved cell distance into /home/zzq/project/t2vec/data/porto-vocab-dist-cell100.h5

训练执行过程

(py38_torch) zzq@server1:~/project/t2vec$ python t2vec.py -vocab_size 19000 -criterion_name “KLDIV” -knearestvocabs “data/porto-vocab-

dist-cell100.h5”

Namespace(batch=128, bidirectional=True, bucketsize=[(20, 30), (30, 30), (30, 50), (50, 50), (50, 70), (70, 70), (70, 100), (100, 100)], checkpoint=‘/home/zzq/project/t2vec/data/checkpoint.pt’, criterion_name=‘KLDIV’, cuda=True, data=‘/home/zzq/project/t2vec/data’, discriminative_w=0.1, dist_decay_speed=0.8, dropout=0.2, embedding_size=256, epochs=15, generator_batch=32, hidden_size=256, knearestvocabs=‘data/porto-vocab-dist-cell100.h5’, learning_rate=0.001, max_grad_norm=5.0, max_length=200, max_num_line=20000000, mode=0, num_layers=3, prefix=‘exp’, pretrained_embedding=None, print_freq=50, save_freq=1000, start_iteration=0, t2vec_batch=256, use_discriminative=False, vocab_size=19000)

Reading training data…

Read line 500000

Read line 1000000

Read line 1500000

Read line 2000000

Read line 2500000

Read line 3000000

Read line 3500000

Read line 4000000

Read line 4500000

Read line 5000000

Read line 5500000

Read line 6000000

Read line 6500000

Read line 7000000

Read line 7500000

conda activate py38_torch

Read line 8000000

Read line 8500000

Read line 9000000

Read line 9500000

Read line 10000000

Read line 10500000

Read line 11000000

Read line 11500000

Read line 12000000

Read line 12500000

Read line 13000000

Read line 13500000

Read line 14000000

Read line 14500000

Read line 15000000

Read line 15500000

Read line 16000000

Read line 16500000

/home/zzq/project/t2vec/data_utils.py:155: VisibleDeprecationWarning: Creating an ndarray from ragged nested sequences (which is a list-or-tuple of lists-or-tuples-or ndarrays with different lengths or shapes) is deprecated. If you meant to do this, you must specify ‘dtype=object’ when creating the ndarray.

self.srcdata = list(map(np.array, self.srcdata))

/home/zzq/project/t2vec/data_utils.py:156: VisibleDeprecationWarning: Creating an ndarray from ragged nested sequences (which is a list-or-tuple of lists-or-tuples-or ndarrays with different lengths or shapes) is deprecated. If you meant to do this, you must specify ‘dtype=object’ when creating the ndarray.

self.trgdata = list(map(np.array, self.trgdata))

Allocation: [5452223, 1661226, 4455236, 2564198, 1595705, 648512, 247211, 87649]

Percent: [0.32624677 0.09940342 0.26658968 0.15343491 0.09548282 0.03880526

0.01479246 0.00524469]

Reading validation data…

/home/zzq/project/t2vec/data_utils.py:148: VisibleDeprecationWarning: Creating an ndarray from ragged nested sequences (which is a list-or-tuple of lists-or-tuples-or ndarrays with different lengths or shapes) is deprecated. If you meant to do this, you must specify ‘dtype=object’ when creating the ndarray.

self.srcdata = np.array(merge(*self.srcdata))

/home/zzq/project/t2vec/data_utils.py:149: VisibleDeprecationWarning: Creating an ndarray from ragged nested sequences (which is a list-or-tuple of lists-or-tuples-or ndarrays with different lengths or shapes) is deprecated. If you meant to do this, you must specify ‘dtype=object’ when creating the ndarray.

self.trgdata = np.array(merge(*self.trgdata))

Loaded validation data size 167520

Loading vocab distance file data/porto-vocab-dist-cell100.h5…

=> training with GPU

=> no checkpoint found at ‘/home/zzq/project/t2vec/data/checkpoint.pt’

Iteration starts at 0 and will end at 66999

/home/zzq/project/t2vec/data_utils.py:46: VisibleDeprecationWarning: Creating an ndarray from ragged nested sequences (which is a list-or-tuple of lists-or-tuples-or ndarrays with different lengths or shapes) is deprecated. If you meant to do this, you must specify ‘dtype=object’ when creating the ndarray.

src = list(np.array(src)[idx])

/home/zzq/project/t2vec/data_utils.py:47: VisibleDeprecationWarning: Creating an ndarray from ragged nested sequences (which is a list-or-tuple of lists-or-tuples-or ndarrays with different lengths or shapes) is deprecated. If you meant to do this, you must specify ‘dtype=object’ when creating the ndarray.

trg = list(np.array(trg)[idx])

Iteration: 0 Generative Loss: 6.349 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 50 Generative Loss: 4.667 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 100 Generative Loss: 4.298 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 150 Generative Loss: 4.311 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 200 Generative Loss: 4.882 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 250 Generative Loss: 3.627 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 300 Generative Loss: 3.012 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 350 Generative Loss: 3.063 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 400 Generative Loss: 2.437 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 450 Generative Loss: 2.157 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 500 Generative Loss: 2.217 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 550 Generative Loss: 1.979 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 600 Generative Loss: 2.446 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 650 Generative Loss: 1.313 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 700 Generative Loss: 1.495 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 750 Generative Loss: 1.446 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 800 Generative Loss: 1.298 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 850 Generative Loss: 1.119 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 900 Generative Loss: 1.191 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 950 Generative Loss: 1.149 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 1000 Generative Loss: 1.555 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Saving the model at iteration 1000 validation loss 48.266686046931895

Iteration: 1050 Generative Loss: 1.039 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

Iteration: 1100 Generative Loss: 0.797 Discriminative Cross Loss: 0.000 Discriminative Inner Loss: 0.000

preprocess.jl 解释

这段代码是用于构建和训练一个空间区域的模型,主要包含以下步骤:

-

导入必要的Julia包:

using JSON using DataStructures using NearestNeighbors using Serialization, ArgParse include("SpatialRegionTools.jl") -

通过ArgParse库解析命令行参数:

args = let s = ArgParseSettings() @add_arg_table s begin "--datapath" arg_type=String default="/home/zzq/project/t2vec/data" end parse_args(s; as_symbols=true) end这段代码使用ArgParse库来解析命令行参数。

--datapath是一个可选参数,表示数据的存储路径,默认为 “/home/zzq/project/t2vec/data”。 -

读取JSON格式的超参数文件(“…/hyper-parameters.json”):

param = JSON.parsefile("../hyper-parameters.json")这里假设存在一个超参数文件,通过JSON库解析超参数。

-

从超参数中提取有关空间区域的信息:

regionps = param["region"] cityname = regionps["cityname"] cellsize = regionps["cellsize"]获取城市名称、单元格大小等信息。

-

检查是否存在 HDF5 文件,如果不存在则退出:

if !isfile("$datapath/$cityname.h5") println("Please provide the correct hdf5 file $datapath/$cityname.h5") exit(1) end -

使用提取的信息构建一个 SpatialRegion 对象:

region = SpatialRegion(cityname, regionps["minlon"], regionps["minlat"], regionps["maxlon"], regionps["maxlat"], cellsize, cellsize, regionps["minfreq"], # minfreq 40_000, # maxvocab_size 10, # k 4) # vocab_start这里创建了一个

SpatialRegion对象,用于表示一个空间区域,包含了区域的地理信息和一些超参数。 -

输出空间区域的信息:

println("Building spatial region with: cityname=$(region.name), minlon=$(region.minlon), minlat=$(region.minlat), maxlon=$(region.maxlon), maxlat=$(region.maxlat), xstep=$(region.xstep), ystep=$(region.ystep), minfreq=$(region.minfreq)")这段代码输出构建的空间区域的一些关键信息。

-

检查是否存在先前保存的参数文件,如果存在则读取参数文件,否则创建并保存参数文件:

paramfile = "$datapath/$(region.name)-param-cell$(Int(cellsize))" if isfile(paramfile) println("Reading parameter file from $paramfile") region = deserialize(paramfile) else println("Creating parameter file $paramfile") num_out_region = makeVocab!(region, "$datapath/$cityname.h5") serialize(paramfile, region) end如果存在参数文件,则从文件中读取参数,否则创建参数并保存到文件中。

-

输出词汇表的大小和单元格大小:

println("Vocabulary size $(region.vocab_size) with cell size $cellsize (meters)")输出词汇表的大小和单元格大小。

-

创建训练和验证数据集:

println("Creating training and validation datasets...") createTrainVal(region, "$datapath/$cityname.h5", datapath, downsamplingDistort, 1_000_000, 10_000)这里调用

createTrainVal函数创建训练和验证数据集。 -

保存最近邻词汇:

saveKNearestVocabs(region, datapath)最后,保存最近邻的词汇。

训练未开始时目录结构

h5 文件结构

根据你提供的Julia代码,使用h5open函数创建了一个HDF5文件,并将处理后的数据存储到文件中。下面是生成的HDF5文件的组和数据集结构的大致描述:

-

组结构:

/trips: 存储处理后的行程数据。/timestamps: 存储每个行程的时间戳数据。

-

数据集结构:

/trips/1: 第一个行程的数据。/timestamps/1: 第一个行程对应的时间戳数据。/trips/2: 第二个行程的数据。/timestamps/2: 第二个行程对应的时间戳数据。- 以此类推…

-

属性:

- 文件属性:

num属性存储了总共存储的行程数目。

- 文件属性:

根据代码中的逻辑,每个行程都被存储为两个数据集:/trips/$num 和 /timestamps/$num。这里的 $num 是行程的编号,从1开始递增。

要注意的是,这里的时间戳数据 /timestamps/$num 是通过生成一个等差数列 collect(0:tripLength-1) * 15.0 得到的,15.0 是一个时间间隔的倍数。这是基于时间戳的假设,具体的时间间隔可能需要根据你的数据集的特点进行调整。

请注意,具体的组织结构可能取决于你的数据和代码的具体实现。你可以使用h5py或其他HDF5文件阅读工具来查看生成的HDF5文件的详细结构。

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!