超维空间S2无人机使用说明书——51、使用yolov8进行目标跟踪

发布时间:2023年12月24日

引言:为了提高yolo识别的质量,提高了yolo的版本,改用yolov8进行物体识别,同时系统兼容了低版本的yolo,包括基于C++的yolov3和yolov4,以及yolov7。

简介,为了提高识别速度,系统采用了GPU进行加速,在使用7W功率的情况,大概可以稳定在20FPS,满功率情况下可以适当提高。

硬件:D435摄像头,Jetson orin nano 8G

环境:ubuntu20.04,ros-noetic, yolov8

注:目标跟随是在木根识别的基础上进行,因此本小节和yolov8识别小节类似,只是在此基础上添加了跟随控制程序

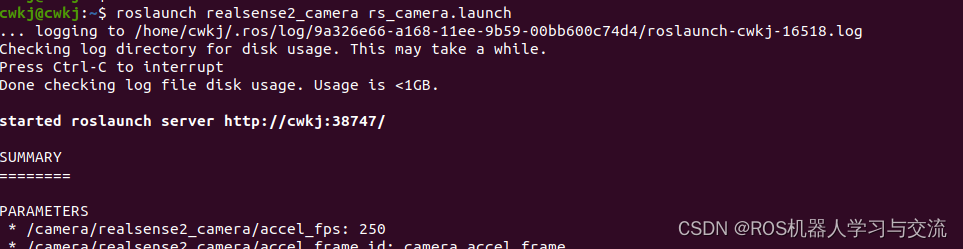

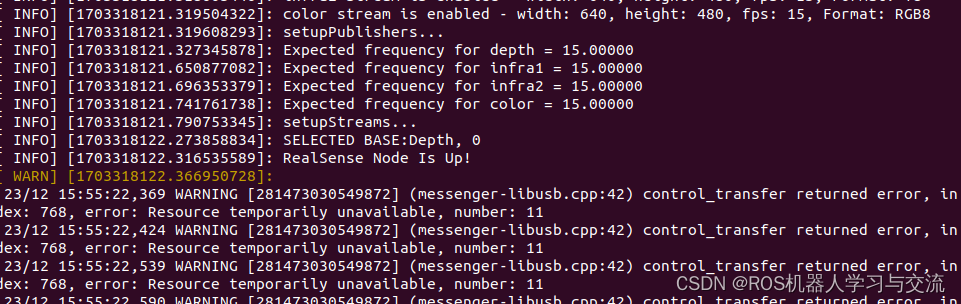

步骤一: 启动摄像头,获取摄像头发布的图像话题

roslaunch realsense2_camera rs_camera.launch

没有出现红色报错,出现如下界面,表明摄像头启动成功

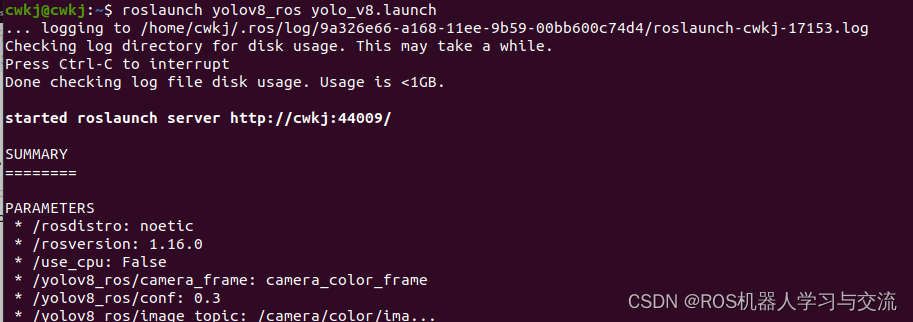

步骤二:启动yolov8识别节点

roslaunch yolov8_ros yolo_v8.launch

launch文件如下,参数use_cpu设置为false,因为实际使用GPU加速,不是CPU跑,另外参数pub_topic是yolov8识别到目标后发布出来的物体在镜头中的位置,程序作了修改,直接给出目标物的中心位置,其中参数image_topic是订阅的节点话题,一定要与摄像头发布的实际话题名称对应上。

<?xml version="1.0" encoding="utf-8"?>

<launch>

<!-- Load Parameter -->

<param name="use_cpu" value="false" />

<!-- Start yolov8 and ros wrapper -->

<node pkg="yolov8_ros" type="yolo_v8.py" name="yolov8_ros" output="screen" >

<param name="weight_path" value="$(find yolov8_ros)/weights/yolov8n.pt"/>

<param name="image_topic" value="/camera/color/image_raw" />

<param name="pub_topic" value="/object_position" />

<param name="camera_frame" value="camera_color_frame"/>

<param name="visualize" value="false"/>

<param name="conf" value="0.3" />

</node>

</launch>

出现如下界面表示yolov8启动成功

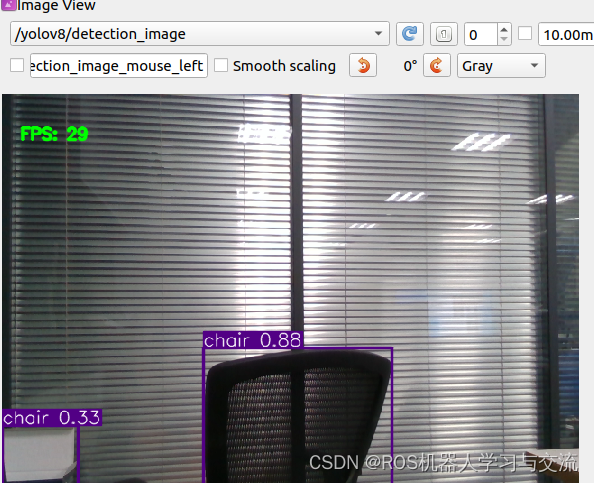

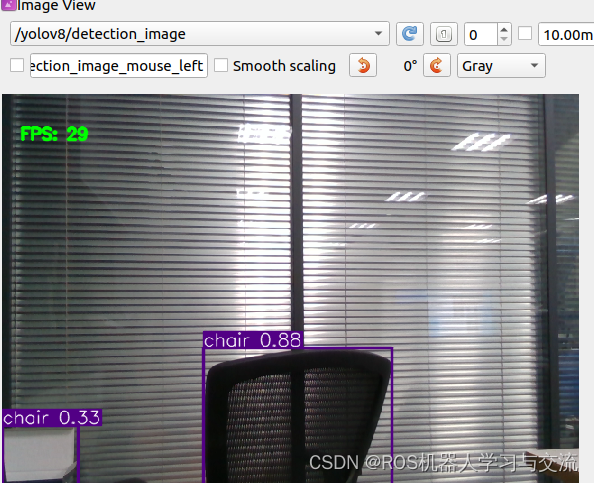

步骤三:打开rqt工具,查看识别效果

注:步骤三不是必须的,可以跳过直接进行步骤四

rqt_image_view

等待出现如下界面后,选择yolov8/detection_image查看yolov8识别效果

步骤四:启动跟随控制程序

(1)、终端启动程序

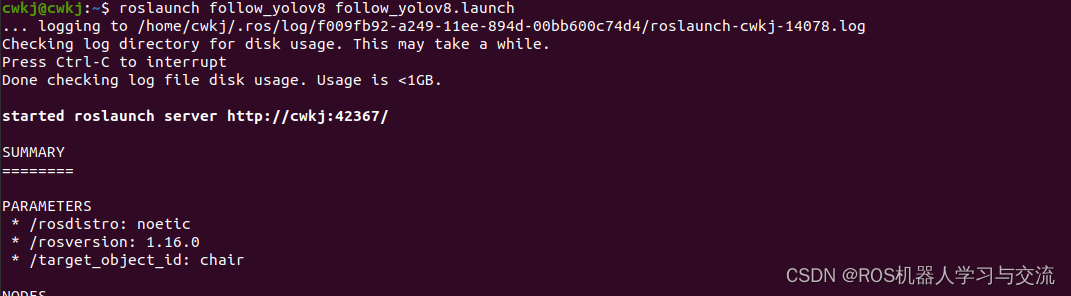

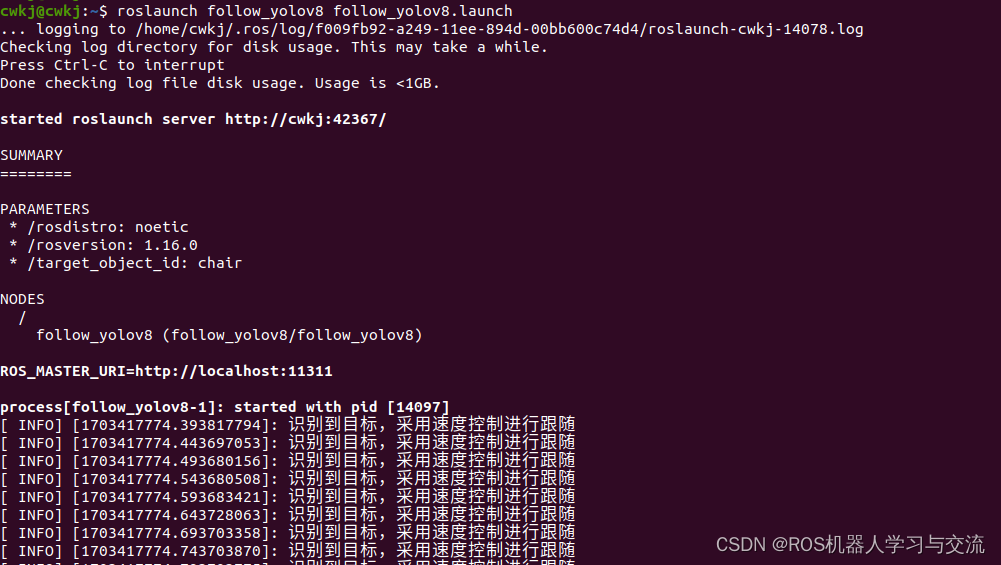

roslaunch follow_yolov8 follow_yolov8.launch

(2)、launch文件详解

<?xml version="1.0" encoding="utf-8"?>

<launch>

<param name="target_object_id" value="chair" />

<node pkg="follow_yolov8" type="follow_yolov8" name="follow_yolov8" output="screen" />

</launch>

launch文件中加载的参数target_object_id是指定跟随的目标名称,无人机在识别到这个目标以后,会通过全向的速度控制保持目标始终在无人机的视野中。launch文件中指定参数chair,因此在识别chair以后,可以看到终端会打印日志已经识别到指定的目标物

步骤五:控制部分代码

此处抛砖引玉,仅仅做最简单的速度控制,读者可以根据自己的理解,添加类似PID等控制跟随的算法,本文不再展开

#include <ros/ros.h>

#include <std_msgs/Bool.h>

#include <geometry_msgs/PoseStamped.h>

#include <geometry_msgs/TwistStamped.h>

#include <mavros_msgs/CommandBool.h>

#include <mavros_msgs/SetMode.h>

#include <mavros_msgs/State.h>

#include <mavros_msgs/PositionTarget.h>

#include <cmath>

#include <tf/transform_listener.h>

#include <nav_msgs/Odometry.h>

#include <mavros_msgs/CommandLong.h>

#include <yolov8_ros_msgs/BoundingBoxes.h>

#include <string>

#define MAX_ERROR 50

#define VEL_SET 0.15

#define ALTITUDE 0.40

using namespace std;

yolov8_ros_msgs::BoundingBoxes object_pos;

nav_msgs::Odometry local_pos;

mavros_msgs::State current_state;

mavros_msgs::PositionTarget setpoint_raw;

//检测到的物体坐标值

double position_detec_x = 0;

double position_detec_y = 0;

std::string Class = "no_object";

std::string target_object_id = "eight";

void state_cb(const mavros_msgs::State::ConstPtr& msg);

void local_pos_cb(const nav_msgs::Odometry::ConstPtr& msg);

void object_pos_cb(const yolov8_ros_msgs::BoundingBoxes::ConstPtr& msg);

int main(int argc, char **argv)

{

//防止中文输出乱码

setlocale(LC_ALL, "");

//初始化节点,名称为visual_throw

ros::init(argc, argv, "follow_yolov8");

//创建句柄

ros::NodeHandle nh;

//订阅无人机状态话题

ros::Subscriber state_sub = nh.subscribe<mavros_msgs::State>("mavros/state", 100, state_cb);

//订阅无人机实时位置信息

ros::Subscriber local_pos_sub = nh.subscribe<nav_msgs::Odometry>("/mavros/local_position/odom", 100, local_pos_cb);

//订阅实时位置信息

ros::Subscriber object_pos_sub = nh.subscribe<yolov8_ros_msgs::BoundingBoxes>("object_position", 100, object_pos_cb);

//发布无人机位置控制话题

ros::Publisher local_pos_pub = nh.advertise<geometry_msgs::PoseStamped>("mavros/setpoint_position/local", 100);

//发布无人机多维控制话题

ros::Publisher mavros_setpoint_pos_pub = nh.advertise<mavros_msgs::PositionTarget>("/mavros/setpoint_raw/local", 100);

//请求无人机解锁服务

ros::ServiceClient arming_client = nh.serviceClient<mavros_msgs::CommandBool>("mavros/cmd/arming");

//请求无人机设置飞行模式,本代码请求进入offboard

ros::ServiceClient set_mode_client = nh.serviceClient<mavros_msgs::SetMode>("mavros/set_mode");

//请求控制舵机客户端

ros::ServiceClient ctrl_pwm_client = nh.serviceClient<mavros_msgs::CommandLong>("mavros/cmd/command");

//循环频率

ros::Rate rate(20.0);

ros::param::get("target_object_id", target_object_id);

//等待连接到PX4无人机

while(ros::ok() && current_state.connected)

{

ros::spinOnce();

rate.sleep();

}

setpoint_raw.type_mask = 1 + 2 + /*4 + 8 + 16 + 32*/ + 64 + 128 + 256 + 512 + 1024 + 2048;

setpoint_raw.coordinate_frame = 8;

setpoint_raw.position.x = 0;

setpoint_raw.position.y = 0;

setpoint_raw.position.z = 0 + ALTITUDE;

mavros_setpoint_pos_pub.publish(setpoint_raw);

for(int i = 100; ros::ok() && i > 0; --i)

{

mavros_setpoint_pos_pub.publish(setpoint_raw);

ros::spinOnce();

rate.sleep();

}

//请求offboard模式变量

mavros_msgs::SetMode offb_set_mode;

offb_set_mode.request.custom_mode = "OFFBOARD";

//请求解锁变量

mavros_msgs::CommandBool arm_cmd;

arm_cmd.request.value = true;

ros::Time last_request = ros::Time::now();

//请求进入offboard模式并且解锁无人机,15秒后退出,防止重复请求

while(ros::ok())

{

//请求进入OFFBOARD模式

if( current_state.mode != "OFFBOARD" && (ros::Time::now() - last_request > ros::Duration(5.0)))

{

if( set_mode_client.call(offb_set_mode) && offb_set_mode.response.mode_sent)

{

ROS_INFO("Offboard enabled");

}

last_request = ros::Time::now();

}

else

{

//请求解锁

if( !current_state.armed && (ros::Time::now() - last_request > ros::Duration(5.0)))

{

if( arming_client.call(arm_cmd) && arm_cmd.response.success)

{

ROS_INFO("Vehicle armed");

}

last_request = ros::Time::now();

}

}

if(ros::Time::now() - last_request > ros::Duration(5.0))

break;

mavros_setpoint_pos_pub.publish(setpoint_raw);

ros::spinOnce();

rate.sleep();

}

while(ros::ok())

{

//此处表示识别到launch文件中指定的目标

//if(object_pos.bounding_boxes[0].Class == "chair")

if(Class == target_object_id)

{

ROS_INFO("识别到目标,采用速度控制进行跟随");

//摄像头向下安装,因此摄像头的Z对应无人机的X前后方向,Y对应Y左右方向

//无人机左右移动速度控制

if(position_detec_x-320 >= MAX_ERROR)

{

setpoint_raw.velocity.y = -VEL_SET;

}

else if(position_detec_x-320 <= -MAX_ERROR)

{

setpoint_raw.velocity.y = VEL_SET;

}

else

{

setpoint_raw.velocity.y = 0;

}

//无人机前后移动速度控制

if(position_detec_y-240 >= MAX_ERROR)

{

setpoint_raw.velocity.x = -VEL_SET;

}

else if(position_detec_y-240 <= -MAX_ERROR)

{

setpoint_raw.velocity.x = VEL_SET;

}

else

{

setpoint_raw.velocity.x = 0;

}

}

else

{

setpoint_raw.velocity.x = 0;

setpoint_raw.velocity.y = 0;

}

setpoint_raw.type_mask = 1 + 2 +/* 4 + 8 + 16 + 32*/ + 64 + 128 + 256 + 512 /*+ 1024 + 2048*/;

setpoint_raw.coordinate_frame = 8;

setpoint_raw.velocity.x = 0;

setpoint_raw.position.z = 0 + ALTITUDE;

setpoint_raw.yaw = 0;

mavros_setpoint_pos_pub.publish(setpoint_raw);

ros::spinOnce();

rate.sleep();

}

return 0;

}

void state_cb(const mavros_msgs::State::ConstPtr& msg)

{

current_state = *msg;

}

void local_pos_cb(const nav_msgs::Odometry::ConstPtr& msg)

{

local_pos = *msg;

}

void object_pos_cb(const yolov8_ros_msgs::BoundingBoxes::ConstPtr& msg)

{

object_pos = *msg;

position_detec_x = object_pos.bounding_boxes[0].xmin;

position_detec_y = object_pos.bounding_boxes[0].ymin;

Class = object_pos.bounding_boxes[0].Class;

}

从图中可以看出,在10W功率的情况下,大概在30帧的效果,识别准确度比较高

文章来源:https://blog.csdn.net/qq_35598561/article/details/135185469

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

最新文章

- Python教程

- 深入理解 MySQL 中的 HAVING 关键字和聚合函数

- Qt之QChar编码(1)

- MyBatis入门基础篇

- 用Python脚本实现FFmpeg批量转换

- ES查询语法介绍及举例

- leetcode 525. 连续数组(优质解法)

- 工厂设计模式看这一篇就够了

- Linux 环境中Postgresql-10安装 RPM安装详细步骤

- 小型内衣洗衣机什么牌子好?家用小型洗衣机推荐

- 融资项目——vue之数据绑定

- 基于Pytorch的身份证及其他证件检测矫正模型应用

- Leetcode—118.杨辉三角【简单】

- 亚洲诚信TrustAsia成功入选《第十四批上海市重点商标保护名录》

- 热门平台引流玩法,一起看看吧