Tool Series: TensorFlow Decision Forests (9) Automated Hyper-parameter Tuning


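Welcome to the TensorFlow Decision Forests automated hyper-parameter tuning tutorial. In this article, you will learn how to improve your model using automated hyper-parameter tuning with TensorFlow Decision Forests.

More precisely, we will:

- Train a model without hyper-parameter tuning. This model will serve as a baseline for measuring the quality improvement brought by hyper-parameter tuning.

- Train a model with hyper-parameter tuning using the TF-DF tuner. The hyper-parameters to optimize will be defined manually.

- Train another model with hyper-parameter tuning using the TF-DF tuner. This time, the hyper-parameters to optimize will be set automatically. This is the recommended first approach to try when using hyper-parameter tuning.

- Finally, we will train a model with hyper-parameter tuning using the Keras Tuner.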

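Introduction

A learning algorithm trains a machine learning model on a training dataset. The parameters of a learning algorithm, called "hyper-parameters", control how the model is trained and affect its quality. Finding the best hyper-parameters is therefore an important stage of modeling.

Some hyper-parameters are simple to configure. For example, increasing the number of trees (num_trees) in a random forest improves the quality of the model until it reaches a plateau. Setting the largest value compatible with your serving constraints is therefore a valid rule of thumb, since more trees means a larger model. Other hyper-parameters interact with the model in more complex ways and cannot be chosen with such a simple rule. For example, increasing the maximum tree depth (max_depth) of a gradient boosted trees model can either increase or decrease the quality of the model. Hyper-parameters can also interact with each other, so the optimal value of one hyper-parameter cannot be found in isolation.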

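There are four main approaches to selecting hyper-parameter values:

- The default approach: Learning algorithms come with default values. These values are not ideal in every case, but they produce reasonable results in most situations. This approach is recommended as the first one to use in any modeling. The default values of TF Decision Forests are listed in the documentation.

- The template hyper-parameter approach: In addition to the default values, TF Decision Forests also exposes hyper-parameter templates. These are benchmark-tuned hyper-parameter values with excellent performance but a high training cost (e.g. hyperparameter_template="benchmark_rank1").

- The manual tuning approach: You can manually test different hyper-parameter values and select the one that performs best. The documentation includes a guide with some advice.

- The automated tuning approach: A tuning algorithm can be used to find the best hyper-parameter values automatically. This approach often gives the best results and does not require expertise. Its main downside is the time it takes on large datasets.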

In this colab, we demonstrate the default and automated tuning approaches using the TensorFlow Decision Forests library.

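Hyper-parameter tuning algorithms

Automated tuning algorithms work by generating and evaluating a large number of hyper-parameter values. Each of these iterations is called a "trial". Evaluating a trial is expensive because it requires training a new model each time. At the end of the tuning, the hyper-parameter values with the best evaluation are used.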
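The next example makes the trial loop concrete with a minimal random-search sketch in plain Python. It is not how TF-DF implements its tuner. `toy_objective` is a hypothetical stand-in for "train a model with these hyper-parameters and evaluate it", and the candidate values are only illustrative.

```python
import random

def toy_objective(params):
    """Hypothetical stand-in for "train a model and evaluate it on validation data".

    Higher is better; the (made-up) optimum is max_depth=5, shrinkage=0.1.
    """
    return -((params["max_depth"] - 5) ** 2) - 100 * (params["shrinkage"] - 0.1) ** 2

def random_search(space, objective, num_trials, seed=0):
    """Runs `num_trials` trials and keeps the best-scoring hyper-parameters."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(num_trials):
        # One trial: sample a candidate from the search space, then score it.
        params = {name: rng.choice(values) for name, values in space.items()}
        score = objective(params)
        if score > best_score:  # The score is maximized.
            best_params, best_score = params, score
    return best_params, best_score

space = {"max_depth": [3, 4, 5, 6, 8], "shrinkage": [0.02, 0.05, 0.10, 0.15]}
best_params, best_score = random_search(space, toy_objective, num_trials=50)
print("best hyper-parameters:", best_params, "score:", best_score)
```

Each call to `objective` is one trial. In a real tuner, that call is a full model training, which is why trials are expensive.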

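A tuning algorithm is configured with the following elements:

The search space

The search space is the list of hyper-parameters to optimize and the values they can take. For example, the maximum depth of a tree could be optimized over values between 1 and 32. Exploring more hyper-parameters and more possible values often leads to better models, but also takes more time. The hyper-parameters are listed in the documentation.

When the possible values of one hyper-parameter depend on the value of another hyper-parameter, the search space is said to be conditional.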
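Here is a minimal sketch of sampling from a conditional search space. It uses a hypothetical nested-dict representation; TF-DF uses its own `SearchSpace` objects instead. The candidate values mirror the ones used later in this tutorial: `max_depth` only exists when `growing_strategy` is `LOCAL`, and `max_num_nodes` only exists when it is `BEST_FIRST_GLOBAL`.

```python
import random

# Hypothetical nested representation: each value of the parent parameter
# "growing_strategy" unlocks its own child parameters.
CONDITIONAL_SPACE = {
    "LOCAL": {"max_depth": [3, 4, 5, 6, 8]},
    "BEST_FIRST_GLOBAL": {"max_num_nodes": [16, 32, 64, 128, 256]},
}

def sample_conditional(rng):
    """Samples the parent first, then only the children that parent enables."""
    strategy = rng.choice(list(CONDITIONAL_SPACE))
    params = {"growing_strategy": strategy}
    for name, values in CONDITIONAL_SPACE[strategy].items():
        params[name] = rng.choice(values)
    return params

rng = random.Random(42)
for _ in range(3):
    print(sample_conditional(rng))
```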

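The number of trials

The number of trials defines how many models will be trained and evaluated. A larger number of trials generally leads to better models, but takes more time.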
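One way to quantify this trade-off is a simple probability bound. Assume trials are drawn independently and uniformly at random. Then the probability that at least one of n trials lands in the best fraction q of the search space is 1 - (1 - q)^n. The helper name below is hypothetical.

```python
def prob_hit_top_fraction(num_trials, top_fraction):
    """Probability that at least one of `num_trials` independent, uniformly random
    trials lands in the best `top_fraction` of the search space."""
    return 1.0 - (1.0 - top_fraction) ** num_trials

for num_trials in (10, 50, 200):
    p = prob_hit_top_fraction(num_trials, top_fraction=0.05)
    print(f"{num_trials} trials -> P(reach top 5%) = {p:.3f}")
```

Under these assumptions, going from 10 to 50 trials raises the chance of reaching the top 5% of configurations from about 0.40 to about 0.92.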

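The optimizer

The optimizer selects the next hyper-parameter values to evaluate, based on the evaluations of past trials. The simplest, and often reasonable, optimizer selects the hyper-parameter values at random.

The objective / trial score

The objective is the metric optimized by the tuner. This metric is usually a measure of model quality (e.g. accuracy, log loss) evaluated on a validation dataset.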
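A tuner maximizes its score, while log loss should be minimized. A metric to be minimized is therefore typically negated to become a score. The sketch below shows this for binary log loss. The function names are hypothetical and the numbers are toy values.

```python
import math

def binary_log_loss(labels, probabilities, eps=1e-15):
    """Mean binary cross-entropy; lower is better."""
    total = 0.0
    for y, p in zip(labels, probabilities):
        p = min(max(p, eps), 1.0 - eps)  # Clip to avoid log(0).
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(labels)

def tuner_score(labels, probabilities):
    """The tuner maximizes this score, so the loss is negated."""
    return -binary_log_loss(labels, probabilities)

labels = [1, 0, 1, 1]
good = [0.9, 0.1, 0.8, 0.7]  # Confident, mostly correct predictions.
bad = [0.6, 0.5, 0.5, 0.4]   # Uncertain predictions.
print(tuner_score(labels, good), tuner_score(labels, bad))
```

The better model has the smaller log loss and therefore the larger (less negative) score.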

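Train-valid-test

The validation dataset should be different from the training dataset: if the training and validation datasets are the same, the selected hyper-parameters will be irrelevant. The validation dataset should also be different from the testing dataset (also called the holdout dataset). Hyper-parameter tuning is a form of training, so if the testing and validation datasets are the same, you are effectively training on the test dataset. In that case, you might overfit on your test dataset without any way to measure it.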
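Here is a minimal sketch of producing three disjoint splits of example indices with a simple random shuffle. The ratios and the function name are illustrative, not TF-DF defaults. The test indices are set aside first and play no role in tuning.

```python
import random

def train_valid_test_split(num_examples, valid_ratio=0.1, test_ratio=0.2, seed=1):
    """Returns three disjoint lists of example indices: train, valid, test."""
    indices = list(range(num_examples))
    random.Random(seed).shuffle(indices)
    num_test = int(num_examples * test_ratio)
    num_valid = int(num_examples * valid_ratio)
    test = indices[:num_test]                       # Final quality estimate only.
    valid = indices[num_test:num_test + num_valid]  # Scores the tuning trials.
    train = indices[num_test + num_valid:]          # Trains each trial's model.
    return train, valid, test

train_idx, valid_idx, test_idx = train_valid_test_split(1000)
print(len(train_idx), len(valid_idx), len(test_idx))  # 700 100 200
```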

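Cross-validation

For small datasets, for example datasets with fewer than 100k examples, hyper-parameter tuning can be combined with cross-validation. Instead of being computed from a single train-test round, the objective/score is computed as the average of the metric over multiple cross-validation rounds.

Just as with the train-valid-test datasets, the cross-validation used to evaluate the objective/score during hyper-parameter tuning should be different from the cross-validation used to evaluate the quality of the model.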
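The sketch below averages a metric over k folds to produce a single trial score. `evaluate_fold`, the toy labels, and the majority-label "model" are hypothetical stand-ins for training and evaluating a real model. For simplicity, the folds are contiguous, whereas real data would usually be shuffled first. In practice, these folds would be drawn only from the tuning data, separately from any cross-validation used for the final quality estimate.

```python
import statistics

def k_fold_indices(num_examples, k):
    """Yields (train_indices, valid_indices) for each of the k contiguous folds."""
    fold_sizes = [num_examples // k + (1 if i < num_examples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        valid = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, num_examples))
        yield train, valid
        start += size

def cross_validated_score(params, num_examples, k, evaluate_fold):
    """Trial score = mean of the per-fold metrics, instead of a single train/valid round."""
    fold_scores = [evaluate_fold(params, train, valid)
                   for train, valid in k_fold_indices(num_examples, k)]
    return statistics.mean(fold_scores)

# Toy labels and a hypothetical "model" that predicts the majority label of its
# training fold; `params` is ignored here but would configure a real learner.
labels = [i % 3 == 0 for i in range(30)]

def majority_baseline_fold(params, train, valid):
    majority = sum(labels[i] for i in train) * 2 >= len(train)
    return sum(labels[i] == majority for i in valid) / len(valid)

score = cross_validated_score({}, num_examples=30, k=5, evaluate_fold=majority_baseline_fold)
print(f"cross-validated accuracy: {score:.3f}")
```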

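Out-of-bag evaluation

Some models, like Random Forests, can be evaluated on the training dataset using the "out-of-bag evaluation" method. Out-of-bag evaluation is not as accurate as cross-validation, but it is much faster and does not require a separate validation dataset.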
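Out-of-bag evaluation works because each tree of a Random Forest is trained on a bootstrap sample drawn with replacement. Some examples are therefore never seen by that tree and can be used to evaluate it. The sketch below estimates that fraction for a single bootstrap sample. For large datasets, the fraction is close to exp(-1), roughly 37%. The function name is hypothetical.

```python
import math
import random

def out_of_bag_fraction(num_examples, seed=0):
    """Draws one bootstrap sample (num_examples draws with replacement) and returns
    the fraction of examples never drawn, i.e. out-of-bag for that tree."""
    rng = random.Random(seed)
    in_bag = {rng.randrange(num_examples) for _ in range(num_examples)}
    return 1.0 - len(in_bag) / num_examples

frac = out_of_bag_fraction(100_000)
print(f"out-of-bag fraction: {frac:.3f} (exp(-1) = {math.exp(-1):.3f})")
```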

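In TensorFlow Decision Forests

In TF-DF, the model's "self" evaluation is always a fair way to evaluate a model. For example, Random Forest models use an out-of-bag evaluation, while Gradient Boosted Trees models use a validation dataset.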

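Hyper-parameter tuning with TF Decision Forests

TF-DF supports automated hyper-parameter tuning with minimal configuration. In the next example, we will train and compare two models: one trained with default hyper-parameters, and one trained with hyper-parameter tuning.

Note: Hyper-parameter tuning can take a long time on large datasets. In that case, it is recommended to use distributed training with TF-DF to drastically speed up the hyper-parameter tuning.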

Setup

# Install the TensorFlow Decision Forests library

!pip install tensorflow_decision_forests -U -qq

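Install Wurlitzer. Wurlitzer is needed to display the detailed training logs in colabs (when using verbose=2).

# Install the wurlitzer library, which captures C-level stdout/stderr so that the training logs appear in the notebook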

!pip install wurlitzer -U -qq

Import the necessary libraries.

# Import the required libraries

import tensorflow_decision_forests as tfdf

import matplotlib.pyplot as plt

import pandas as pd

import tensorflow as tf

import numpy as np

The following hidden code cell limits the height of the output in colab.

#@title Define the "set_cell_height" magic.
from IPython.core.magic import register_line_magic
from IPython.display import Javascript
from IPython.display import display

# Some model training logs can cover the whole screen unless they are
# compressed into a smaller viewport. This magic sets the maximum height of a cell.
@register_line_magic
def set_cell_height(size):
    # Ask the colab frontend to cap the output iframe at `size` pixels.
    display(
        Javascript("google.colab.output.setIframeHeight(0, true, {maxHeight: " +
                   str(size) + "})"))

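Training a model without automated hyper-parameter tuning

We will train the model on the Adult dataset from the UCI Machine Learning Repository. Let's download the dataset.

# Download a copy of the Adult dataset.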

!wget -q https://raw.githubusercontent.com/google/yggdrasil-decision-forests/main/yggdrasil_decision_forests/test_data/dataset/adult_train.csv -O /tmp/adult_train.csv

!wget -q https://raw.githubusercontent.com/google/yggdrasil-decision-forests/main/yggdrasil_decision_forests/test_data/dataset/adult_test.csv -O /tmp/adult_test.csv

# The wget commands above download copies of the training and testing splits of the Adult dataset. The -q option silences the download, and -O sets the output path: the training set is saved to /tmp/adult_train.csv and the testing set to /tmp/adult_test.csv.

Load the training and testing datasets. The dataset is already split into two files.

# Load the datasets into memory
train_df = pd.read_csv("/tmp/adult_train.csv")  # Training dataset
test_df = pd.read_csv("/tmp/adult_test.csv")  # Testing dataset

# Convert the pandas DataFrames into TensorFlow datasets, with "income" as the label
train_ds = tfdf.keras.pd_dataframe_to_tf_dataset(train_df, label="income")
test_ds = tfdf.keras.pd_dataframe_to_tf_dataset(test_df, label="income")

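First, we train a Gradient Boosted Trees model with default hyper-parameters and evaluate its quality.

%%time
# Train a model with default hyper-parameters
model = tfdf.keras.GradientBoostedTreesModel()  # Create a gradient boosted trees model
model.fit(train_ds)  # Train the model on the training dataset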

Warning: The `num_threads` constructor argument is not set and the number of CPU is os.cpu_count()=32 > 32. Setting num_threads to 32. Set num_threads manually to use more than 32 cpus.


Use /tmpfs/tmp/tmp8vxzd_gw as temporary training directory

Reading training dataset...

[WARNING 23-08-16 11:07:53.6383 UTC gradient_boosted_trees.cc:1818] "goss_alpha" set but "sampling_method" not equal to "GOSS".

[WARNING 23-08-16 11:07:53.6384 UTC gradient_boosted_trees.cc:1829] "goss_beta" set but "sampling_method" not equal to "GOSS".

[WARNING 23-08-16 11:07:53.6384 UTC gradient_boosted_trees.cc:1843] "selective_gradient_boosting_ratio" set but "sampling_method" not equal to "SELGB".

Training dataset read in 0:00:03.854321. Found 22792 examples.

Training model...

Model trained in 0:00:03.313284

Compiling model...

[INFO 23-08-16 11:08:00.8007 UTC kernel.cc:1243] Loading model from path /tmpfs/tmp/tmp8vxzd_gw/model/ with prefix 672884dfed9c4c02

[INFO 23-08-16 11:08:00.8244 UTC abstract_model.cc:1311] Engine "GradientBoostedTreesQuickScorerExtended" built

[INFO 23-08-16 11:08:00.8244 UTC kernel.cc:1075] Use fast generic engine

WARNING:tensorflow:AutoGraph could not transform <function simple_ml_inference_op_with_handle at 0x7f23da2a7ee0> and will run it as-is.

Please report this to the TensorFlow team. When filing the bug, set the verbosity to 10 (on Linux, `export AUTOGRAPH_VERBOSITY=10`) and attach the full output.

Cause: could not get source code

To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert


Model compiled.

CPU times: user 12.7 s, sys: 1.1 s, total: 13.8 s

Wall time: 8.9 s

<keras.src.callbacks.History at 0x7f24cdc1f9a0>

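# Evaluate the model
model.compile(metrics=["accuracy"])  # Use "accuracy" as the evaluation metric
# Compute the accuracy of the model on the test dataset
test_accuracy = model.evaluate(test_ds, return_dict=True, verbose=0)["accuracy"]
# Print the test accuracy without hyper-parameter tuning
print(f"Test accuracy without hyper-parameter tuning: {test_accuracy:.4f}")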

Test accuracy without hyper-parameter tuning: 0.8744

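The default hyper-parameters of the model are available through the learner_params attribute. The definitions of these parameters are available in the documentation.

# Print the default hyper-parameters of the model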

print("Default hyper-parameters of the model:\n", model.learner_params)

Default hyper-parameters of the model:

{'adapt_subsample_for_maximum_training_duration': False, 'allow_na_conditions': False, 'apply_link_function': True, 'categorical_algorithm': 'CART', 'categorical_set_split_greedy_sampling': 0.1, 'categorical_set_split_max_num_items': -1, 'categorical_set_split_min_item_frequency': 1, 'compute_permutation_variable_importance': False, 'dart_dropout': 0.01, 'early_stopping': 'LOSS_INCREASE', 'early_stopping_initial_iteration': 10, 'early_stopping_num_trees_look_ahead': 30, 'focal_loss_alpha': 0.5, 'focal_loss_gamma': 2.0, 'forest_extraction': 'MART', 'goss_alpha': 0.2, 'goss_beta': 0.1, 'growing_strategy': 'LOCAL', 'honest': False, 'honest_fixed_separation': False, 'honest_ratio_leaf_examples': 0.5, 'in_split_min_examples_check': True, 'keep_non_leaf_label_distribution': True, 'l1_regularization': 0.0, 'l2_categorical_regularization': 1.0, 'l2_regularization': 0.0, 'lambda_loss': 1.0, 'loss': 'DEFAULT', 'max_depth': 6, 'max_num_nodes': None, 'maximum_model_size_in_memory_in_bytes': -1.0, 'maximum_training_duration_seconds': -1.0, 'min_examples': 5, 'missing_value_policy': 'GLOBAL_IMPUTATION', 'num_candidate_attributes': -1, 'num_candidate_attributes_ratio': -1.0, 'num_trees': 300, 'pure_serving_model': False, 'random_seed': 123456, 'sampling_method': 'RANDOM', 'selective_gradient_boosting_ratio': 0.01, 'shrinkage': 0.1, 'sorting_strategy': 'PRESORT', 'sparse_oblique_normalization': None, 'sparse_oblique_num_projections_exponent': None, 'sparse_oblique_projection_density_factor': None, 'sparse_oblique_weights': None, 'split_axis': 'AXIS_ALIGNED', 'subsample': 1.0, 'uplift_min_examples_in_treatment': 5, 'uplift_split_score': 'KULLBACK_LEIBLER', 'use_hessian_gain': False, 'validation_interval_in_trees': 1, 'validation_ratio': 0.1}

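Training a model with automated hyper-parameter tuning and manually defined hyper-parameters

Hyper-parameter tuning is enabled by passing a tuner through the model's tuner constructor argument. The tuner object contains the full configuration of the tuner: search space, optimizer, trials, and objective.

Note: In the next section, you will see how to configure the search space values automatically. However, setting them manually as shown here is still valuable for understanding how tuning works.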

# Configure the tuner.

# Create a Random Search tuner with 50 trials.
tuner = tfdf.tuner.RandomSearch(num_trials=50)

# Define the search space.
#
# Adding more parameters generally improves the quality of the model, but makes
# the tuning take longer.
tuner.choice("min_examples", [2, 5, 7, 10])
tuner.choice("categorical_algorithm", ["CART", "RANDOM"])

# Some hyper-parameters are only valid for specific values of other
# hyper-parameters. For example, "max_depth" is mostly useful when
# "growing_strategy=LOCAL", while "max_num_nodes" is better suited when
# "growing_strategy=BEST_FIRST_GLOBAL".
local_search_space = tuner.choice("growing_strategy", ["LOCAL"])
local_search_space.choice("max_depth", [3, 4, 5, 6, 8])

# merge=True indicates that the parameter (here "growing_strategy") is already
# defined, and that the new values are added to it.
global_search_space = tuner.choice("growing_strategy", ["BEST_FIRST_GLOBAL"], merge=True)
global_search_space.choice("max_num_nodes", [16, 32, 64, 128, 256])

tuner.choice("use_hessian_gain", [True, False])
tuner.choice("shrinkage", [0.02, 0.05, 0.10, 0.15])
tuner.choice("num_candidate_attributes_ratio", [0.2, 0.5, 0.9, 1.0])

# Uncomment some (or all) of the following hyper-parameters to improve the
# quality of the search. The number of trials should be increased accordingly.
# tuner.choice("split_axis", ["AXIS_ALIGNED"])
# oblique_space = tuner.choice("split_axis", ["SPARSE_OBLIQUE"], merge=True)
# oblique_space.choice("sparse_oblique_normalization",
#                      ["NONE", "STANDARD_DEVIATION", "MIN_MAX"])
# oblique_space.choice("sparse_oblique_weights", ["BINARY", "CONTINUOUS"])
# oblique_space.choice("sparse_oblique_num_projections_exponent", [1.0, 1.5])

<tensorflow_decision_forests.component.tuner.tuner.SearchSpace at 0x7f240c3b32b0>
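As a quick sanity check on the cost of this search, the sketch below counts the combinations in the search space defined above. It treats `growing_strategy` as conditional, since each of its values unlocks its own child parameter. It only restates the candidate lists in plain Python and does not query the tuner object.

```python
from math import prod

# Candidate values copied from the tuner.choice(...) calls above.
unconditional = {
    "min_examples": [2, 5, 7, 10],
    "categorical_algorithm": ["CART", "RANDOM"],
    "use_hessian_gain": [True, False],
    "shrinkage": [0.02, 0.05, 0.10, 0.15],
    "num_candidate_attributes_ratio": [0.2, 0.5, 0.9, 1.0],
}
# growing_strategy is conditional: each value unlocks its own child parameter.
conditional = {
    "LOCAL": {"max_depth": [3, 4, 5, 6, 8]},
    "BEST_FIRST_GLOBAL": {"max_num_nodes": [16, 32, 64, 128, 256]},
}

# Conditional branches add up; independent parameters multiply.
num_growing_configs = sum(
    prod(len(v) for v in children.values()) for children in conditional.values()
)
total = prod(len(v) for v in unconditional.values()) * num_growing_configs
print(total)  # 2560
print(f"50 trials cover at most {50 / total:.1%} of the combinations")
```

With 50 trials, random search therefore explores at most about 2% of the 2560 combinations. This is why the comment above recommends increasing the number of trials when more hyper-parameters are added.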

%%time
%set_cell_height 300

# Train the model using the tuner object
tuned_model = tfdf.keras.GradientBoostedTreesModel(tuner=tuner)
tuned_model.fit(train_ds, verbose=2)

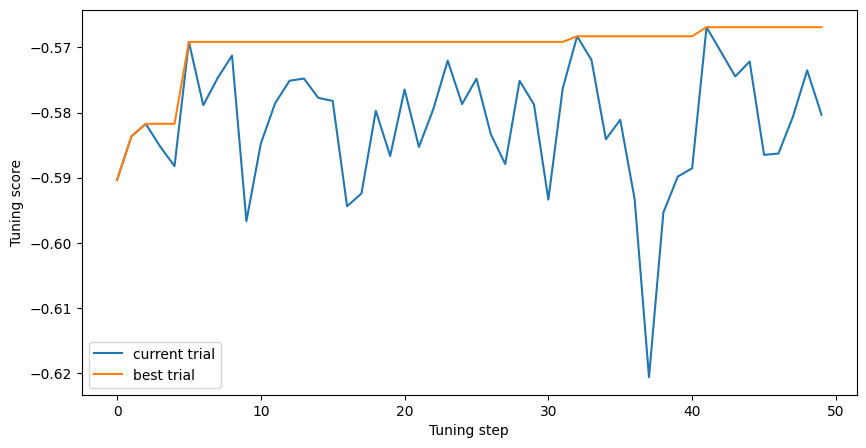

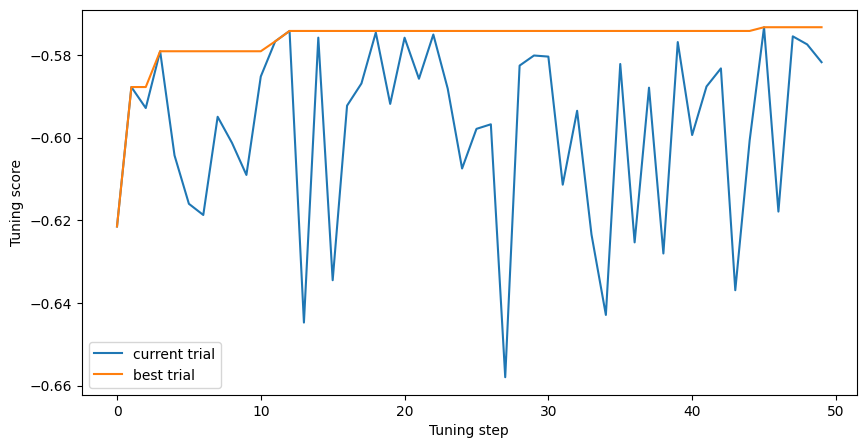

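# In the training logs, you can see lines such as "[10/50] Score: -0.45 / -0.40 HParams: ...".
# This means that 10 of the 50 trials have been completed, that the last trial returned a score of "-0.45", and that the best trial so far has a score of "-0.40".
# In this example, the model is optimized for log loss. The score is maximized while the log loss should be minimized, so the score is the negative log loss.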

<IPython.core.display.Javascript object>

Warning: The `num_threads` constructor argument is not set and the number of CPU is os.cpu_count()=32 > 32. Setting num_threads to 32. Set num_threads manually to use more than 32 cpus.


Use /tmpfs/tmp/tmpzdzgno07 as temporary training directory

Reading training dataset...

Training tensor examples:

Features: {'age': <tf.Tensor 'data:0' shape=(None,) dtype=int64>, 'workclass': <tf.Tensor 'data_1:0' shape=(None,) dtype=string>, 'fnlwgt': <tf.Tensor 'data_2:0' shape=(None,) dtype=int64>, 'education': <tf.Tensor 'data_3:0' shape=(None,) dtype=string>, 'education_num': <tf.Tensor 'data_4:0' shape=(None,) dtype=int64>, 'marital_status': <tf.Tensor 'data_5:0' shape=(None,) dtype=string>, 'occupation': <tf.Tensor 'data_6:0' shape=(None,) dtype=string>, 'relationship': <tf.Tensor 'data_7:0' shape=(None,) dtype=string>, 'race': <tf.Tensor 'data_8:0' shape=(None,) dtype=string>, 'sex': <tf.Tensor 'data_9:0' shape=(None,) dtype=string>, 'capital_gain': <tf.Tensor 'data_10:0' shape=(None,) dtype=int64>, 'capital_loss': <tf.Tensor 'data_11:0' shape=(None,) dtype=int64>, 'hours_per_week': <tf.Tensor 'data_12:0' shape=(None,) dtype=int64>, 'native_country': <tf.Tensor 'data_13:0' shape=(None,) dtype=string>}

Label: Tensor("data_14:0", shape=(None,), dtype=int64)

Weights: None

Normalized tensor features:

{'age': SemanticTensor(semantic=<Semantic.NUMERICAL: 1>, tensor=<tf.Tensor 'Cast:0' shape=(None,) dtype=float32>), 'workclass': SemanticTensor(semantic=<Semantic.CATEGORICAL: 2>, tensor=<tf.Tensor 'data_1:0' shape=(None,) dtype=string>), 'fnlwgt': SemanticTensor(semantic=<Semantic.NUMERICAL: 1>, tensor=<tf.Tensor 'Cast_1:0' shape=(None,) dtype=float32>), 'education': SemanticTensor(semantic=<Semantic.CATEGORICAL: 2>, tensor=<tf.Tensor 'data_3:0' shape=(None,) dtype=string>), 'education_num': SemanticTensor(semantic=<Semantic.NUMERICAL: 1>, tensor=<tf.Tensor 'Cast_2:0' shape=(None,) dtype=float32>), 'marital_status': SemanticTensor(semantic=<Semantic.CATEGORICAL: 2>, tensor=<tf.Tensor 'data_5:0' shape=(None,) dtype=string>), 'occupation': SemanticTensor(semantic=<Semantic.CATEGORICAL: 2>, tensor=<tf.Tensor 'data_6:0' shape=(None,) dtype=string>), 'relationship': SemanticTensor(semantic=<Semantic.CATEGORICAL: 2>, tensor=<tf.Tensor 'data_7:0' shape=(None,) dtype=string>), 'race': SemanticTensor(semantic=<Semantic.CATEGORICAL: 2>, tensor=<tf.Tensor 'data_8:0' shape=(None,) dtype=string>), 'sex': SemanticTensor(semantic=<Semantic.CATEGORICAL: 2>, tensor=<tf.Tensor 'data_9:0' shape=(None,) dtype=string>), 'capital_gain': SemanticTensor(semantic=<Semantic.NUMERICAL: 1>, tensor=<tf.Tensor 'Cast_3:0' shape=(None,) dtype=float32>), 'capital_loss': SemanticTensor(semantic=<Semantic.NUMERICAL: 1>, tensor=<tf.Tensor 'Cast_4:0' shape=(None,) dtype=float32>), 'hours_per_week': SemanticTensor(semantic=<Semantic.NUMERICAL: 1>, tensor=<tf.Tensor 'Cast_5:0' shape=(None,) dtype=float32>), 'native_country': SemanticTensor(semantic=<Semantic.CATEGORICAL: 2>, tensor=<tf.Tensor 'data_13:0' shape=(None,) dtype=string>)}

[WARNING 23-08-16 11:08:02.9532 UTC gradient_boosted_trees.cc:1818] "goss_alpha" set but "sampling_method" not equal to "GOSS".

[WARNING 23-08-16 11:08:02.9533 UTC gradient_boosted_trees.cc:1829] "goss_beta" set but "sampling_method" not equal to "GOSS".

[WARNING 23-08-16 11:08:02.9533 UTC gradient_boosted_trees.cc:1843] "selective_gradient_boosting_ratio" set but "sampling_method" not equal to "SELGB".

Training dataset read in 0:00:00.389683. Found 22792 examples.

Training model...

Standard output detected as not visible to the user e.g. running in a notebook. Creating a training log redirection. If training gets stuck, try calling tfdf.keras.set_training_logs_redirection(False).

[INFO 23-08-16 11:08:03.3555 UTC kernel.cc:773] Start Yggdrasil model training

[INFO 23-08-16 11:08:03.3555 UTC kernel.cc:774] Collect training examples

[INFO 23-08-16 11:08:03.3555 UTC kernel.cc:787] Dataspec guide:

column_guides {

column_name_pattern: "^__LABEL$"

type: CATEGORICAL

categorial {

min_vocab_frequency: 0

max_vocab_count: -1

}

}

default_column_guide {

categorial {

max_vocab_count: 2000

}

discretized_numerical {

maximum_num_bins: 255

}

}

ignore_columns_without_guides: false

detect_numerical_as_discretized_numerical: false

[INFO 23-08-16 11:08:03.3556 UTC kernel.cc:393] Number of batches: 23

[INFO 23-08-16 11:08:03.3556 UTC kernel.cc:394] Number of examples: 22792

[INFO 23-08-16 11:08:03.3630 UTC data_spec_inference.cc:305] 1 item(s) have been pruned (i.e. they are considered out of dictionary) for the column native_country (40 item(s) left) because min_value_count=5 and max_number_of_unique_values=2000

[INFO 23-08-16 11:08:03.3630 UTC data_spec_inference.cc:305] 1 item(s) have been pruned (i.e. they are considered out of dictionary) for the column occupation (13 item(s) left) because min_value_count=5 and max_number_of_unique_values=2000

[INFO 23-08-16 11:08:03.3631 UTC data_spec_inference.cc:305] 1 item(s) have been pruned (i.e. they are considered out of dictionary) for the column workclass (7 item(s) left) because min_value_count=5 and max_number_of_unique_values=2000

[INFO 23-08-16 11:08:03.3698 UTC kernel.cc:794] Training dataset:

Number of records: 22792

Number of columns: 15

Number of columns by type:

CATEGORICAL: 9 (60%)

NUMERICAL: 6 (40%)

Columns:

CATEGORICAL: 9 (60%)

0: "__LABEL" CATEGORICAL integerized vocab-size:3 no-ood-item

4: "education" CATEGORICAL has-dict vocab-size:17 zero-ood-items most-frequent:"HS-grad" 7340 (32.2043%)

8: "marital_status" CATEGORICAL has-dict vocab-size:8 zero-ood-items most-frequent:"Married-civ-spouse" 10431 (45.7661%)

9: "native_country" CATEGORICAL num-nas:407 (1.78571%) has-dict vocab-size:41 num-oods:1 (0.00446728%) most-frequent:"United-States" 20436 (91.2933%)

10: "occupation" CATEGORICAL num-nas:1260 (5.52826%) has-dict vocab-size:14 num-oods:1 (0.00464425%) most-frequent:"Prof-specialty" 2870 (13.329%)

11: "race" CATEGORICAL has-dict vocab-size:6 zero-ood-items most-frequent:"White" 19467 (85.4115%)

12: "relationship" CATEGORICAL has-dict vocab-size:7 zero-ood-items most-frequent:"Husband" 9191 (40.3256%)

13: "sex" CATEGORICAL has-dict vocab-size:3 zero-ood-items most-frequent:"Male" 15165 (66.5365%)

14: "workclass" CATEGORICAL num-nas:1257 (5.51509%) has-dict vocab-size:8 num-oods:1 (0.0046436%) most-frequent:"Private" 15879 (73.7358%)

NUMERICAL: 6 (40%)

1: "age" NUMERICAL mean:38.6153 min:17 max:90 sd:13.661

2: "capital_gain" NUMERICAL mean:1081.9 min:0 max:99999 sd:7509.48

3: "capital_loss" NUMERICAL mean:87.2806 min:0 max:4356 sd:403.01

5: "education_num" NUMERICAL mean:10.0927 min:1 max:16 sd:2.56427

6: "fnlwgt" NUMERICAL mean:189879 min:12285 max:1.4847e+06 sd:106423

7: "hours_per_week" NUMERICAL mean:40.3955 min:1 max:99 sd:12.249

Terminology:

nas: Number of non-available (i.e. missing) values.

ood: Out of dictionary.

manually-defined: Attribute which type is manually defined by the user i.e. the type was not automatically inferred.

tokenized: The attribute value is obtained through tokenization.

has-dict: The attribute is attached to a string dictionary e.g. a categorical attribute stored as a string.

vocab-size: Number of unique values.

[INFO 23-08-16 11:08:03.3699 UTC kernel.cc:810] Configure learner

[WARNING 23-08-16 11:08:03.3702 UTC gradient_boosted_trees.cc:1818] "goss_alpha" set but "sampling_method" not equal to "GOSS".

[WARNING 23-08-16 11:08:03.3702 UTC gradient_boosted_trees.cc:1829] "goss_beta" set but "sampling_method" not equal to "GOSS".

[WARNING 23-08-16 11:08:03.3702 UTC gradient_boosted_trees.cc:1843] "selective_gradient_boosting_ratio" set but "sampling_method" not equal to "SELGB".

[INFO 23-08-16 11:08:03.3703 UTC kernel.cc:824] Training config:

learner: "HYPERPARAMETER_OPTIMIZER"

features: "^age$"

features: "^capital_gain$"

features: "^capital_loss$"

features: "^education$"

features: "^education_num$"

features: "^fnlwgt$"

features: "^hours_per_week$"

features: "^marital_status$"

features: "^native_country$"

features: "^occupation$"

features: "^race$"

features: "^relationship$"

features: "^sex$"

features: "^workclass$"

label: "^__LABEL$"

task: CLASSIFICATION

metadata {

framework: "TF Keras"

}

[yggdrasil_decision_forests.model.hyperparameters_optimizer_v2.proto.hyperparameters_optimizer_config] {

base_learner {

learner: "GRADIENT_BOOSTED_TREES"

features: "^age$"

features: "^capital_gain$"

features: "^capital_loss$"

features: "^education$"

features: "^education_num$"

features: "^fnlwgt$"

features: "^hours_per_week$"

features: "^marital_status$"

features: "^native_country$"

features: "^occupation$"

features: "^race$"

features: "^relationship$"

features: "^sex$"

features: "^workclass$"

label: "^__LABEL$"

task: CLASSIFICATION

random_seed: 123456

pure_serving_model: false

[yggdrasil_decision_forests.model.gradient_boosted_trees.proto.gradient_boosted_trees_config] {

num_trees: 300

decision_tree {

max_depth: 6

min_examples: 5

in_split_min_examples_check: true

keep_non_leaf_label_distribution: true

num_candidate_attributes: -1

missing_value_policy: GLOBAL_IMPUTATION

allow_na_conditions: false

categorical_set_greedy_forward {

sampling: 0.1

max_num_items: -1

min_item_frequency: 1

}

growing_strategy_local {

}

categorical {

cart {

}

}

axis_aligned_split {

}

internal {

sorting_strategy: PRESORTED

}

uplift {

min_examples_in_treatment: 5

split_score: KULLBACK_LEIBLER

}

}

shrinkage: 0.1

loss: DEFAULT

validation_set_ratio: 0.1

validation_interval_in_trees: 1

early_stopping: VALIDATION_LOSS_INCREASE

early_stopping_num_trees_look_ahead: 30

l2_regularization: 0

lambda_loss: 1

mart {

}

adapt_subsample_for_maximum_training_duration: false

l1_regularization: 0

use_hessian_gain: false

l2_regularization_categorical: 1

stochastic_gradient_boosting {

ratio: 1

}

apply_link_function: true

compute_permutation_variable_importance: false

binary_focal_loss_options {

misprediction_exponent: 2

positive_sample_coefficient: 0.5

}

early_stopping_initial_iteration: 10

}

}

optimizer {

optimizer_key: "RANDOM"

[yggdrasil_decision_forests.model.hyperparameters_optimizer_v2.proto.random] {

num_trials: 50

}

}

search_space {

fields {

name: "min_examples"

discrete_candidates {

possible_values {

integer: 2

}

possible_values {

integer: 5

}

possible_values {

integer: 7

}

possible_values {

integer: 10

}

}

}

fields {

name: "categorical_algorithm"

discrete_candidates {

possible_values {

categorical: "CART"

}

possible_values {

categorical: "RANDOM"

}

}

}

fields {

name: "growing_strategy"

discrete_candidates {

possible_values {

categorical: "LOCAL"

}

possible_values {

categorical: "BEST_FIRST_GLOBAL"

}

}

children {

name: "max_depth"

discrete_candidates {

possible_values {

integer: 3

}

possible_values {

integer: 4

}

possible_values {

integer: 5

}

possible_values {

integer: 6

}

possible_values {

integer: 8

}

}

parent_discrete_values {

possible_values {

categorical: "LOCAL"

}

}

}

children {

name: "max_num_nodes"

discrete_candidates {

possible_values {

integer: 16

}

possible_values {

integer: 32

}

possible_values {

integer: 64

}

possible_values {

integer: 128

}

possible_values {

integer: 256

}

}

parent_discrete_values {

possible_values {

categorical: "BEST_FIRST_GLOBAL"

}

}

}

}

fields {

name: "use_hessian_gain"

discrete_candidates {

possible_values {

categorical: "true"

}

possible_values {

categorical: "false"

}

}

}

fields {

name: "shrinkage"

discrete_candidates {

possible_values {

real: 0.02

}

possible_values {

real: 0.05

}

possible_values {

real: 0.1

}

possible_values {

real: 0.15

}

}

}

fields {

name: "num_candidate_attributes_ratio"

discrete_candidates {

possible_values {

real: 0.2

}

possible_values {

real: 0.5

}

possible_values {

real: 0.9

}

possible_values {

real: 1

}

}

}

}

base_learner_deployment {

num_threads: 1

}

}

[INFO 23-08-16 11:08:03.3707 UTC kernel.cc:827] Deployment config:

cache_path: "/tmpfs/tmp/tmpzdzgno07/working_cache"

num_threads: 32

try_resume_training: true

[INFO 23-08-16 11:08:03.3709 UTC kernel.cc:889] Train model

[INFO 23-08-16 11:08:03.3711 UTC hyperparameters_optimizer.cc:209] Hyperparameter search space:

fields {

name: "min_examples"

discrete_candidates {

possible_values {

integer: 2

}

possible_values {

integer: 5

}

possible_values {

integer: 7

}

possible_values {

integer: 10

}

}

}

fields {

name: "categorical_algorithm"

discrete_candidates {

possible_values {

categorical: "CART"

}

possible_values {

categorical: "RANDOM"

}

}

}

fields {

name: "growing_strategy"

discrete_candidates {

possible_values {

categorical: "LOCAL"

}

possible_values {

categorical: "BEST_FIRST_GLOBAL"

}

}

children {

name: "max_depth"

discrete_candidates {

possible_values {

integer: 3

}

possible_values {

integer: 4

}

possible_values {

integer: 5

}

possible_values {

integer: 6

}

possible_values {

integer: 8

}

}

parent_discrete_values {

possible_values {

categorical: "LOCAL"

}

}

}

children {

name: "max_num_nodes"

discrete_candidates {

possible_values {

integer: 16

}

possible_values {

integer: 32

}

possible_values {

integer: 64

}

possible_values {

integer: 128

}

possible_values {

integer: 256

}

}

parent_discrete_values {

possible_values {

categorical: "BEST_FIRST_GLOBAL"

}

}

}

}

fields {

name: "use_hessian_gain"

discrete_candidates {

possible_values {

categorical: "true"

}

possible_values {

categorical: "false"

}

}

}

fields {

name: "shrinkage"

discrete_candidates {

possible_values {

real: 0.02

}

possible_values {

real: 0.05

}

possible_values {

real: 0.1

}

possible_values {

real: 0.15

}

}

}

fields {

name: "num_candidate_attributes_ratio"

discrete_candidates {

possible_values {

real: 0.2

}

possible_values {

real: 0.5

}

possible_values {

real: 0.9

}

possible_values {

real: 1

}

}

}

[INFO 23-08-16 11:08:03.3713 UTC hyperparameters_optimizer.cc:500] Start local tuner with 32 thread(s)

[INFO 23-08-16 11:08:03.3728 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:03.3728 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:03.3729 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:03.3729 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:03.3729 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:03.3730 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and [INFO 23-08-16 11:08:03.3730 UTC gradient_boosted_trees.cc:14 feature(s).

459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:03.3731 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO[INFO 23-08-16 11:08:03.3731 UTC 23-08-16 11:08:03.3732 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:03.3732 UTC gradient_boosted_trees.cc[INFO 23-08-16 11:08:03.3732 UTC gradient_boosted_trees.cc:1085:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:03.3733 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:03.3733 UTC gradient_boosted_trees.cc:459[[INFO 23-08-16 11:08:03.3733 UTC [INFOgradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).[ 23-08-16 11:08:03.3734 UTC

INFO] Default loss set to BINOMIAL_LOG_LIKELIHOOD[INFO 23-08-16 11:08:03.3734 UTC INFOgradient_boosted_trees.cc

23-08-16 11:08:03.3734 UTC [ 23-08-16 11:08:03.3734 UTC :[INFO[[INFOINFO 23-08-16 11:08:03.3735 UTC gradient_boosted_trees.cc 23-08-16 11:08:03.3735 UTC gradient_boosted_trees.ccgradient_boosted_trees.ccgradient_boosted_trees.cc:459] 459Default loss set to BINOMIAL_LOG_LIKELIHOOD

23-08-16 11:08:03.3735 UTC INFOgradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD:gradient_boosted_trees.cc

:] 459:] Default loss set to 459BINOMIAL_LOG_LIKELIHOOD

[459INFO] Default loss set to 23-08-16 11:08:03.3736 UTC 23-08-16 11:08:03.3736 UTC BINOMIAL_LOG_LIKELIHOODgradient_boosted_trees.cc:Default loss set to [] [INFO:4591085 23-08-16 11:08:03.3737 UTC gradient_boosted_trees.cc

:gradient_boosted_trees.cc:INFOBINOMIAL_LOG_LIKELIHOOD459] 23-08-16 11:08:03.3737 UTC ]

Default loss set to [BINOMIAL_LOG_LIKELIHOODINFO[Training gradient boosted tree on gradient_boosted_trees.ccINFO 23-08-16 11:08:03.3738 UTC :1085[] ] INFO22792 23-08-16 11:08:03.3738 UTC example(s) and gradient_boosted_trees.cc14Default loss set to feature(s).BINOMIAL_LOG_LIKELIHOODgradient_boosted_trees.cc

23-08-16 11:08:03.3738 UTC :[INFO1085gradient_boosted_trees.ccTraining gradient boosted tree on ]

:Default loss set to :227921085 23-08-16 11:08:03.3738 UTC [BINOMIAL_LOG_LIKELIHOOD10851085Training gradient boosted tree on ] [Training gradient boosted tree on INFOINFO22792

example(s) and ] 23-08-16 11:08:03.3739 UTC gradient_boosted_trees.cc[ example(s) and INFO1422792 23-08-16 11:08:03.3739 UTC gradient_boosted_trees.cc feature(s).

:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

23-08-16 11:08:03.3739 UTC gradient_boosted_trees.cc::10851085] ] Training gradient boosted tree on 14 example(s) and gradient_boosted_trees.cc feature(s).14 feature(s).Training gradient boosted tree on

22792[ example(s) and :Training gradient boosted tree on 2279222792 example(s) and example(s) and 1414INFO

[ 23-08-16 11:08:03.3741 UTC feature(s).gradient_boosted_trees.cc45914] Default loss set to ] feature(s).Training gradient boosted tree on

feature(s).

INFO 23-08-16 11:08:03.3742 UTC gradient_boosted_trees.cc:BINOMIAL_LOG_LIKELIHOOD1085

] [INFOTraining gradient boosted tree on 22792 example(s) and :[22792 23-08-16 11:08:03.3742 UTC 108514gradient_boosted_trees.cc feature(s).

:1085INFO] example(s) and 14] feature(s).Training gradient boosted tree on 22792

23-08-16 11:08:03.3743 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD[ example(s) and INFO14 feature(s).Training gradient boosted tree on

22792 example(s) and 14

feature(s).[ 23-08-16 11:08:03.3743 UTC INFOgradient_boosted_trees.cc[ 23-08-16 11:08:03.3744 UTC [

INFO: 23-08-16 11:08:03.3744 UTC gradient_boosted_trees.cc459:] Default loss set to INFOBINOMIAL_LOG_LIKELIHOOD[459INFO] Default loss set to BINOMIAL_LOG_LIKELIHOOD 23-08-16 11:08:03.3745 UTC gradient_boosted_trees.ccgradient_boosted_trees.cc:459

]

Default loss set to BINOMIAL_LOG_LIKELIHOOD:[459] INFO

Default loss set to [[INFO 23-08-16 11:08:03.3745 UTC 23-08-16 11:08:03.3745 UTC gradient_boosted_trees.ccBINOMIAL_LOG_LIKELIHOODgradient_boosted_trees.cc

:1085[[INFOINFO 23-08-16 11:08:03.3746 UTC 23-08-16 11:08:03.3746 UTC gradient_boosted_trees.cc: 23-08-16 11:08:03.3746 UTC [:INFO1085gradient_boosted_trees.cc1085] 23-08-16 11:08:03.3746 UTC gradient_boosted_trees.ccgradient_boosted_trees.cc:] Training gradient boosted tree on :] 1085Training gradient boosted tree on ] INFO22792: example(s) and 14Training gradient boosted tree on feature(s).

22792 example(s) and 141085 feature(s).22792

23-08-16 11:08:03.3747 UTC example(s) and gradient_boosted_trees.cc:14459459] ] Training gradient boosted tree on feature(s).22792 example(s) and

Training gradient boosted tree on 14] 22792 example(s) and 14 feature(s).

Default loss set to BINOMIAL_LOG_LIKELIHOOD feature(s).Default loss set to

BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:03.3748 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14[INFO 23-08-16 11:08:03.3749 UTC gradient_boosted_trees.cc:1085] feature(s).

Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:03.3752 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:03.3752 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:03.3754 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:03.3754 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:03.3761 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:03.3762 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:03.3762 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:03.3762 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:03.3768 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:03.3768 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:03.3772 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:03.3772 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:03.3774 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:03.3775 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:03.3914 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4337 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4344 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4345 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4347 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4356 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4363 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4369 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4369 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4370 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4374 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4382 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4382 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4382 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4382 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4382 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4382 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4385 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4385 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4386 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4403 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4403 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4404 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4404 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4404 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4409 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4416 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4416 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4416 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4447 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4462 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4490 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:03.4535 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.012352 train-accuracy:0.761895 valid-loss:1.067086 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.4640 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.033944 train-accuracy:0.761895 valid-loss:1.087890 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.4689 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.007318 train-accuracy:0.761895 valid-loss:1.063819 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.4717 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.015585 train-accuracy:0.761895 valid-loss:1.068358 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.4747 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:0.992466 train-accuracy:0.761895 valid-loss:1.048658 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.4758 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.080310 train-accuracy:0.761895 valid-loss:1.138544 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.4762 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.024983 train-accuracy:0.761895 valid-loss:1.080660 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.4800 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.013950 train-accuracy:0.761895 valid-loss:1.069965 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.4803 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.035081 train-accuracy:0.761895 valid-loss:1.091865 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.4826 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:0.974501 train-accuracy:0.761895 valid-loss:1.024211 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.4855 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:0.992049 train-accuracy:0.761895 valid-loss:1.047210 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.4868 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.021242 train-accuracy:0.761895 valid-loss:1.076859 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.4882 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.056437 train-accuracy:0.761895 valid-loss:1.113420 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.4903 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.057450 train-accuracy:0.761895 valid-loss:1.114456 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.4920 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.054434 train-accuracy:0.761895 valid-loss:1.110703 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.4927 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.022126 train-accuracy:0.761895 valid-loss:1.077863 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.4975 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:0.985785 train-accuracy:0.761895 valid-loss:1.041083 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.5011 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.015975 train-accuracy:0.761895 valid-loss:1.071430 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.5043 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.056455 train-accuracy:0.761895 valid-loss:1.113410 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.5052 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.080606 train-accuracy:0.761895 valid-loss:1.138615 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.5098 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.055526 train-accuracy:0.761895 valid-loss:1.112339 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.5126 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.080606 train-accuracy:0.761895 valid-loss:1.138615 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.5132 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.080079 train-accuracy:0.761895 valid-loss:1.138475 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.5158 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.080017 train-accuracy:0.761895 valid-loss:1.137988 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.5282 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.052474 train-accuracy:0.761895 valid-loss:1.109417 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.5328 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:0.978408 train-accuracy:0.761895 valid-loss:1.031947 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.5335 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.055966 train-accuracy:0.761895 valid-loss:1.113004 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.5340 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.080559 train-accuracy:0.761895 valid-loss:1.138519 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.5397 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.080851 train-accuracy:0.761895 valid-loss:1.138916 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.5398 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.015861 train-accuracy:0.761895 valid-loss:1.071101 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.5503 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.054509 train-accuracy:0.761895 valid-loss:1.111318 valid-accuracy:0.736609

[INFO 23-08-16 11:08:03.5527 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.080203 train-accuracy:0.761895 valid-loss:1.138223 valid-accuracy:0.736609

[INFO 23-08-16 11:08:05.4509 UTC gradient_boosted_trees.cc:1542] num-trees:300 train-loss:0.553261 train-accuracy:0.875566 valid-loss:0.590388 valid-accuracy:0.865870

[INFO 23-08-16 11:08:05.4509 UTC gradient_boosted_trees.cc:247] Truncates the model to 299 tree(s) i.e. 299 iteration(s).

[INFO 23-08-16 11:08:05.4509 UTC gradient_boosted_trees.cc:310] Final model num-trees:299 valid-loss:0.590370 valid-accuracy:0.866313

[INFO 23-08-16 11:08:05.4520 UTC hyperparameters_optimizer.cc:582] [1/50] Score: -0.59037 / -0.59037 HParams: fields { name: "min_examples" value { integer: 7 } } fields { name: "categorical_algorithm" value { categorical: "CART" } } fields { name: "growing_strategy" value { categorical: "LOCAL" } } fields { name: "max_depth" value { integer: 3 } } fields { name: "use_hessian_gain" value { categorical: "true" } } fields { name: "shrinkage" value { real: 0.15 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.2 } }

[INFO 23-08-16 11:08:05.4525 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:05.4526 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:05.4580 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:05.4741 UTC early_stopping.cc:53] Early stop of the training because the validation loss does not decrease anymore. Best valid-loss: 0.583674

[INFO 23-08-16 11:08:05.4742 UTC gradient_boosted_trees.cc:247] Truncates the model to 61 tree(s) i.e. 61 iteration(s).

[INFO 23-08-16 11:08:05.4753 UTC gradient_boosted_trees.cc:310] Final model num-trees:61 valid-loss:0.583674 valid-accuracy:0.866755

[INFO 23-08-16 11:08:05.4799 UTC hyperparameters_optimizer.cc:582] [2/50] Score: -0.583674 / -0.583674 HParams: fields { name: "min_examples" value { integer: 5 } } fields { name: "categorical_algorithm" value { categorical: "CART" } } fields { name: "growing_strategy" value { categorical: "LOCAL" } } fields { name: "max_depth" value { integer: 8 } } fields { name: "use_hessian_gain" value { categorical: "true" } } fields { name: "shrinkage" value { real: 0.15 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.2 } }

[INFO 23-08-16 11:08:05.4807 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:05.4807 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:05.4861 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:05.5125 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.080487 train-accuracy:0.761895 valid-loss:1.138629 valid-accuracy:0.736609

[INFO 23-08-16 11:08:05.5642 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.010715 train-accuracy:0.761895 valid-loss:1.065719 valid-accuracy:0.736609

[INFO 23-08-16 11:08:06.6744 UTC gradient_boosted_trees.cc:1542] num-trees:300 train-loss:0.540563 train-accuracy:0.877271 valid-loss:0.581734 valid-accuracy:0.869854

[INFO 23-08-16 11:08:06.6744 UTC gradient_boosted_trees.cc:247] Truncates the model to 300 tree(s) i.e. 300 iteration(s).

[INFO 23-08-16 11:08:06.6745 UTC gradient_boosted_trees.cc:310] Final model num-trees:300 valid-loss:0.581734 valid-accuracy:0.869854

[INFO 23-08-16 11:08:06.6779 UTC hyperparameters_optimizer.cc:582] [3/50] Score: -0.581734 / -0.581734 HParams: fields { name: "min_examples" value { integer: 10 } } fields { name: "categorical_algorithm" value { categorical: "CART" } } fields { name: "growing_strategy" value { categorical: "LOCAL" } } fields { name: "max_depth" value { integer: 3 } } fields { name: "use_hessian_gain" value { categorical: "true" } } fields { name: "shrinkage" value { real: 0.15 } } fields { name: "num_candidate_attributes_ratio" value { real: 1 } }

[INFO 23-08-16 11:08:06.6786 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:06.6787 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:06.6844 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:06.7151 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.057450 train-accuracy:0.761895 valid-loss:1.114456 valid-accuracy:0.736609

[INFO 23-08-16 11:08:06.8117 UTC gradient_boosted_trees.cc:1542] num-trees:300 train-loss:0.537262 train-accuracy:0.878780 valid-loss:0.585214 valid-accuracy:0.869854

[INFO 23-08-16 11:08:06.8117 UTC gradient_boosted_trees.cc:247] Truncates the model to 300 tree(s) i.e. 300 iteration(s).

[INFO 23-08-16 11:08:06.8117 UTC gradient_boosted_trees.cc:310] Final model num-trees:300 valid-loss:0.585214 valid-accuracy:0.869854

[INFO 23-08-16 11:08:06.8126 UTC hyperparameters_optimizer.cc:582] [4/50] Score: -0.585214 / -0.581734 HParams: fields { name: "min_examples" value { integer: 10 } } fields { name: "categorical_algorithm" value { categorical: "RANDOM" } } fields { name: "growing_strategy" value { categorical: "LOCAL" } } fields { name: "max_depth" value { integer: 3 } } fields { name: "use_hessian_gain" value { categorical: "true" } } fields { name: "shrinkage" value { real: 0.15 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.5 } }

[INFO 23-08-16 11:08:06.8139 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:06.8140 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:06.8191 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:06.8574 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.016525 train-accuracy:0.761895 valid-loss:1.069784 valid-accuracy:0.736609

[INFO 23-08-16 11:08:07.2475 UTC early_stopping.cc:53] Early stop of the training because the validation loss does not decrease anymore. Best valid-loss: 0.588227

[INFO 23-08-16 11:08:07.2476 UTC gradient_boosted_trees.cc:247] Truncates the model to 113 tree(s) i.e. 113 iteration(s).

[INFO 23-08-16 11:08:07.2487 UTC gradient_boosted_trees.cc:310] Final model num-trees:113 valid-loss:0.588227 valid-accuracy:0.868969

[INFO 23-08-16 11:08:07.2525 UTC hyperparameters_optimizer.cc:582] [5/50] Score: -0.588227 / -0.581734 HParams: fields { name: "min_examples" value { integer: 7 } } fields { name: "categorical_algorithm" value { categorical: "CART" } } fields { name: "growing_strategy" value { categorical: "BEST_FIRST_GLOBAL" } } fields { name: "max_num_nodes" value { integer: 64 } } fields { name: "use_hessian_gain" value { categorical: "false" } } fields { name: "shrinkage" value { real: 0.1 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.2 } }

[INFO 23-08-16 11:08:07.2582 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:07.2583 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:07.2630 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:07.2844 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.053989 train-accuracy:0.761895 valid-loss:1.109535 valid-accuracy:0.736609

[INFO 23-08-16 11:08:07.6031 UTC early_stopping.cc:53] Early stop of the training because the validation loss does not decrease anymore. Best valid-loss: 0.569154

[INFO 23-08-16 11:08:07.6031 UTC gradient_boosted_trees.cc:247] Truncates the model to 161 tree(s) i.e. 161 iteration(s).

[INFO 23-08-16 11:08:07.6034 UTC gradient_boosted_trees.cc:310] Final model num-trees:161 valid-loss:0.569154 valid-accuracy:0.873838

[INFO 23-08-16 11:08:07.6057 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:07.6058 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:07.6059 UTC hyperparameters_optimizer.cc:582] [6/50] Score: -0.569154 / -0.569154 HParams: fields { name: "min_examples" value { integer: 2 } } fields { name: "categorical_algorithm" value { categorical: "CART" } } fields { name: "growing_strategy" value { categorical: "LOCAL" } } fields { name: "max_depth" value { integer: 5 } } fields { name: "use_hessian_gain" value { categorical: "true" } } fields { name: "shrinkage" value { real: 0.15 } } fields { name: "num_candidate_attributes_ratio" value { real: 1 } }

[INFO 23-08-16 11:08:07.6114 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:07.6632 UTC early_stopping.cc:53] Early stop of the training because the validation loss does not decrease anymore. Best valid-loss: 0.578871

[INFO 23-08-16 11:08:07.6632 UTC gradient_boosted_trees.cc:247] Truncates the model to 130 tree(s) i.e. 130 iteration(s).

[INFO 23-08-16 11:08:07.6638 UTC gradient_boosted_trees.cc:310] Final model num-trees:130 valid-loss:0.578871 valid-accuracy:0.869854

[INFO 23-08-16 11:08:07.6667 UTC hyperparameters_optimizer.cc:582] [7/50] Score: -0.578871 / -0.569154 HParams: fields { name: "min_examples" value { integer: 2 } } fields { name: "categorical_algorithm" value { categorical: "RANDOM" } } fields { name: "growing_strategy" value { categorical: "BEST_FIRST_GLOBAL" } } fields { name: "max_num_nodes" value { integer: 32 } } fields { name: "use_hessian_gain" value { categorical: "true" } } fields { name: "shrinkage" value { real: 0.1 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.2 } }

[INFO 23-08-16 11:08:07.6677 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:07.6677 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:07.6714 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:0.981052 train-accuracy:0.761895 valid-loss:1.035441 valid-accuracy:0.736609

[INFO 23-08-16 11:08:07.6733 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:07.7146 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.080688 train-accuracy:0.761895 valid-loss:1.138783 valid-accuracy:0.736609

[INFO 23-08-16 11:08:07.7908 UTC early_stopping.cc:53] Early stop of the training because the validation loss does not decrease anymore. Best valid-loss: 0.574698

[INFO 23-08-16 11:08:07.7909 UTC gradient_boosted_trees.cc:247] Truncates the model to 242 tree(s) i.e. 242 iteration(s).

[INFO 23-08-16 11:08:07.7910 UTC gradient_boosted_trees.cc:310] Final model num-trees:242 valid-loss:0.574698 valid-accuracy:0.871625

[INFO 23-08-16 11:08:07.7922 UTC hyperparameters_optimizer.cc:582] [8/50] Score: -0.574698 / -0.569154 HParams: fields { name: "min_examples" value { integer: 2 } } fields { name: "categorical_algorithm" value { categorical: "CART" } } fields { name: "growing_strategy" value { categorical: "LOCAL" } } fields { name: "max_depth" value { integer: 4 } } fields { name: "use_hessian_gain" value { categorical: "true" } } fields { name: "shrinkage" value { real: 0.15 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.9 } }

[INFO 23-08-16 11:08:07.7931 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:07.7932 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:07.7991 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:07.8445 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.082622 train-accuracy:0.761895 valid-loss:1.140940 valid-accuracy:0.736609

[INFO 23-08-16 11:08:08.2101 UTC gradient_boosted_trees.cc:1542] num-trees:300 train-loss:0.488794 train-accuracy:0.890031 valid-loss:0.571949 valid-accuracy:0.873395

[INFO 23-08-16 11:08:08.2101 UTC gradient_boosted_trees.cc:247] Truncates the model to 284 tree(s) i.e. 284 iteration(s).

[INFO 23-08-16 11:08:08.2102 UTC gradient_boosted_trees.cc:310] Final model num-trees:284 valid-loss:0.571257 valid-accuracy:0.872953

[INFO 23-08-16 11:08:08.2127 UTC hyperparameters_optimizer.cc:582] [9/50] Score: -0.571257 / -0.569154 HParams: fields { name: "min_examples" value { integer: 10 } } fields { name: "categorical_algorithm" value { categorical: "CART" } } fields { name: "growing_strategy" value { categorical: "LOCAL" } } fields { name: "max_depth" value { integer: 5 } } fields { name: "use_hessian_gain" value { categorical: "true" } } fields { name: "shrinkage" value { real: 0.1 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.5 } }

[INFO 23-08-16 11:08:08.2158 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:08.2158 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:08.2204 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:08.2651 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:0.991671 train-accuracy:0.761895 valid-loss:1.045193 valid-accuracy:0.736609

[INFO 23-08-16 11:08:09.2840 UTC gradient_boosted_trees.cc:1542] num-trees:300 train-loss:0.567582 train-accuracy:0.871475 valid-loss:0.596684 valid-accuracy:0.865870

[INFO 23-08-16 11:08:09.2840 UTC gradient_boosted_trees.cc:247] Truncates the model to 300 tree(s) i.e. 300 iteration(s).

[INFO 23-08-16 11:08:09.2840 UTC gradient_boosted_trees.cc:310] Final model num-trees:300 valid-loss:0.596684 valid-accuracy:0.865870

[INFO 23-08-16 11:08:09.2850 UTC hyperparameters_optimizer.cc:582] [10/50] Score: -0.596684 / -0.569154 HParams: fields { name: "min_examples" value { integer: 2 } } fields { name: "categorical_algorithm" value { categorical: "CART" } } fields { name: "growing_strategy" value { categorical: "LOCAL" } } fields { name: "max_depth" value { integer: 3 } } fields { name: "use_hessian_gain" value { categorical: "true" } } fields { name: "shrinkage" value { real: 0.1 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.2 } }

[INFO 23-08-16 11:08:09.2866 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:09.2867 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:09.2915 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:09.3449 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.015325 train-accuracy:0.761895 valid-loss:1.070753 valid-accuracy:0.736609

[INFO 23-08-16 11:08:09.6511 UTC gradient_boosted_trees.cc:1542] num-trees:300 train-loss:0.499704 train-accuracy:0.890712 valid-loss:0.584889 valid-accuracy:0.869854

[INFO 23-08-16 11:08:09.6511 UTC gradient_boosted_trees.cc:247] Truncates the model to 298 tree(s) i.e. 298 iteration(s).

[INFO 23-08-16 11:08:09.6512 UTC gradient_boosted_trees.cc:310] Final model num-trees:298 valid-loss:0.584790 valid-accuracy:0.869411

[INFO 23-08-16 11:08:09.6544 UTC hyperparameters_optimizer.cc:582] [11/50] Score: -0.58479 / -0.569154 HParams: fields { name: "min_examples" value { integer: 7 } } fields { name: "categorical_algorithm" value { categorical: "CART" } } fields { name: "growing_strategy" value { categorical: "BEST_FIRST_GLOBAL" } } fields { name: "max_num_nodes" value { integer: 16 } } fields { name: "use_hessian_gain" value { categorical: "false" } } fields { name: "shrinkage" value { real: 0.05 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.2 } }

[INFO 23-08-16 11:08:09.6593 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:09.6594 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:09.6638 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:09.7372 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.056130 train-accuracy:0.761895 valid-loss:1.113107 valid-accuracy:0.736609

[INFO 23-08-16 11:08:09.7959 UTC early_stopping.cc:53] Early stop of the training because the validation loss does not decrease anymore. Best valid-loss: 0.578549

[INFO 23-08-16 11:08:09.7959 UTC gradient_boosted_trees.cc:247] Truncates the model to 105 tree(s) i.e. 105 iteration(s).

[INFO 23-08-16 11:08:09.7971 UTC gradient_boosted_trees.cc:310] Final model num-trees:105 valid-loss:0.578549 valid-accuracy:0.871625

[INFO 23-08-16 11:08:09.8037 UTC hyperparameters_optimizer.cc:582] [12/50] Score: -0.578549 / -0.569154 HParams: fields { name: "min_examples" value { integer: 2 } } fields { name: "categorical_algorithm" value { categorical: "CART" } } fields { name: "growing_strategy" value { categorical: "LOCAL" } } fields { name: "max_depth" value { integer: 8 } } fields { name: "use_hessian_gain" value { categorical: "true" } } fields { name: "shrinkage" value { real: 0.1 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.2 } }

[INFO 23-08-16 11:08:09.8107 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:09.8107 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:09.8152 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:09.8803 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.081456 train-accuracy:0.761895 valid-loss:1.139474 valid-accuracy:0.736609

[INFO 23-08-16 11:08:10.0550 UTC early_stopping.cc:53] Early stop of the training because the validation loss does not decrease anymore. Best valid-loss: 0.575113

[INFO 23-08-16 11:08:10.0551 UTC gradient_boosted_trees.cc:247] Truncates the model to 242 tree(s) i.e. 242 iteration(s).

[INFO 23-08-16 11:08:10.0553 UTC gradient_boosted_trees.cc:310] Final model num-trees:242 valid-loss:0.575113 valid-accuracy:0.870297

[INFO 23-08-16 11:08:10.0575 UTC hyperparameters_optimizer.cc:582] [13/50] Score: -0.575113 / -0.569154 HParams: fields { name: "min_examples" value { integer: 7 } } fields { name: "categorical_algorithm" value { categorical: "RANDOM" } } fields { name: "growing_strategy" value { categorical: "LOCAL" } } fields { name: "max_depth" value { integer: 5 } } fields { name: "use_hessian_gain" value { categorical: "true" } } fields { name: "shrinkage" value { real: 0.1 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.5 } }

[INFO 23-08-16 11:08:10.0586 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:10.0586 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:10.0638 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:10.1122 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.010652 train-accuracy:0.761895 valid-loss:1.064824 valid-accuracy:0.736609

[INFO 23-08-16 11:08:10.3474 UTC early_stopping.cc:53] Early stop of the training because the validation loss does not decrease anymore. Best valid-loss: 0.574784

[INFO 23-08-16 11:08:10.3474 UTC gradient_boosted_trees.cc:247] Truncates the model to 249 tree(s) i.e. 249 iteration(s).

[INFO 23-08-16 11:08:10.3476 UTC gradient_boosted_trees.cc:310] Final model num-trees:249 valid-loss:0.574784 valid-accuracy:0.867641

[INFO 23-08-16 11:08:10.3491 UTC hyperparameters_optimizer.cc:582] [14/50] Score: -0.574784 / -0.569154 HParams: fields { name: "min_examples" value { integer: 7 } } fields { name: "categorical_algorithm" value { categorical: "RANDOM" } } fields { name: "growing_strategy" value { categorical: "LOCAL" } } fields { name: "max_depth" value { integer: 4 } } fields { name: "use_hessian_gain" value { categorical: "true" } } fields { name: "shrinkage" value { real: 0.15 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.9 } }

[INFO 23-08-16 11:08:10.3509 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:10.3510 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:10.3566 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:10.4110 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.013729 train-accuracy:0.761895 valid-loss:1.069266 valid-accuracy:0.736609

[INFO 23-08-16 11:08:10.8116 UTC early_stopping.cc:53] Early stop of the training because the validation loss does not decrease anymore. Best valid-loss: 0.577748

[INFO 23-08-16 11:08:10.8117 UTC gradient_boosted_trees.cc:247] Truncates the model to 89 tree(s) i.e. 89 iteration(s).

[INFO 23-08-16 11:08:10.8120 UTC gradient_boosted_trees.cc:310] Final model num-trees:89 valid-loss:0.577748 valid-accuracy:0.871625

[INFO 23-08-16 11:08:10.8133 UTC hyperparameters_optimizer.cc:582] [15/50] Score: -0.577748 / -0.569154 HParams: fields { name: "min_examples" value { integer: 7 } } fields { name: "categorical_algorithm" value { categorical: "RANDOM" } } fields { name: "growing_strategy" value { categorical: "BEST_FIRST_GLOBAL" } } fields { name: "max_num_nodes" value { integer: 16 } } fields { name: "use_hessian_gain" value { categorical: "false" } } fields { name: "shrinkage" value { real: 0.15 } } fields { name: "num_candidate_attributes_ratio" value { real: 1 } }

[INFO 23-08-16 11:08:10.8143 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:10.8143 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:10.8194 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:10.8644 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.009908 train-accuracy:0.761895 valid-loss:1.065147 valid-accuracy:0.736609

[INFO 23-08-16 11:08:11.2479 UTC early_stopping.cc:53] Early stop of the training because the validation loss does not decrease anymore. Best valid-loss: 0.578216

[INFO 23-08-16 11:08:11.2480 UTC gradient_boosted_trees.cc:247] Truncates the model to 195 tree(s) i.e. 195 iteration(s).

[INFO 23-08-16 11:08:11.2489 UTC gradient_boosted_trees.cc:310] Final model num-trees:195 valid-loss:0.578216 valid-accuracy:0.869854

[INFO 23-08-16 11:08:11.2556 UTC hyperparameters_optimizer.cc:582] [16/50] Score: -0.578216 / -0.569154 HParams: fields { name: "min_examples" value { integer: 5 } } fields { name: "categorical_algorithm" value { categorical: "RANDOM" } } fields { name: "growing_strategy" value { categorical: "BEST_FIRST_GLOBAL" } } fields { name: "max_num_nodes" value { integer: 256 } } fields { name: "use_hessian_gain" value { categorical: "false" } } fields { name: "shrinkage" value { real: 0.05 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.2 } }

[INFO 23-08-16 11:08:11.2566 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:11.2566 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:11.2647 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:11.3506 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.079317 train-accuracy:0.761895 valid-loss:1.137114 valid-accuracy:0.736609

[INFO 23-08-16 11:08:11.3940 UTC gradient_boosted_trees.cc:1542] num-trees:300 train-loss:0.552215 train-accuracy:0.876248 valid-loss:0.594582 valid-accuracy:0.869411

[INFO 23-08-16 11:08:11.3940 UTC gradient_boosted_trees.cc:247] Truncates the model to 294 tree(s) i.e. 294 iteration(s).

[INFO 23-08-16 11:08:11.3941 UTC gradient_boosted_trees.cc:310] Final model num-trees:294 valid-loss:0.594392 valid-accuracy:0.868969

[INFO 23-08-16 11:08:11.3949 UTC hyperparameters_optimizer.cc:582] [17/50] Score: -0.594392 / -0.569154 HParams: fields { name: "min_examples" value { integer: 2 } } fields { name: "categorical_algorithm" value { categorical: "RANDOM" } } fields { name: "growing_strategy" value { categorical: "LOCAL" } } fields { name: "max_depth" value { integer: 3 } } fields { name: "use_hessian_gain" value { categorical: "false" } } fields { name: "shrinkage" value { real: 0.1 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.9 } }

[INFO 23-08-16 11:08:11.3962 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:11.3963 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:11.4011 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:11.4157 UTC gradient_boosted_trees.cc:1542] num-trees:300 train-loss:0.515704 train-accuracy:0.886719 valid-loss:0.592440 valid-accuracy:0.868083

[INFO 23-08-16 11:08:11.4158 UTC gradient_boosted_trees.cc:247] Truncates the model to 300 tree(s) i.e. 300 iteration(s).

[INFO 23-08-16 11:08:11.4158 UTC gradient_boosted_trees.cc:310] Final model num-trees:300 valid-loss:0.592440 valid-accuracy:0.868083

[INFO 23-08-16 11:08:11.4257 UTC hyperparameters_optimizer.cc:582] [18/50] Score: -0.59244 / -0.569154 HParams: fields { name: "min_examples" value { integer: 10 } } fields { name: "categorical_algorithm" value { categorical: "CART" } } fields { name: "growing_strategy" value { categorical: "BEST_FIRST_GLOBAL" } } fields { name: "max_num_nodes" value { integer: 64 } } fields { name: "use_hessian_gain" value { categorical: "false" } } fields { name: "shrinkage" value { real: 0.02 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.2 } }

[INFO 23-08-16 11:08:11.4423 UTC gradient_boosted_trees.cc:459] Default loss set to BINOMIAL_LOG_LIKELIHOOD

[INFO 23-08-16 11:08:11.4423 UTC gradient_boosted_trees.cc:1085] Training gradient boosted tree on 22792 example(s) and 14 feature(s).

[INFO 23-08-16 11:08:11.4468 UTC gradient_boosted_trees.cc:1128] 20533 examples used for training and 2259 examples used for validation

[INFO 23-08-16 11:08:11.4613 UTC early_stopping.cc:53] Early stop of the training because the validation loss does not decrease anymore. Best valid-loss: 0.579733

[INFO 23-08-16 11:08:11.4613 UTC gradient_boosted_trees.cc:247] Truncates the model to 78 tree(s) i.e. 78 iteration(s).

[INFO 23-08-16 11:08:11.4627 UTC gradient_boosted_trees.cc:310] Final model num-trees:78 valid-loss:0.579733 valid-accuracy:0.867641

[INFO 23-08-16 11:08:11.4684 UTC hyperparameters_optimizer.cc:582] [19/50] Score: -0.579733 / -0.569154 HParams: fields { name: "min_examples" value { integer: 2 } } fields { name: "categorical_algorithm" value { categorical: "RANDOM" } } fields { name: "growing_strategy" value { categorical: "LOCAL" } } fields { name: "max_depth" value { integer: 8 } } fields { name: "use_hessian_gain" value { categorical: "true" } } fields { name: "shrinkage" value { real: 0.1 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.5 } }

[INFO 23-08-16 11:08:11.4914 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.055888 train-accuracy:0.761895 valid-loss:1.113012 valid-accuracy:0.736609

[INFO 23-08-16 11:08:11.4956 UTC early_stopping.cc:53] Early stop of the training because the validation loss does not decrease anymore. Best valid-loss: 0.586668

[INFO 23-08-16 11:08:11.4956 UTC gradient_boosted_trees.cc:247] Truncates the model to 61 tree(s) i.e. 61 iteration(s).

[INFO 23-08-16 11:08:11.4962 UTC gradient_boosted_trees.cc:310] Final model num-trees:61 valid-loss:0.586668 valid-accuracy:0.868969

[INFO 23-08-16 11:08:11.4974 UTC hyperparameters_optimizer.cc:582] [20/50] Score: -0.586668 / -0.569154 HParams: fields { name: "min_examples" value { integer: 10 } } fields { name: "categorical_algorithm" value { categorical: "RANDOM" } } fields { name: "growing_strategy" value { categorical: "BEST_FIRST_GLOBAL" } } fields { name: "max_num_nodes" value { integer: 16 } } fields { name: "use_hessian_gain" value { categorical: "false" } } fields { name: "shrinkage" value { real: 0.15 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.5 } }

[INFO 23-08-16 11:08:11.5397 UTC gradient_boosted_trees.cc:1542] num-trees:1 train-loss:1.012875 train-accuracy:0.761895 valid-loss:1.067941 valid-accuracy:0.736609

[INFO 23-08-16 11:08:12.4754 UTC early_stopping.cc:53] Early stop of the training because the validation loss does not decrease anymore. Best valid-loss: 0.576467

[INFO 23-08-16 11:08:12.4754 UTC gradient_boosted_trees.cc:247] Truncates the model to 147 tree(s) i.e. 147 iteration(s).

[INFO 23-08-16 11:08:12.4757 UTC gradient_boosted_trees.cc:310] Final model num-trees:147 valid-loss:0.576467 valid-accuracy:0.870739

[INFO 23-08-16 11:08:12.4773 UTC hyperparameters_optimizer.cc:582] [21/50] Score: -0.576467 / -0.569154 HParams: fields { name: "min_examples" value { integer: 5 } } fields { name: "categorical_algorithm" value { categorical: "RANDOM" } } fields { name: "growing_strategy" value { categorical: "LOCAL" } } fields { name: "max_depth" value { integer: 5 } } fields { name: "use_hessian_gain" value { categorical: "true" } } fields { name: "shrinkage" value { real: 0.15 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.5 } }

[INFO 23-08-16 11:08:12.8409 UTC gradient_boosted_trees.cc:1542] num-trees:300 train-loss:0.498302 train-accuracy:0.892222 valid-loss:0.585352 valid-accuracy:0.870297

[INFO 23-08-16 11:08:12.8409 UTC gradient_boosted_trees.cc:247] Truncates the model to 296 tree(s) i.e. 296 iteration(s).

[INFO 23-08-16 11:08:12.8410 UTC gradient_boosted_trees.cc:310] Final model num-trees:296 valid-loss:0.585279 valid-accuracy:0.870297

[INFO 23-08-16 11:08:12.8441 UTC hyperparameters_optimizer.cc:582] [22/50] Score: -0.585279 / -0.569154 HParams: fields { name: "min_examples" value { integer: 10 } } fields { name: "categorical_algorithm" value { categorical: "CART" } } fields { name: "growing_strategy" value { categorical: "BEST_FIRST_GLOBAL" } } fields { name: "max_num_nodes" value { integer: 16 } } fields { name: "use_hessian_gain" value { categorical: "false" } } fields { name: "shrinkage" value { real: 0.05 } } fields { name: "num_candidate_attributes_ratio" value { real: 0.2 } }

[INFO 23-08-16 11:08:13.1007 UTC early_stopping.cc:53] Early stop of the training because the validation loss does not decrease anymore. Best valid-loss: 0.579464

[INFO 23-08-16 11:08:13.1008 UTC gradient_boosted_trees.cc:247] Truncates the model to 129 tree(s) i.e. 129 iteration(s).

[INFO 23-08-16 11:08:13.1018 UTC gradient_boosted_trees.cc:310] Final model num-trees:129 valid-loss:0.579464 valid-accuracy:0.870297

[INFO 23-08-16 11:08:13.1084 UTC hyperparameters_optimizer.cc:582] [23/50] Score: -0.579464 / -0.569154 HParams: fields { name: "min_examples" value { integer: 5 } } fields { name: "categorical_algorithm" value { categorical: "CART" } } fields { name: "growing_strategy" value { categorical: "BEST_FIRST_GLOBAL" } } fields { name: "max_num_nodes" value { integer: 128 } } fields { name: "use_hessian_gain" value { categorical: "false" } } fields { name: "shrinkage" value { real: 0.1 } } fields { name: "num_candidate_attributes_ratio" value { real: 1 } }

[INFO 23-08-16 11:08:13.1165 UTC gradient_boosted_trees.cc:1542] num-trees:300 train-loss:0.511416 train-accuracy:0.884381 valid-loss:0.572223 valid-accuracy:0.874723

[INFO 23-08-16 11:08:13.1166 UTC gradient_boosted_trees.cc:247] Truncates the model to 291 tree(s) i.e. 291 iteration(s).