Qt/C++ Audio/Video Development 60: Coordinate Picking / Selecting a Rectangle with the Mouse / Converting to Real Video-Source Coordinates

Published: December 17, 2023

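1. Preface

The idea is to pick up the coordinate where the mouse is pressed on a channel's picture, follow the mouse as it moves, and, once the button is released, draw a rectangle from the press and release coordinates. That rectangle serves as a hotspot or as the region for digital zoom. Such a region has many uses. The picture inside it can be enlarged directly. Its position can be sent to the device, which then sets the corresponding hotspot as its focus of observation. It can also feed AI analysis: a face appearing in the region can be treated as an intrusion, and a change in the picture inside the region can be treated as an object being moved without authorization. With different analysis algorithms on top, many kinds of detection become possible. All of them share one prerequisite: the user must be able to freely select the region they need in the video picture. That is the feature implemented here.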
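A press point and a release point only describe a usable rectangle once they are ordered, because the user may drag up or to the left. Below is a minimal sketch of that step in plain C++; `Point`, `Rect`, and `rectFromDrag` are hypothetical names used for illustration and are not taken from the author's source.

```cpp
#include <algorithm>

// Hypothetical plain types standing in for QPoint and QRect.
struct Point { int x; int y; };
struct Rect { int x; int y; int w; int h; };

// Build a rectangle from the press and release points, whatever the drag direction.
Rect rectFromDrag(Point press, Point release)
{
    const int left = std::min(press.x, release.x);
    const int top = std::min(press.y, release.y);
    const int right = std::max(press.x, release.x);
    const int bottom = std::max(press.y, release.y);
    return Rect{left, top, right - left, bottom - top};
}
```

In Qt code, `QRect::normalized()` covers the same case of swapped corners.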

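Captured video may be shown in the UI stretched to fill the widget, or scaled with its aspect ratio preserved. More importantly, the display widget is almost never exactly the size of the video resolution, so the coordinate of the mouse press has to be converted to the corresponding video coordinate using the widget size and the video size. The conversion is: videoX = mouseX / widgetWidth * videoWidth, and videoY = mouseY / widgetHeight * videoHeight. As written, this holds when the picture fills the whole widget. With aspect-ratio scaling, the size in the formula should be that of the rectangle the picture is actually drawn in, and the offset of that rectangle has to be subtracted from the mouse coordinate first. With integer coordinates, multiply before dividing, otherwise the division truncates to zero. So it is enough to handle the mouse press, move, and release events on the video widget and then emit a signal carrying the event type (press / move / release) and the QPoint coordinate. Why include the type? It makes handling easier for the user: on press, remember the coordinate; on move, draw the box; on release, send the filter that performs the crop, which is the digital zoom.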
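The conversion above can be sketched as a small function. This is an illustration under stated assumptions, not the author's implementation: `Point`, `Rect`, `Size`, and `widgetToVideo` are hypothetical names, and `painted` is assumed to be the rectangle inside the widget where the frame is actually drawn (the whole widget for stretch-fill, the letterboxed rectangle for aspect-ratio scaling).

```cpp
#include <algorithm>

// Hypothetical plain types standing in for QPoint, QRect and QSize.
struct Point { int x; int y; };
struct Rect { int x; int y; int w; int h; };
struct Size { int w; int h; };

// Map a point in widget coordinates to video pixel coordinates.
Point widgetToVideo(Point p, Rect painted, Size video)
{
    if (painted.w <= 0 || painted.h <= 0 || video.w <= 0 || video.h <= 0) {
        return Point{0, 0};
    }

    // Subtract the offset of the painted area, then scale.
    // Multiplying before dividing keeps integer precision.
    long long vx = static_cast<long long>(p.x - painted.x) * video.w / painted.w;
    long long vy = static_cast<long long>(p.y - painted.y) * video.h / painted.h;

    // Clicks in the blank border are clamped to the nearest video pixel.
    vx = std::max<long long>(0, std::min<long long>(vx, video.w - 1));
    vy = std::max<long long>(0, std::min<long long>(vy, video.h - 1));
    return Point{static_cast<int>(vx), static_cast<int>(vy)};
}
```

For example, a 1920x1080 stream stretched over a 640x360 widget maps the widget center (320, 180) to (960, 540). Converting both corners of the selection this way yields the rectangle in source coordinates.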

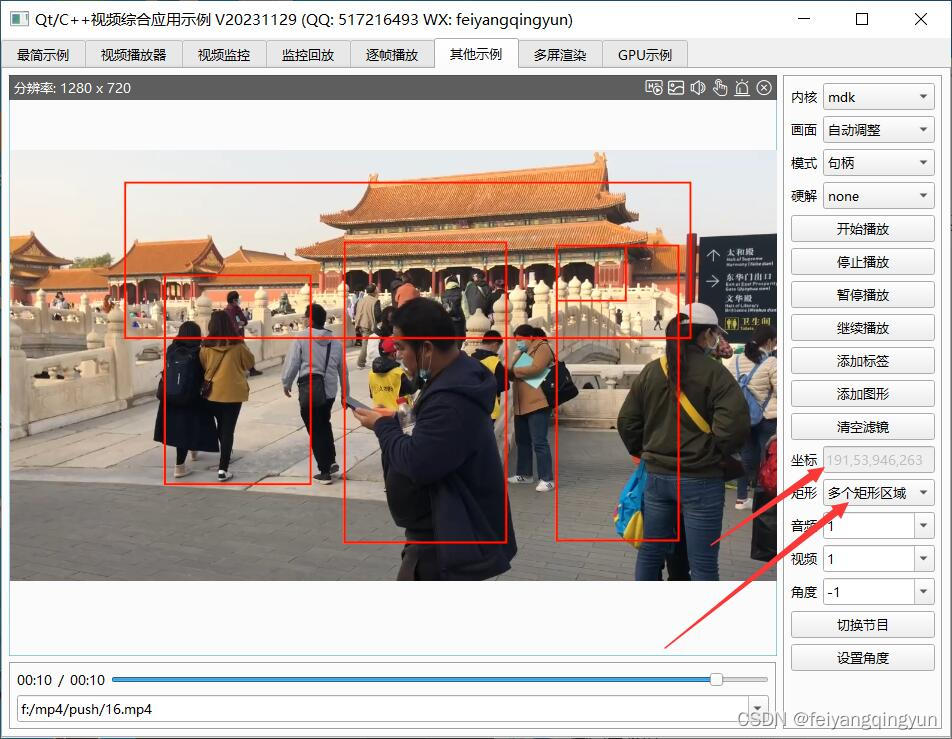

二、效果图

3. Links and demo download

- Chinese site: https://gitee.com/feiyangqingyun

- International site: https://github.com/feiyangqingyun

- Portfolio: https://blog.csdn.net/feiyangqingyun/article/details/97565652

- Demo download: https://pan.baidu.com/s/1d7TH_GEYl5nOecuNlWJJ7g (extraction code: 01jf, file name: bin_video_demo)

- Video channel: https://space.bilibili.com/687803542

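4. Features

4.1. Basic features

- Supports a wide range of audio and video file formats, such as mp3, wav, mp4, asf, rm, rmvb, and mkv.

- Supports local camera devices and local desktop capture, including multiple devices and multiple screens.

- Supports a range of video stream formats, such as rtp, rtsp, rtmp, http, and udp.

- For local and network audio/video files, automatically detects the file duration, playback position, volume, mute state, and so on.

- For files, the playback position can be set, the volume adjusted, and the mute state changed.

- Supports variable-speed file playback at 0.5x, 1.0x, 2.5x, 5.0x and other speeds, i.e. slow and fast playback.

- Supports starting, stopping, pausing, and resuming playback.

- Supports snapshots. The file path can be specified, and an option controls whether a preview is shown automatically when the snapshot completes.

- Supports recording with manual start and stop. Some cores can pause and then resume a recording to skip the parts that need not be recorded.

- Supports seamless loop playback, automatic reconnection, and similar mechanisms.

- Provides signals for playback started, playback finished, decoded image received, snapshot image received, video size changed, recording state changed, and so on.

- Multithreaded: each decoder runs in its own thread, so the main UI does not block.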

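4.2. Special features

- Supports multiple decoding cores at once, including the qmedia core (Qt4/Qt5/Qt6), the ffmpeg core (ffmpeg2/ffmpeg3/ffmpeg4/ffmpeg5/ffmpeg6), the vlc core (vlc2/vlc3), the mpv core (mpv1/mpv2), the mdk core, the Hikvision SDK, the easyplayer core, and others.

- A thorough multi-level base class design: adding a new decoding core takes very little code and immediately gets the whole mechanism, so it is easy to extend.

- Supports three picture display strategies: automatic (shown at the original resolution when that is smaller than the display widget, otherwise scaled with the aspect ratio preserved), aspect-ratio scaling (always scaled with the aspect ratio preserved), and stretch-fill (always stretched to fill). All three strategies are available with every core and every video display mode.

- Supports three video display modes: handle mode (the widget handle is passed to the core, which draws into it), paint mode (data from the callback is converted to a QImage and drawn with QPainter), and GPU mode (data from the callback is converted to yuv and drawn with QOpenglWidget).

- Supports several hardware acceleration types: ffmpeg offers dxva2, d3d11va, etc.; vlc offers any, dxva2, d3d11va; mpv offers auto, dxva2, d3d11va; mdk offers dxva2, d3d11va, cuda, mft, etc. The available types depend on the system: linux has vaapi and vdpau, for example, and macos has videotoolbox.

- The decoding thread and the display widget are decoupled: any decoding core can be attached to any display widget and switched dynamically.

- Supports shared decoding threads, enabled by default and handled automatically. When the same video address is detected, one decoding thread is shared, which in a network video environment greatly reduces network traffic and the streaming load on the remote device. The leading video vendors in China all use this strategy. A single pulled stream can then be shown in dozens or hundreds of channels.

- Automatically detects the video rotation angle and draws accordingly. Video shot on a phone, for example, is usually rotated by 90 degrees and must be rotated automatically during playback, otherwise it appears in the wrong orientation.

- Automatically detects resolution changes while a stream is playing and adjusts the video widget size. A camera's resolution can be reconfigured while in use, for example, and the video widget has to follow the change.

- Audio/video files loop seamlessly, without a black screen or other visible traces of the switch.

- The video widget supports any decoding core, any picture display strategy, and any video display mode at the same time.

- The video widget's floating bar works in all three modes (handle, paint, GPU) and is not implemented by moving a widget around with absolute coordinates.

- Local camera devices can be played with a specified device name, resolution, and frame rate.

- Local desktop capture supports setting the capture region, offset, desktop index, and frame rate, as well as capturing several desktops at once. A fixed window can also be captured by its window title.

- Recording works for opened video files, local cameras, local desktops, network video streams, and so on.

- Opening and closing respond instantly. Whether a nonexistent video or network stream is being opened, a device is being probed, or a read is waiting on a timeout, a close command interrupts the ongoing operation immediately.

- Supports opening image files of various kinds, and playing local audio/video files by drag and drop.

- The stream transport can be set to tcp or udp. Some devices provide only one of them, tcp for example, and must be opened with that protocol.

- The connection timeout (used while probing the stream) and the read timeout (used during capture) can be set.

- Supports frame-by-frame playback, with previous-frame and next-frame functions for stepping through the captured images.

- For audio files, album information such as title, artist, album, and cover art is extracted automatically, and the cover is displayed automatically.

- Video latency is very low at about 0.2 s, and a video stream opens in about 0.5 s, thanks to dedicated optimization.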

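- Supports H264/H265 encoding (more and more surveillance cameras now stream H265) when generating video files, detecting and switching the encoding format automatically.

- Supports playing streams whose user information contains special characters (such as +, #, or @), with built-in escaping.

- Supports filters, with all kinds of watermarks and graphic effects, including multiple watermarks and images. OSD labels and graphics can be written into the MP4 file.

- Supports the various audio formats found in video streams, including AAC, PCM, G.726, G.711A, G.711Mu, G.711ulaw, G.711alaw, and MP2L2. AAC is recommended for the best compatibility across platforms.

- The ffmpeg core decodes with pure Qt + ffmpeg and does not depend on sdl or other third-party libraries for drawing or playback. GPU drawing uses qopenglwidget, and audio playback uses qaudiooutput.

- The ffmpeg and mdk cores support Android, and mdk supports Android hardware decoding with very strong performance.

- Audio and video tracks (program channels) can be switched. A ts file may carry several audio/video program streams, and the one to play can be chosen either before playback or dynamically during playback.

- The video rotation angle can be set either before playback or dynamically during playback.

- The video widget's floating bar comes with recording start/stop, mute toggle, snapshot, close video, and similar functions.

- The audio component parses sound waveform values, which can be used to draw waveform curves and bar-style level meters. A sound amplitude signal is provided by default.

- Labels and graphics can be drawn in three ways: on the overlay layer, on the image, or at the source (in which case the information can be stored in the file).

- Playback is configured by passing in a single URL, which can carry the transport protocol, resolution, frame rate, and other information. No other settings are needed.

- Saving video to a file supports three strategies: automatic, file only, and transcode everything. The transcoding strategy can be auto-detect, convert to 264, or convert to 265. Encoded output can be scaled to a specified resolution or proportionally, for example when the size of the saved file is constrained.

- Supports saving encrypted files and playing them back decrypted, with a user-specified key text.

- The provided surveillance layout class supports displaying 64 channels at once, as well as irregular layouts such as 13 channels, or 6 rows by 2 columns on a phone. Layouts can be defined freely.

- Supports digital zoom: switch to digital zoom mode on the floating bar, select the region to enlarge on the picture, and it is enlarged automatically when the selection is complete. Switching the zoom mode again resets it.

- Every component prints very detailed messages, especially error messages, in a unified format. This is extremely useful when testing in complex on-site device environments, because it pinpoints which channel and which step failed.

- Separate example windows are provided for a simple demo, a video player, multi-view video surveillance, surveillance playback, frame-by-frame playback, multi-screen rendering, and so on, each showing how to use the corresponding feature.

- Surveillance playback lets you choose the vendor type, playback time range, user information, and channel, and supports seeking.

- The sound card used for playback can be selected from a drop-down list of audio devices, and a function for switching sound cards is provided.

- Can be compiled as a mobile app. A dedicated mobile layout is provided, so it can serve as a video surveillance client on a phone.

- The code framework and structure have been carefully optimized. Performance is strong, comments are detailed, and the project is updated continuously.

- The source supports windows, linux, mac, android, and others, including Chinese linux distributions such as UnionTech UOS, NeoKylin, and Kylin, as well as embedded linux.

- The source supports Qt4, Qt5, and Qt6, and is compatible with all versions.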
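The three picture display strategies listed above (automatic, aspect-ratio scaling, stretch-fill) each determine where inside the widget the frame is painted. The following is a minimal sketch of that calculation, assuming the frame is centered in the widget. `ScaleMode`, `Size`, `Rect`, and `displayRect` are hypothetical names for illustration, not the author's API.

```cpp
// Hypothetical plain types standing in for QSize and QRect.
struct Size { int w; int h; };
struct Rect { int x; int y; int w; int h; };

enum class ScaleMode { Auto, KeepAspect, Stretch };

// Compute the rectangle inside the widget where the frame is painted.
Rect displayRect(Size widget, Size video, ScaleMode mode)
{
    if (widget.w <= 0 || widget.h <= 0 || video.w <= 0 || video.h <= 0) {
        return Rect{0, 0, 0, 0};
    }
    if (mode == ScaleMode::Stretch) {
        return Rect{0, 0, widget.w, widget.h};
    }

    int w = video.w;
    int h = video.h;
    const bool fits = video.w <= widget.w && video.h <= widget.h;

    // Auto keeps the original size when it fits; otherwise both modes scale to fit.
    if (mode == ScaleMode::KeepAspect || !fits) {
        const long long byWidth = static_cast<long long>(widget.w) * video.h;
        const long long byHeight = static_cast<long long>(widget.h) * video.w;
        if (byWidth <= byHeight) {
            // The widget is relatively narrower than the video: width is the limit.
            w = widget.w;
            h = static_cast<int>(byWidth / video.w);
        } else {
            // The widget is relatively wider than the video: height is the limit.
            h = widget.h;
            w = static_cast<int>(byHeight / video.h);
        }
    }

    // Center the picture; the remaining border is the blank fill area.
    return Rect{(widget.w - w) / 2, (widget.h - h) / 2, w, h};
}
```

A 1920x1080 frame in a 640x480 widget yields the rectangle (0, 60, 640, 360) under both the automatic and aspect-ratio strategies. This painted rectangle is also what a mouse coordinate has to be measured against when it is converted to source coordinates.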

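4.3. Video widget

- Any number of OSD labels can be added dynamically. A label has a name, visibility flag, font size, text, text color, background color, image, coordinates, format (text, date, time, date-time, image), and position (top-left, bottom-left, top-right, bottom-right, center, custom coordinates).

- Any number of graphics can be added dynamically. The regions produced by an AI algorithm, for example, can be sent straight to the video widget. Graphics can have any shape and are drawn directly on the original image using absolute coordinates.

- A graphic has a name, border width, border color, background color, rectangle, path collection, point collection, and so on.

- Each graphic can specify one or more of the three region types (rectangle, path collection, point collection), and every one that is specified gets drawn.

- A floating bar is built in and can be placed at the top, bottom, left, or right.

- The floating bar's parameters include margin, spacing, background opacity, background color, text color, pressed color, position, the set of button icon codes, the set of button name identifiers, and the set of button tooltips.

- The row of tool buttons on the floating bar is customizable through a struct parameter, and icons can be either icon-font glyphs or custom images.

- The floating bar buttons implement recording toggle, snapshot, mute toggle, close video, and similar functions internally. You can also add your own functions in the source.

- Buttons with a built-in function switch their icon accordingly. The record button changes to a recording-in-progress icon once pressed, for example, and the sound button changes to a muted icon and back again on the next toggle.

- Every button click on the floating bar is emitted as a signal carrying the button's unique name, so you can connect your own handlers.

- The blank area of the floating bar can show a hint. By default it shows the current video resolution, and frame rate, bitrate, and similar information can be added.

- The video widget's parameters include border width, border color, focus color, background color (transparent by default), text color (the global text color by default), fill color (the blank area outside the video is filled with black), background text, background image (takes precedence when set), whether to copy the image, scale mode (automatic, aspect-ratio scaling, stretch-fill), video display mode (handle, paint, GPU), floating bar enabled, floating bar size (height when horizontal, width when vertical), and floating bar position (top, bottom, left, right).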

5. Related code

```cpp
void VideoWidget::btnClicked(const QString &btnName)
{
    // File names default to STRDATETIMEMS, prefixed with the video flag when one is set
    QString flag = widgetPara.videoFlag;
    QString name = STRDATETIMEMS;
    if (!flag.isEmpty()) {
        name = QString("%1_%2").arg(flag).arg(name);
    }

    if (btnName.endsWith("btnRecord")) {
        QString fileName = QString("%1/%2.mp4").arg(recordPath).arg(name);
        this->recordStart(fileName);
    } else if (btnName.endsWith("btnStop")) {
        this->recordStop();
    } else if (btnName.endsWith("btnSound")) {
        this->setMuted(true);
    } else if (btnName.endsWith("btnMuted")) {
        this->setMuted(false);
    } else if (btnName.endsWith("btnSnap")) {
        QString snapName = QString("%1/%2.jpg").arg(snapPath).arg(name);
        this->snap(snapName, false);
    } else if (btnName.endsWith("btnCrop")) {
        // Enter digital zoom mode (only the FFmpeg core is handled here)
        if (videoThread) {
            if (videoPara.videoCore == VideoCore_FFmpeg) {
                QMetaObject::invokeMethod(videoThread, "setCrop", Q_ARG(bool, true));
            }
        }
    } else if (btnName.endsWith("btnReset")) {
        // Leave digital zoom mode: remove the "crop" graphic and reset the thread
        if (videoThread) {
            this->removeGraph("crop");
            if (videoPara.videoCore == VideoCore_FFmpeg) {
                QMetaObject::invokeMethod(videoThread, "setCrop", Q_ARG(bool, false));
            }
        }
    } else if (btnName.endsWith("btnAlarm")) {
        // No handling implemented for this button
    } else if (btnName.endsWith("btnClose")) {
        this->stop();
    }
}
```

```cpp
void AbstractVideoWidget::appendGraph(const GraphInfo &graph)
{
    QMutexLocker locker(&mutex);
    listGraph << graph;
    this->update();
    emit sig_graphChanged();
}

void AbstractVideoWidget::removeGraph(const QString &name)
{
    QMutexLocker locker(&mutex);
    int count = listGraph.count();
    for (int i = 0; i < count; ++i) {
        if (listGraph.at(i).name == name) {
            listGraph.removeAt(i);
            break;
        }
    }

    this->update();
    emit sig_graphChanged();
}

void AbstractVideoWidget::clearGraph()
{
    QMutexLocker locker(&mutex);
    listGraph.clear();
    this->update();
    emit sig_graphChanged();
}
```
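The three functions above share one pattern: take the lock, change the list, then notify. Here is a standard-library sketch of the same pattern, for readers who want to try it outside Qt. `GraphStore` and `Graph` are hypothetical names, `std::mutex` stands in for `QMutex`, and the repaint and signal are left out. As a variation on the original, `remove` reports whether anything was removed, so a caller can skip the repaint when nothing changed.

```cpp
#include <algorithm>
#include <cstddef>
#include <mutex>
#include <string>
#include <vector>

// Hypothetical graphic record, reduced to the name used for lookup.
struct Graph { std::string name; };

class GraphStore
{
public:
    void append(const Graph &graph)
    {
        std::lock_guard<std::mutex> lock(mutex_);
        graphs_.push_back(graph);
    }

    // Remove the first graphic with the given name; returns false if none matched.
    bool remove(const std::string &name)
    {
        std::lock_guard<std::mutex> lock(mutex_);
        auto it = std::find_if(graphs_.begin(), graphs_.end(),
                               [&name](const Graph &g) { return g.name == name; });
        if (it == graphs_.end()) {
            return false;
        }
        graphs_.erase(it);
        return true;
    }

    void clear()
    {
        std::lock_guard<std::mutex> lock(mutex_);
        graphs_.clear();
    }

    std::size_t count() const
    {
        std::lock_guard<std::mutex> lock(mutex_);
        return graphs_.size();
    }

private:
    mutable std::mutex mutex_;
    std::vector<Graph> graphs_;
};
```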

```cpp
QString FilterHelper::getFilter(const GraphInfo &graph, bool hardware)
{
    //drawbox=x=10:y=10:w=100:h=100:c=#ffffff@1:t=2
    QString filter;

    //Observed behavior: with hardware decoding, graphic filters make the picture colors wrong, so skip them
    if (hardware) {
        return filter;
    }

    //Only rectangles are implemented for now
    QRect rect = graph.rect;
    if (rect.isEmpty()) {
        return filter;
    }

    //The reserved name "crop" is used for digital zoom
    if (graph.name == "crop") {
        filter = QString("crop=%1:%2:%3:%4").arg(rect.width()).arg(rect.height()).arg(rect.x()).arg(rect.y());
        return filter;
    }

    QStringList list;
    list << QString("x=%1").arg(rect.x());
    list << QString("y=%1").arg(rect.y());
    list << QString("w=%1").arg(rect.width());
    list << QString("h=%1").arg(rect.height());

    QColor color = graph.borderColor;
    list << QString("c=%1@%2").arg(color.name()).arg(color.alphaF());

    //A transparent background draws only the border; otherwise the box is filled (drawbox fills with the color given in c)
    if (graph.bgColor == Qt::transparent) {
        list << QString("t=%1").arg(graph.borderWidth);
    } else {
        list << QString("t=%1").arg("fill");
    }

    filter = QString("drawbox=%1").arg(list.join(":"));
    return filter;
}
```
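The `crop` branch above formats the rectangle as `crop=width:height:x:y`, which is the positional form of FFmpeg's crop filter. A selection dragged past the edge of the picture would describe an area outside the frame, so one option is to clamp it first. The sketch below shows that step with the standard library only. `Rect` and `cropFilter` are hypothetical names, and whether the author's code clamps elsewhere is not shown in the source.

```cpp
#include <algorithm>
#include <string>

// Hypothetical plain type standing in for QRect.
struct Rect { int x; int y; int w; int h; };

// Clamp a selection to the frame and format it as an FFmpeg crop filter.
// Returns an empty string when no part of the selection lies inside the frame.
std::string cropFilter(Rect sel, int videoW, int videoH)
{
    const int left = std::max(sel.x, 0);
    const int top = std::max(sel.y, 0);
    const int right = std::min(sel.x + sel.w, videoW);
    const int bottom = std::min(sel.y + sel.h, videoH);
    if (right <= left || bottom <= top) {
        return std::string();
    }

    return "crop=" + std::to_string(right - left) + ":" + std::to_string(bottom - top)
           + ":" + std::to_string(left) + ":" + std::to_string(top);
}
```

A 400x300 selection at (1800, 1000) on a 1920x1080 frame is trimmed to `crop=120:80:1800:1000`.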

```cpp
QString FilterHelper::getFilters(const QStringList &listFilter)
{
    //Extract the image and the position from each image filter in turn
    int count = listFilter.count();
    QStringList listImage, listPosition, listTemp;
    for (int i = 0; i < count; ++i) {
        QString filter = listFilter.at(i);
        if (filter.startsWith("movie=")) {
            QStringList list = filter.split(";");
            QString movie = list.first();
            QString overlay = list.last();
            movie.replace("[wm]", "");
            overlay.replace("[wm]", "");
            overlay.replace("[in]", "");
            overlay.replace("[out]", "");
            listImage << movie;
            listPosition << overlay;
        } else {
            listTemp << filter;
        }
    }

    //The image filter string is rebuilt below
    QString filterImage, filterAll;
    QString filterOther = listTemp.join(",");

    //With image watermarks present the filter string has to be rearranged
    //1 image: movie=./osd.png[wm0];[in][wm0]overlay=0:0[out]
    //2 images: movie=./osd.png[wm0];movie=./osd.png[wm1];[in][wm0]overlay=0:0[a0];[a0][wm1]overlay=0:0[out]
    //3 images: movie=./osd.png[wm0];movie=./osd.png[wm1];movie=./osd.png[wm2];[in][wm0]overlay=0:0[a0];[a0][wm1]overlay=0:0[a1];[a1][wm2]overlay=0:0[out]
    count = listImage.count();
    if (count > 0) {
        //Add the watermark label plus the input and output labels of each stage
        for (int i = 0; i < count; ++i) {
            QString flag = QString("[wm%1]").arg(i);
            listImage[i] = listImage.at(i) + flag;
            listPosition[i] = flag + listPosition.at(i);
            listPosition[i] = (i == 0 ? "[in]" : QString("[a%1]").arg(i - 1)) + listPosition.at(i);
            listPosition[i] = listPosition.at(i) + (i == (count - 1) ? "[out]" : QString("[a%1]").arg(i));
        }

        QStringList filters;
        for (int i = 0; i < count; ++i) {
            filters << listImage.at(i);
        }
        for (int i = 0; i < count; ++i) {
            filters << listPosition.at(i);
        }

        //Final string for the set of image filters
        filterImage = filters.join(";");

        //If other filters exist they go first
        if (listTemp.count() > 0) {
            filterImage.replace("[in]", "[other]");
            filterAll = "[in]" + filterOther + "[other];" + filterImage;
        } else {
            filterAll = filterImage;
        }
    } else {
        filterAll = filterOther;
    }

    return filterAll;
}
```
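The labeling scheme in the function above is easier to follow in isolation: every image gets a `[wmN]` label, and every overlay stage reads the previous stage's output (`[in]` for the first) and writes `[aN]` (`[out]` for the last). Below is a standard-library sketch of just that chaining. `Watermark` and `overlayChain` are hypothetical names, and the merging with non-image filters is left out.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical pair of strings, e.g. {"movie=./osd.png", "overlay=0:0"}.
struct Watermark { std::string movie; std::string overlay; };

// Build "movie...[wm0];...;[in][wm0]overlay...[a0];[a0][wm1]overlay...[out]".
std::string overlayChain(const std::vector<Watermark> &marks)
{
    std::string sources;
    std::string chain;
    const std::size_t count = marks.size();
    for (std::size_t i = 0; i < count; ++i) {
        const std::string wm = "[wm" + std::to_string(i) + "]";
        // Each stage reads the previous stage's output; the first one reads [in].
        const std::string in = (i == 0) ? std::string("[in]")
                                        : "[a" + std::to_string(i - 1) + "]";
        // Each stage writes an intermediate label; the last one writes [out].
        const std::string out = (i + 1 == count) ? std::string("[out]")
                                                 : "[a" + std::to_string(i) + "]";
        sources += marks[i].movie + wm + ";";
        chain += in + wm + marks[i].overlay + out;
        if (i + 1 != count) {
            chain += ";";
        }
    }
    return sources + chain;
}
```

With two watermarks the result has the same shape as the two-image case in the comments of the function above, with `[a0]` as the intermediate label.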

```cpp
QStringList FilterHelper::getFilters(const QList<OsdInfo> &listOsd, const QList<GraphInfo> &listGraph, bool noimage, bool hardware)
{
    //Collection of filter strings
    QStringList listFilter;

    //Add the OSD labels
    foreach (OsdInfo osd, listOsd) {
        QString filter = FilterHelper::getFilter(osd, noimage);
        if (!filter.isEmpty()) {
            listFilter << filter;
        }
    }

    //Add the graphics
    foreach (GraphInfo graph, listGraph) {
        QString filter = FilterHelper::getFilter(graph, hardware);
        if (!filter.isEmpty()) {
            listFilter << filter;
        }
    }

    //Add the other filters
    QString filter = FilterHelper::getFilter();
    if (!filter.isEmpty()) {
        listFilter << filter;
    }

    return listFilter;
}
```

Source: https://blog.csdn.net/feiyangqingyun/article/details/135041561
