[Earth Engine] Fine-Scale Relative Wealth (Poverty) Mapping of a City by Combining Sentinel-1/2

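1 Introduction and Summary

I have recently been working on some research that involves machine-learning mapping with Sentinel-1/2.

I wanted to summarise the data and methods involved, so I spent half an hour writing some code.

Then I spent another half hour writing this post as a record.

The main reference data for this post, the Relative Wealth Index (RWI), comes from this GEE community site. If you are short of data, you can look for it there and simply call the asset when you need it. This is an interactive map preview of the dataset.

Everything was written on the GEE platform. This particular piece of work has little to do with my actual research topic; both the idea and the code were improvised.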

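2 Approach

The idea is to use some Sentinel-1/2 bands as independent variables, sampled at 2400 m (because the RWI data has a resolution of 2400 m), take the RWI as the dependent variable, and finally map relative poverty at the 10 m scale with random forest regression.

I have no real need for this and wanted it done quickly, so I kept things simple.

For the same reason, I do not compute any spectral indices; the independent variables are just VV, VH, and some optical bands.

The dependent variable is the RWI from the original data, used as is.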
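The gap between the 2400 m sampling scale and the 10 m mapping scale can be made concrete with a little arithmetic. The sketch below only uses the two resolutions stated above; the variable names are illustrative and not part of the original script.

```javascript
// Scale arithmetic for the 2400 m -> 10 m setup described above.
const rwiCellSizeM = 2400;  // RWI grid spacing, as stated in the post
const mapPixelSizeM = 10;   // target mapping resolution, as stated in the post

// Number of 10 m pixels along one side of an RWI cell, and in the whole cell.
const pixelsPerSide = rwiCellSizeM / mapPixelSizeM;
const pixelsPerCell = pixelsPerSide * pixelsPerSide;

console.log(pixelsPerSide);  // 240
console.log(pixelsPerCell);  // 57600
```

Each training sample therefore summarises tens of thousands of 10 m pixels, so the 10 m map is best read as a downscaling that assumes the band-to-RWI relationship learned at 2400 m still holds at 10 m.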

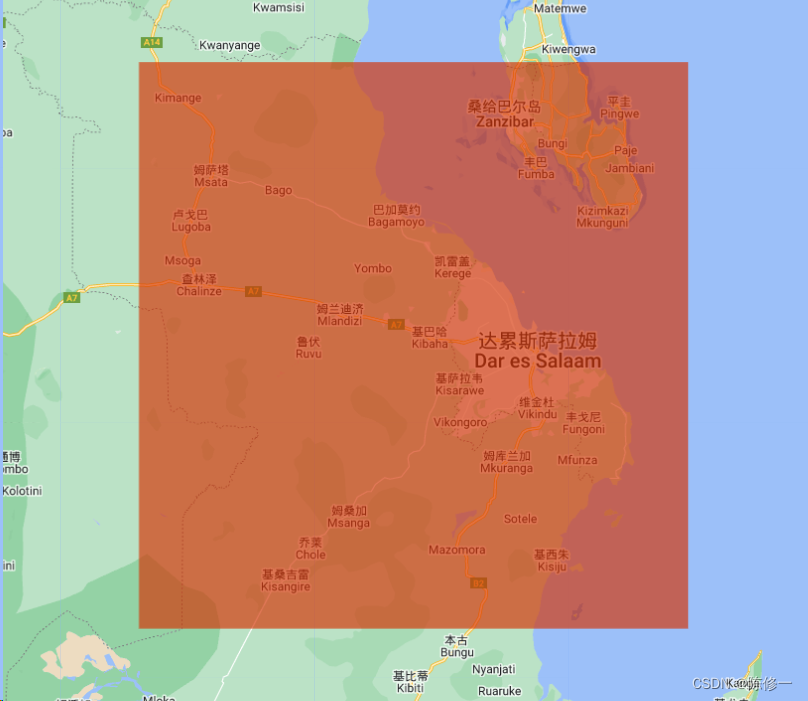

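The study area is Dar es Salaam, the former capital of Tanzania, and its surroundings. There is no special reason for this choice; it was simply the first place I saw when I opened the interactive map (the name sounds familiar, and I vaguely remember that quite a few African colleagues at my institute came from there).

In short, the goal is a high-resolution (10 m) relative poverty map based on Sentinel-1/2.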

3 Preview of Results

As usual, the results come before the code.

My region of interest (roi):

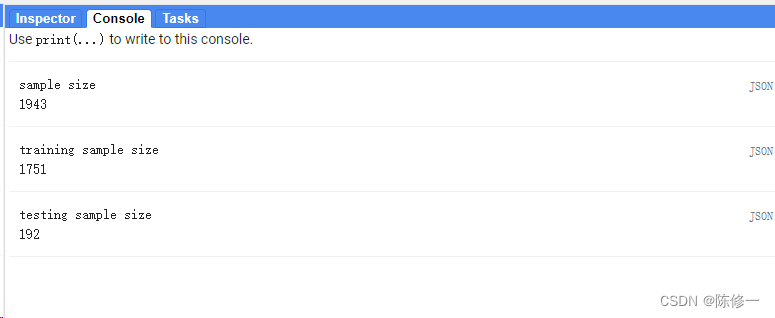

Console:

The console output shows 1943 sample points in the region; I use 90% of them (1751) for training and 10% (192) for validation.

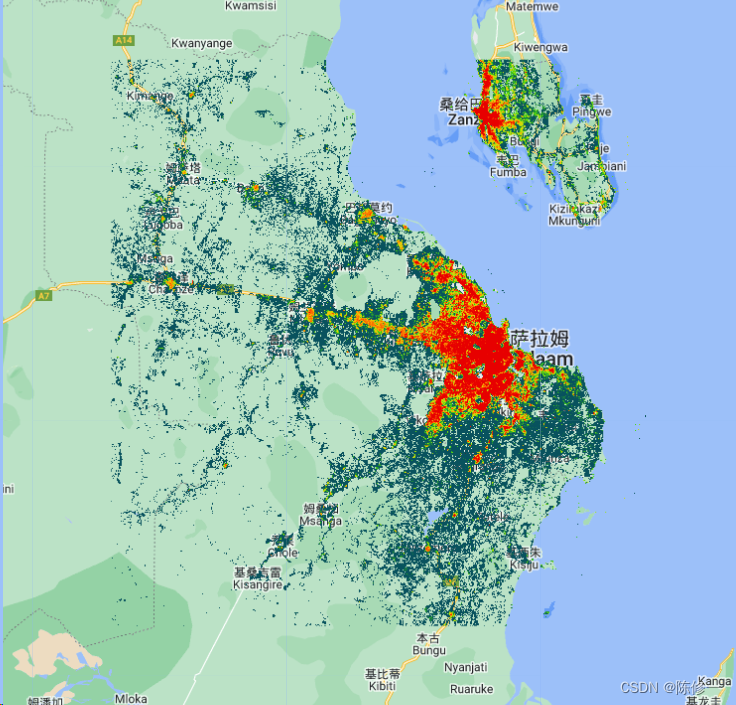

Mapping result:

Red indicates high RWI (not poor) and blue indicates low RWI (poor).

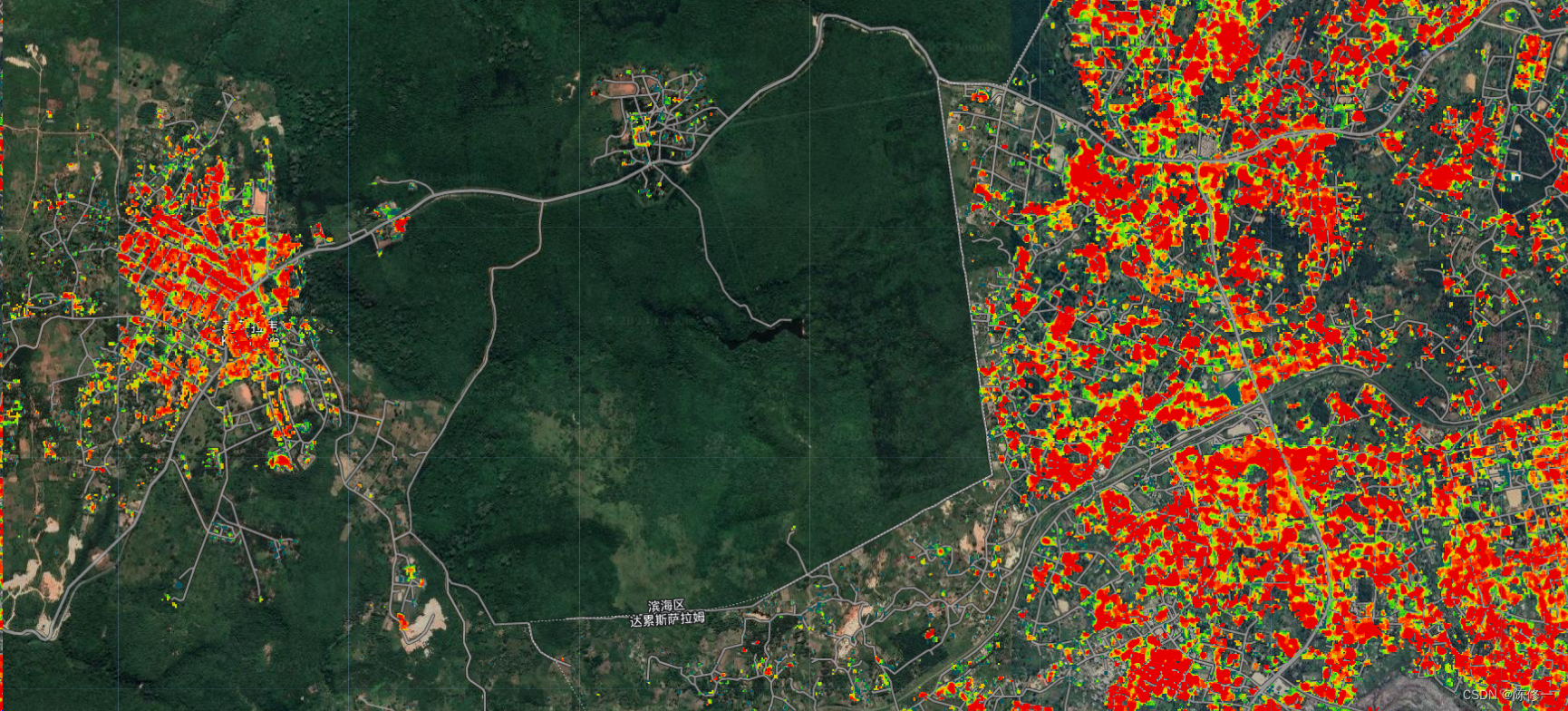

Detail of the mapping result:

It looks fragmented because I masked it with the World Settlement Footprint, which is a routine step; see the code for details.

4 Code Walkthrough

First I load the data I need. The RWI data is filtered by my ROI, otherwise there are too many points. The code is as follows:

// Importing Built-up map

var wsf2019 = ee.ImageCollection('projects/sat-io/open-datasets/WSF/WSF_2019');

var wsf_01 = wsf2019.mosaic().eq(255);

var buildingup = wsf_01;

// Importing RWI

var rwi = ee.FeatureCollection("projects/sat-io/open-datasets/facebook/relative_wealth_index")

.filterBounds(roi.geometry());

print("sample size", rwi.size())

Since Sentinel-1 and Sentinel-2 are both needed, I load them as well, together with some preprocessing. The code is as follows:

// Importing S1 SAR images.

var sentinel1 = ee.ImageCollection('COPERNICUS/S1_GRD')

.filterDate("2019-01-01", "2024-01-01")

.filterBounds(roi.geometry());

// print(sentinel1)

// Filter the S1 collection by metadata properties.

var vvVhIw = sentinel1

// Filter to get images with VV and VH dual polarization.

.filter(ee.Filter.listContains('transmitterReceiverPolarisation', 'VV'))

.filter(ee.Filter.listContains('transmitterReceiverPolarisation', 'VH'))

// Filter to get images collected in interferometric wide swath mode.

.filter(ee.Filter.eq('instrumentMode', 'IW'));

// Separate ascending and descending orbit images into distinct collections.

var vvVhIwAsc = vvVhIw.filter(

ee.Filter.eq('orbitProperties_pass', 'ASCENDING'));

var vvVhIwDesc = vvVhIw.filter(

ee.Filter.eq('orbitProperties_pass', 'DESCENDING'));

// Calculate temporal means.

// var vhIwAscMean = vvVhIwAsc.select('VH').mean();

// var vhIwDescMean = vvVhIwDesc.select('VH').mean();

// var vv = vvVhIwAsc.merge(vvVhIwDesc).select('VV').mean();

// var vh = vvVhIwAsc.merge(vvVhIwDesc).select('VH').mean();

// Importing S2 images.

// Cloud mask function.

function maskS2clouds(image) {

var qa = image.select('QA60');

// Bits 10 and 11 are clouds and cirrus, respectively.

var cloudBitMask = 1 << 10;

var cirrusBitMask = 1 << 11;

// Both flags should be set to zero, indicating clear conditions.

var mask = qa.bitwiseAnd(cloudBitMask).eq(0)

.and(qa.bitwiseAnd(cirrusBitMask).eq(0));

return image.updateMask(mask).divide(10000);

}

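var sentinel2 = ee.ImageCollection("COPERNICUS/S2_SR_HARMONIZED") // harmonized collection keeps reflectance scaling consistent across the 2022 processing-baseline change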

.filterDate("2019-01-01", "2024-01-01")

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filterBounds(roi.geometry())

.map(maskS2clouds)

.mean();
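The bit test inside `maskS2clouds` can be tried outside Earth Engine. The following is a plain-JavaScript analogue rather than Earth Engine code: `isClear` is a hypothetical helper that applies the same two bit masks to a single QA60 integer instead of an image band.

```javascript
// Plain-JavaScript analogue of the QA60 test in maskS2clouds (no Earth Engine needed).
const CLOUD_BIT_MASK = 1 << 10;   // bit 10: clouds
const CIRRUS_BIT_MASK = 1 << 11;  // bit 11: cirrus

// Returns true when neither flag is set, i.e. the pixel would be kept.
function isClear(qa60) {
  return (qa60 & CLOUD_BIT_MASK) === 0 && (qa60 & CIRRUS_BIT_MASK) === 0;
}

console.log(isClear(0));     // true  (no flags set)
console.log(isClear(1024));  // false (bit 10 set)
console.log(isClear(2048));  // false (bit 11 set)
console.log(isClear(3072));  // false (both bits set)
```

In the Earth Engine version, `bitwiseAnd(...).eq(0)` performs the same check per pixel and the resulting mask is passed to `updateMask`.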

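Next, I pick out the bands I need and combine them into a single image. The code is as follows:

// Predictor bands (no spectral indices are computed).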

var vv = vvVhIwAsc.merge(vvVhIwDesc).select('VV').mean();

var vh = vvVhIwAsc.merge(vvVhIwDesc).select('VH').mean();

var blue = sentinel2.select('B2');

var green = sentinel2.select('B3');

var red = sentinel2.select('B4');

var red_edge1 = sentinel2.select('B5');

var red_edge2 = sentinel2.select('B6');

var red_edge3 = sentinel2.select('B7');

var nir = sentinel2.select('B8');

var red_edge4 = sentinel2.select('B8A');

var SWIR1 = sentinel2.select('B11');

var SWIR2 = sentinel2.select('B12');

var image = ee.Image()

.addBands(vv)

.addBands(vh)

.addBands(blue)

.addBands(green)

.addBands(red)

.addBands(red_edge1)

.addBands(red_edge2)

.addBands(red_edge3)

.addBands(nir)

.addBands(red_edge4)

.addBands(SWIR1)

.addBands(SWIR2)

;

var band_name = ee.List(['VV', 'VH', 'B2', 'B3', 'B4', 'B5', 'B6', 'B7', 'B8', 'B8A', 'B11', 'B12'])

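Then I use the RWI points to sample the selected bands (the independent variables). Since the RWI has a resolution of 2400 m, I sample at 2400 m. After sampling, the sample sizes are printed to the console. The code is as follows: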

// Sampling.

var samples = rwi.randomColumn('random'); // set a random field

var training_samples = samples.filter(ee.Filter.lt('random', 0.9)); // 90% training data

var training_samples = image.reduceRegions({collection: training_samples, reducer: ee.Reducer.mean(), scale: 2400}); // sampling

var testing_samples = samples.filter(ee.Filter.gte('random', 0.9)); // 10% testing data

var testing_samples = image.reduceRegions({collection: testing_samples, reducer: ee.Reducer.mean(), scale: 2400}); // sampling

print("training sample size", training_samples.size())

print("testing sample size", testing_samples.size())
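The split logic above (tag every sample with a random number in [0, 1), then threshold at 0.9) can be illustrated in plain JavaScript. This is a sketch, not Earth Engine code: `mulberry32` is a small seeded generator chosen here only for reproducibility, and `splitSamples` and `fakeSamples` are hypothetical names. Because the threshold is applied to random numbers, the split is only approximately 90/10, which matches the 1751/192 counts reported earlier.

```javascript
// Small seeded pseudo-random generator (mulberry32); an assumption made for
// reproducibility, not what Earth Engine uses internally.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6D2B79F5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Tag each sample with a random value, then threshold it into train/test.
function splitSamples(samples, trainFraction, seed) {
  const rand = mulberry32(seed);
  const train = [];
  const test = [];
  for (const s of samples) {
    const tagged = { ...s, random: rand() };
    (tagged.random < trainFraction ? train : test).push(tagged);
  }
  return { train, test };
}

// 1943 placeholder samples, matching the sample count printed in the console.
const fakeSamples = Array.from({ length: 1943 }, (_, i) => ({ id: i }));
const { train, test } = splitSamples(fakeSamples, 0.9, 42);
console.log(train.length + test.length);  // 1943
```

In Earth Engine, `randomColumn` also accepts a seed argument, so the split there is reproducible between runs as long as the seed and the input collection stay the same.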

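Next comes the actual model training and mapping. A regression model is trained on the training set sampled above, applied to produce the map, and the result is visualised. The code is as follows: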

// Regressing.

var regressor = ee.Classifier

.smileRandomForest(20) // number of estimators/trees

.setOutputMode('REGRESSION') // regression algorithm

.train(training_samples, // training samples

"rwi", // regressing target

band_name); // regressor features

var rwi_map = image.unmask(0).clip(roi).classify(regressor).rename('rwi'); // regress

var rwi_map = rwi_map.updateMask(buildingup).clip(roi); // mask

// Visualization.

var palettes = ["#08525e", "#40d60e", "#b9e40e", "#f9c404", "#f94600", "#e70000"]

Map.addLayer(rwi_map,

{min: -0.2, max: 1, palette: palettes},

'RWI');

Validation is not written yet; I will add it another day.
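Until that validation is written, here is a minimal sketch of the two metrics most often reported for a regression like this one, RMSE and R². It is plain JavaScript and not the author's method: `regressionMetrics` is a hypothetical helper that takes observed and predicted RWI values as ordinary arrays. One possible way to obtain such values in GEE would be to run `testing_samples.classify(regressor)` and read back the `rwi` column together with the prediction column, but that step is an assumption and is not shown here.

```javascript
// Computes RMSE and R^2 from paired observed/predicted values.
function regressionMetrics(observed, predicted) {
  if (observed.length !== predicted.length || observed.length === 0) {
    throw new Error('observed and predicted must be non-empty and equal in length');
  }
  const n = observed.length;
  const meanObs = observed.reduce((sum, v) => sum + v, 0) / n;
  let ssRes = 0;  // residual sum of squares
  let ssTot = 0;  // total sum of squares around the observed mean
  for (let i = 0; i < n; i++) {
    ssRes += (observed[i] - predicted[i]) ** 2;
    ssTot += (observed[i] - meanObs) ** 2;
  }
  // Note: r2 is not finite when all observed values are identical (ssTot = 0).
  return { rmse: Math.sqrt(ssRes / n), r2: 1 - ssRes / ssTot };
}

// Toy values for illustration only; these are not results from the RWI model.
const metrics = regressionMetrics([0, 1, 2, 3], [0, 1, 2, 5]);
console.log(metrics.rmse);  // 1
console.log(metrics.r2);    // approximately 0.2
```

With only 192 held-out points, these numbers would be a rough check rather than a rigorous accuracy assessment.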

5 Complete Code

My code link is here and can be used directly.

The complete code is as follows (the same script as in the link):

// Importing Built-up map

var wsf2019 = ee.ImageCollection('projects/sat-io/open-datasets/WSF/WSF_2019');

var wsf_01 = wsf2019.mosaic().eq(255);

var buildingup = wsf_01;

// Importing RWI

var rwi = ee.FeatureCollection("projects/sat-io/open-datasets/facebook/relative_wealth_index")

.filterBounds(roi.geometry());

print("sample size", rwi.size())

// Importing S1 SAR images.

var sentinel1 = ee.ImageCollection('COPERNICUS/S1_GRD')

.filterDate("2019-01-01", "2024-01-01")

.filterBounds(roi.geometry());

// print(sentinel1)

// Filter the S1 collection by metadata properties.

var vvVhIw = sentinel1

// Filter to get images with VV and VH dual polarization.

.filter(ee.Filter.listContains('transmitterReceiverPolarisation', 'VV'))

.filter(ee.Filter.listContains('transmitterReceiverPolarisation', 'VH'))

// Filter to get images collected in interferometric wide swath mode.

.filter(ee.Filter.eq('instrumentMode', 'IW'));

// Separate ascending and descending orbit images into distinct collections.

var vvVhIwAsc = vvVhIw.filter(

ee.Filter.eq('orbitProperties_pass', 'ASCENDING'));

var vvVhIwDesc = vvVhIw.filter(

ee.Filter.eq('orbitProperties_pass', 'DESCENDING'));

// Calculate temporal means.

// var vhIwAscMean = vvVhIwAsc.select('VH').mean();

// var vhIwDescMean = vvVhIwDesc.select('VH').mean();

// var vv = vvVhIwAsc.merge(vvVhIwDesc).select('VV').mean();

// var vh = vvVhIwAsc.merge(vvVhIwDesc).select('VH').mean();

// Importing S2 images.

// Cloud mask function.

function maskS2clouds(image) {

var qa = image.select('QA60');

// Bits 10 and 11 are clouds and cirrus, respectively.

var cloudBitMask = 1 << 10;

var cirrusBitMask = 1 << 11;

// Both flags should be set to zero, indicating clear conditions.

var mask = qa.bitwiseAnd(cloudBitMask).eq(0)

.and(qa.bitwiseAnd(cirrusBitMask).eq(0));

return image.updateMask(mask).divide(10000);

}

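var sentinel2 = ee.ImageCollection("COPERNICUS/S2_SR_HARMONIZED") // harmonized collection keeps reflectance scaling consistent across the 2022 processing-baseline change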

.filterDate("2019-01-01", "2024-01-01")

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filterBounds(roi.geometry())

.map(maskS2clouds)

.mean();

// Predictor bands (no spectral indices are computed).

var vv = vvVhIwAsc.merge(vvVhIwDesc).select('VV').mean();

var vh = vvVhIwAsc.merge(vvVhIwDesc).select('VH').mean();

var blue = sentinel2.select('B2');

var green = sentinel2.select('B3');

var red = sentinel2.select('B4');

var red_edge1 = sentinel2.select('B5');

var red_edge2 = sentinel2.select('B6');

var red_edge3 = sentinel2.select('B7');

var nir = sentinel2.select('B8');

var red_edge4 = sentinel2.select('B8A');

var SWIR1 = sentinel2.select('B11');

var SWIR2 = sentinel2.select('B12');

var image = ee.Image()

.addBands(vv)

.addBands(vh)

.addBands(blue)

.addBands(green)

.addBands(red)

.addBands(red_edge1)

.addBands(red_edge2)

.addBands(red_edge3)

.addBands(nir)

.addBands(red_edge4)

.addBands(SWIR1)

.addBands(SWIR2)

;

var band_name = ee.List(['VV', 'VH', 'B2', 'B3', 'B4', 'B5', 'B6', 'B7', 'B8', 'B8A', 'B11', 'B12'])

// Sampling.

var samples = rwi.randomColumn('random'); // set a random field

var training_samples = samples.filter(ee.Filter.lt('random', 0.9)); // 90% training data

var training_samples = image.reduceRegions({collection: training_samples, reducer: ee.Reducer.mean(), scale: 2400}); // sampling

var testing_samples = samples.filter(ee.Filter.gte('random', 0.9)); // 10% testing data

var testing_samples = image.reduceRegions({collection: testing_samples, reducer: ee.Reducer.mean(), scale: 2400}); // sampling

print("training sample size", training_samples.size())

print("testing sample size", testing_samples.size())

// Regressing.

var regressor = ee.Classifier

.smileRandomForest(20) // number of estimators/trees

.setOutputMode('REGRESSION') // regression algorithm

.train(training_samples, // training samples

"rwi", // regressing target

band_name); // regressor features

var rwi_map = image.unmask(0).clip(roi).classify(regressor).rename('rwi'); // regress

var rwi_map = rwi_map.updateMask(buildingup).clip(roi); // mask

// Visualization.

var palettes = ["#08525e", "#40d60e", "#b9e40e", "#f9c404", "#f94600", "#e70000"]

Map.addLayer(rwi_map,

{min: -0.2, max: 1, palette: palettes},

'RWI');

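6 Afterword

Some parts of this may not be very professional or rigorous. I would welcome corrections from colleagues, and if you have interesting ideas I am happy to discuss them. You can message me on CSDN or email me (chinshuuichi@qq.com), though email is preferred: CSDN messaging works poorly and I check CSDN only occasionally.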

