EVA-CLIP

Published: January 8, 2024

Abstract & Introduction

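- EVA-CLIP: a series of models that significantly improve the efficiency and effectiveness of CLIP training.

- Using the latest techniques in representation learning, optimization, and augmentation, EVA-CLIP outperforms previous CLIP models with the same number of parameters while consuming fewer training resources.

- Pre-trained EVA weights are used to initialize CLIP training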

- the LAMB optimizer

- randomly dropping input tokens

- speedup trick: flash attention
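Of the ingredients listed above, LAMB is probably the least familiar. Its core idea is to take an Adam-style element-wise update and rescale it per layer by a trust ratio, the norm of the weights divided by the norm of the update. The following is a minimal NumPy sketch of a single LAMB step for one layer. The function name `lamb_step` and all hyperparameter defaults are illustrative assumptions, not the settings used for EVA-CLIP.

```python
import numpy as np

def lamb_step(w, g, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
              eps=1e-6, weight_decay=0.01):
    """One LAMB update for a single layer (hypothetical helper).

    w: weights, g: gradient, m/v: running moments, t: step count (>= 1).
    Hyperparameter defaults are illustrative assumptions.
    """
    # Adam-style first and second moment estimates with bias correction.
    m = beta1 * m + (1 - beta1) * g
    v = beta2 * v + (1 - beta2) * g * g
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)

    # Adaptive element-wise update plus decoupled weight decay.
    update = m_hat / (np.sqrt(v_hat) + eps) + weight_decay * w

    # Layer-wise trust ratio: the step size tracks the weight norm.
    w_norm = np.linalg.norm(w)
    u_norm = np.linalg.norm(update)
    trust = w_norm / u_norm if w_norm > 0 and u_norm > 0 else 1.0

    w = w - lr * trust * update
    return w, m, v
```

With the trust ratio in place, the norm of each layer's step equals `lr` times the norm of that layer's weights, which is what keeps very large batches stable in this scheme.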

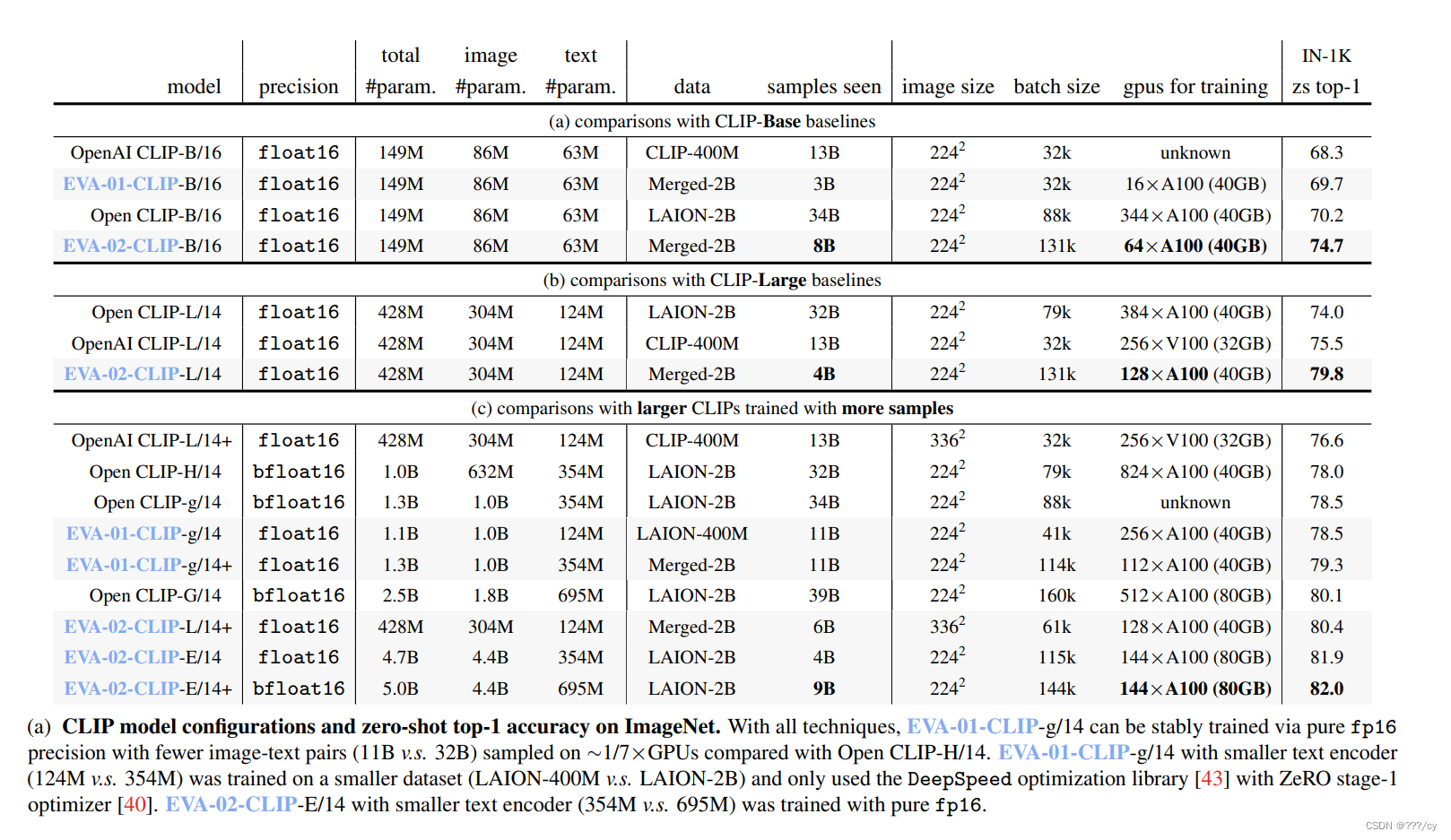

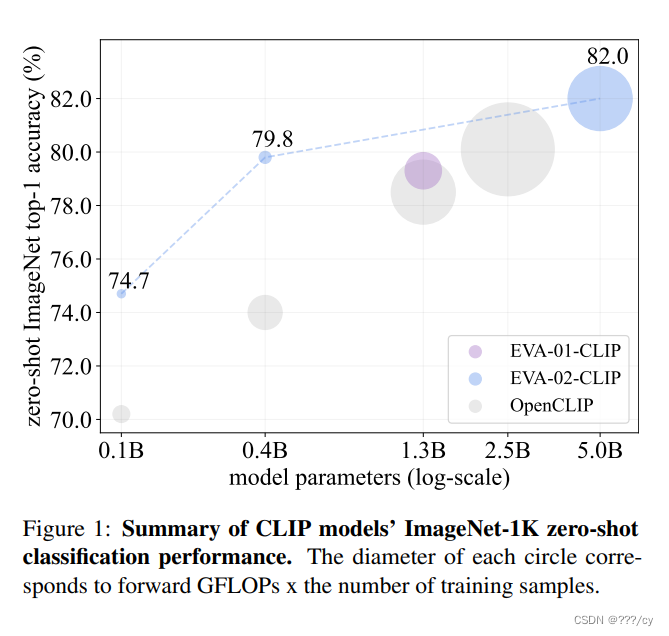

- Results on ImageNet-1K val:

- 82% zero-shot accuracy = EVA-02-CLIP-E/14 (5.0B parameters) + 9 billion samples

- 80.4% zero-shot accuracy = EVA-02-CLIP-L/14 (430 million parameters) + 6 billion samples
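For readers unfamiliar with the metric, zero-shot accuracy for a CLIP-style model is typically computed by embedding one text prompt per class, embedding each image, and predicting the class whose text embedding has the highest cosine similarity. The sketch below assumes the embeddings have already been produced by some encoder; `zero_shot_top1` is a hypothetical helper, not part of any EVA-CLIP codebase.

```python
import numpy as np

def zero_shot_top1(image_emb, text_emb, labels):
    """Top-1 zero-shot accuracy from precomputed embeddings (hypothetical helper).

    image_emb: (n_images, dim), text_emb: (n_classes, dim), labels: (n_images,)
    """
    # L2-normalize so the dot product equals cosine similarity.
    img = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    txt = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)

    # Similarity of every image to every class prompt.
    logits = img @ txt.T

    # Predict the most similar class and compare with the ground truth.
    preds = logits.argmax(axis=1)
    return float((preds == labels).mean())
```

No image from the target classes needs to be seen with its label during training, which is why the result is called zero-shot.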

Approach

- Better Initialization: pre-trained EVA weights are used to initialize the image encoder of EVA-CLIP

- Optimizer: LAMB, an optimizer designed for large-batch training. Its adaptive element-wise updating and layer-wise learning rates enhance training efficiency and accelerate convergence

- FLIP: we randomly mask 50% of the image tokens during training, reducing the time complexity by half.
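The FLIP-style masking in the last bullet can be sketched as follows: for each image, keep a random half of the patch tokens and pass only those to the encoder. This is a minimal NumPy illustration under stated assumptions. The function name `random_drop_tokens`, the `(batch, num_tokens, dim)` layout, and the 196-token example are assumptions made for illustration; only the 50% ratio comes from the text.

```python
import numpy as np

def random_drop_tokens(tokens, keep_ratio=0.5, rng=None):
    """Keep a random subset of patch tokens per image (hypothetical helper).

    tokens: array of shape (batch, num_tokens, dim).
    Returns the kept tokens and their original indices.
    """
    rng = np.random.default_rng() if rng is None else rng
    batch, num_tokens, _ = tokens.shape
    num_keep = max(1, int(num_tokens * keep_ratio))

    # Sorting random noise gives an independent random permutation per image;
    # the first num_keep positions of each permutation are the kept tokens.
    noise = rng.random((batch, num_tokens))
    keep_idx = np.argsort(noise, axis=1)[:, :num_keep]
    keep_idx = np.sort(keep_idx, axis=1)  # preserve original token order

    # Gather the kept tokens along the token axis.
    kept = np.take_along_axis(tokens, keep_idx[:, :, None], axis=1)
    return kept, keep_idx

# Usage: 2 images, 196 patch tokens each (assumed), 8-dim features.
tokens = np.arange(2 * 196 * 8, dtype=float).reshape(2, 196, 8)
kept, keep_idx = random_drop_tokens(tokens, keep_ratio=0.5,
                                    rng=np.random.default_rng(0))
print(kept.shape)  # (2, 98, 8)
```

The image encoder then processes half as many tokens per image, which is where the training-time saving comes from.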

Experiments

Settings

- Datasets: 2B samples in total = 1.6 billion (LAION-2B) + 0.4 billion (COYO-700M)

- The experimental results are clear at a glance (see the paper linked below).

https://arxiv.org/pdf/2303.15389.pdf

Source: https://blog.csdn.net/qq_45842681/article/details/135451962
