Python处理音频

发布时间:2024年01月04日

从video中抽取audio

from moviepy.editor import VideoFileClip

from pydub import AudioSegment

video_path = '/opt/audio/audios/video1.mp4'

audio_path = '/opt/audio/audios/video1.wav' # 提取的音频保存路径

# 加载视频文件

video = VideoFileClip(video_path)

# 提取音频

audio = video.audio

# 保存音频文件

audio.write_audiofile(audio_path)

# 读取音频文件

sound = AudioSegment.from_wav(audio_path)

# 将音频转换为单声道

sound = sound.set_channels(1)

sound = sound.set_frame_rate(16000)

# 保存音频文件(单声道)

sound.export(audio_path, format="wav")截取video

from pydub import AudioSegment

# 加载音频文件

audio = AudioSegment.from_file(

"xxx/1.wav")

# 定义起始和结束时间(单位为毫秒)

start_time = 3000

end_time = 28550

# 截取音频

extracted = audio[start_time:end_time]

# 导出截取的音频

extracted.export(

"xxx/3.wav", format="wav")拼接音频

import os

from pydub import AudioSegment

base_path = f'{os.getcwd()}/audios/reduce_noise/video2/'

# 要拼接的音频的路径list

short_audio_files = []

for i in range(100, 105):

path = base_path + str(i) + ".wav"

wav = AudioSegment.from_file(path)

duration_seconds = wav.duration_seconds

short_audio_files.append(path)

# 声明一个空白音频

merged_audio = AudioSegment.empty()

# 遍历每个短音频文件并添加到合并后的音频中

for audio_file in short_audio_files:

# 从文件加载短音频

short_audio = AudioSegment.from_file(audio_file)

# 将短音频追加到合并后的音频

merged_audio = merged_audio.append(short_audio, crossfade=0)

# 保存合并后的音频为一个长音频文件

merged_audio.export(f"{base_path}merged_audio.wav", format="wav")说话人分离:

主要使用whisper-diarization:GitHub - MahmoudAshraf97/whisper-diarization: Automatic Speech Recognition with Speaker Diarization based on OpenAI Whisper

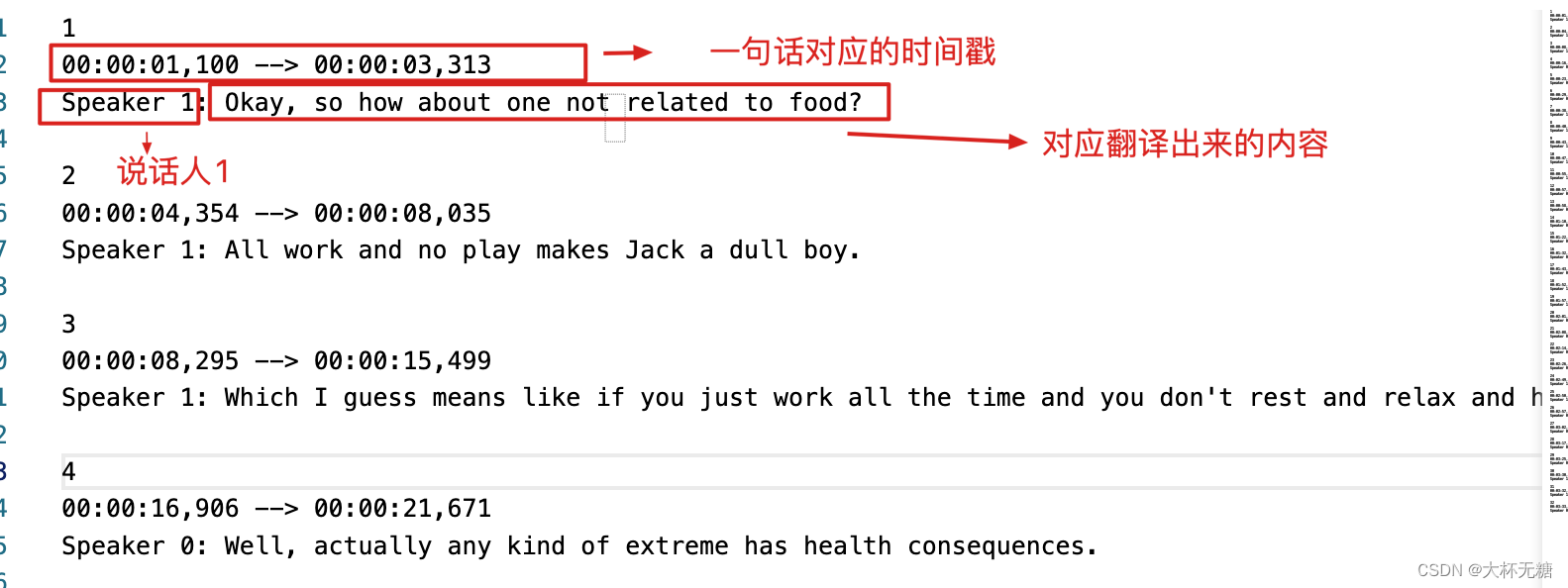

使用场景:一整个wav ,分理出不同人对应的wav,具体效果如下:

文章来源:https://blog.csdn.net/sunriseYJP/article/details/135388064

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

最新文章

- Python教程

- 深入理解 MySQL 中的 HAVING 关键字和聚合函数

- Qt之QChar编码(1)

- MyBatis入门基础篇

- 用Python脚本实现FFmpeg批量转换

- 使用GitZip下载GitHub指定文件

- SCA|可作为有效改进策略的算法——正余弦优化算法(Matlab/Python)

- HAL库配置RS485通信

- IDEA插件中的postman,你试试

- 【教程】Ipa Guard为iOS应用提供免费加密混淆方案

- Linux网络文件共享服务1(基于FTP文件传输协议)

- 苹果Safari怎么清理缓存?很简单,学会这两招够了!

- 【ITK库学习】使用itk库进行图像配准:“Hello World”配准(一)

- SpringBoot Import提示Cannot resolve symbol

- 【PyTorch】记一次卷积神经网络优化过程