Building a Kubernetes Management Platform by Hand (4): Operating Kubernetes

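One advantage of Go is how convenient it makes working with Kubernetes, thanks to the all-purpose clientset. A clientset is essentially a collection of clients, one per API group and version: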

```go
// Excerpt from k8s.io/client-go/kubernetes (clientset.go); the exact fields depend on the client-go version.
type Clientset struct {
	*discovery.DiscoveryClient
	admissionregistrationV1 *admissionregistrationv1.AdmissionregistrationV1Client
	admissionregistrationV1alpha1 *admissionregistrationv1alpha1.AdmissionregistrationV1alpha1Client
	admissionregistrationV1beta1 *admissionregistrationv1beta1.AdmissionregistrationV1beta1Client
	internalV1alpha1 *internalv1alpha1.InternalV1alpha1Client
	appsV1 *appsv1.AppsV1Client
	appsV1beta1 *appsv1beta1.AppsV1beta1Client
	appsV1beta2 *appsv1beta2.AppsV1beta2Client
	authenticationV1 *authenticationv1.AuthenticationV1Client
	authenticationV1alpha1 *authenticationv1alpha1.AuthenticationV1alpha1Client
	authenticationV1beta1 *authenticationv1beta1.AuthenticationV1beta1Client
	authorizationV1 *authorizationv1.AuthorizationV1Client
	authorizationV1beta1 *authorizationv1beta1.AuthorizationV1beta1Client
	autoscalingV1 *autoscalingv1.AutoscalingV1Client
	autoscalingV2 *autoscalingv2.AutoscalingV2Client
	autoscalingV2beta1 *autoscalingv2beta1.AutoscalingV2beta1Client
	autoscalingV2beta2 *autoscalingv2beta2.AutoscalingV2beta2Client
	batchV1 *batchv1.BatchV1Client
	batchV1beta1 *batchv1beta1.BatchV1beta1Client
	certificatesV1 *certificatesv1.CertificatesV1Client
	certificatesV1beta1 *certificatesv1beta1.CertificatesV1beta1Client
	certificatesV1alpha1 *certificatesv1alpha1.CertificatesV1alpha1Client
	coordinationV1beta1 *coordinationv1beta1.CoordinationV1beta1Client
	coordinationV1 *coordinationv1.CoordinationV1Client
	coreV1 *corev1.CoreV1Client
	discoveryV1 *discoveryv1.DiscoveryV1Client
	discoveryV1beta1 *discoveryv1beta1.DiscoveryV1beta1Client
	eventsV1 *eventsv1.EventsV1Client
	eventsV1beta1 *eventsv1beta1.EventsV1beta1Client
	extensionsV1beta1 *extensionsv1beta1.ExtensionsV1beta1Client
	flowcontrolV1alpha1 *flowcontrolv1alpha1.FlowcontrolV1alpha1Client
	flowcontrolV1beta1 *flowcontrolv1beta1.FlowcontrolV1beta1Client
	flowcontrolV1beta2 *flowcontrolv1beta2.FlowcontrolV1beta2Client
	flowcontrolV1beta3 *flowcontrolv1beta3.FlowcontrolV1beta3Client
	networkingV1 *networkingv1.NetworkingV1Client
	networkingV1alpha1 *networkingv1alpha1.NetworkingV1alpha1Client
	networkingV1beta1 *networkingv1beta1.NetworkingV1beta1Client
	nodeV1 *nodev1.NodeV1Client
	nodeV1alpha1 *nodev1alpha1.NodeV1alpha1Client
	nodeV1beta1 *nodev1beta1.NodeV1beta1Client
	policyV1 *policyv1.PolicyV1Client
	policyV1beta1 *policyv1beta1.PolicyV1beta1Client
	rbacV1 *rbacv1.RbacV1Client
	rbacV1beta1 *rbacv1beta1.RbacV1beta1Client
	rbacV1alpha1 *rbacv1alpha1.RbacV1alpha1Client
	resourceV1alpha2 *resourcev1alpha2.ResourceV1alpha2Client
	schedulingV1alpha1 *schedulingv1alpha1.SchedulingV1alpha1Client
	schedulingV1beta1 *schedulingv1beta1.SchedulingV1beta1Client
	schedulingV1 *schedulingv1.SchedulingV1Client
	storageV1beta1 *storagev1beta1.StorageV1beta1Client
	storageV1 *storagev1.StorageV1Client
	storageV1alpha1 *storagev1alpha1.StorageV1alpha1Client
}
```

Commonly used Kubernetes management tools, kubectl included, use the clientset as their client object for talking to Kubernetes, so we also use the clientset to manage all of our clusters.

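client.go:

```go
package client

import (
	"k8s.io/apimachinery/pkg/version"
	"k8s.io/client-go/dynamic"
	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/rest"
	// assumed to be the metrics clientset from k8s.io/metrics
	"k8s.io/metrics/pkg/client/clientset/versioned"
)

// Interface is the client object used to operate one cluster.
// VerberInterface is declared elsewhere in this package (not shown in this article).
type Interface interface {
	Client() (*kubernetes.Clientset, error)
	Config() (*rest.Config, error)
	Ping() error
	Version() (*version.Info, error)
	GetK8sVerberClientSet() (VerberInterface, error)
	MetricClient() (*versioned.Clientset, error)
	DynamicClient() (*dynamic.DynamicClient, error)
}
```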

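The interface above defines the client object used to operate each cluster. Besides wrapping the basic clientset, it also provides MetricClient and DynamicClient, used respectively for monitoring metrics and for custom resources such as CRDs and operators. In addition, to support resources served under multiple API versions, GetK8sVerberClientSet initializes a client according to the API version.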

As mentioned earlier, all atomic operations against Kubernetes are encapsulated in the pkg directory:

```
pkg
├── api
│   ├── types.go
│   └── types_test.go
├── client
│   ├── client.go
│   ├── client_test.go
│   ├── types.go
│   ├── verber.go
│   └── verber_test.go
├── errors
│   ├── errors.go
│   ├── handler.go
│   ├── handler_test.go
│   ├── localizer.go
│   └── localizer_test.go
├── integration
│   ├── api
│   │   └── types.go
│   └── metric
│       └── api
│           └── types.go
├── resource
│   ├── clusterrole
│   │   ├── clusterrole.go
│   │   ├── clusterrole_test.go
│   │   └── common.go
│   ├── clusterrolebinding
│   │   ├── clusterrolebinding.go
│   │   ├── clusterrolebinding_test.go
│   │   └── common.go
│   ├── common
│   │   ├── condition.go
│   │   ├── deploy.go
│   │   ├── endpoint.go
│   │   ├── endpoint_test.go
│   │   ├── event.go
│   │   ├── namespace.go
│   │   ├── namespace_test.go
│   │   ├── pod.go
│   │   ├── pod_test.go
│   │   ├── podinfo.go
│   │   ├── podinfo_test.go
│   │   ├── resourcechannels.go
│   │   ├── resourcestatus.go
│   │   ├── scale.go
│   │   ├── serviceport.go
│   │   └── serviceport_test.go
│   ├── configmap
│   │   ├── common.go
│   │   ├── configmap.go
│   │   └── configmap_test.go
│   ├── controller
│   │   ├── controller.go
│   │   └── controller_test.go
│   ├── customresourcedefinition
│   │   └── common.go
│   ├── daemonset
│   │   ├── common.go
│   │   ├── daemonset.go
│   │   └── daemonset_test.go
│   ├── dataselect
│   │   ├── dataselect.go
│   │   ├── dataselect_test.go
│   │   ├── dataselectquery.go
│   │   ├── pagination.go
│   │   ├── pagination_test.go
│   │   ├── propertyname.go
│   │   ├── stdcomparabletypes.go
│   │   └── stdcomparabletypes_test.go
│   ├── deployment
│   │   ├── common.go
│   │   ├── deployment.go
│   │   ├── deployment_test.go
│   │   └── util.go
│   ├── endpoint
│   │   └── endpoint.go
│   ├── event
│   │   ├── common.go
│   │   ├── common_test.go
│   │   ├── event.go
│   │   └── event_test.go
│   ├── horizontalpodautoscaler
│   │   ├── common.go
│   │   ├── horizontalpodautoscaler.go
│   │   └── horizontalpodautoscaler_test.go
│   ├── ingress
│   │   ├── common.go
│   │   └── ingress.go
│   ├── limitrange
│   │   ├── limitrange.go
│   │   └── limitrange_test.go
│   ├── namespace
│   │   ├── common.go
│   │   ├── namespace.go
│   │   └── namespace_test.go
│   ├── node
│   │   ├── common.go
│   │   ├── node.go
│   │   └── node_test.go
│   ├── persistentvolume
│   │   ├── common.go
│   │   ├── persistentvolume.go
│   │   └── persistentvolume_test.go
│   ├── persistentvolumeclaim
│   │   ├── common.go
│   │   ├── persistentvolumeclaim.go
│   │   └── persistentvolumeclaim_test.go
│   ├── pod
│   │   ├── common.go
│   │   ├── common_test.go
│   │   ├── dumptool.go
│   │   ├── events.go
│   │   ├── events_test.go
│   │   ├── fieldpath.go
│   │   ├── fieldpath_test.go
│   │   ├── metrics.go
│   │   ├── pod.go
│   │   └── pod_test.go
│   ├── resourcequota
│   │   ├── resourcequota.go
│   │   └── resourcequota_test.go
│   ├── role
│   │   ├── common.go
│   │   ├── role.go
│   │   └── role_test.go
│   ├── rolebinding
│   │   ├── common.go
│   │   ├── rolebinding.go
│   │   └── rolebinding_test.go
│   ├── secret
│   │   ├── common.go
│   │   ├── secret.go
│   │   └── secret_test.go
│   ├── service
│   │   ├── common.go
│   │   ├── service.go
│   │   └── service_test.go
│   ├── serviceaccount
│   │   ├── common.go
│   │   ├── secret.go
│   │   └── serviceaccount.go
│   ├── statefulset
│   │   ├── common.go
│   │   ├── statefulset.go
│   │   └── statefulset_test.go
│   └── storageclass
│       ├── common.go
│       ├── storageclass.go
│       └── storageclass_test.go
└── wsconnect
    └── ws.go
```

That is the layout of pkg. The resource directory holds the atomic operations for every resource type. Take node as an example:

pkg/resource/node/node.go:

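```go
package node

import (
	v1 "k8s.io/api/core/v1"
)

// getNodeIP returns the node's first InternalIP address, or "" if none is reported.
// (Dual-stack nodes can report two InternalIPs; this returns the first one listed.)
func getNodeIP(node v1.Node) string {
	for _, addr := range node.Status.Addresses {
		if addr.Type == v1.NodeInternalIP {
			return addr.Address
		}
	}
	return ""
}

// getNodeConditionStatus returns the status of the given condition type
// (for example v1.NodeReady), or ConditionUnknown if the node does not report it.
func getNodeConditionStatus(node v1.Node, conditionType v1.NodeConditionType) v1.ConditionStatus {
	for _, condition := range node.Status.Conditions {
		if condition.Type == conditionType {
			return condition.Status
		}
	}
	return v1.ConditionUnknown
}
```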

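These two functions were picked at random, but they show that the atomic operations on cluster resources all live here. This layer performs only the most basic operations, such as reading a resource's version, replica count, or configuration. Because it cannot know what the caller will need, it returns everything it can retrieve, so its results tend to be large.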

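In practice, however, users rarely need that much data. So on top of pkg we created a service directory that wraps these atomic operations and filters their results to match what users actually need.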

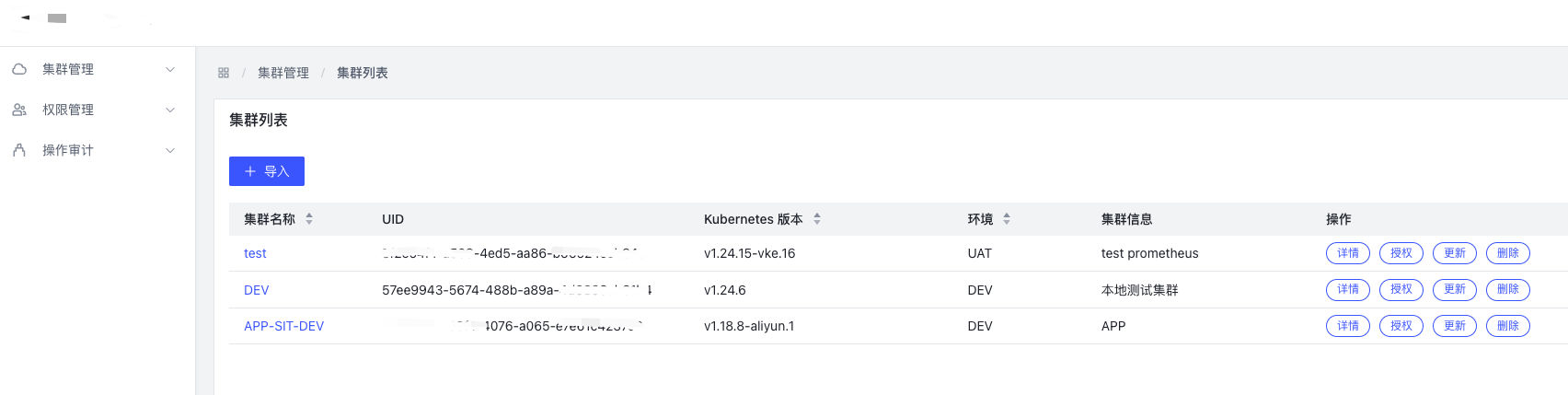

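Because this is a multi-cluster management platform that manages several clusters at once, each cluster is assigned a cluster ID when it is imported. The cluster ID serves as the cluster's unique identifier, and each cluster ID is associated with its own kubeconfig and RBAC tokens.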

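Therefore, when the service receives a request that operates on a cluster, it should either apply one shared method through `use` (as middleware) or add the following code to each route handler, to fetch the cluster's kubeconfig and initialize a clientset from it: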

```go
// Fragment: the enclosing function (not shown in the article) returns several values,
// hence the long return lists.

// Get the cluster's credentials
authentication, err := getAuthentication(ctx, clusterId)
if err != nil {
	log.Errorf("failed to get cluster authentication: %v", err)
	ctx.StatusCode(iris.StatusInternalServerError)
	ctx.Values().Set("message", fmt.Sprintf("get cluster auth failed: %s", err.Error()))
	return nil, nil, nil, nil, nil, "", err
}

// Initialize the cluster client
client := v1Kubernetes.GetK8sClientSet(authentication)
clientSet, err := client.Client()
if err != nil {
	log.Errorf("failed to get K8S clientSet: %v", err)
	ctx.StatusCode(iris.StatusInternalServerError)
	ctx.Values().Set("message", fmt.Sprintf("get cluster ClientSet failed: %s", err.Error()))
	return nil, nil, nil, nil, nil, "", err
}
// Log success only after the clientset exists, and without dumping the client (it carries credentials)
log.Debugf("got clientSet for cluster %s", clusterId)
```

getAuthentication queries the database by cluster ID to obtain the relevant token or kubeconfig:

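```go
func getAuthentication(ctx *context.Context, clusterId string) (*client.Authentication, error) {
	authentication := client.Authentication{}
	handler := NewHandler()

	// Look up the cluster record (API server address, kubeconfig, ...) by cluster ID
	c, err := handler.clusterService.GetClusterInfoByCID(clusterId)
	if err != nil {
		log.Errorf("failed to get cluster info: %v", err)
		ctx.StatusCode(iris.StatusInternalServerError)
		ctx.Values().Set("message", fmt.Sprintf("get cluster failed: %s", err.Error()))
		return nil, err
	}
	authentication.ApiserverHost = c.Authentication.ApiServer

	// Checked type assertion: a missing profile returns an error instead of panicking
	u, ok := ctx.Values().Get("profile").(session.UserProfile)
	if !ok {
		ctx.StatusCode(iris.StatusUnauthorized)
		ctx.Values().Set("message", "user profile not found")
		return nil, fmt.Errorf("user profile not found in request context")
	}

	// Administrators authenticate to the cluster with the kubeconfig;
	// everyone else uses a bearer token tied to their cluster role.
	isAdmin := false
	for _, roleInfo := range u.ResourcePermissions {
		if roleInfo.RoleName == "admin" {
			isAdmin = true
			break
		}
	}
	if isAdmin {
		authentication.AuthenticationMode = v1.KubeConfig
		authentication.KubeConfig = c.Authentication.KubeConfig
		return &authentication, nil
	}

	authentication.AuthenticationMode = v1.BearerToken
	for _, binding := range u.KubernetesPermissions {
		if binding.ClusterUUID != clusterId {
			continue
		}
		token, err := handler.clusterRoleBindingService.GetClusterRoleToken(clusterId, binding.ClusterRoleName)
		if err != nil {
			log.Errorf("failed to get cluster role token: %v", err)
			ctx.StatusCode(iris.StatusInternalServerError)
			ctx.Values().Set("message", fmt.Sprintf("get cluster role token failed: %s", err.Error()))
			return nil, err
		}
		authentication.BearerToken = token
		// Skips TLS verification of the API server; configuring the cluster CA would be safer
		authentication.Insecure = true
		// Never log the token itself: it is a credential
		log.Infof("got k8s token for cluster %s", clusterId)
		return &authentication, nil
	}

	// Without this check the caller would get an empty token and no error
	ctx.StatusCode(iris.StatusForbidden)
	ctx.Values().Set("message", "no cluster role bound for this cluster")
	return nil, fmt.Errorf("no cluster role binding for cluster %s", clusterId)
}
```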

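With that, the path from a frontend API call to operating Kubernetes is clear. Taking node operations as an example:

Frontend request → root router → sub-router → initialize the client from the cluster ID → call the atomic operations in pkg through that client → wrap and filter in the service layer, returning only the useful data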

