Spark on YARN Installation and Deployment

Published: January 17, 2024

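The three hosts used here are named bigdata1, bigdata2, and bigdata3. Adjust the package names below to match the ones you use; the installation packages can be downloaded from the official project websites.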

1. Extract the package

tar -zxvf /opt/software/spark-3.1.1-bin-hadoop3.2.tgz -C /opt/module/

Rename the directory:

mv /opt/module/spark-3.1.1-bin-hadoop3.2 /opt/module/spark-3.1.1
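Because the package names vary from setup to setup, the extract-and-rename step can be wrapped in a small function so that only the arguments change. This is a minimal sketch: `install_spark` is a hypothetical helper name, and it assumes the tarball unpacks to a single top-level directory named after the archive without its `.tgz` suffix, which is how the official Spark binary packages are laid out.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the original guide): extract a Spark
# tarball into DEST and rename the extracted folder to NEW_NAME.
# Assumes the archive contains one top-level directory named like the
# archive file minus ".tgz" (e.g. spark-3.1.1-bin-hadoop3.2).
install_spark() {
  local tarball="$1"    # e.g. /opt/software/spark-3.1.1-bin-hadoop3.2.tgz
  local dest="$2"       # e.g. /opt/module
  local new_name="$3"   # e.g. spark-3.1.1
  local extracted
  extracted="$(basename "$tarball" .tgz)"

  # Refuse to overwrite an existing installation before extracting anything.
  if [ -e "$dest/$new_name" ]; then
    echo "refusing to overwrite $dest/$new_name" >&2
    return 1
  fi
  mkdir -p "$dest" || return 1
  tar -zxf "$tarball" -C "$dest" || return 1
  mv "$dest/$extracted" "$dest/$new_name"
}
```

For the package used in this guide, the call would be `install_spark /opt/software/spark-3.1.1-bin-hadoop3.2.tgz /opt/module spark-3.1.1`.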

2. Environment variables

vim /etc/profile

# Spark

export SPARK_HOME=/opt/module/spark-3.1.1

export PATH=$PATH:$SPARK_HOME/bin

Reload the environment:

source /etc/profile

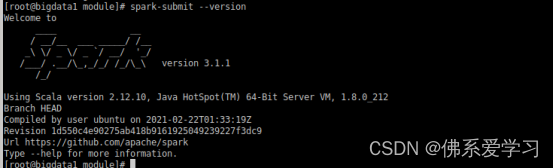
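Editing /etc/profile by hand is easy to repeat by accident when the setup is re-run, which leaves duplicate `export` lines behind. A minimal sketch of an idempotent alternative follows; `append_once` is a hypothetical helper, not something from the original guide, and it only guards against exact duplicate lines.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the original guide): append LINE to FILE
# only if FILE does not already contain that exact line.
append_once() {
  local file="$1" line="$2"
  touch "$file" || return 1
  # If the file's last line lacks a trailing newline, add one first so the
  # new line is not glued onto it. $(tail -c 1) is empty when it is "\n".
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  # -q quiet, -x whole-line match, -F fixed string (so $PATH stays literal)
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

Run as root, for example `append_once /etc/profile 'export SPARK_HOME=/opt/module/spark-3.1.1'` and then `append_once /etc/profile 'export PATH=$PATH:$SPARK_HOME/bin'`, followed by `source /etc/profile`. The single quotes keep `$PATH` and `$SPARK_HOME` unexpanded in the file, so they are resolved each time the profile is sourced.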

Verify the installation:

spark-submit --version
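If `spark-submit --version` fails or reports an unexpected version, a common cause is that `SPARK_HOME` or `PATH` was not picked up, or that another `spark-submit` shadows it on `PATH`. The check below is a minimal sketch of that diagnosis; `check_spark_env` is a hypothetical name, and it assumes the layout used in this guide (`$SPARK_HOME/bin/spark-submit`).

```bash
#!/usr/bin/env bash
# Hypothetical sanity check (not part of the original guide), to run after
# `source /etc/profile`: SPARK_HOME must be set, must contain an executable
# bin/spark-submit, and that binary must be the one PATH resolves first.
check_spark_env() {
  if [ -z "${SPARK_HOME:-}" ]; then
    echo "SPARK_HOME is not set" >&2
    return 1
  fi
  if [ ! -x "$SPARK_HOME/bin/spark-submit" ]; then
    echo "no executable $SPARK_HOME/bin/spark-submit" >&2
    return 1
  fi
  local found
  found="$(command -v spark-submit)" || {
    echo "spark-submit is not on PATH" >&2
    return 1
  }
  # A plain string comparison: symlinked or trailing-slash PATH entries
  # would be reported as a mismatch even if they point to the same file.
  if [ "$found" != "$SPARK_HOME/bin/spark-submit" ]; then
    echo "PATH resolves spark-submit to $found, not \$SPARK_HOME/bin" >&2
    return 1
  fi
  echo "OK: $found"
}
```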

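Next, add the Hadoop configuration directory to the environment variables so that Spark can locate the YARN cluster configuration (this relies on HADOOP_HOME already being exported in /etc/profile):

vim /etc/profile

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

Reload the environment:

source /etc/profile

Add the following properties inside the <configuration> element of yarn-site.xml under hadoop-3.1.3/etc/hadoop. They disable the NodeManager's virtual and physical memory checks, so containers that exceed those limits are not killed; on small test machines these checks can otherwise cause Spark jobs to fail: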

vim /opt/module/hadoop-3.1.3/etc/hadoop/yarn-site.xml

<property>
    <name>yarn.nodemanager.vmem-check-enabled</name>
    <value>false</value>
</property>
<property>
    <name>yarn.nodemanager.pmem-check-enabled</name>
    <value>false</value>
</property>
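Before distributing the file, it can help to confirm that both properties really ended up set to false. The function below is a minimal sketch with a hypothetical name; it is a plain-text match rather than an XML parser, so it assumes each `<value>` directly follows its `<name>` as in the properties added in this step, and it does not recognize XML comments (a commented-out property would still match).

```bash
#!/usr/bin/env bash
# Hypothetical check (not part of the original guide): succeed only if FILE
# sets both NodeManager memory checks to false. Whitespace and line breaks
# are stripped first, so multi-line <property> blocks still match.
yarn_mem_checks_disabled() {
  local file="$1" flat prop
  flat="$(tr -d ' \t\r\n' < "$file")" || return 1
  for prop in yarn.nodemanager.vmem-check-enabled yarn.nodemanager.pmem-check-enabled; do
    case "$flat" in
      *"<name>$prop</name><value>false</value>"*) ;;
      *) echo "$prop is not set to false in $file" >&2; return 1 ;;
    esac
  done
}
```

Usage: `yarn_mem_checks_disabled /opt/module/hadoop-3.1.3/etc/hadoop/yarn-site.xml && echo ok`.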

Distribute hadoop-3.1.3/etc/hadoop/yarn-site.xml to the other nodes:

scp -r /opt/module/hadoop-3.1.3/etc/hadoop/yarn-site.xml root@bigdata2:/opt/module/hadoop-3.1.3/etc/hadoop/yarn-site.xml

scp -r /opt/module/hadoop-3.1.3/etc/hadoop/yarn-site.xml root@bigdata3:/opt/module/hadoop-3.1.3/etc/hadoop/yarn-site.xml

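If the copy fails (for example, because a hostname does not resolve), use the hosts' IP addresses instead of their hostnames.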
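With more than two worker nodes, the per-host `scp` commands can be generated by a loop. This is a minimal sketch: `distribute_file` and the `DRY_RUN` variable are hypothetical, it copies to the same path as `root` on every host (as in the commands above), and hosts may be given either as hostnames or as IP addresses.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the original guide): copy FILE to the same
# path on each HOST as root. With DRY_RUN=1 the scp commands are only printed.
# Returns non-zero if any copy failed, but still tries every host.
distribute_file() {
  local file="$1"; shift
  local host status=0
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "scp $file root@$host:$file"
    elif ! scp "$file" "root@$host:$file"; then
      echo "copy to $host failed (try its IP address instead)" >&2
      status=1
    fi
  done
  return "$status"
}
```

For this cluster: `distribute_file /opt/module/hadoop-3.1.3/etc/hadoop/yarn-site.xml bigdata2 bigdata3`, or prefix it with `DRY_RUN=1` to preview the commands first.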

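Then make sure HDFS and YARN are running (restart YARN if it was already running, so the NodeManagers load the updated yarn-site.xml) and run: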

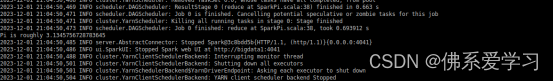

spark-submit --master yarn --class org.apache.spark.examples.SparkPi $SPARK_HOME/examples/jars/spark-examples_2.12-3.1.1.jar
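In client mode, which is the `spark-submit` default, SparkPi prints a line of the form `Pi is roughly 3.14...` to the terminal, while Spark's own log messages go to stderr. The sketch below wraps the command above and extracts that estimate, so a script can tell whether the job produced a result. `run_spark_pi` and `extract_pi` are hypothetical names; the trailing `10` is SparkPi's optional number of slices (partitions), chosen here only as an example.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of the original guide).
# extract_pi: read spark-submit output on stdin and print the Pi estimate;
# exits non-zero if no "Pi is roughly" line was found.
extract_pi() {
  sed -n 's/^Pi is roughly \([0-9.]*\).*$/\1/p' | grep .
}

# run_spark_pi: submit SparkPi to YARN in client mode, discard the log output
# on stderr, and keep only the estimate.
run_spark_pi() {
  spark-submit --master yarn --deploy-mode client \
    --class org.apache.spark.examples.SparkPi \
    "$SPARK_HOME/examples/jars/spark-examples_2.12-3.1.1.jar" 10 2>/dev/null \
    | extract_pi
}
```

If `run_spark_pi` prints nothing and fails, rerun the plain command above to see the full logs.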

Source: https://blog.csdn.net/2301_77578187/article/details/135629766

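This article was submitted by an Internet user; its views represent only those of the author and not the position of this site. This site only provides information storage space, does not hold ownership, and bears no related legal liability. If the content infringes rights, violates laws or regulations, or is factually incorrect, please file a complaint by emailing 我的编程经验分享网 at chenni525@qq.com; once verified, it will be removed immediately.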
