Reproducing PyTorch3D rendering results with pyrender


I. Preface

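Visualizing 3D results is an important part of the work. Commonly used 3D rendering libraries include opendr (used in earlier papers), pyrender, and PyTorch3D. My current task is to build a 3D annotation tool that runs locally on a Windows PC with only a CPU, so I need close to real-time rendering. PyTorch3D takes 3-5 s to render a 256x256 image, whereas pyrender on the CPU needs only 0.01-0.03 s, roughly two orders of magnitude faster.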

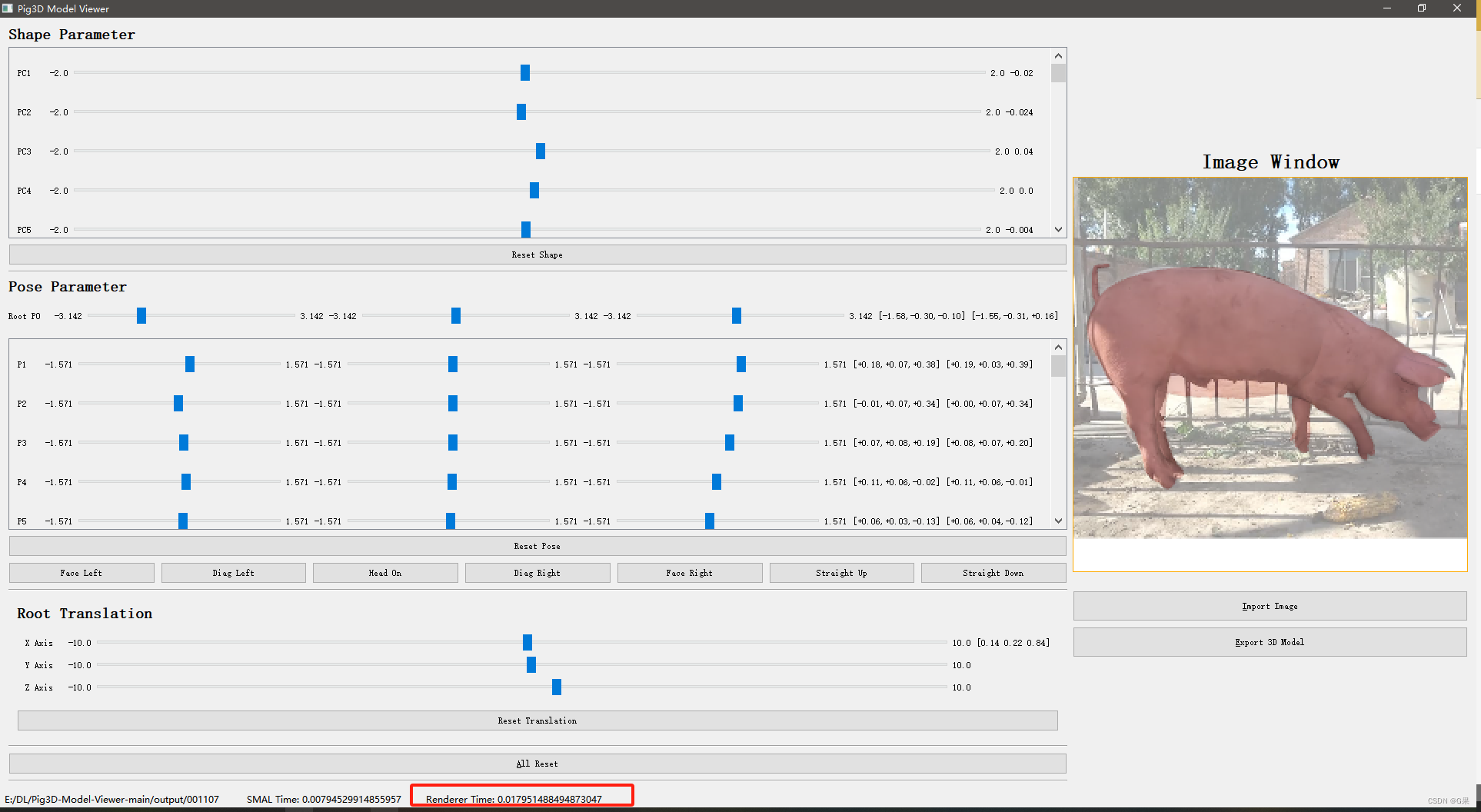

1.1 pyrender rendering time ≈ 0.02 s

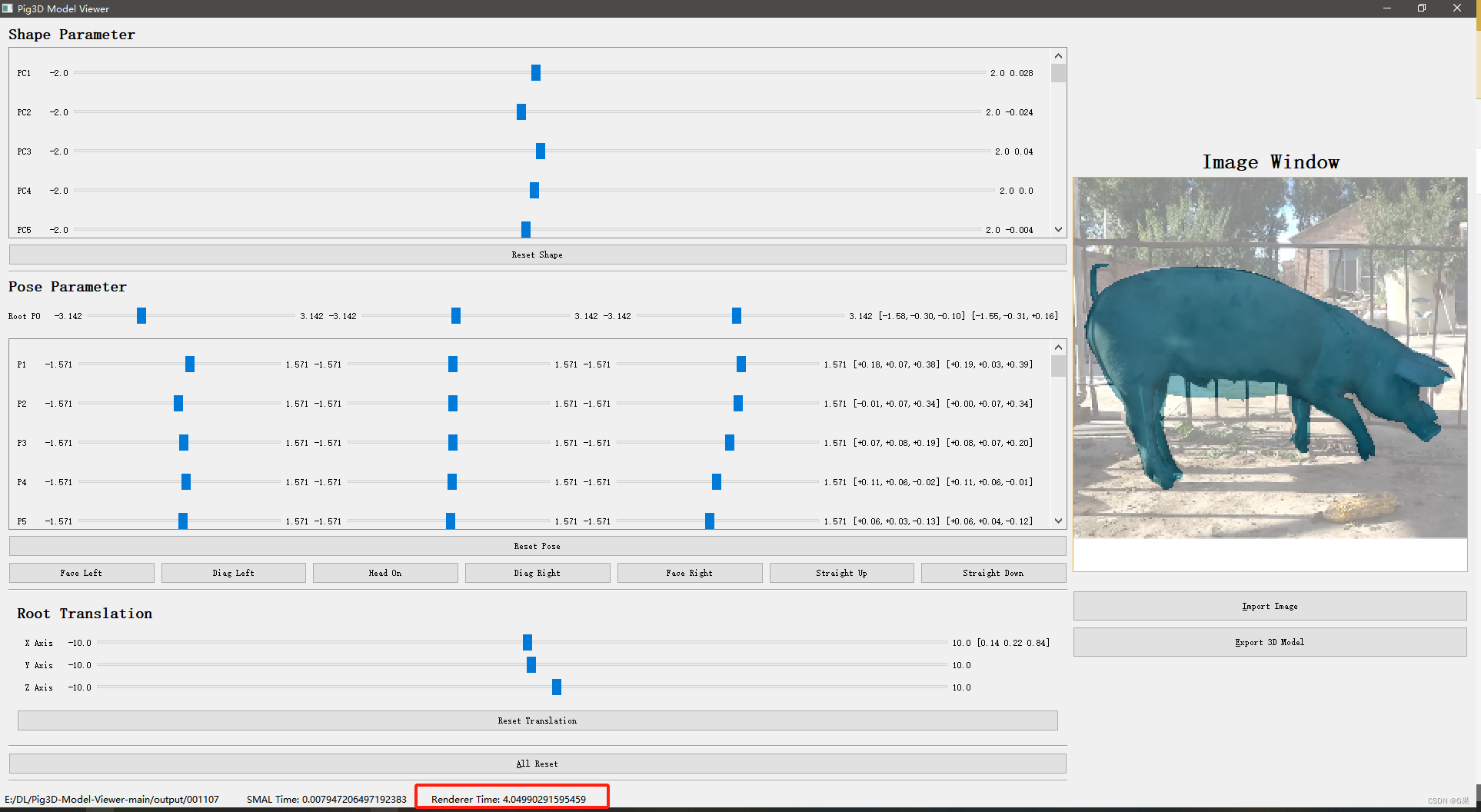

1.2 PyTorch3D rendering time ≈ 4 s
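The timings above were measured on the author's machine. To take comparable measurements, a small timing helper is enough. The sketch below is my own (the name `mean_render_time` and the warm-up policy are illustrative, not part of either library); it skips a warm-up call because the first render can include one-off setup cost.

```python
import time
from typing import Callable


def mean_render_time(render_fn: Callable[[], object], n_runs: int = 10, warmup: int = 1) -> float:
    """Mean wall-clock time in seconds of render_fn over n_runs calls.

    render_fn is a zero-argument callable, e.g. lambda: renderer(vertices, faces).
    Warm-up calls are executed first and excluded from the average.
    """
    for _ in range(warmup):
        render_fn()
    start = time.perf_counter()
    for _ in range(n_runs):
        render_fn()
    return (time.perf_counter() - start) / n_runs


if __name__ == "__main__":
    # Stand-in workload; replace with a call to the actual renderer.
    print(f"{mean_render_time(lambda: sum(range(10_000))):.6f} s per call")
```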

References

pyrender documentation

PyTorch3D documentation

Converting camera matrices from OpenCV / Pytorch3D #228

why pyrender and pytorch3d cpu render so slow #188

https://github.com/lucasjinreal/realrender

difference between rendering using PyTorch3d and Pyrender #1291

II. The PyTorch3D rendering function

2.1 Creating a color renderer (color_renderer) with PyTorch3D and configuring the camera, lighting, and rendering settings

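First, the PointLights class creates the light; its location parameter sets the light's position. Here the light is placed at [0.0, 0.0, 3.0], i.e. 3 units from the origin along the +z axis.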
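Because a point light has a position rather than a fixed direction, the direction toward the light changes from one surface point to the next. A simplified NumPy sketch of only the diffuse (Lambertian) term; this is an illustration, not PyTorch3D's implementation, which also adds ambient and specular terms. The function name `lambert_diffuse` is hypothetical.

```python
import numpy as np


def lambert_diffuse(point, normal, light_location, light_color=(1.0, 1.0, 1.0)):
    """Diffuse (Lambertian) contribution of a point light at one surface point.

    Simplified illustration only: no ambient, specular or distance attenuation.
    """
    normal = np.asarray(normal, dtype=float)
    normal = normal / np.linalg.norm(normal)
    # A point light has a position, so the light direction depends on the surface point.
    to_light = np.asarray(light_location, dtype=float) - np.asarray(point, dtype=float)
    to_light = to_light / np.linalg.norm(to_light)
    # Surfaces facing away from the light receive no diffuse light.
    cos_angle = max(float(np.dot(normal, to_light)), 0.0)
    return np.asarray(light_color, dtype=float) * cos_angle


if __name__ == "__main__":
    light = [0.0, 0.0, 3.0]  # same location as PointLights(location=[[0.0, 0.0, 3.0]])
    print(lambert_diffuse([0, 0, 0], [0, 0, 1], light))   # [1. 1. 1.]
    print(lambert_diffuse([0, 0, 0], [0, 0, -1], light))  # [0. 0. 0.]
```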

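Next, the RasterizationSettings class configures rasterization: image size, blur radius, faces per pixel, and so on. image_size sets the output resolution (larger images take longer to render); blur_radius=0.0 disables blurring; faces_per_pixel=1 keeps only the nearest face for each pixel (an integer; larger values take longer to render).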
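Conceptually, faces_per_pixel is a top-K selection by depth among the faces that overlap a pixel. A minimal sketch for a single pixel, assuming a plain list of candidate faces with their depths; the helper `nearest_faces` is hypothetical, and the -1 padding for empty slots mirrors how PyTorch3D's pix_to_face marks empty entries.

```python
import numpy as np


def nearest_faces(face_ids, depths, faces_per_pixel):
    """Keep the `faces_per_pixel` closest faces covering one pixel.

    face_ids/depths describe every face overlapping the pixel (smaller depth = closer).
    Unused slots are padded with -1.
    """
    order = np.argsort(np.asarray(depths, dtype=float), kind="stable")[:faces_per_pixel]
    kept = np.asarray(face_ids, dtype=int)[order]
    padded = np.full(faces_per_pixel, -1, dtype=int)
    padded[: len(kept)] = kept
    return padded


if __name__ == "__main__":
    ids, z = [7, 3, 9], [2.5, 1.0, 4.0]
    print(nearest_faces(ids, z, 1))  # only the nearest face, as with faces_per_pixel=1
    print(nearest_faces(ids, z, 5))  # more slots than overlapping faces: padded with -1
```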

Then a MeshRenderer is created. Its rasterizer argument is a MeshRasterizer built from the camera and the rasterization settings, so rendering uses exactly that camera and those settings.
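The renderer is just a two-stage pipeline: the rasterizer turns the mesh into per-pixel fragments, and the shader turns fragments into colors. This is also why the code below can build a silhouette renderer and a color renderer that share one camera. A toy stand-in for that composition (the class `ComposedRenderer` is hypothetical, not PyTorch3D code):

```python
from typing import Any, Callable


class ComposedRenderer:
    """Toy stand-in for the rasterizer -> shader composition of a mesh renderer."""

    def __init__(self, rasterizer: Callable[[Any], Any], shader: Callable[[Any, Any], Any]):
        self.rasterizer = rasterizer
        self.shader = shader

    def __call__(self, mesh: Any) -> Any:
        fragments = self.rasterizer(mesh)    # geometry: which faces cover which pixels
        return self.shader(fragments, mesh)  # appearance: lighting and blending into colors


if __name__ == "__main__":
    # Swapping only the shader reuses the same rasterization step.
    silhouette = ComposedRenderer(lambda m: f"fragments({m})", lambda f, m: f"mask[{f}]")
    color = ComposedRenderer(lambda m: f"fragments({m})", lambda f, m: f"phong[{f}]")
    print(silhouette("mesh"), color("mesh"))
```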

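Finally, the shader argument is a HardPhongShader that receives the camera and the lights. The color renderer therefore applies Phong lighting per pixel with hard blending: each pixel takes the shaded color of its nearest face, with no soft blending across face edges.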
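Hard blending itself is simple: look at the nearest face slot of every pixel and copy its color, falling back to the background where no face was hit. A NumPy sketch, assuming per-face colors that are already shaded (PyTorch3D actually shades per pixel before blending); `hard_blend` is a hypothetical name and the white default background is an assumption.

```python
import numpy as np


def hard_blend(pix_to_face, face_colors, background=(1.0, 1.0, 1.0)):
    """Each pixel takes the color of its nearest face; empty pixels get the background.

    pix_to_face: (H, W, K) int array, faces sorted near-to-far, -1 = no face.
    face_colors: (F, 3) array of already-shaded face colors.
    """
    pix_to_face = np.asarray(pix_to_face)
    face_colors = np.asarray(face_colors, dtype=float)
    nearest = pix_to_face[..., 0]  # only the closest face matters for hard blending
    image = np.empty(nearest.shape + (3,), dtype=float)
    image[:] = background
    covered = nearest >= 0
    image[covered] = face_colors[nearest[covered]]
    return image


if __name__ == "__main__":
    p2f = np.array([[[0, 1], [-1, -1]]])  # 1x2 image, K=2
    print(hard_blend(p2f, [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]))
```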

2.2 Creating an OpenGL perspective camera (OpenGLPerspectiveCameras) with PyTorch3D and setting its pose

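First, look_at_view_transform produces the camera rotation matrix R and translation vector T for a given viewpoint. Its arguments here, 2.7, 0, 0, are dist, elev, and azim: the camera is placed 2.7 units from the target point (the world origin by default) at 0° elevation and 0° azimuth, i.e. at (0, 0, 2.7), looking toward the origin.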

Then the OpenGLPerspectiveCameras object is created from R and T, which puts the camera in that pose.
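To see what R and T contain, here is a NumPy re-derivation of look_at_view_transform for a single camera. It follows my reading of PyTorch3D's math (spherical camera position, look-at axes stacked as columns, T = -Rᵀ C, and the row-vector convention X_view = X_world @ R + T); the name `look_at_view_transform_np` is hypothetical and the degenerate case where `up` is parallel to the viewing direction is not handled.

```python
import numpy as np


def look_at_view_transform_np(dist, elev, azim, at=(0.0, 0.0, 0.0), up=(0.0, 1.0, 0.0)):
    """Return (R, T) for one camera so that X_view = X_world @ R + T. Angles in degrees."""
    elev, azim = np.radians(elev), np.radians(azim)
    at = np.asarray(at, dtype=float)
    # Camera center on a sphere of radius `dist` around the target point.
    C = at + dist * np.array([np.cos(elev) * np.sin(azim),
                              np.sin(elev),
                              np.cos(elev) * np.cos(azim)])
    z_axis = (at - C) / np.linalg.norm(at - C)  # camera looks toward `at`
    x_axis = np.cross(up, z_axis)
    x_axis /= np.linalg.norm(x_axis)
    y_axis = np.cross(z_axis, x_axis)
    R = np.stack([x_axis, y_axis, z_axis], axis=1)  # camera axes as columns
    T = -R.T @ C
    return R, T


if __name__ == "__main__":
    R, T = look_at_view_transform_np(2.7, 0, 0)
    print(R)  # diag(-1, 1, -1)
    print(T)  # translation (0, 0, 2.7)
```

Note that the camera center can be recovered as C = -R T, which for these arguments is (0, 0, 2.7).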

```python
# Data structures and functions for rendering
import numpy as np
import torch
from pytorch3d.structures import Meshes
from pytorch3d.renderer import (
    OpenGLPerspectiveCameras, look_at_view_transform,
    RasterizationSettings, MeshRenderer, MeshRasterizer, BlendParams,
    PointLights, SoftSilhouetteShader, HardPhongShader, TexturesVertex,
)
# from pytorch3d.io import load_objs_as_meshes


class Renderer(torch.nn.Module):
    def __init__(self, image_size):
        super().__init__()
        self.image_size = image_size

        # RENDERER
        MESH_COLOR = [0, 172, 223]
        # self.dog_obj = load_objs_as_meshes(['data/dog_B/dog_B/dog_B_tpose.obj'])
        self.mesh_color = torch.FloatTensor(MESH_COLOR)[None, None, :] / 255.0  # (1, 1, 3)

        # BlendParams controls how overlapping faces are blended into soft edges.
        # sigma: width of the sigmoid used for the distance-based coverage probability;
        #   larger sigma -> blurrier, less defined edges.
        # gamma: scale of the exponential used for color blending in softmax RGB shaders;
        #   larger gamma -> more transparent faces (not used by the silhouette shader).
        blend_params = BlendParams(sigma=1e-4, gamma=1e-4)

        raster_settings = RasterizationSettings(
            image_size=self.image_size,
            # Distance at which the sigmoid coverage probability drops to 1e-4.
            blur_radius=np.log(1. / 1e-4 - 1.) * blend_params.sigma,
            faces_per_pixel=100,  # more faces blended per pixel: finer edges, slower
            # bin_size=None
        )

        # Camera 2.7 units from the origin, elevation 0, azimuth 0, looking at the origin.
        R, T = look_at_view_transform(2.7, 0, 0)
        self.cameras = OpenGLPerspectiveCameras(device=R.device, R=R, T=T)

        # Silhouette renderer (soft mask, differentiable).
        self.renderer = MeshRenderer(
            rasterizer=MeshRasterizer(cameras=self.cameras, raster_settings=raster_settings),
            shader=SoftSilhouetteShader(blend_params=blend_params),
        )

        # Color renderer: one face per pixel, no blur, hard Phong shading.
        lights = PointLights(location=[[0.0, 0.0, 3.0]])
        raster_settings_color = RasterizationSettings(
            image_size=self.image_size,
            blur_radius=0.0,
            faces_per_pixel=1,
        )
        self.color_renderer = MeshRenderer(
            rasterizer=MeshRasterizer(cameras=self.cameras, raster_settings=raster_settings_color),
            shader=HardPhongShader(cameras=self.cameras, lights=lights),
        )

    def forward(self, vertices, faces, render_texture=False):
        # vertices: (1, V, 3) float tensor; faces: (1, F, 3) long tensor
        tex = torch.ones_like(vertices) * self.mesh_color  # (1, V, 3) uniform vertex color
        textures = TexturesVertex(verts_features=tex)
        mesh = Meshes(verts=vertices, faces=faces, textures=textures)
        # mesh = Meshes(verts=vertices, faces=faces, textures=self.dog_obj.textures)
        if render_texture:
            images = self.color_renderer(mesh)  # (1, H, W, 4) RGBA
        else:
            images = self.renderer(mesh)  # (1, H, W, 4), silhouette in the alpha channel
        return images
```
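The blur_radius expression in the silhouette renderer follows from the sigmoid coverage model. Assuming coverage p(d) = sigmoid(-d / sigma), where d is the rasterizer's signed pixel-to-face distance quantity (positive outside the face, and the same quantity blur_radius is compared against), solving p(d) = 1e-4 gives d = sigma * log(1 / 1e-4 - 1). Faces farther away than that contribute almost nothing, so the rasterizer need not consider them. A NumPy check (the function name is hypothetical):

```python
import numpy as np


def silhouette_probability(dist, sigma):
    """Soft coverage sigmoid(-dist / sigma); dist > 0 means the pixel is outside the face."""
    return 1.0 / (1.0 + np.exp(dist / sigma))


sigma = 1e-4
blur_radius = np.log(1.0 / 1e-4 - 1.0) * sigma  # same expression as in the Renderer
print(blur_radius)                                 # about 9.21e-4
print(silhouette_probability(blur_radius, sigma))  # about 1e-4
print(silhouette_probability(0.0, sigma))          # exactly on the edge: 0.5
```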

III. The pyrender rendering function

3.1 Key code

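Call PyTorch3D's look_at_view_transform to get the camera translation T, and use pyrender's PerspectiveCamera as the projection. The vertical field of view (yfov) is set to π / 3.0, i.e. 60 degrees, so the camera's view frustum spans 60 degrees vertically.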

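```python
import numpy as np
import pyrender
from pytorch3d.renderer import look_at_view_transform

R, T = look_at_view_transform(2.7, 0, 0)  # T has shape (1, 3)
camera_translation = T[0].numpy()

camera_pose = np.eye(4)  # camera-to-world pose with identity rotation
camera_pose[:3, 3] = camera_translation
camera = pyrender.PerspectiveCamera(yfov=np.pi / 3.0)  # 60-degree vertical FoV
```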
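The complete code below keeps a commented-out IntrinsicsCamera path with focal_length = 5000. If you prefer pixel intrinsics, a square pinhole camera with the principal point at the image center reproduces yfov when fy = (H / 2) / tan(yfov / 2). A small sketch of that conversion (helper names are hypothetical):

```python
import numpy as np


def yfov_to_focal_px(yfov, image_height):
    """Focal length in pixels that yields vertical field of view `yfov` (radians)."""
    return (image_height / 2.0) / np.tan(yfov / 2.0)


def focal_px_to_yfov(focal_px, image_height):
    """Inverse: vertical field of view (radians) for a focal length in pixels."""
    return 2.0 * np.arctan((image_height / 2.0) / focal_px)


if __name__ == "__main__":
    print(round(yfov_to_focal_px(np.pi / 3.0, 256), 2))  # 221.7
    # focal_length=5000 at 224 px corresponds to a very narrow field of view (in degrees):
    print(np.degrees(focal_px_to_yfov(5000, 224)))
```

With this, something like `pyrender.IntrinsicsCamera(fx=f, fy=f, cx=W / 2, cy=H / 2)` should frame a square image roughly like `PerspectiveCamera(yfov=np.pi / 3.0)`; treat that as an assumption to verify against your own renders.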

3.2 Complete code

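The code builds a pyrender.Mesh from a trimesh.Trimesh and adds it to a pyrender.Scene. It also adds a perspective camera and three directional lights to the scene. Finally, it renders the scene with OffscreenRenderer and returns the color image as a PyTorch tensor.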

```python
import os

# https://pyrender.readthedocs.io/en/latest/examples/offscreen.html
# Fix for Windows from https://github.com/mmatl/pyrender/issues/117
# edit C:\Users\bjb10042\.conda\envs\bjb_env\Lib\site-packages\pyrender
# os.environ['PYOPENGL_PLATFORM'] = 'osmesa'

import numpy as np
import pyrender
import torch
import trimesh
from pytorch3d.renderer import look_at_view_transform


class Renderer:
    """
    Renderer used for visualizing the SMPL model
    Code adapted from https://github.com/vchoutas/smplify-x
    """

    def __init__(self, img_res=224):
        self.renderer = pyrender.OffscreenRenderer(viewport_width=img_res,
                                                   viewport_height=img_res,
                                                   point_size=1)
        # Only used by the commented-out IntrinsicsCamera path below.
        self.focal_length = 5000
        self.camera_center = [img_res // 2, img_res // 2]

    def __call__(self, vertices, faces, camera_translation=None, focal_length=None):
        material = pyrender.MetallicRoughnessMaterial(
            metallicFactor=0.2,
            alphaMode='OPAQUE',
            baseColorFactor=(0.8, 0.3, 0.3, 1.0))

        if camera_translation is None:
            # camera_translation = np.array([0.0, 0.0, 50.0])
            # Same viewpoint as the PyTorch3D renderer; T has shape (1, 3).
            _, T = look_at_view_transform(2.7, 0, 0)
            camera_translation = T[0].numpy()
        if focal_length is not None:
            self.focal_length = focal_length[0]

        # Batched input: render the first item (CPU tensors or NumPy arrays).
        mesh = trimesh.Trimesh(np.asarray(vertices[0]), np.asarray(faces[0]), process=False)
        # rot = trimesh.transformations.rotation_matrix(np.radians(180), [1, 0, 0])
        # mesh.apply_transform(rot)
        mesh = pyrender.Mesh.from_trimesh(mesh, material=material)

        scene = pyrender.Scene(ambient_light=(0.5, 0.5, 0.5))
        scene.add(mesh, 'mesh')

        # Camera-to-world pose: identity rotation, translated to the camera position.
        camera_pose = np.eye(4)
        camera_pose[:3, 3] = camera_translation
        # fx, fy, cx, cy = camera_matrix[0, 0], camera_matrix[1, 1], camera_matrix[0, 2], camera_matrix[1, 2]
        # fx = fy = self.focal_length
        # cx, cy = self.camera_center
        # camera = pyrender.IntrinsicsCamera(fx=fx, fy=fy, cx=cx, cy=cy, znear=1.0, zfar=100)
        # 60-degree vertical FoV, matching the default fov of OpenGLPerspectiveCameras.
        camera = pyrender.PerspectiveCamera(yfov=np.pi / 3.0)
        scene.add(camera, pose=camera_pose)

        # Directional lights shine along their local -z axis, so these translations do not
        # change the direction: with identity rotation all three shine along world -z.
        light = pyrender.DirectionalLight(color=[1.0, 1.0, 1.0], intensity=1)
        for position in ([0, -1, 1], [0, 1, 1], [1, 1, 2]):
            light_pose = np.eye(4)
            light_pose[:3, 3] = position
            scene.add(light, pose=light_pose)

        color, rend_depth = self.renderer.render(scene, flags=pyrender.RenderFlags.RGBA)
        color = color.astype(np.float32) / 255.0
        return torch.from_numpy(color).unsqueeze(0)  # (1, H, W, 4) RGBA in [0, 1]
```
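About the three lights in the code above: pyrender documents a DirectionalLight as emitting along its node's local -z axis, so only the rotation part of its pose matters. Since the three poses differ only in translation, they all shine along world -z and act like one brighter light; to light the mesh from different sides, the pose rotations would have to differ. A NumPy check of that reading (`directional_light_direction` is a hypothetical helper):

```python
import numpy as np


def directional_light_direction(pose):
    """World-space direction of a directional light with the given 4x4 pose (local -z axis)."""
    pose = np.asarray(pose, dtype=float)
    return pose[:3, :3] @ np.array([0.0, 0.0, -1.0])  # translation pose[:3, 3] is ignored


poses = []
for t in ([0, -1, 1], [0, 1, 1], [1, 1, 2]):  # the three translations used above
    p = np.eye(4)
    p[:3, 3] = t
    poses.append(p)

print([directional_light_direction(p).tolist() for p in poses])
```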

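TL;DR: in pyrender, replace PyTorch3D's OpenGLPerspectiveCameras with PerspectiveCamera, then set the camera translation and the vertical field of view. This is enough for the camera used here (elevation and azimuth both 0).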

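So far, pyrender's PerspectiveCamera and PyTorch3D's OpenGLPerspectiveCameras behave very similarly in this setup. In the PyTorch3D source, OpenGLPerspectiveCameras is a function (not a subclass) that returns a FoVPerspectiveCameras object, with a default fov of 60 degrees. pyrender's PerspectiveCamera has no default yfov, so passing yfov=π/3 matches it. That makes the two easy to swap.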
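Why a pure translation is enough here: PyTorch3D's view space has +X left, +Y up and +Z pointing into the screen, while pyrender uses the OpenGL convention (+X right, +Y up, camera looking down -Z). For elev = azim = 0, look_at_view_transform returns R = diag(-1, 1, -1), which is exactly that axis flip, so the pyrender pose ends up with identity rotation and translation (0, 0, 2.7). For other viewpoints the rotation must be converted too. A sketch of the general conversion, derived from the row-vector convention X_view = X_world @ R + T; the function name is hypothetical and the result should be checked against your own renders:

```python
import numpy as np

# Axis flip between PyTorch3D view space and OpenGL/pyrender camera space.
FLIP_XZ = np.diag([-1.0, 1.0, -1.0])


def pytorch3d_rt_to_pyrender_pose(R, T):
    """Convert PyTorch3D (R, T), with X_view = X_world @ R + T, to a pyrender camera-to-world pose."""
    R = np.asarray(R, dtype=float).reshape(3, 3)
    T = np.asarray(T, dtype=float).reshape(3)
    pose = np.eye(4)
    pose[:3, :3] = R @ FLIP_XZ  # OpenGL camera axes expressed in world coordinates
    pose[:3, 3] = -R @ T        # camera center in world coordinates
    return pose


if __name__ == "__main__":
    # R, T as returned by look_at_view_transform(2.7, 0, 0)
    print(pytorch3d_rt_to_pyrender_pose(np.diag([-1.0, 1.0, -1.0]), [0.0, 0.0, 2.7]))
```

Usage with real tensors would be `camera_pose = pytorch3d_rt_to_pyrender_pose(R[0].numpy(), T[0].numpy())`.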

```python
# Excerpt from the PyTorch3D source (signature only; body omitted)
def OpenGLPerspectiveCameras(
    znear: _BatchFloatType = 1.0,
    zfar: _BatchFloatType = 100.0,
    aspect_ratio: _BatchFloatType = 1.0,
    fov: _BatchFloatType = 60.0,  # default field of view: 60 degrees
    degrees: bool = True,
    R: torch.Tensor = _R,
    T: torch.Tensor = _T,
    device: Device = "cpu",
) -> "FoVPerspectiveCameras":
    ...
```
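The defaults in this signature map directly onto pyrender.PerspectiveCamera's keywords (yfov in radians, znear, zfar, aspectRatio). pyrender's own defaults differ (znear=0.05, zfar=None, i.e. an infinite far plane), so passing PyTorch3D's near and far planes explicitly keeps the clipping range identical. A hypothetical helper that builds those keywords:

```python
import math


def fov_camera_defaults_to_pyrender_kwargs(fov=60.0, degrees=True, znear=1.0, zfar=100.0,
                                           aspect_ratio=1.0):
    """Map FoVPerspectiveCameras-style arguments to pyrender.PerspectiveCamera keywords.

    Defaults mirror the OpenGLPerspectiveCameras signature; pyrender expects yfov in radians.
    """
    yfov = math.radians(fov) if degrees else fov
    return {"yfov": yfov, "znear": znear, "zfar": zfar, "aspectRatio": aspect_ratio}


if __name__ == "__main__":
    print(fov_camera_defaults_to_pyrender_kwargs())
    # e.g. camera = pyrender.PerspectiveCamera(**fov_camera_defaults_to_pyrender_kwargs())
```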
