Kubernetes Volume Data Storage Explained

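Preface: if anything here is wrong, trust your own judgment.

I am a 2024 graduate, so experts, please go easy on me. Some of the material and images come from the internet.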


Overview

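Data stored inside a container is lost when the container is recreated, so persistent storage (a Volume) is needed.

A Volume mounts a directory into the Pod, and one directory can be mounted into containers in multiple Pods.

Volumes let different containers in the same Pod share data, and they also provide persistent storage.

A Volume's lifecycle is not tied to any individual container in the Pod: when a container terminates or restarts, the data in the Volume is not lost.

EmptyDir: temporary storage in the Pod that several containers can mount to share a directory; it is deleted together with the Pod.

HostPath: mounts a directory on the node into the Pod's containers. This persists data, but does not make the storage highly available.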

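Kubernetes supports a large number of volume types (up to 28 at the time of writing), most of them specific to particular cloud environments such as GCE/AWS/Azure. Many of these in-tree plugins have since been deprecated or removed in favor of CSI drivers.

- Non-persistent storage:
  - emptyDir
  - HostPath
- Network-attached storage:
  - SAN: iSCSI, ScaleIO Volumes, FC (Fibre Channel)
  - NFS: nfs, cfs
- Distributed storage:
  - Glusterfs
  - RBD (Ceph Block Device)
  - CephFS
  - Portworx Volumes
  - Quobyte Volumes
- Cloud storage:
  - GCEPersistentDisk
  - AWSElasticBlockStore
  - AzureFile
  - AzureDisk
  - Cinder (OpenStack block storage)
  - VsphereVolume
  - StorageOS
- Custom storage

Basic storage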

EmptyDir

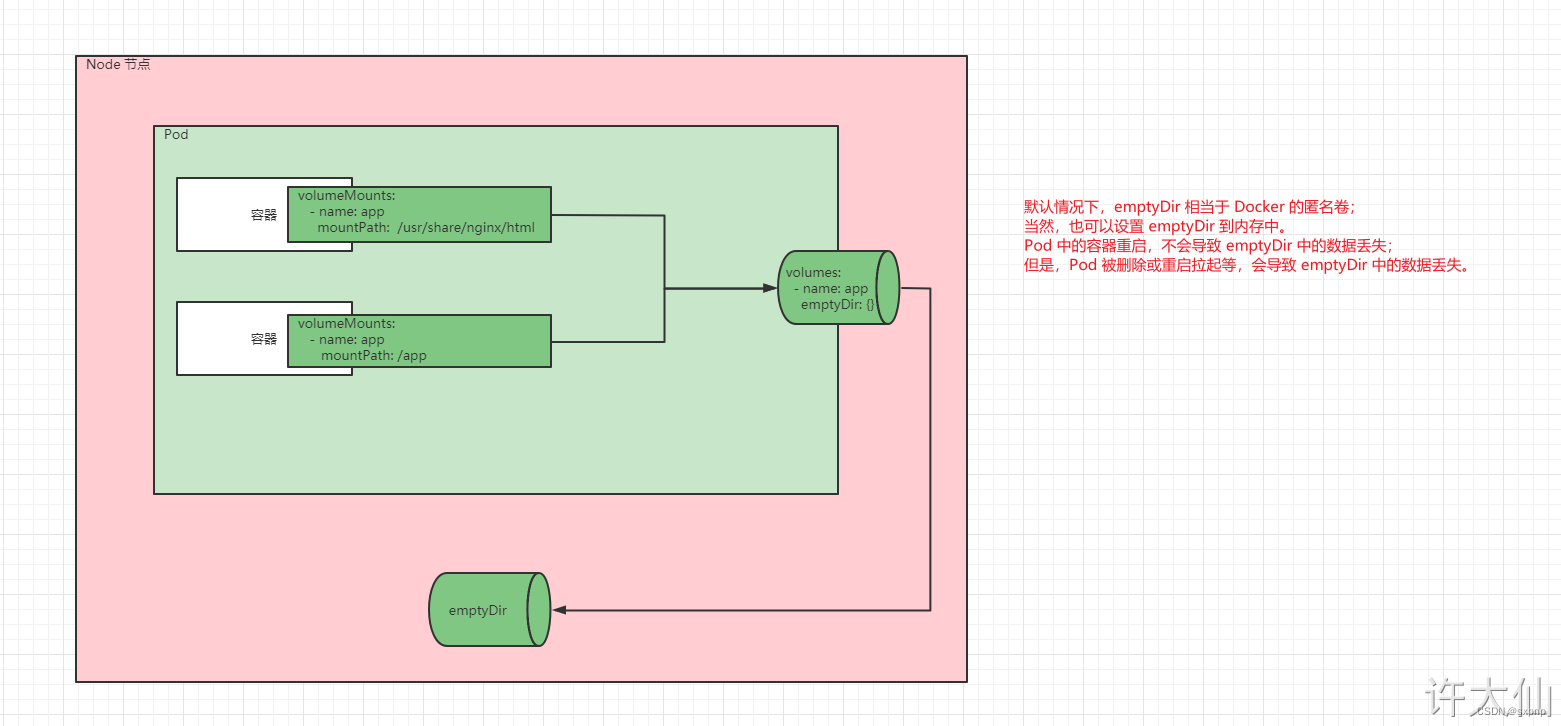

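EmptyDir is the most basic volume type: an EmptyDir is simply an empty directory on the host.

It is not persistent storage. Its lifecycle matches the Pod's, and it is typically used to share a directory between containers of the same Pod.

- An EmptyDir is created when the Pod is assigned to a node. It starts out empty, and you do not specify a directory on the host, because Kubernetes allocates one automatically. When the Pod is deleted, the data in the EmptyDir is deleted permanently.

- Typical uses of EmptyDir:
  - scratch space, for example for a disk-based merge sort;
  - checkpointing a long computation so it can recover from a crash;
  - sharing files between containers, for example files fetched by one container and served by another.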
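As a minimal sketch that goes beyond the original walkthrough, an emptyDir can also be backed by memory instead of the node's disk. The Pod name emptydir-memory-demo and the 64Mi size are hypothetical. medium: Memory mounts a tmpfs, and files written there count against the container's memory usage, so keep sizeLimit small.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-memory-demo   # hypothetical name for illustration
  namespace: study
spec:
  containers:
    - name: busybox
      image: busybox:1.30
      command: ["/bin/sh", "-c", "sleep 3600"]
      volumeMounts:
        - name: cache-volume
          mountPath: /cache      # scratch directory inside the container
  volumes:
    - name: cache-volume
      emptyDir:
        medium: Memory           # back the volume with tmpfs (RAM) instead of node disk
        sizeLimit: 64Mi          # assumed size cap for the volume
```

Dropping the medium line gives the ordinary disk-backed emptyDir used in the example that follows.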

示例

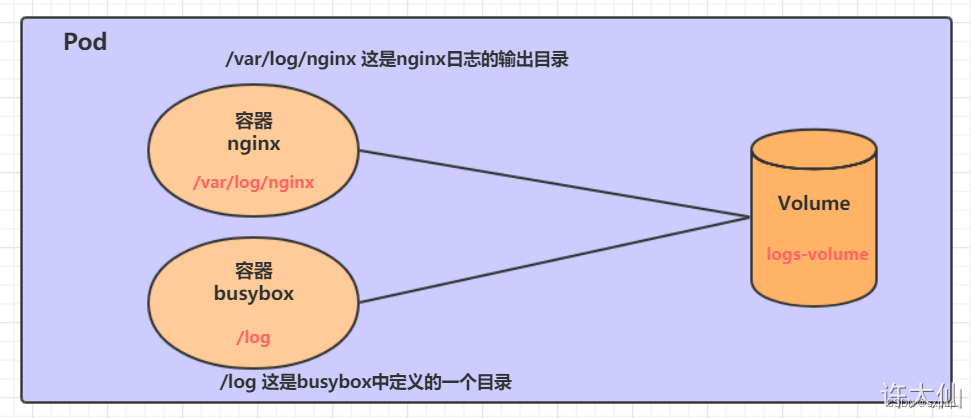

接下来,通过一个容器之间的共享案例来使用描述一个EmptyDir。

在一个Pod中准备两个容器nginx和busybox,然后声明一个volume分别挂载到两个容器的目录中,然后nginx容器负责向volume中写日志,busybox中通过命令将日志内容读到控制台。

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: volume-emptydir
  namespace: study
spec:
  containers:
    - name: nginx
      image: nginx:1.17.1
      imagePullPolicy: IfNotPresent
      ports:
        - containerPort: 80
      volumeMounts: # mount logs-volume into the nginx container at /var/log/nginx
        - name: logs-volume # volume name
          mountPath: /var/log/nginx # directory in the container to mount onto (created if it does not exist)
    - name: busybox
      image: busybox:1.30
      imagePullPolicy: IfNotPresent
      command: ["/bin/sh","-c","tail -f /logs/access.log"] # startup command: keep reading the file
      # tail runs in the foreground and writes to stdout; kubectl logs shows the container's stdout/stderr
      volumeMounts: # mount logs-volume into the busybox container at /logs
        - name: logs-volume
          mountPath: /logs
  volumes: # declare a volume named logs-volume of type emptyDir
    - name: logs-volume # name referenced by volumeMounts
      emptyDir: {} # volume type
```

```shell
[root@master k8s]# kubectl apply -f emptydir.yaml
pod/volume-emptydir created
[root@master k8s]# kubectl get pod volume-emptydir -n study -o wide
NAME              READY   STATUS    RESTARTS   AGE    IP              NODE    NOMINATED NODE   READINESS GATES
volume-emptydir   2/2     Running   0          142m   10.244.104.44   node2   <none>           <none>
[root@master k8s]# kubectl describe pod -n study
# Access nginx, then check whether the directory mounted in busybox has content.
# If it does, nginx's log is in logs-volume and busybox has mounted the same directory.
[root@master k8s]# curl 10.244.104.44
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
[root@master k8s]# kubectl logs -f volume-emptydir -n study -c busybox
192.168.100.53 - - [19/Feb/2023:08:15:33 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.61.1" "-"
```

HostPath

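HostPath mounts an actual directory on the node into the Pod for its containers to use. This way, even if the Pod is deleted, the data remains on the node.

However, if the node fails and the Pod is rescheduled to another node, the data is not available there.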

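```yaml
  volumes:
  - name: test-volume
    hostPath:
      # directory location on host
      path: /data
      # this field is optional
      type: Directory # a directory must already exist at the given path
      # FileOrCreate: creates the file if missing, but not its parent directory; if the parent directory does not exist, the Pod fails to start
      # DirectoryOrCreate: creates the directory if missing, with permissions 0755 and the same group and ownership as the kubelet
      # File: a file must already exist at the given path
      # If type is omitted, no check is performed: a missing directory causes no error when the YAML is applied, so the check is effectively optional
```

Example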
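Before the full example, a sketch of how the FileOrCreate caveat in the comments above can be worked around: mount the parent directory with DirectoryOrCreate as well, so it exists before the file is created. This follows the pattern shown in the Kubernetes hostPath documentation; the Pod name hostpath-file-demo and the /var/local/aaa paths are illustrative assumptions.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: hostpath-file-demo       # hypothetical name for illustration
  namespace: study
spec:
  containers:
    - name: busybox
      image: busybox:1.30
      command: ["/bin/sh", "-c", "sleep 3600"]
      volumeMounts:
        - name: mydir
          mountPath: /var/local/aaa
        - name: myfile
          mountPath: /var/local/aaa/1.txt
  volumes:
    - name: mydir
      hostPath:
        path: /var/local/aaa
        type: DirectoryOrCreate  # makes sure the parent directory exists on the node
    - name: myfile
      hostPath:
        path: /var/local/aaa/1.txt
        type: FileOrCreate       # creates the file, but would not create its parent directory
```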

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: volume-hostpath
  namespace: study
spec:
  containers:
    - name: nginx
      image: nginx:1.17.1
      imagePullPolicy: IfNotPresent
      ports:
        - containerPort: 80
      volumeMounts: # mount logs-volume into the nginx container at /var/log/nginx
        - name: logs-volume
          mountPath: /var/log/nginx
    - name: busybox
      image: busybox:1.30
      imagePullPolicy: IfNotPresent
      command: ["/bin/sh","-c","tail -f /logs/access.log"] # startup command: keep reading the file
      volumeMounts: # mount logs-volume into the busybox container at /logs
        - name: logs-volume
          mountPath: /logs
  volumes: # declare a volume named logs-volume of type hostPath
    - name: logs-volume
      hostPath:
        path: /root/logs
        type: DirectoryOrCreate # use the directory if it exists, otherwise create it first
  nodeName: master # pin the Pod to master so the hostPath directory is easy to inspect during testing
```

```shell
[root@master k8s]# kubectl apply -f emptydir.yaml
pod/volume-hostpath created
[root@master k8s]# kubectl get pod -n study -o wide
NAME              READY   STATUS    RESTARTS   AGE   IP              NODE     NOMINATED NODE   READINESS GATES
volume-hostpath   2/2     Running   0          58s   10.244.219.71   master   <none>           <none>
[root@master k8s]# curl 10.244.219.71
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
[root@master k8s]# cat /root/logs/access.log
192.168.100.53 - - [20/Feb/2023:06:23:56 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.61.1" "-"
```

subPath

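Mounting a volume at /etc/nginx hides everything the image ships in that directory. If only nginx.conf should be replaced, use subPath: set mountPath to /etc/nginx/nginx.conf and subPath to the name of the file inside the volume, and Kubernetes then overrides only /etc/nginx/nginx.conf.

In other words, mountPath tells Kubernetes where in the container the volume is mounted, and subPath tells it to mount only a sub-path (a file or subdirectory) of the volume there instead of the whole volume.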
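The nginx.conf case described above might look like the following sketch. It assumes a hypothetical ConfigMap named nginx-conf holding a minimal nginx.conf and a hypothetical Pod named nginx-subpath. Only /etc/nginx/nginx.conf is replaced; the rest of /etc/nginx (mime.types, conf.d and so on) keeps the files from the image.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-conf               # hypothetical ConfigMap holding a custom nginx.conf
  namespace: study
data:
  nginx.conf: |
    events {}
    http {
      server {
        listen 80;
        location / {
          root /usr/share/nginx/html;
        }
      }
    }
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-subpath            # hypothetical name for illustration
  namespace: study
spec:
  containers:
    - name: nginx
      image: nginx:1.17.1
      volumeMounts:
        - name: conf
          mountPath: /etc/nginx/nginx.conf  # replace only this file in the container
          subPath: nginx.conf               # the entry inside the volume to mount there
  volumes:
    - name: conf
      configMap:
        name: nginx-conf
```

One trade-off: a file mounted through subPath is not updated when the ConfigMap changes, so the Pod has to be recreated to pick up a new version.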

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-lamp-site
spec:
  containers:
    - name: mysql
      image: mysql
      env:
        - name: MYSQL_ROOT_PASSWORD
          value: "rootpasswd"
      volumeMounts:
        - mountPath: /var/lib/mysql
          name: site-data
          subPath: mysql # only the mysql/ subdirectory of site-data is mounted here
    - name: php
      image: php:7.0-apache
      volumeMounts:
        - mountPath: /var/www/html
          name: site-data
          subPath: html # only the html/ subdirectory of site-data is mounted here
  volumes:
    - name: site-data
      emptyDir: {}
```

NFS

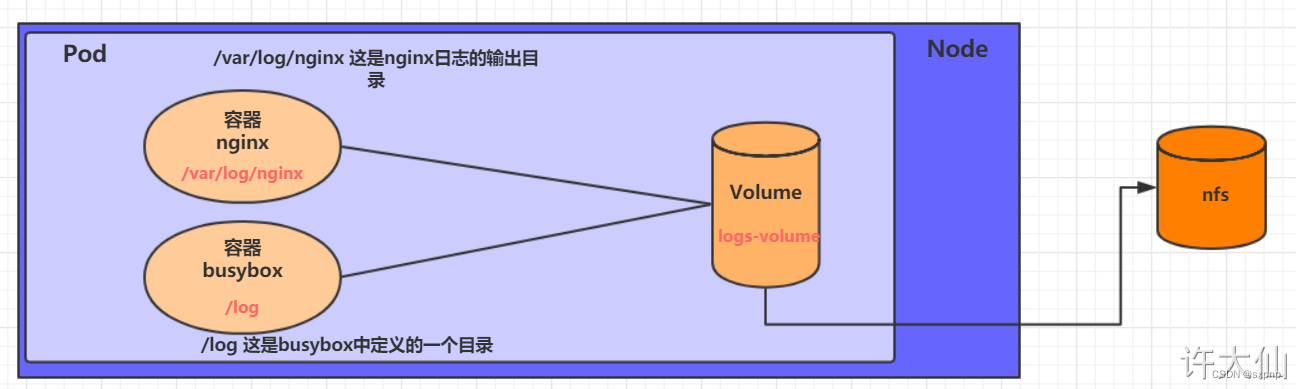

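hostPath persists data but is not highly available: if the node fails and the Pod is moved to another node, the data is not there. NFS addresses this by moving the storage off the node: every node can mount the same share, so a rescheduled Pod still sees its data (the NFS server itself remains a single point of failure unless it is made redundant).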

Setting up NFS on the master

First, prepare an NFS server. To keep things simple, the master node is used as the NFS server here.

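```shell
yum install -y nfs-utils rpcbind
mkdir -pv /root/data/k8s-nfs
vim /etc/exports
# Add this line to /etc/exports to export the share read-write to every host in 192.168.100.0/24
# rw: read-write; no_root_squash: root on a client keeps root privileges on the share (it is not mapped to an anonymous user)
/root/data/k8s-nfs 192.168.100.0/24(rw,no_root_squash)
systemctl start rpcbind
systemctl enable rpcbind
systemctl start nfs-server
systemctl enable nfs-server
```

Install the NFS utilities on every node as well, so that the nodes are able to mount NFS shares.

```shell
# install nfs-utils on each node; the service does not need to be started
yum -y install nfs-utils
# list the exports offered by the NFS server
showmount -e 192.168.100.53
# optional manual test mount; a mount made this way does not survive a reboot
mount -t nfs 192.168.100.53:/root/data/k8s-nfs /mnt
```

Example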

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: volume-nfs
  namespace: study
spec:
  containers:
    - name: nginx
      image: nginx:1.17.1
      imagePullPolicy: IfNotPresent
      ports:
        - containerPort: 80
      volumeMounts: # mount logs-volume into the nginx container at /var/log/nginx
        - name: logs-volume
          mountPath: /var/log/nginx
    - name: busybox
      image: busybox:1.30
      imagePullPolicy: IfNotPresent
      command: ["/bin/sh","-c","tail -f /logs/access.log"] # startup command: keep reading the file
      volumeMounts: # mount logs-volume into the busybox container at /logs
        - name: logs-volume
          mountPath: /logs
  volumes: # declare the volume
    - name: logs-volume
      nfs:
        server: 192.168.100.53 # NFS server address
        path: /root/data/k8s-nfs # exported directory on the NFS server
```

```shell
[root@master k8s]# kubectl apply -f emptydir.yaml
pod/volume-hostpath created
[root@master k8s]# kubectl get pod -n study -o wide
NAME              READY   STATUS    RESTARTS   AGE   IP              NODE    NOMINATED NODE   READINESS GATES
volume-hostpath   2/2     Running   0          64s   10.244.104.53   node2   <none>           <none>
[root@master k8s]# curl 10.244.104.53
[root@master k8s]# cat /root/data/k8s-nfs/access.log
192.168.100.53 - - [20/Feb/2023:07:15:55 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.61.1" "-"
192.168.100.53 - - [20/Feb/2023:07:49:20 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.61.1" "-"
```

Advanced storage (PV and PVC)

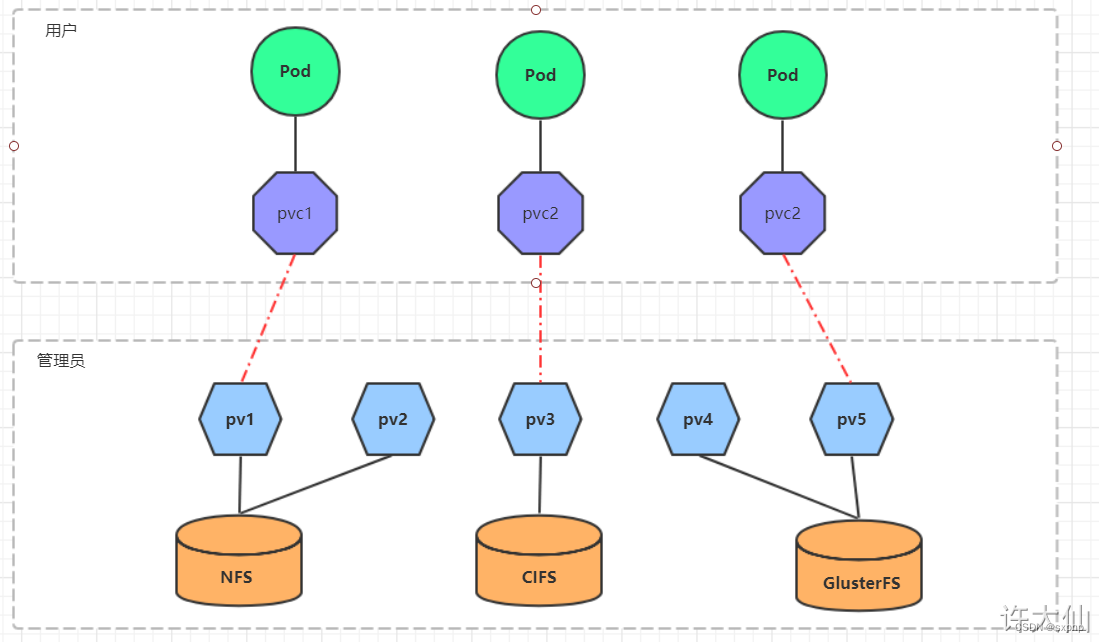

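Overview and architecture

With the approach above, users have to know how to set up NFS and similar systems and how to configure them in YAML.

Kubernetes supports many storage systems, and expecting users to master all of them is unrealistic. To hide the details of the underlying storage and make it easier to use, Kubernetes introduces two resource objects: PV (PersistentVolume) and PVC (PersistentVolumeClaim). This is similar to resource pooling: PV and PVC are abstractions over storage resources.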

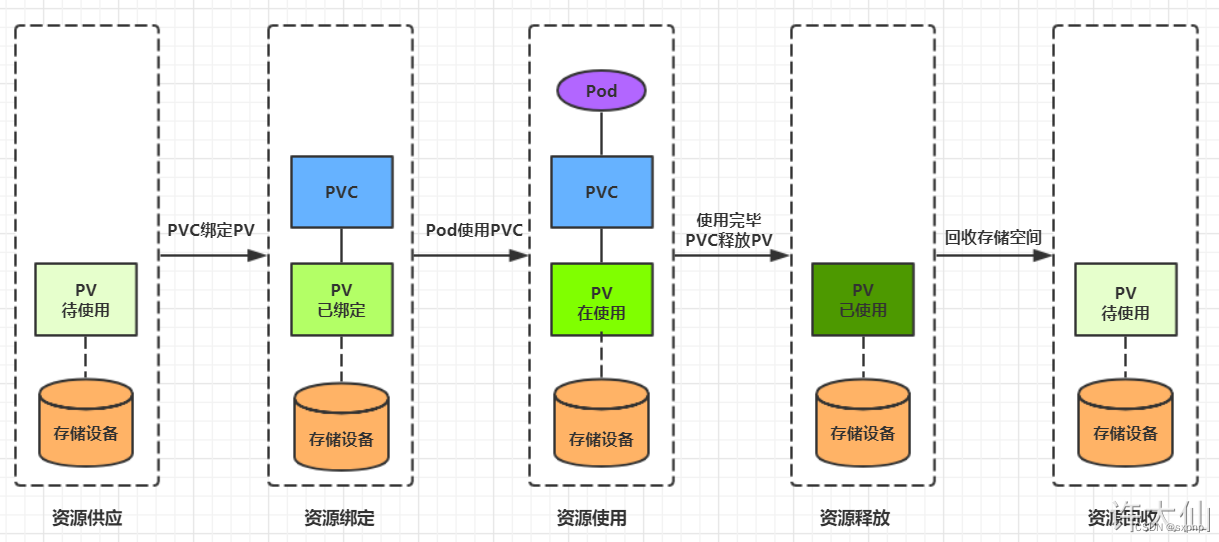

Lifecycle

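A PVC and a PV map one to one, and their interaction follows this lifecycle:

- Provisioning: the administrator manually creates the underlying storage and the PV.

- Binding: the user creates a PVC stating the required capacity, access modes, and storage class. Kubernetes looks for a PV that satisfies the request and binds the two; from then on the PV is used only by that PVC. If no PV matches, the PVC stays Pending until a suitable PV appears.

- Using: the user consumes the PVC in a Pod just like a volume: the Pod's volume definition references the PVC and mounts it at a path inside the container.

- Releasing: when the user deletes the PVC, the PV becomes Released. It cannot be bound to another PVC right away, because data from the previous claim may still be on the storage.

- Reclaiming: what happens to a Released PV is governed by its reclaim policy (Retain, Delete, or the deprecated Recycle).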

PV: concepts and usage

PVs are usually created by Kubernetes administrators and then offered to users, who claim them by creating PVCs.

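```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv2
  # no namespace: a PV is cluster-scoped and can be claimed from any namespace
spec:
  nfs: # storage type, matching the actual underlying storage
    path: # NFS export path
    server: # NFS server address
  capacity: # capacity; currently only storage size can be set
    storage: 2Gi
  accessModes: # access modes
    - ReadWriteOnce
    # ReadOnlyMany
    # ReadWriteMany
  storageClassName: # storage class
  persistentVolumeReclaimPolicy: # reclaim policy
```

- Storage type: the kind of underlying storage. Kubernetes supports many storage types, and each one is configured differently.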

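- Capacity (capacity): currently only storage size can be set (for example storage: 1Gi); metrics such as IOPS and throughput may be added in the future.

- Access modes (accessModes): how the volume may be mounted:
  - ReadWriteOnce (RWO): read-write by a single node;
  - ReadOnlyMany (ROX): read-only by many nodes;
  - ReadWriteMany (RWX): read-write by many nodes.
  Which modes are available depends on the storage backend.

- Reclaim policy (persistentVolumeReclaimPolicy): what happens to the PV after its PVC is deleted:
  - Retain: keep the data; an administrator cleans up and reuses the volume manually;
  - Recycle: wipe the data on the volume so it can be reused (deprecated);
  - Delete: delete the backing storage asset, which is typical for dynamically provisioned volumes.
  Which policies are available depends on the storage backend.

- Storage class (storageClassName): a PV can specify a storage class with storageClassName. A PV with a class can only bind to PVCs that request that class; a PV without a class can only bind to PVCs that request no class.

- Status (status): during its lifecycle a PV is in one of four phases:
  - Available: not yet bound to any PVC;
  - Bound: bound to a PVC;
  - Released: its PVC has been deleted, but the resource has not been reclaimed yet;
  - Failed: automatic reclamation failed.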
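A sketch of a PV that also fills in storageClassName and mountOptions. The name pv4, the class nfs-manual, and the export /root/data/pv4 are hypothetical (the export would have to be added to /etc/exports first), and nfsvers=4.1 is an assumption about what the NFS server supports.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv4                      # hypothetical PV name
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs-manual   # hypothetical class; only PVCs requesting nfs-manual can bind
  mountOptions:
    - nfsvers=4.1                # assumed NFS version; passed to the mount on the node
  nfs:
    server: 192.168.100.53
    path: /root/data/pv4         # hypothetical export
```

A PVC that should bind to this PV must request storageClassName: nfs-manual as well.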

```shell
mkdir -pv /root/data/{pv1,pv2,pv3}
chmod 777 -R /root/data
# edit the NFS exports file
vim /etc/exports
# add these lines (the network must cover the nodes that mount the shares: 192.168.100.0/24 in this setup)
/root/data/pv1  192.168.100.0/24(rw,no_root_squash)
/root/data/pv2  192.168.100.0/24(rw,no_root_squash)
/root/data/pv3  192.168.100.0/24(rw,no_root_squash)
systemctl restart nfs-server
```

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv1
spec:
  nfs: # storage type, matching the actual underlying storage
    path: /root/data/pv1
    server: 192.168.100.53
  capacity: # capacity; currently only storage size can be set
    storage: 1Gi
  accessModes: # access modes
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain # reclaim policy
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv2
spec:
  nfs: # storage type, matching the actual underlying storage
    path: /root/data/pv2
    server: 192.168.100.53
  capacity: # capacity; currently only storage size can be set
    storage: 2Gi
  accessModes: # access modes
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain # reclaim policy
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv3
spec:
  nfs: # storage type, matching the actual underlying storage
    path: /root/data/pv3
    server: 192.168.100.53
  capacity: # capacity; currently only storage size can be set
    storage: 3Gi
  accessModes: # access modes
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain # reclaim policy
```

```shell
[root@master k8s]# kubectl apply -f pv.yaml
persistentvolume/pv1 created
persistentvolume/pv2 created
persistentvolume/pv3 created
[root@master k8s]# kubectl get pv
NAME   CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
pv1    1Gi        RWX            Retain           Available                                   27s
pv2    2Gi        RWX            Retain           Available                                   27s
pv3    3Gi        RWX            Retain           Available                                   27s
```

Once a PV is in use, the CLAIM column shows the name of the PVC bound to it.

PVC: concepts and usage

A PVC is a request for PV resources: it declares the required storage size, access modes, and storage class.

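```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc
  namespace: dev
spec:
  accessModes: # access modes
    - ReadWriteMany
  selector: # select PVs by label
  storageClassName: # storage class
  resources: # requested storage
    requests:
      storage: 5Gi
```

A PVC cannot bind to a PV, and stays Pending, in any of these cases:

- ① The PVC requests more space than the PV provides.

- ② The PVC's storageClassName differs from the PV's storageClassName.

- ③ The PVC's accessModes do not match the PV's accessModes.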
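The selector field in the template above pins a claim to PVs by label, which adds one more reason a PVC may stay Pending: no PV carries the requested labels. A sketch with a hypothetical PV pv-fast labeled disk: fast and a hypothetical PVC pvc-fast that selects it; the export /root/data/pv-fast is an assumption and would need to be added to /etc/exports first. storageClassName: "" keeps a default StorageClass, if the cluster has one, from being assigned to the claim, so it can bind to this class-less PV.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-fast                  # hypothetical PV
  labels:
    disk: fast                   # label the PVC selector matches
spec:
  capacity:
    storage: 2Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 192.168.100.53
    path: /root/data/pv-fast     # hypothetical export
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-fast                 # hypothetical PVC
  namespace: study
spec:
  storageClassName: ""           # request "no class" explicitly
  accessModes:
    - ReadWriteMany
  selector:
    matchLabels:
      disk: fast                 # only PVs carrying this label are candidates
  resources:
    requests:
      storage: 1Gi
```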

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc1
  namespace: study
spec:
  accessModes: # access modes
    - ReadWriteMany # same as the PV
  resources: # requested storage
    requests:
      storage: 1Gi # binds to pv1
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc2
  namespace: study
spec:
  accessModes: # access modes
    - ReadWriteMany
  resources: # requested storage
    requests:
      storage: 1Gi # pv1 is already taken and no other 1Gi PV exists, so the smallest PV that fits, pv2 (2Gi), is bound
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc3
  namespace: study
spec:
  accessModes: # access modes
    - ReadWriteMany
  resources: # requested storage
    requests:
      storage: 5Gi # no PV is equal to or larger than 5Gi, so the claim cannot bind
```

```shell
[root@master k8s]# kubectl apply -f pvc.yaml
persistentvolumeclaim/pvc1 created
persistentvolumeclaim/pvc2 created
persistentvolumeclaim/pvc3 created
[root@master k8s]# kubectl get pvc -n study
NAME   STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
pvc1   Bound     pv1      1Gi        RWX                           54s
pvc2   Bound     pv2      2Gi        RWX                           54s
pvc3   Pending                                                     54s
# Bound: the claim is bound to a PV
# pvc3 found no PV with equal or larger capacity, so it stays Pending; creating a suitable new PV would let it bind
[root@master k8s]# kubectl get pv
NAME   CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM        STORAGECLASS   REASON   AGE
pv1    1Gi        RWX            Retain           Bound       study/pvc1                           23m
pv2    2Gi        RWX            Retain           Bound       study/pvc2                           23m
pv3    3Gi        RWX            Retain           Available                                        23m
```

Creating Pods that use a PVC

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod1
  namespace: study
spec:
  containers:
    - name: busybox
      image: busybox:1.30
      command: ["/bin/sh","-c","while true;do echo pod1 >> /root/out.txt; sleep 10; done;"]
      volumeMounts:
        - name: volume
          mountPath: /root/
  volumes:
    - name: volume
      persistentVolumeClaim: # volume source that references a PVC (kind: PersistentVolumeClaim)
        claimName: pvc1
        readOnly: false # false mounts it read-write; true would mount it read-only
---
apiVersion: v1
kind: Pod
metadata:
  name: pod2
  namespace: study
spec:
  containers:
    - name: busybox
      image: busybox:1.30
      command: ["/bin/sh","-c","while true;do echo pod2 >> /root/out.txt; sleep 10; done;"]
      volumeMounts:
        - name: volume
          mountPath: /root/
  volumes:
    - name: volume
      persistentVolumeClaim:
        claimName: pvc2
        readOnly: false
```

```shell
[root@master k8s]# kubectl apply -f pod-pvc.yaml
pod/pod1 created
pod/pod2 created
[root@master k8s]# ls /root/data/pv1/out.txt
/root/data/pv1/out.txt
```

Deletion

```shell
[root@master k8s]# kubectl delete -f pod-pvc.yaml
pod "pod1" deleted
pod "pod2" deleted
[root@master k8s]# kubectl get pvc -n study
NAME   STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
pvc1   Bound     pv1      1Gi        RWX                           123m
pvc2   Bound     pv2      2Gi        RWX                           123m
pvc3   Pending                                                     123m
[root@master k8s]# kubectl get pv
NAME   CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM        STORAGECLASS   REASON   AGE
pv1    1Gi        RWX            Retain           Bound       study/pvc1                           126m
pv2    2Gi        RWX            Retain           Bound       study/pvc2                           126m
pv3    3Gi        RWX            Retain           Available                                        126m
[root@master k8s]# kubectl delete -f pvc.yaml
persistentvolumeclaim "pvc1" deleted
persistentvolumeclaim "pvc2" deleted
persistentvolumeclaim "pvc3" deleted
[root@master k8s]# kubectl get pv -n study
NAME   CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM        STORAGECLASS   REASON   AGE
pv1    1Gi        RWX            Retain           Released    study/pvc1                           127m
pv2    2Gi        RWX            Retain           Released    study/pvc2                           127m
pv3    3Gi        RWX            Retain           Available                                        127m
# persistentVolumeReclaimPolicy is Retain, so the PV must be released manually to move it from Released back to Available
```

Use kubectl edit to delete the PVC binding information (the claimRef field) from the PV, and the PV becomes Available again.

```shell
[root@master k8s]# kubectl get pv -n study
NAME   CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM        STORAGECLASS   REASON   AGE
pv1    1Gi        RWX            Retain           Released    study/pvc1                           129m
pv2    2Gi        RWX            Retain           Released    study/pvc2                           129m
pv3    3Gi        RWX            Retain           Available                                        129m
[root@master k8s]# kubectl edit pv pv2    # repeat the same for pv1
```

In the editor, delete the following block:

```yaml
  claimRef:
    apiVersion: v1
    kind: PersistentVolumeClaim
    name: pvc2
    namespace: study
    resourceVersion: "849619"
    uid: fac30e1d-90a5-4e8b-9092-e6b83fd55d62
```

```shell
persistentvolume/pv2 edited
[root@master k8s]# kubectl get pv -n study
NAME   CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
pv1    1Gi        RWX            Retain           Available                                   134m
pv2    2Gi        RWX            Retain           Available                                   134m
pv3    3Gi        RWX            Retain           Available                                   134m
```

Problem

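Creating a PV does not check whether the NFS path is reachable. Even if the NFS server has no such export and cannot be reached, the PV is created without any error, and the PVC and the Pod that uses the PVC are also created without error.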

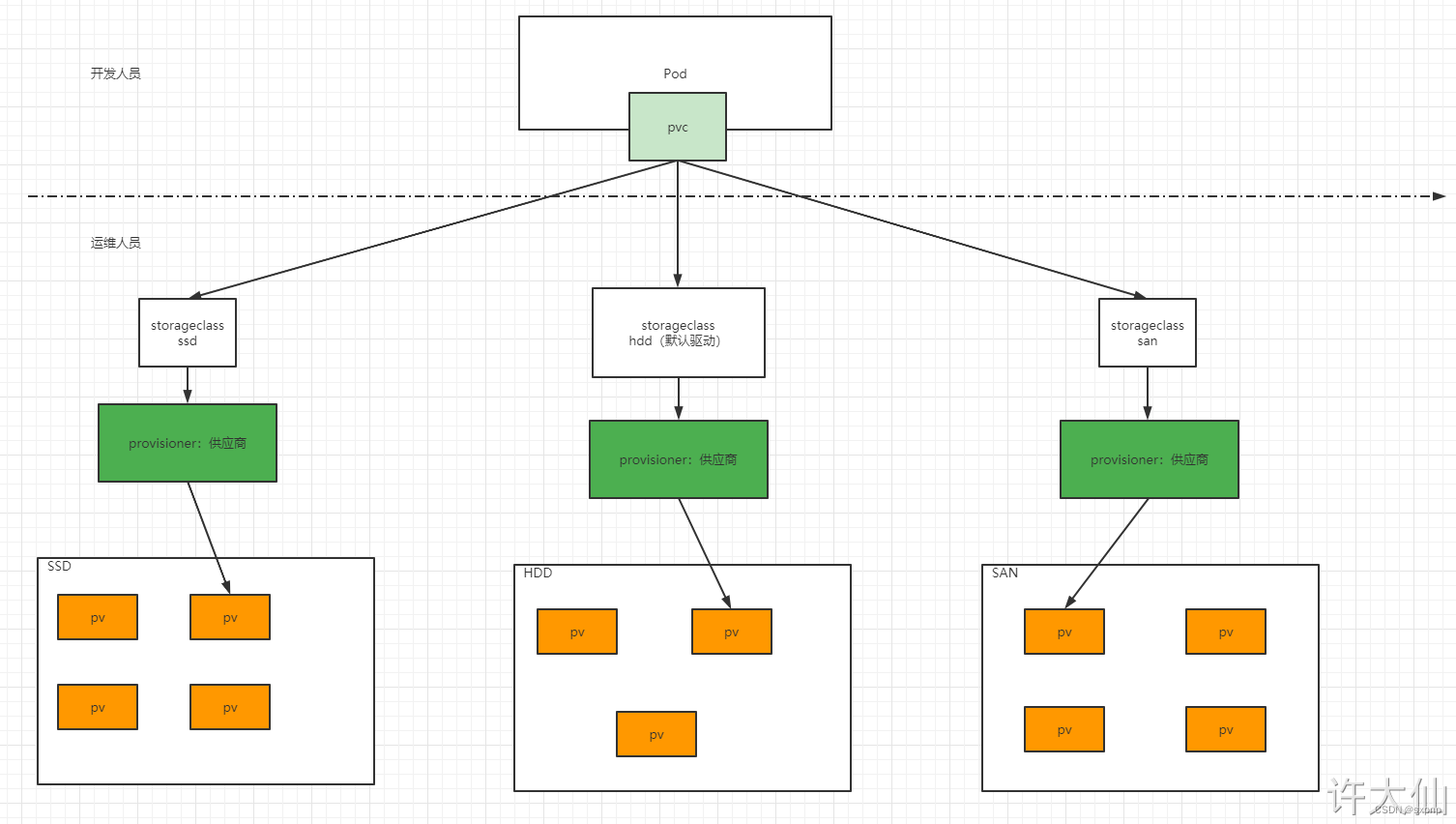

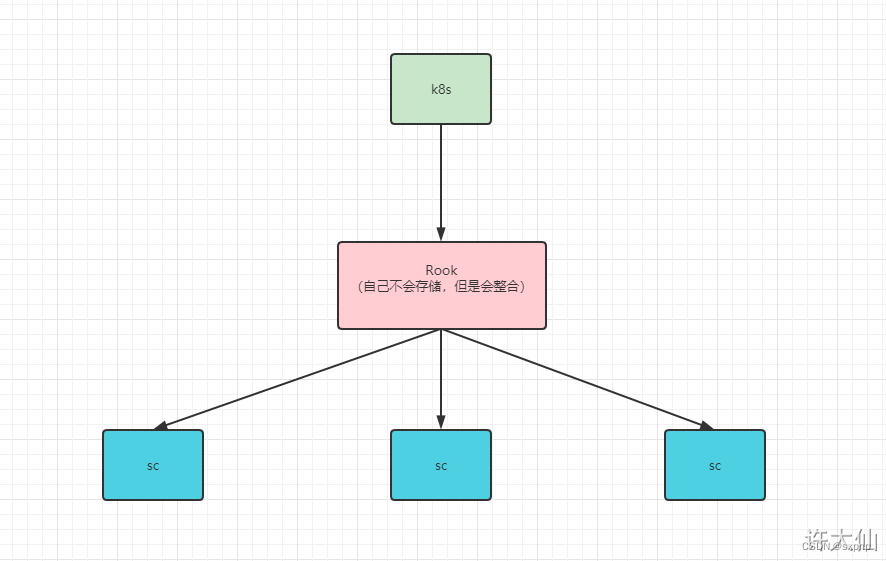

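Dynamic provisioning

- Static provisioning: the cluster administrator creates a number of PVs. These PV objects carry the details of the real storage, are available (visible) to cluster users, and exist in the Kubernetes API ready to be consumed.

- Dynamic provisioning: the cluster automatically creates a matching PV for each PVC. The flow is:

- ① The cluster administrator creates a StorageClass in advance.

- ② The user creates a PersistentVolumeClaim (PVC) that uses the StorageClass.

- ③ The PVC tells the system that it needs a PersistentVolume (PV).

- ④ The system reads the StorageClass information.

- ⑤ Based on the StorageClass, the system automatically creates the PV the PVC needs in the background.

- ⑥ The user creates a Pod that uses the PVC.

- ⑦ The application in the Pod persists its data through the PVC.

- ⑧ The PVC relies on the PV for the actual persistence.

The NFS provisioner used below is nfs-subdir-external-provisioner: https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner

Setting up NFS dynamic provisioning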

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # name of the provisioner
# or choose another name; it must match the PROVISIONER_NAME env of the Deployment
parameters:
  archiveOnDelete: "false" # whether to archive (keep a copy of) the PV's data when the PV is deleted
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: ccr.ccs.tencentyun.com/gcr-containers/nfs-subdir-external-provisioner:v4.0.2
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 192.168.65.100 # NFS server address (adjust to your environment)
            - name: NFS_PATH
              value: /nfs/data # exported directory on the NFS server
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.65.100
            path: /nfs/data
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
```

Testing dynamic provisioning

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-pvc
  namespace: default
  labels:
    app: nginx-pvc
spec:
  storageClassName: nfs-client # note: the StorageClass is referenced here
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  namespace: default
  labels:
    app: nginx
spec:
  containers:
    - name: nginx
      image: nginx:1.20.2
      resources:
        limits:
          cpu: 200m
          memory: 500Mi
        requests:
          cpu: 100m
          memory: 200Mi
      ports:
        - containerPort: 80
          name: http
      volumeMounts:
        - name: localtime
          mountPath: /etc/localtime
        - name: html
          mountPath: /usr/share/nginx/html/
  volumes:
    - name: localtime
      hostPath:
        path: /usr/share/zoneinfo/Asia/Shanghai
    - name: html
      persistentVolumeClaim:
        claimName: nginx-pvc
        readOnly: false
  restartPolicy: Always
```

Setting the StorageClass (SC) as the default

```shell
kubectl patch storageclass <your-class-name> -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
kubectl patch storageclass nfs-client -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
```
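Instead of patching the class afterwards, the same annotation can be set when the StorageClass is first declared. A sketch reusing the nfs-client class from above; reclaimPolicy: Delete is spelled out here only for clarity (it is the default for dynamically created PVs), and normally only one StorageClass in a cluster should carry the default annotation.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"   # mark as the cluster default
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner  # must match PROVISIONER_NAME
reclaimPolicy: Delete            # reclaim policy given to the PVs this class creates
parameters:
  archiveOnDelete: "false"
```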

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-pvc
  namespace: default
  labels:
    app: nginx-pvc
spec:
  # storageClassName: nfs-client  (omitted, so the default StorageClass is used)
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  namespace: default
  labels:
    app: nginx
spec:
  containers:
    - name: nginx
      image: nginx:1.20.2
      resources:
        limits:
          cpu: 200m
          memory: 500Mi
        requests:
          cpu: 100m
          memory: 200Mi
      ports:
        - containerPort: 80
          name: http
      volumeMounts:
        - name: localtime
          mountPath: /etc/localtime
        - name: html
          mountPath: /usr/share/nginx/html/
  volumes:
    - name: localtime
      hostPath:
        path: /usr/share/zoneinfo/Asia/Shanghai
    - name: html
      persistentVolumeClaim:
        claimName: nginx-pvc
        readOnly: false
  restartPolicy: Always
```

Outlook

Special volumes

ConfigMap

ConfigMap is a special kind of volume whose main purpose is to store configuration data.
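When a ConfigMap is mounted as a volume, each key under data becomes a file. A sketch with a hypothetical ConfigMap named configmap-multiline that uses a YAML literal block (|) so that line breaks survive; without a block indicator, a multi-line value is folded into a single line. The app.properties key is purely illustrative.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: configmap-multiline      # hypothetical name for illustration
  namespace: study
data:
  # "|" keeps the line breaks, so the mounted file "info" has two lines
  info: |
    username:admin
    password:123456
  # each additional key becomes another file in the mounted directory
  app.properties: |
    log.level=info
```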

Create a ConfigMap

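```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: configmap-test
  namespace: study
data: # the data to store
  info: # becomes a file named info; its content is given below
    username:admin
    password:123456
# Without a block indicator such as "|", YAML folds these two lines into one, "username:admin password:123456", as the cat output further down shows
# If the ConfigMap is updated, the files in a mounted ConfigMap volume are updated automatically
# To update it, re-run apply after editing the file, or use kubectl edit
# Note: the change takes some time to reach the container
```

```shell
[root@master k8s]# kubectl apply -f configmap.yaml
configmap/configmap-test created
[root@master k8s]# kubectl get cm -n study
NAME               DATA   AGE
configmap-test     1      22s
kube-root-ca.crt   1      4d21h
```

Create a Pod that mounts the ConfigMap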

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-configmap
  namespace: study
spec:
  containers:
    - name: nginx
      image: nginx:1.17.1
      volumeMounts:
        - mountPath: /configmap/config
          name: config
  volumes:
    - name: config
      configMap:
        name: configmap-test # the ConfigMap to mount
```

```shell
[root@master k8s]# kubectl apply -f configmap.yaml
pod/pod-configmap created
[root@master k8s]# kubectl get pod -n study
NAME            READY   STATUS    RESTARTS   AGE
pod-configmap   1/1     Running   0          11s
[root@master k8s]# kubectl exec -it -n study pod-configmap -c nginx -- /bin/sh
# ls /configmap/config
info
# cat /configmap/config/info
username:admin password:123456
```

Secret

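Kubernetes also has an object very similar to ConfigMap, called Secret, which is mainly used to store sensitive information such as passwords, keys, and certificates.

A Secret is a resource for storing sensitive data. Values in its data field must be base64-encoded; the data is stored in the cluster's etcd and referenced by Pods when they are created.

When you query a Secret, its values are shown base64-encoded; inside the Pod's container they appear decoded. Note that base64 is an encoding, not encryption: anyone who can read the Secret can decode it.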

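kubectl create secret has three subcommands (generic, docker-registry, and tls). The Secret types built into Kubernetes are:

| Built-in type | Usage |
| --- | --- |
| Opaque | arbitrary user-defined data |
| kubernetes.io/service-account-token | ServiceAccount token |
| kubernetes.io/dockercfg | serialized ~/.dockercfg file |
| kubernetes.io/dockerconfigjson | serialized ~/.docker/config.json file |
| kubernetes.io/basic-auth | credentials for basic authentication |
| kubernetes.io/ssh-auth | credentials for SSH authentication |
| kubernetes.io/tls | data for a TLS client or server |
| bootstrap.kubernetes.io/token | bootstrap token data |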
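As an illustration of one of the typed Secrets in the table, here is a sketch of a kubernetes.io/basic-auth Secret; the name basic-auth-demo is hypothetical. For this type, the API server checks that at least one of the keys username or password is present.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: basic-auth-demo          # hypothetical name for illustration
  namespace: study
type: kubernetes.io/basic-auth
stringData:                      # plain text here; stored base64-encoded under data
  username: admin                # basic-auth Secrets need username and/or password
  password: "123456"             # quoted so YAML keeps it as a string
```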

YAML walkthrough

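```shell
# prepare the base64-encoded values (-n stops echo from adding a trailing newline, which would be encoded too)
echo -n "admin" | base64
echo -n "123456" | base64
```

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: secret
  namespace: study
type: Opaque
data: # sensitive values, base64-encoded
  # when the Secret is mounted as a volume, username and password each become a file name
  username: YWRtaW4=
  password: MTIzNDU2
  # mysql-root-password: "MTIzNDU2"
stringData: # to skip manual encoding, put plain values under stringData and let Kubernetes encode them
  username: admin
  password: "123456"
# numbers cannot be written bare, or you get "cannot convert int64 to string"; quote them instead
```

Note: if the same key appears in both data and stringData, the value from stringData takes precedence.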

```shell
[root@master k8s]# kubectl apply -f secret.yaml
secret/secret created
[root@master k8s]# kubectl describe secrets -n study
Name:         secret
Namespace:    study
Labels:       <none>
Annotations:  <none>

Type:  Opaque

Data
====
password:  6 bytes
username:  5 bytes
```

Mounting as a volume

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-secret
  namespace: study
spec:
  containers:
    - name: nginx
      image: nginx:1.17.1
      volumeMounts:
        - mountPath: /secret/config
          name: secret-config
  volumes:
    - name: secret-config
      secret:
        secretName: secret
```

```shell
kubectl exec -it -n study pod-secret -c nginx -- /bin/sh
# cat /secret/config/password
123456
```

Decoding a Secret

```shell
# read one key of the Secret and decode it
kubectl get secret secret -n study -o jsonpath='{.data.password}' | base64 --decode
```

Injecting into environment variables

```shell
[root@master cks]# echo -n "123456" | base64
MTIzNDU2
```

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysql
type: Opaque
data:
  mysql-root-password: "MTIzNDU2"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql
spec:
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - name: mysql-db
          image: mysql:5.7.30
          env:
            - name: MYSQL_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mysql
                  key: mysql-root-password
```

This injects the value of the key mysql-root-password from the Secret named mysql into the container's MYSQL_ROOT_PASSWORD environment variable.
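When every key of a Secret should become an environment variable, envFrom with secretRef avoids listing the keys one by one. A sketch with a hypothetical Secret mysql-env whose key is named exactly like the variable the mysql image expects, and a hypothetical Deployment mysql-envfrom.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysql-env                # hypothetical Secret
type: Opaque
stringData:
  MYSQL_ROOT_PASSWORD: "123456"  # key name doubles as the variable name
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-envfrom            # hypothetical name for illustration
spec:
  selector:
    matchLabels:
      app: mysql-envfrom
  template:
    metadata:
      labels:
        app: mysql-envfrom
    spec:
      containers:
        - name: mysql-db
          image: mysql:5.7.30
          envFrom:
            - secretRef:
                name: mysql-env  # every key in the Secret becomes an environment variable
```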

```shell
[root@master cks]# kubectl exec -it mysql-6c8b6c4d74-vbflz -- /bin/sh
# mysql -uroot -p123456
mysql: [Warning] Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor.  Commands end with ; or \g.
Your MySQL connection id is 4
Server version: 5.7.30 MySQL Community Server (GPL)

Copyright (c) 2000, 2020, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>
```

Image pull secrets

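imagePullSecrets: the credentials a Pod uses to pull images from a private registry. They are passed to the kubelet, which can then pull images from the password-protected registry.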

```shell
kubectl create secret docker-registry docker-harbor-registrykey --docker-server=192.168.18.119:85 \
          --docker-username=admin --docker-password=Harbor12345 \
          --docker-email=1900919313@qq.com
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: redis
spec:
  containers:
    - name: redis
      image: 192.168.18.119:85/yuncloud/redis # image in the private Harbor registry
  imagePullSecrets:
    - name: docker-harbor-registrykey
```
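Instead of adding imagePullSecrets to every Pod, the Secret can be attached to a ServiceAccount; Pods that run under that ServiceAccount then get it automatically. A sketch with a hypothetical ServiceAccount harbor-puller and Pod redis-sa, both in the same namespace as the docker-harbor-registrykey Secret created above.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: harbor-puller            # hypothetical ServiceAccount
imagePullSecrets:
  - name: docker-harbor-registrykey
---
apiVersion: v1
kind: Pod
metadata:
  name: redis-sa                 # hypothetical name for illustration
spec:
  serviceAccountName: harbor-puller   # the Pod inherits the ServiceAccount's imagePullSecrets
  containers:
    - name: redis
      image: 192.168.18.119:85/yuncloud/redis
```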
