Sampling the Depth Texture with Screen Coordinates in Unity URP


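Foreword

In the previous article, we got the depth texture working under URP.

However, because we sampled it with the model's UVs, the result was wrong: mesh UVs have no relationship to where a fragment lands on screen.

So in this article, we sample the depth texture with screen coordinates instead.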

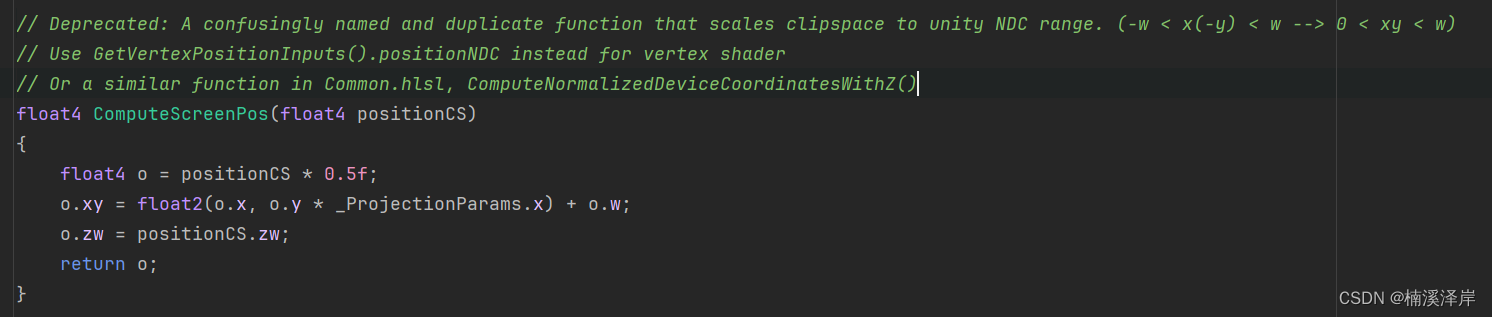

I. Unity uses the ComputeScreenPos function to obtain screen coordinates

1. What the function does

Start from the perspective divide of the homogeneous clip-space position:

- $x_{ndc} = \frac{x}{w}$

Then map NDC to screen pixels and rearrange, which gives the value the function returns:

- $x_{screen} = (x_{ndc} * 0.5 + 0.5) * width$

- $x_{screen} = (\frac{x}{w} * 0.5 + 0.5) * width$

- $\frac{x_{screen}}{width} = \frac{x}{w} * 0.5 + 0.5$

- $\frac{x_{screen}}{width} * w = x * 0.5 + w * 0.5$
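The last line of the derivation maps almost directly to code. Below is a minimal sketch of a function with that behavior. The name `ComputeScreenPosSketch` is hypothetical, and the y flip through `_ProjectionParams.x` is an addition the x-only derivation does not cover. It assumes `Core.hlsl` is included so that `_ProjectionParams` is available. In a real shader you would keep calling the built-in `ComputeScreenPos`.

```hlsl
// Sketch only: mirrors the derivation above, not a replacement for the built-in function.
// Assumes Core.hlsl is included, which provides _ProjectionParams.
float4 ComputeScreenPosSketch(float4 positionCS)
{
    // (x * 0.5, y * 0.5, z * 0.5, w * 0.5)
    float4 o = positionCS * 0.5f;

    // x * 0.5 + w * 0.5, and the same for y.
    // _ProjectionParams.x is 1 or -1 and flips y when the projection is flipped.
    o.xy = float2(o.x, o.y * _ProjectionParams.x) + o.w;

    // z and w pass through unchanged so the fragment shader can still divide by w.
    o.zw = positionCS.zw;
    return o;
}
```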

2. What this result means

The function returns $\frac{x_{screen}}{width} * w$, that is, the normalized screen coordinate still multiplied by $w$. The divide by $w$ is left to the fragment shader, because $\frac{x}{w}$ does not interpolate linearly across a triangle while $x$ and $w$ do.

- When $x_{screen} = 0$: $\frac{x_{screen}}{width} * w = 0$

- When $x_{screen} = width$: $\frac{x_{screen}}{width} * w = w$

This gives the range of the output o, before and after dividing by w:

- $([0,w],[0,w],z,w)$

- $(\frac{[0,w]}{w},\frac{[0,w]}{w},\frac{z}{w},1)$

- $([0,1],[0,1],\frac{z}{w},1)$
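A worked example may make the ranges concrete. The values here are assumptions chosen only for illustration: a clip-space position with $x = 1$ and $w = 2$, on a screen that is 1920 pixels wide.

```latex
\begin{aligned}
x_{ndc}    &= \frac{x}{w} = \frac{1}{2} = 0.5 \\
o_x        &= x \cdot 0.5 + w \cdot 0.5 = 0.5 + 1 = 1.5 \\
uv_x       &= \frac{o_x}{w} = \frac{1.5}{2} = 0.75 \\
x_{screen} &= uv_x \cdot width = 0.75 \cdot 1920 = 1440
\end{aligned}
```

The same pixel position follows from the first formula of the derivation: $(0.5 * 0.5 + 0.5) * 1920 = 1440$.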

II. Using it in the shader (method 1)

1. In the Varyings struct

float4 screenPos : TEXCOORD1;

2. In the vertex shader

o.screenPos = ComputeScreenPos(o.positionCS);

3. In the fragment shader

// Perspective divide: maps xy from [0, w] to [0, 1]
float2 uv = i.screenPos.xy / i.screenPos.w;

// The depth value is stored in the red channel
float rawDepth = SAMPLE_TEXTURE2D(_CameraDepthTexture,sampler_CameraDepthTexture,uv).r;

float depthTex = Linear01Depth(rawDepth,_ZBufferParams);

return depthTex;
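As an alternative to declaring `_CameraDepthTexture` by hand, URP ships a `DeclareDepthTexture.hlsl` include that declares the texture and sampler and exposes `SampleSceneDepth`. The sketch below assumes that include replaces the manual `TEXTURE2D`/`SAMPLER` declarations (keeping both would declare the texture twice). The helper name `SampleLinear01SceneDepth` is hypothetical.

```hlsl
// Assumption: this include replaces the manual
// TEXTURE2D(_CameraDepthTexture); SAMPLER(sampler_CameraDepthTexture); lines.
#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/DeclareDepthTexture.hlsl"

// Hypothetical helper; uv is the [0, 1] screen UV computed in the fragment shader.
float SampleLinear01SceneDepth(float2 uv)
{
    // Raw, non-linear depth as stored in the depth texture
    float rawDepth = SampleSceneDepth(uv);

    // Convert to linear depth in the 0..1 range
    return Linear01Depth(rawDepth, _ZBufferParams);
}
```

In the fragment shader this would be called as `SampleLinear01SceneDepth(i.screenPos.xy / i.screenPos.w)`.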

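III. Using it in the shader (method 2)

- Principle: in the fragment shader, the xy of the SV_POSITION input is no longer a clip-space position but the fragment's pixel coordinate, so $\frac{\text{pixel coordinate}}{\text{screen width and height}}$ gives screen UVs in [0, 1] directly

1. In the fragment shader

float2 uv = i.positionCS.xy / _ScreenParams.xy;

float rawDepth = SAMPLE_TEXTURE2D(_CameraDepthTexture,sampler_CameraDepthTexture,uv).r;

float depthTex = Linear01Depth(rawDepth,_ZBufferParams);

return depthTex;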
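Recent URP releases also provide a helper, `GetNormalizedScreenSpaceUV`, that performs this division. The sketch below assumes your installed URP version includes it through `Core.hlsl`; if it does not, the manual division above is the fallback. It reuses the `Varyings` struct and depth texture declarations from the full shader in this article.

```hlsl
// Assumption: the installed URP version provides GetNormalizedScreenSpaceUV.
// Varyings, _CameraDepthTexture and sampler_CameraDepthTexture come from the full shader.
half4 frag(Varyings i) : SV_TARGET
{
    // Screen UV in [0, 1] derived from the fragment's SV_POSITION value
    float2 uv = GetNormalizedScreenSpaceUV(i.positionCS);

    // Raw depth lives in the red channel
    float rawDepth = SAMPLE_TEXTURE2D(_CameraDepthTexture, sampler_CameraDepthTexture, uv).r;

    // Linear depth in the 0..1 range, shown as a grayscale color
    float depth01 = Linear01Depth(rawDepth, _ZBufferParams);
    return half4(depth01, depth01, depth01, 1);
}
```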

IV. Final result

Shader "MyShader/URP/P4_1"

{

Properties

{

_Color("Color",Color) = (0,0,0,0)

_MainTex("MainTex",2D) = "white"{}

}

SubShader

{

Tags

{

// Tells the engine that this shader is only for the URP render pipeline

"RenderPipeline"="UniversalPipeline"

// Render type

"RenderType"="Transparent"

// Render queue

"Queue"="Transparent"

}

//Blend One One

ZWrite Off

Pass

{

Name "Unlit"

HLSLPROGRAM

#pragma vertex vert

#pragma fragment frag

// Pragmas

#pragma target 2.0

// Includes

#include "Packages/com.unity.render-pipelines.core/ShaderLibrary/Color.hlsl"

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Input.hlsl"

CBUFFER_START(UnityPerMaterial)

half4 _Color;

CBUFFER_END

// Texture declaration: when compiled for GLES 2.0 this is equivalent to sampler2D _MainTex; otherwise to Texture2D _MainTex;

TEXTURE2D(_MainTex);SAMPLER(SamplerState_linear_mirrorU_ClampV); float4 _MainTex_ST;

TEXTURE2D(_CameraDepthTexture);SAMPLER(sampler_CameraDepthTexture);

//struct appdata

// Vertex shader input

struct Attributes

{

float3 positionOS : POSITION;

float2 uv : TEXCOORD0;

};

//struct v2f

// Fragment shader input

struct Varyings

{

float4 positionCS : SV_POSITION;

float2 uv : TEXCOORD0;

float4 screenPos : TEXCOORD1;

};

//v2f vert(Attributes v)

// Vertex shader

Varyings vert(Attributes v)

{

Varyings o = (Varyings)0;

float3 positionWS = TransformObjectToWorld(v.positionOS);

o.positionCS = TransformWorldToHClip(positionWS);

o.uv = TRANSFORM_TEX(v.uv,_MainTex);

o.screenPos = ComputeScreenPos(o.positionCS);

return o;

}

//fixed4 frag(v2f i) : SV_TARGET

// Fragment shader

half4 frag(Varyings i) : SV_TARGET

{

half4 c;

float4 mainTex = SAMPLE_TEXTURE2D(_MainTex,SamplerState_linear_mirrorU_ClampV,i.uv);

//c = _Color * mainTex;

// Depth texture

//float2 uv = i.screenPos.xy / i.screenPos.w;

float2 uv = i.positionCS.xy / _ScreenParams.xy;

// The depth value is stored in the red channel
float rawDepth = SAMPLE_TEXTURE2D(_CameraDepthTexture,sampler_CameraDepthTexture,uv).r;

float depthTex = Linear01Depth(rawDepth,_ZBufferParams);

return depthTex;

}

ENDHLSL

}

}

FallBack "Hidden/Shader Graph/FallbackError"

}
